Manage your Databricks account

Databricks account-level configurations are managed by account admins. This article covers settings that account admins can manage through the account console. The other articles in this section cover additional account admin tasks.

As an account admin, you can also manage your Databricks account using the Account API.
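As a rough illustration of what an Account API call looks like, the sketch below builds (but does not send) an authenticated request that lists the workspaces in an account. It assumes the AWS account host `accounts.cloud.databricks.com` and the `/api/2.0/accounts/{account_id}/workspaces` endpoint; accounts on other clouds use a different host. The function name, the account ID, and the token are placeholders, so check the Account API reference for your cloud before relying on it.

```python
import urllib.request

# Assumption: AWS account host. Azure and GCP accounts use different hosts.
ACCOUNT_HOST = "https://accounts.cloud.databricks.com"


def build_list_workspaces_request(account_id: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists workspaces in an account.

    `account_id` is the ID shown in the account console; `token` is a
    placeholder for whatever bearer credential your account uses.
    """
    url = f"{ACCOUNT_HOST}/api/2.0/accounts/{account_id}/workspaces"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    request = build_list_workspaces_request("<account-id>", "<token>")
    print(request.get_method(), request.full_url)
```

To send the request, pass the returned object to `urllib.request.urlopen`. Building the request separately makes it easy to inspect the URL and headers first.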

Transfer account ownership

The user who originally set up your Databricks account is automatically assigned the account owner role. Account owners have the same permissions as account admins, except that their account admin role cannot be removed. If you try to remove this user's account admin role, you see a permission denied error.

If you need to remove this user's account admin role, contact Databricks support to help you transfer the account owner role to a different user in your account.

Switch between Databricks accounts

If you belong to more than one account, you can switch between accounts in the Databricks UI. To use the account switcher, click your account initial at the top-right corner of the Databricks UI, then use Switch account to select the account you want to navigate to.

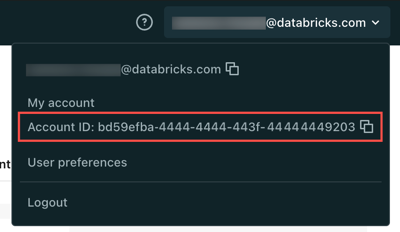

Locate your account ID

To retrieve your account ID, go to the account console and click the down arrow next to your username in the upper right corner. In the drop-down menu, you can view and copy your Account ID.

You must be in the account console to retrieve the account ID. The ID is not displayed inside a workspace.
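Because the account ID is usually copied by hand into scripts or configuration, a quick format check can catch a truncated or mistyped value early. The sketch below assumes that account IDs are UUID-formatted strings (for example `12345678-1234-1234-1234-123456789abc`). That format is an assumption, not something stated above, and `looks_like_account_id` is a hypothetical helper name.

```python
import uuid


def looks_like_account_id(value: str) -> bool:
    """Return True if `value` is a canonical hyphenated UUID string.

    Assumption: Databricks account IDs are UUIDs. This checks the format
    only; it does not confirm that the account exists.
    """
    candidate = value.strip()
    try:
        parsed = uuid.UUID(candidate)
    except ValueError:
        return False
    # uuid.UUID also accepts unhyphenated and braced forms, so compare
    # against the canonical form to require the hyphenated layout.
    return str(parsed) == candidate.lower()


if __name__ == "__main__":
    print(looks_like_account_id("12345678-1234-1234-1234-123456789abc"))
    print(looks_like_account_id("not-an-id"))
```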

Add an account nickname

To help identify your Databricks account in the Databricks UI, give your account a human-readable nickname. This nickname displays at the top of the account console and in the dropdown menu next to your account ID. Account nicknames are especially useful if you have more than one Databricks account.

To add an account nickname:

- Log in to the account console and click the Settings icon in the sidebar.

- Click the Account settings tab.

- Under Account name, enter your new account nickname, and then click Save.

You can update account nicknames at any time.

Change the account console language settings

The account console is available in multiple languages. To change the account console language, click Settings > Language Settings > My Preferences tab.

Manage email preferences

Databricks may occasionally send emails with personalized product and feature recommendations based on your use of Databricks. These messages can include information to help users get started with Databricks or learn about new features and previews.

You can manage whether you receive these emails in the account console:

- Log in to the account console and click the Settings icon in the sidebar.

- In the My preferences section, click the Instructional product and feature emails toggle.

You can also manage your promotional email communications by clicking Manage under Promotional email communications or by going to the Marketing preference center. Non-admin users can update this setting by clicking the My preferences link next to their workspace in the account console.