Identify and delete empty Vector Search endpoints

This page describes how you can identify and delete empty Vector Search endpoints. Because Vector Search endpoints are workspace-specific resources, you need to repeat this process for each workspace separately.

Requirements

- Databricks SDK for Python (databricks-sdk).

- Databricks Vector Search Python SDK (databricks-vectorsearch).

- Authentication configured (OAuth, PAT, or configuration profiles).

- CAN_MANAGE permission for Vector Search endpoints in target workspaces.

To install the required SDKs in your Databricks notebook or local Python environment:

# In a Databricks notebook

%pip install databricks-sdk databricks-vectorsearch

# In local Python environment

# pip install databricks-sdk databricks-vectorsearch

Identify empty endpoints

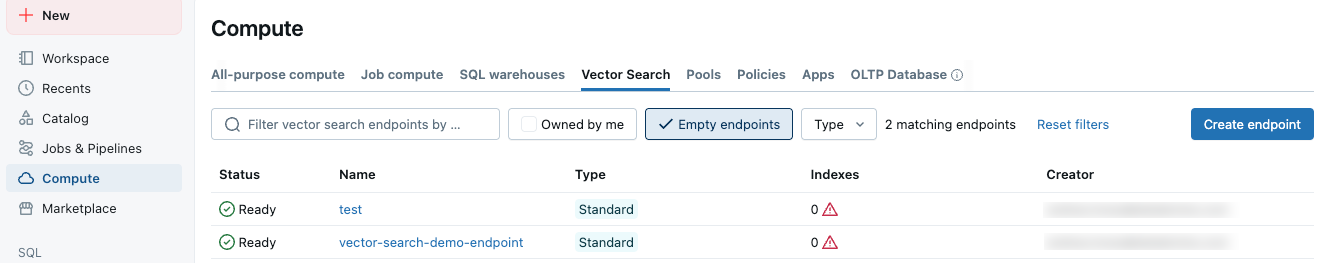

In the Databricks UI, vector search endpoints are shown on the Vector Search tab of the Compute screen. Toggle the Empty endpoints checkbox to display endpoints that have no indexes associated with them. Empty endpoints are also marked with a warning triangle icon as shown.
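The same check can be done programmatically without deleting anything. The following is a minimal sketch, assuming a client that exposes the list_endpoints and list_indexes calls used later on this page and that both return dictionaries. The helper name find_empty_endpoints is hypothetical.

```python
def find_empty_endpoints(client):
    """Return the names of endpoints that have no indexes (nothing is deleted)."""
    empty = []
    # Assumption: .get() is used defensively in case a key is absent
    # from the response when there is nothing to list.
    for endpoint in client.list_endpoints().get("endpoints", []):
        name = endpoint["name"]
        indexes = client.list_indexes(name=name).get("vector_indexes", [])
        if not indexes:
            empty.append(name)
    return empty
```

With an authenticated client (see Authentication), calling find_empty_endpoints(client) should return the same endpoints that the Empty endpoints checkbox displays.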

Authentication

This section describes authentication options.

Option 1. Run inside a Databricks notebook

When you run the code in a Databricks workspace notebook, authentication is automatic:

from databricks.vector_search.client import VectorSearchClient

# Credentials are picked up automatically from notebook context

client = VectorSearchClient()

Option 2. Personal access token (PAT)

For external environments, provide explicit credentials:

from databricks.vector_search.client import VectorSearchClient

client = VectorSearchClient(
    workspace_url="https://<your-instance>.cloud.databricks.com",
    personal_access_token="dapiXXXXXXXXXXXXXXXXXXXXXXXX"
)

Option 3. Use configuration profiles (Recommended for multiple workspaces)

Create a .databrickscfg file in your home directory and include a profile for each workspace:

[DEFAULT]

host = https://workspace1.cloud.databricks.com

token = dapiXXXXXXXXXXXXXXXXXXXXXXXX

[PRODUCTION]

host = https://workspace2.cloud.databricks.com

token = dapiYYYYYYYYYYYYYYYYYYYYYYYY

[DEVELOPMENT]

host = https://workspace3.cloud.databricks.com

token = dapiZZZZZZZZZZZZZZZZZZZZZZZZ
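If the file contains many profiles, the list of profile names can be read from it rather than typed by hand. The following is a rough sketch using Python's standard configparser module. The helper name profiles_from_cfg is hypothetical, and the output shape (a list of dictionaries with a profile_name key) is chosen to match the workspace_configs list used later on this page.

```python
import configparser
from pathlib import Path


def profiles_from_cfg(path=None):
    """Return one {'profile_name': ...} entry per profile in a .databrickscfg file."""
    cfg_path = Path(path) if path else Path.home() / ".databrickscfg"
    # Disable interpolation so a '%' in a value is not treated specially
    parser = configparser.ConfigParser(interpolation=None)
    parser.read(cfg_path)

    names = []
    # configparser treats [DEFAULT] specially and omits it from sections()
    if parser.defaults():
        names.append("DEFAULT")
    names.extend(parser.sections())
    return [{"profile_name": name} for name in names]
```

Only the profile names are read. Credentials stay in the file and are resolved later by the SDK when a profile is used.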

If you prefer not to use configuration profiles, you can specify credentials directly. The cleanup_multiple_workspaces function called here is defined in Delete endpoints across multiple workspaces.

# Define workspaces with explicit credentials
workspace_configs = [
    {
        'workspace_url': 'https://workspace1.cloud.databricks.com',
        'token': 'dapiXXXXXXXXXXXXXXXXXXXXXXXX'
    },
    {
        'workspace_url': 'https://workspace2.cloud.databricks.com',
        'token': 'dapiYYYYYYYYYYYYYYYYYYYYYYYY'
    }
]

# Run cleanup, set `dry_run=False` to perform actual deletion
results = cleanup_multiple_workspaces(workspace_configs, dry_run=True)

Delete endpoints in a single workspace

Vector Search endpoints are workspace-specific. Here is a basic script to find and delete empty endpoints in a single workspace. To clean up empty endpoints across multiple workspaces, see Delete endpoints across multiple workspaces.

Endpoint deletion is irreversible. Use the option dry_run=True to see a list of the endpoints that will be deleted. After you have confirmed that the list is correct, run the script with dry_run=False.

from databricks.vector_search.client import VectorSearchClient

def cleanup_empty_endpoints(client, dry_run=True):
    """
    Find and delete empty Vector Search endpoints.

    Args:
        client: VectorSearchClient instance
        dry_run: If True, only print what would be deleted without actually deleting

    Returns:
        List of deleted endpoint names
    """
    deleted_endpoints = []

    # List all Vector Search endpoints
    endpoints = client.list_endpoints()

    # .get() guards against a key being absent when there is nothing to list
    for endpoint in endpoints.get("endpoints", []):
        # Store the name once; nesting double quotes inside a double-quoted
        # f-string is a syntax error before Python 3.12
        name = endpoint["name"]

        # List indexes in this endpoint
        indexes = list(client.list_indexes(name=name).get("vector_indexes", []))

        if len(indexes) == 0:
            if dry_run:
                print(f"[DRY RUN] Would delete empty endpoint: '{name}'")
            else:
                print(f"Deleting empty endpoint: '{name}'")
                try:
                    client.delete_endpoint(name)
                    deleted_endpoints.append(name)
                    print(f"✓ Successfully deleted: {name}")
                except Exception as e:
                    print(f"✗ Failed to delete {name}: {e}")
        else:
            print(f"Endpoint '{name}' has {len(indexes)} indexes - keeping")

    return deleted_endpoints

# Example usage

client = VectorSearchClient() # Uses default authentication

# Set `dry_run=False` when you are ready to delete endpoints

deleted = cleanup_empty_endpoints(client, dry_run=True)

print(f"\nTotal endpoints deleted: {len(deleted)}")

Delete endpoints across multiple workspaces

To clean up empty endpoints across multiple workspaces, iterate through your configuration profiles:

- Endpoint deletion is irreversible. Use the option dry_run=True to see a list of the endpoints that will be deleted. After you have confirmed that the list is correct, run the script with dry_run=False.

- When processing many workspaces, be mindful of API rate limits. Add delays if necessary:

import time

for config in workspace_configs:
    # Set `dry_run=False` to perform actual deletion
    result = cleanup_workspace(**config, dry_run=True)
    time.sleep(2)  # Add delay between workspaces
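A fixed delay spaces out requests but does not recover from a call that has already been rejected. One common complement is to retry the failed call with exponentially growing delays. The following is a minimal sketch, not part of either SDK. The helper name call_with_backoff and its parameters are hypothetical, and it retries on any exception, which a real script would likely narrow to rate-limit errors.

```python
import time


def call_with_backoff(fn, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fn(), retrying on any exception with exponentially growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception:
            # Give up and re-raise once the last attempt has failed
            if attempt == max_attempts:
                raise
            # Delays grow as base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** (attempt - 1))
```

This is best wrapped around an individual client call, for example call_with_backoff(lambda: client.list_endpoints()). Wrapping cleanup_workspace itself has no effect, because that function catches exceptions and returns them in its result dictionary.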

from databricks.sdk import WorkspaceClient

from databricks.vector_search.client import VectorSearchClient

import logging

# Configure logging

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

logger = logging.getLogger(__name__)

def cleanup_workspace(profile_name=None, workspace_url=None, token=None, dry_run=True):
    """
    Clean up empty endpoints in a specific workspace.

    Args:
        profile_name: Name of configuration profile to use
        workspace_url: Direct workspace URL (if not using profile)
        token: PAT token (if not using profile)
        dry_run: If True, only show what would be deleted

    Returns:
        Dict with cleanup results
    """
    try:
        # Initialize client based on authentication method
        if profile_name:
            # Use Databricks SDK to get credentials from profile
            w = WorkspaceClient(profile=profile_name)
            workspace_url = w.config.host
            client = VectorSearchClient(
                workspace_url=workspace_url,
                personal_access_token=w.config.token
            )
            logger.info(f"Connected to workspace using profile '{profile_name}': {workspace_url}")
        elif workspace_url and token:
            client = VectorSearchClient(
                workspace_url=workspace_url,
                personal_access_token=token
            )
            logger.info(f"Connected to workspace: {workspace_url}")
        else:
            # Use default authentication (notebook context)
            client = VectorSearchClient()
            logger.info("Connected using default authentication")

        # Perform cleanup
        deleted = cleanup_empty_endpoints(client, dry_run=dry_run)

        return {
            'workspace': workspace_url or 'default',
            'success': True,
            'deleted_count': len(deleted),
            'deleted_endpoints': deleted
        }

    except Exception as e:
        logger.error(f"Failed to process workspace: {str(e)}")
        return {
            'workspace': workspace_url or profile_name or 'default',
            'success': False,
            'error': str(e)
        }

def cleanup_multiple_workspaces(workspace_configs, dry_run=True):
    """
    Clean up empty endpoints across multiple workspaces.

    Args:
        workspace_configs: List of workspace configurations
        dry_run: If True, only show what would be deleted

    Returns:
        Summary of cleanup results
    """
    results = []

    for config in workspace_configs:
        logger.info(f"\n{'='*60}")
        result = cleanup_workspace(**config, dry_run=dry_run)
        results.append(result)
        logger.info(f"{'='*60}\n")

    # Print summary
    total_deleted = sum(r['deleted_count'] for r in results if r['success'])
    successful = sum(1 for r in results if r['success'])
    failed = sum(1 for r in results if not r['success'])

    logger.info("\n" + "="*60)
    logger.info("CLEANUP SUMMARY")
    logger.info("="*60)
    logger.info(f"Workspaces processed: {len(results)}")
    logger.info(f"Successful: {successful}")
    logger.info(f"Failed: {failed}")
    logger.info(f"Total endpoints deleted: {total_deleted}")

    if failed > 0:
        logger.warning("\nFailed workspaces:")
        for r in results:
            if not r['success']:
                logger.warning(f" - {r['workspace']}: {r['error']}")

    return results

# Example: Clean up using configuration profiles

workspace_configs = [
    {'profile_name': 'DEFAULT'},
    {'profile_name': 'PRODUCTION'},
    {'profile_name': 'DEVELOPMENT'}
]

# Set `dry_run=False` to do actual deletion.

results = cleanup_multiple_workspaces(workspace_configs, dry_run=True)

Custom filtering

You can add custom logic to exclude certain endpoints from deletion, as shown:

def should_delete_endpoint(endpoint, indexes):
    """
    Custom logic to determine if an endpoint should be deleted.

    Args:
        endpoint: Endpoint dictionary, as returned by client.list_endpoints()
        indexes: List of indexes in the endpoint

    Returns:
        Boolean indicating if endpoint should be deleted
    """
    # Don't delete if it has indexes
    if len(indexes) > 0:
        return False

    # Endpoints are dictionaries in the scripts on this page, so read the
    # name by key rather than by attribute
    name = endpoint["name"]

    # Don't delete endpoints with specific naming patterns
    protected_patterns = ['prod-', 'critical-', 'do-not-delete']
    for pattern in protected_patterns:
        if pattern in name.lower():
            logger.warning(f"Skipping protected endpoint: {name}")
            return False

    # Add more custom logic as needed
    return True
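A filter like this only takes effect once the cleanup loop calls it. The following is one possible way to wire it in, written as a sketch rather than the page's own method. The function name cleanup_filtered_endpoints is hypothetical, and it assumes the predicate has the signature (endpoint, indexes) and receives each endpoint as a dictionary.

```python
def cleanup_filtered_endpoints(client, should_delete, dry_run=True):
    """Delete endpoints for which should_delete(endpoint, indexes) returns True."""
    deleted = []
    # Assumption: .get() is used defensively in case a key is absent
    for endpoint in client.list_endpoints().get("endpoints", []):
        name = endpoint["name"]
        indexes = client.list_indexes(name=name).get("vector_indexes", [])

        # The predicate makes the whole decision, including the emptiness check
        if not should_delete(endpoint, indexes):
            continue

        if dry_run:
            print(f"[DRY RUN] Would delete endpoint: '{name}'")
        else:
            client.delete_endpoint(name)
            deleted.append(name)
    return deleted
```

Passing the predicate as an argument keeps the deletion loop unchanged when the protection rules change.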

Export results

To save cleanup results to a file for auditing:

import json

from datetime import datetime

def export_results(results, filename=None):
    """Export cleanup results to JSON file."""
    if not filename:
        timestamp = datetime.now().strftime('%Y%m%d_%H%M%S')
        filename = f'vector_search_cleanup_{timestamp}.json'

    with open(filename, 'w') as f:
        json.dump({
            'timestamp': datetime.now().isoformat(),
            'results': results
        }, f, indent=2)

    logger.info(f"Results exported to: {filename}")
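If the audit trail is reviewed in a spreadsheet, a flat CSV file may be easier to work with than JSON. The following is a sketch under the assumption that each result is a dictionary with the keys returned by cleanup_workspace (workspace, success, deleted_count, deleted_endpoints, error). The function name export_results_csv and the semicolon separator are illustrative choices.

```python
import csv


def export_results_csv(results, filename):
    """Write one CSV row per workspace result."""
    fields = ["workspace", "success", "deleted_count", "deleted_endpoints", "error"]
    with open(filename, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fields)
        writer.writeheader()
        for r in results:
            writer.writerow({
                "workspace": r.get("workspace", ""),
                "success": r.get("success", False),
                "deleted_count": r.get("deleted_count", 0),
                # Join endpoint names so each workspace stays on a single row
                "deleted_endpoints": ";".join(r.get("deleted_endpoints", [])),
                "error": r.get("error", ""),
            })
```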

Troubleshooting

Authentication issues

- Verify that your personal access tokens (PATs) are valid and not expired.

- Ensure configuration profiles are correctly formatted.

- Check that your tokens have the necessary permissions.

Permission errors

Verify that your user or service principal has CAN_MANAGE permission for Vector Search endpoints.

Network issues

For environments with proxy requirements, configure the SDK appropriately:

import os

os.environ['HTTPS_PROXY'] = 'http://your-proxy:po

Next steps

- Schedule this script to run periodically using Lakeflow Jobs.

- Integrate with your infrastructure-as-code pipeline.

- Add email or Slack notifications for cleanup summaries.

- Create a dashboard to track endpoint usage across workspaces.