MLflow for ML model lifecycle

This article describes how MLflow on Databricks is used to develop high-quality generative AI agents and machine learning models.

If you're just getting started with Databricks, consider trying MLflow on Databricks Free Edition.

What is MLflow?

MLflow is an open source platform for developing models and generative AI applications. It has the following primary components:

- Tracking: Allows you to track experiments to record and compare parameters and results.

- Models: Allow you to manage and deploy models from various ML libraries to various model serving and inference platforms.

- Model Registry: Allows you to manage the model deployment process from staging to production, with model versioning and annotation capabilities.

- AI agent evaluation and tracing: Allows you to develop high-quality AI agents by helping you compare, evaluate, and troubleshoot agents.

MLflow supports Java, Python, R, and REST APIs.

MLflow 3

MLflow 3 on Databricks delivers state-of-the-art experiment tracking, observability, and performance evaluation for machine learning models, generative AI applications, and agents on the Databricks lakehouse. Using MLflow 3 on Databricks, you can:

-

Centrally track and analyze the performance of your models, AI applications, and agents across all environments, from interactive queries in a development notebook through production batch or real-time serving deployments.

-

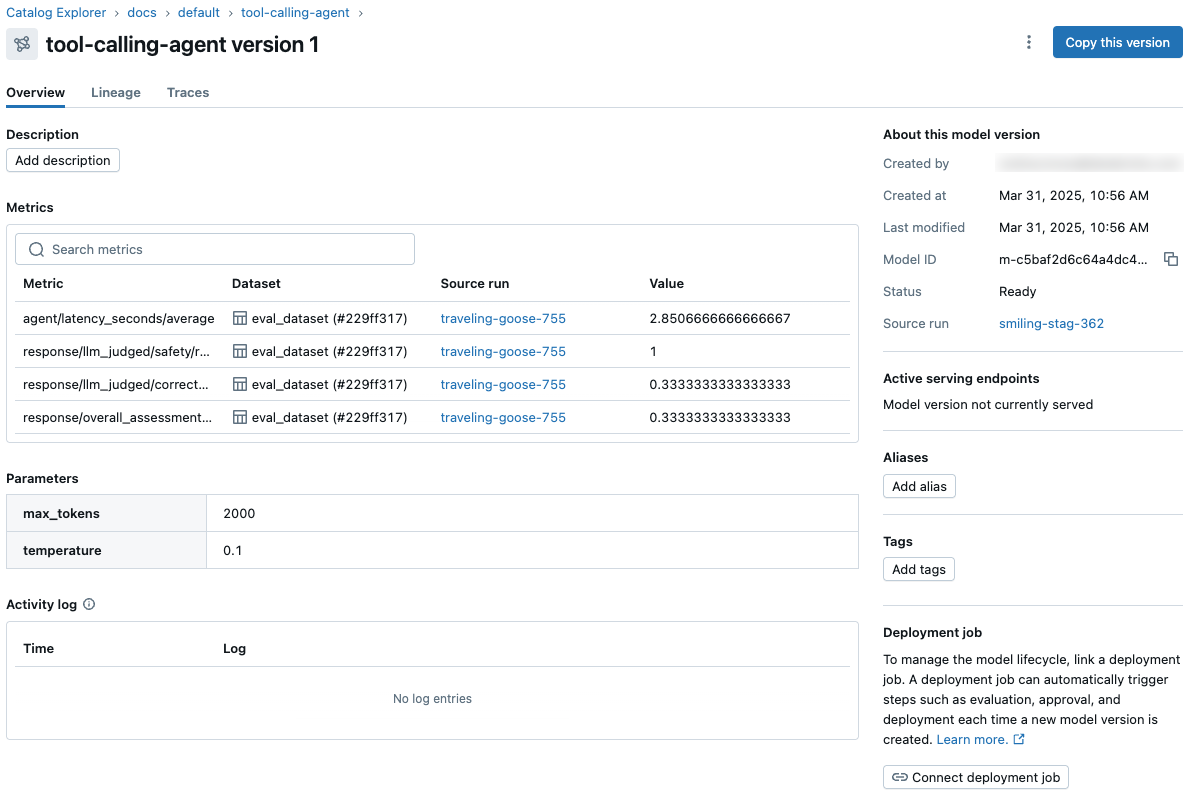

Orchestrate evaluation and deployment workflows using Unity Catalog and access comprehensive status logs for each version of your model, AI application, or agent.

-

View and access model metrics and parameters from the model version page in Unity Catalog and from the REST API.

-

Annotate requests and responses (traces) for all of your gen AI applications and agents, enabling human experts and automated techniques (such as LLM-as-a-judge) to provide rich feedback. You can leverage this feedback to assess and compare the performance of application versions and to build datasets for improving quality.

These capabilities simplify and streamline evaluation, deployment, debugging, and monitoring for all of your AI initiatives.

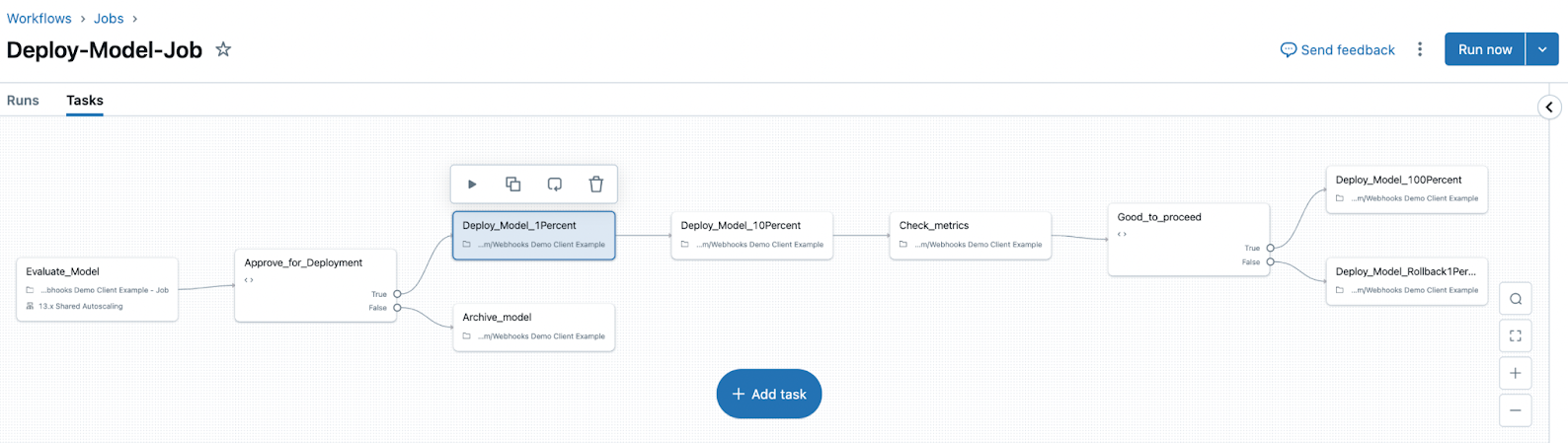

MLflow 3 also introduces the concepts of Logged Models and Deployment Jobs.

- Logged Models help you track a model's progress throughout its lifecycle. When you log a model using `log_model()`, a `LoggedModel` is created that persists throughout the model's lifecycle, across different environments and runs, and contains links to artifacts such as metadata, metrics, parameters, and the code used to generate the model. You can use the Logged Model to compare models against each other, find the most performant model, and track down information during debugging.

- Deployment jobs can be used to manage the model lifecycle, including steps like evaluation, approval, and deployment. These model workflows are governed by Unity Catalog, and all events are saved to an activity log that is available on the model version page in Unity Catalog.

See the following articles to install and get started using MLflow 3.

- Get started with MLflow 3 for models.

- Track and compare models using MLflow Logged Models.

- Model Registry improvements with MLflow 3.

- MLflow 3 deployment jobs.

Databricks-managed MLflow

Databricks provides a fully managed and hosted version of MLflow, building on the open source experience to make it more robust and scalable for enterprise use.

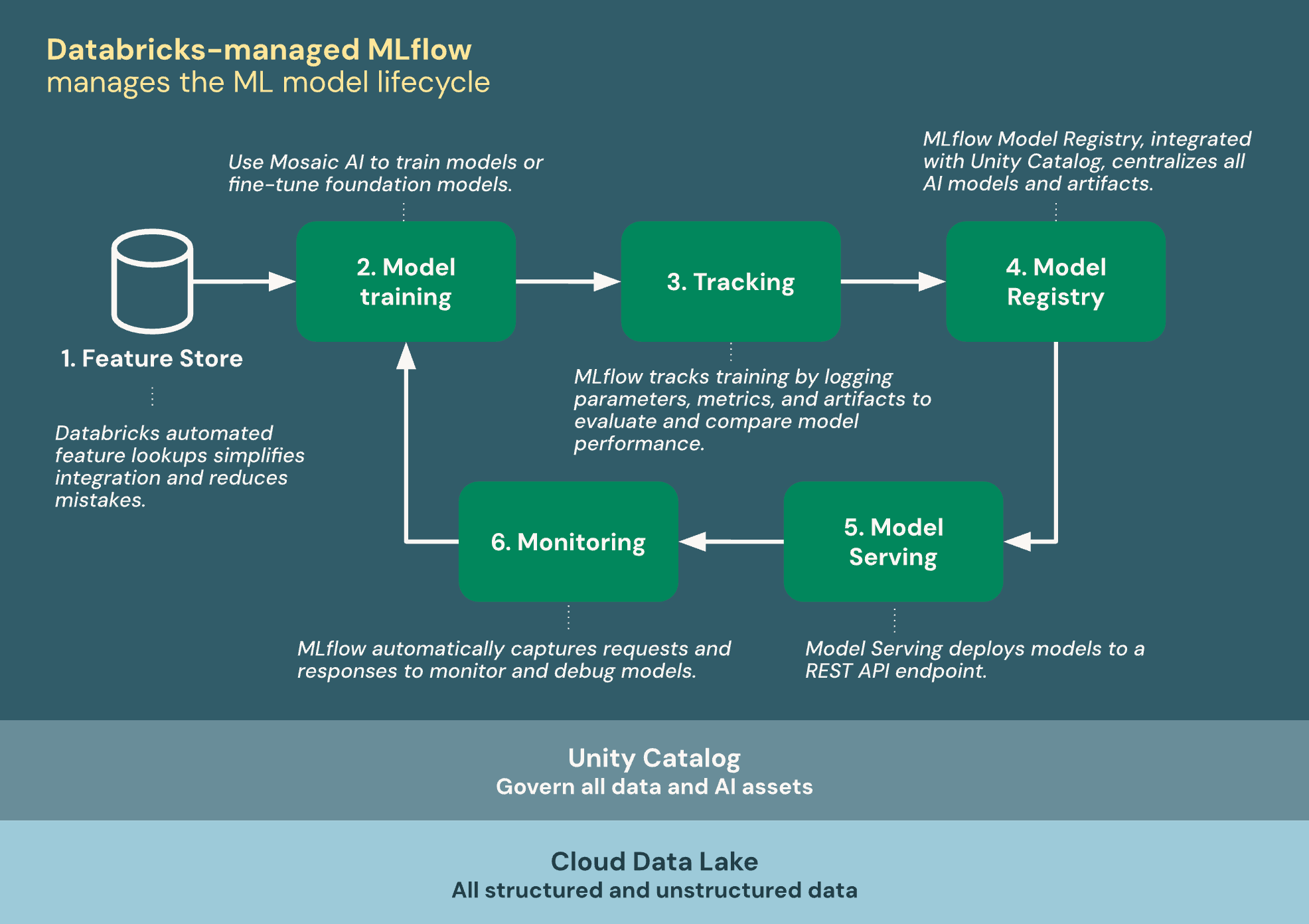

The following diagram shows how Databricks integrates with MLflow to train and deploy machine learning models.

Databricks-managed MLflow is built on Unity Catalog and the Cloud Data Lake to unify all your data and AI assets in the ML lifecycle:

- Feature store: Databricks automated feature lookups simplify integration and reduce mistakes.

- Train models: Use Mosaic AI to train models or fine-tune foundation models.

- Tracking: MLflow tracks training by logging parameters, metrics, and artifacts to evaluate and compare model performance.

- Model Registry: MLflow Model Registry, integrated with Unity Catalog, centralizes AI models and artifacts.

- Model Serving: Mosaic AI Model Serving deploys models to a REST API endpoint.

- Monitoring: Mosaic AI Model Serving automatically captures requests and responses to monitor and debug models. MLflow augments this data with trace data for each request.

Model training

MLflow Models are at the core of AI and ML development on Databricks. MLflow Models are a standardized format for packaging machine learning models and generative AI agents. The standardized format ensures that models and agents can be used by downstream tools and workflows on Databricks.

- MLflow documentation - Models.

Databricks provides features to help you train different kinds of ML models.

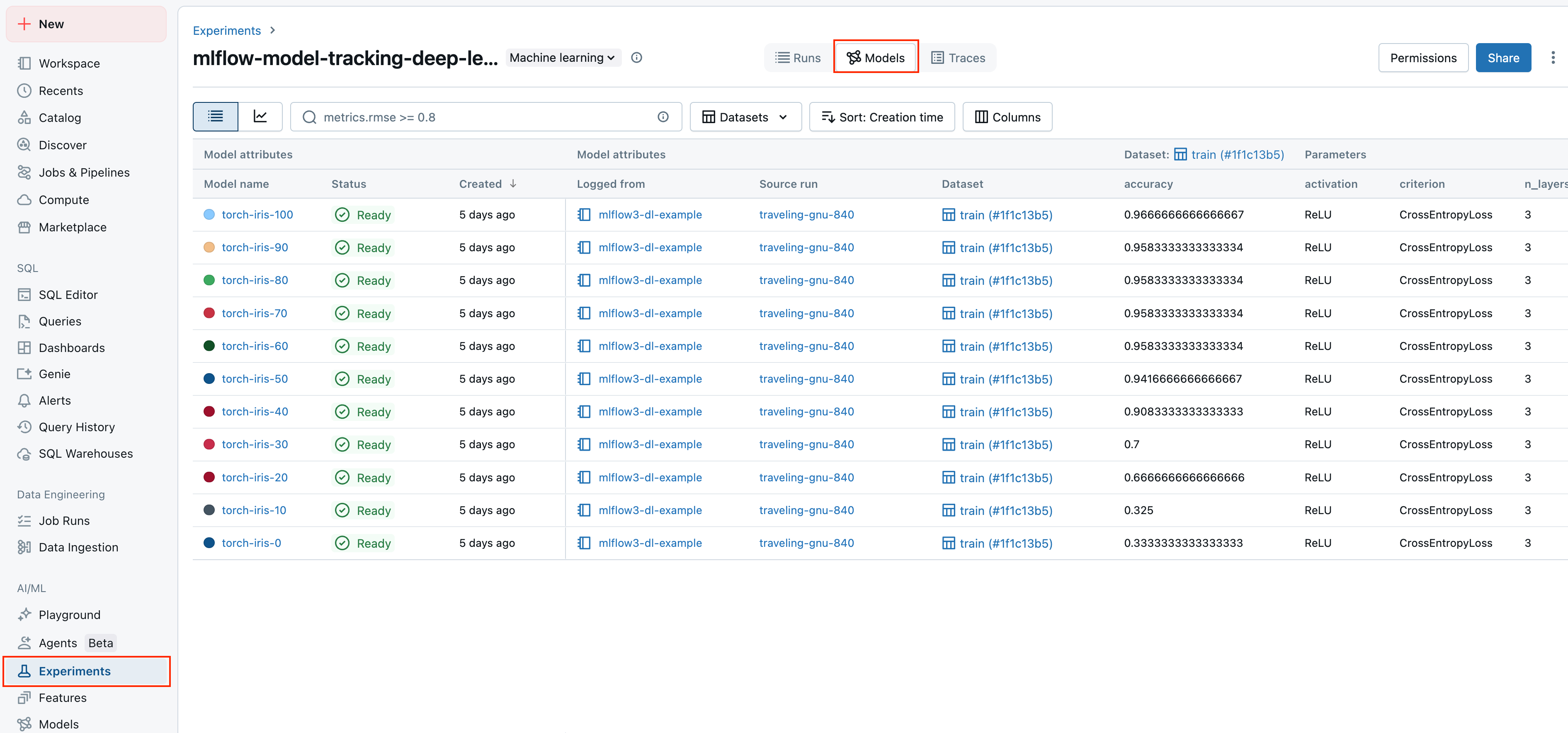

Experiment tracking

Databricks uses MLflow experiments as organizational units to track your work while developing models.

Experiment tracking lets you log and manage parameters, metrics, artifacts, and code versions during machine learning training and agent development. Organizing logs into experiments and runs allows you to compare models, analyze performance, and iterate more easily.

- Experiment tracking using Databricks.

- See MLflow documentation for general information on runs and experiment tracking.

Model Registry with Unity Catalog

MLflow Model Registry is a centralized model repository, UI, and set of APIs for managing the model deployment process.

Databricks integrates Model Registry with Unity Catalog to provide centralized governance for models. Unity Catalog integration allows you to access models across workspaces, track model lineage, and discover models for reuse.

- Manage models using Databricks Unity Catalog.

- See MLflow documentation for general information on Model Registry.

Model Serving

Databricks Model Serving is tightly integrated with MLflow Model Registry and provides a unified, scalable interface for deploying, governing, and querying AI models. Each model you serve is available as a REST API that you can integrate into web or client applications.

Although they are distinct components, Model Serving relies heavily on MLflow Model Registry to handle model versioning, dependency management, validation, and governance.
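To make the REST interface concrete, here is a minimal sketch that builds, but does not send, a scoring request for a served model using only the Python standard library. The workspace URL, endpoint name, token placeholder, and feature names are hypothetical. The `/serving-endpoints/<name>/invocations` path and the `dataframe_records` payload key are assumptions about the serving API that this article does not spell out, so check them against the Model Serving documentation for your workspace.

```python
import json
import urllib.request

# Hypothetical values: replace with your workspace URL, endpoint name, and token.
WORKSPACE_URL = "https://example.cloud.databricks.com"
ENDPOINT_NAME = "churn-model"
TOKEN = "<personal-access-token>"


def build_scoring_request(records):
    """Build (but do not send) a POST request for a served model."""
    # Assumption: the endpoint accepts rows as a list of JSON objects
    # under the "dataframe_records" key.
    payload = json.dumps({"dataframe_records": records}).encode("utf-8")
    return urllib.request.Request(
        url=f"{WORKSPACE_URL}/serving-endpoints/{ENDPOINT_NAME}/invocations",
        data=payload,
        headers={
            "Authorization": f"Bearer {TOKEN}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical feature names for a single input row.
request = build_scoring_request([{"tenure_months": 12, "monthly_charges": 70.5}])
print(request.get_method(), request.full_url)

# To send the request against a real endpoint:
# with urllib.request.urlopen(request) as response:
#     predictions = json.load(response)
```

Keeping request construction separate from sending makes the payload easy to inspect before any credentials or network access are involved.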

AI agent development and evaluation

For AI agent development, Databricks integrates with MLflow similarly to ML model development. However, there are a few key differences:

- To create AI agents on Databricks, use Mosaic AI Agent Framework, which relies on MLflow to track agent code, performance metrics, and agent traces.

- To evaluate agents on Databricks, use Mosaic AI Agent Evaluation, which relies on MLflow to track evaluation results.

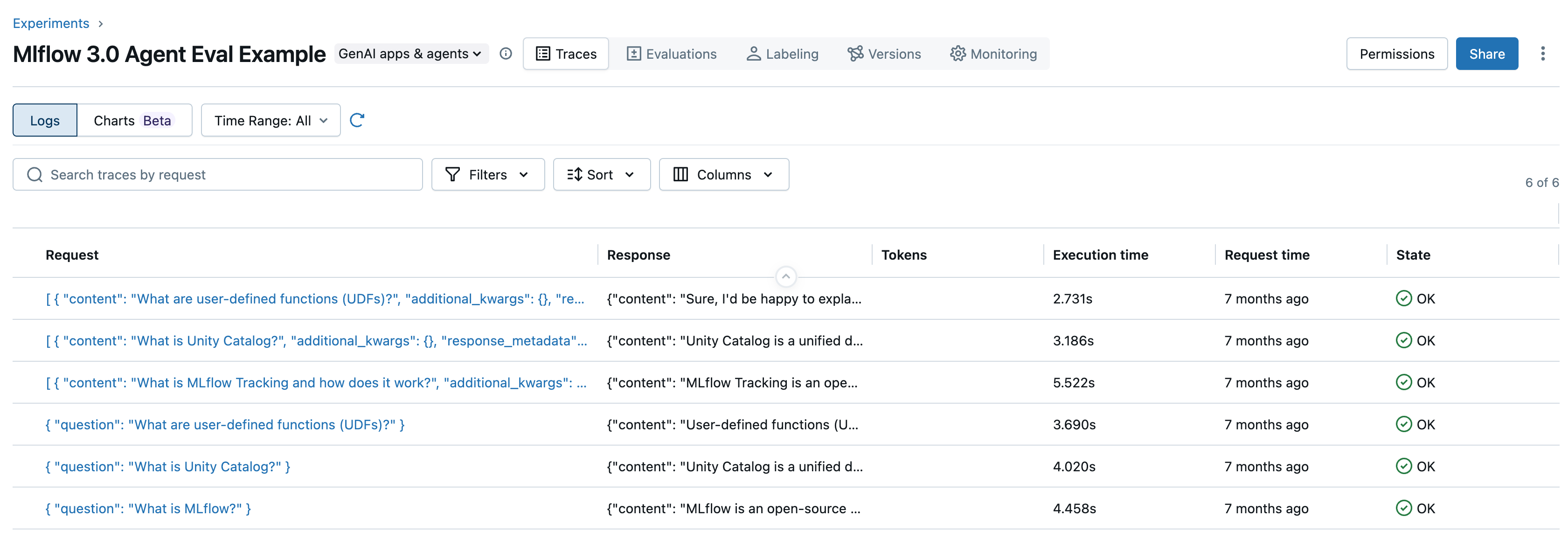

- MLflow tracking for agents also includes MLflow Tracing. MLflow Tracing allows you to see detailed information about the execution of your agent's services. Tracing records the inputs, outputs, and metadata associated with each intermediate step of a request, letting you quickly find the source of unexpected behavior in agents.

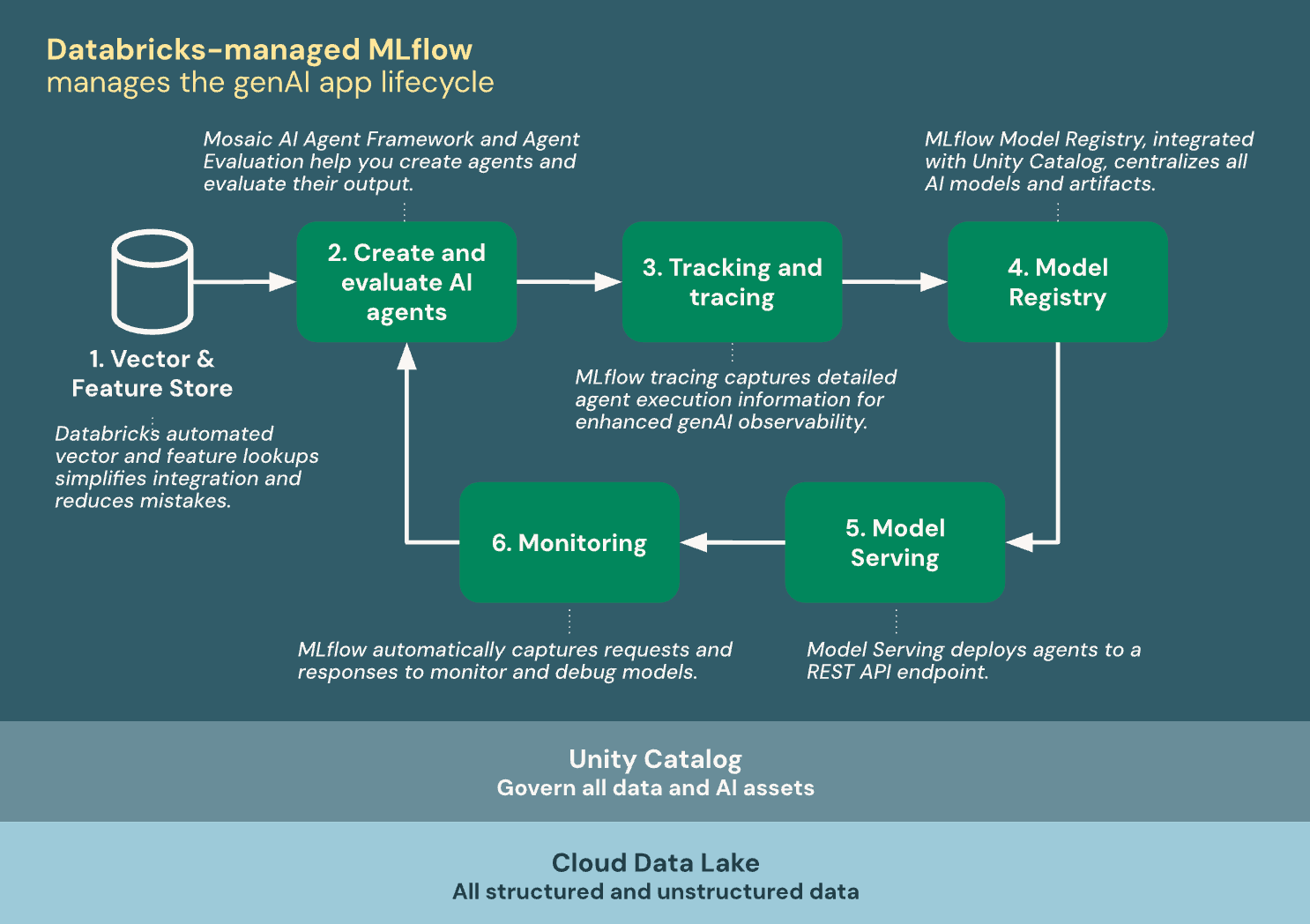

The following diagram shows how Databricks integrates with MLflow to create and deploy AI agents.

Databricks-managed MLflow is built on Unity Catalog and the Cloud Data Lake to unify all your data and AI assets in the gen AI app lifecycle:

- Vector & feature store: Databricks automated vector and feature lookups simplify integration and reduce mistakes.

- Create and evaluate AI agents: Mosaic AI Agent Framework and Agent Evaluation help you create agents and evaluate their output.

- Tracking & tracing: MLflow tracing captures detailed agent execution information for enhanced generative AI observability.

- Model Registry: MLflow Model Registry, integrated with Unity Catalog, centralizes AI models and artifacts.

- Model Serving: Mosaic AI Model Serving deploys models to a REST API endpoint.

- Monitoring: MLflow automatically captures requests and responses to monitor and debug models.

Open source vs. Databricks-managed MLflow features

For general MLflow concepts, APIs, and features shared between open source and Databricks-managed versions, refer to MLflow documentation. For features exclusive to Databricks-managed MLflow, see Databricks documentation.

The following table highlights the key differences between open source MLflow and Databricks-managed MLflow and provides documentation links to help you learn more:

| Feature | Availability on open source MLflow | Availability on Databricks-managed MLflow |
|---|---|---|
| Security | User must provide their own security governance layer | |
| Disaster recovery | Unavailable | |
| Experiment tracking | MLflow Tracking API | MLflow Tracking API integrated with Databricks advanced experiment tracking |
| Model Registry | MLflow Model Registry | MLflow Model Registry integrated with Databricks Unity Catalog |
| Unity Catalog integration | Open source integration with Unity Catalog | |
| Model deployment | User-configured integrations with external serving solutions (SageMaker, Kubernetes, container services, and so on) | Databricks Model Serving and external serving solutions |
| AI agents | MLflow LLM development | MLflow LLM development integrated with Mosaic AI Agent Framework and Agent Evaluation |
| Encryption | Unavailable | Encryption using customer-managed keys |

Open source telemetry collection was introduced in MLflow 3.2.0, and is disabled on Databricks by default. For more details, refer to the MLflow usage tracking documentation.