Serverless compute plane networking

This page introduces tools to secure network access between the compute resources in the Databricks serverless compute plane and customer resources. To learn more about the control plane and the serverless compute plane, see Networking security architecture.

To learn more about classic compute and serverless compute, see Compute.

Databricks charges for networking costs when serverless workloads connect to customer resources. See Understand Databricks serverless networking costs.

Serverless compute plane networking overview

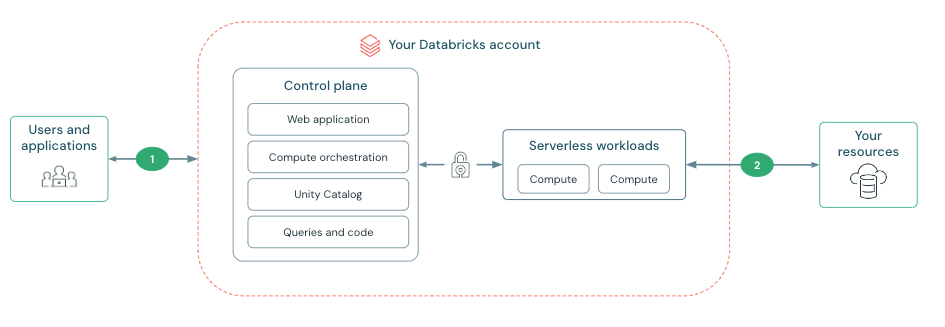

Serverless compute resources run in the serverless compute plane, which is managed by Databricks. Account admins can configure secure connectivity between the serverless compute plane and their resources. This network connection is labeled as 2 on the diagram below:

Connectivity between the control plane and the serverless compute plane is always over the cloud network backbone and not the public internet. For more information on configuring security features on the other network connections in the diagram, see Networking.

Data transfer costs

Serverless compute plane networking products might incur data transfer costs. For detailed information on data transfer pricing and types of data transfer, see Data transfer and connectivity. To avoid cross-region charges, Databricks recommends that you create a workspace in the same region as your resources.

Databricks private connectivity enables secure connections to cloud resources without public IP addresses. When using private connectivity with serverless workloads, customers are charged per hour for each private endpoint and per GB for data processed through these endpoints.

Features using Databricks private connectivity are in private preview. Contact your Databricks account team for access.
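
The billing model described above has two components: an hourly charge for each private endpoint and a per-GB charge for data processed through those endpoints. As a rough sketch of that arithmetic, the following Python function adds the two components together. The function name, its parameters, and both rates are hypothetical placeholders chosen for illustration; they are not Databricks prices, and actual rates should come from the pricing documentation.

```python
from decimal import Decimal


def estimate_private_connectivity_cost(
    endpoint_count: int,
    hours: int,
    gb_processed: Decimal,
    hourly_rate_per_endpoint: Decimal,
    rate_per_gb: Decimal,
) -> Decimal:
    """Estimate a charge as (endpoints x hours x hourly rate) + (GB x per-GB rate)."""
    if endpoint_count < 0 or hours < 0 or gb_processed < 0:
        raise ValueError("counts, hours, and data volume must be non-negative")
    # Each private endpoint is billed for every hour it exists.
    endpoint_charge = endpoint_count * hours * hourly_rate_per_endpoint
    # Data processed through the endpoints is billed per GB.
    data_charge = gb_processed * rate_per_gb
    return endpoint_charge + data_charge


# Placeholder rates for illustration only; these are NOT actual Databricks prices.
example = estimate_private_connectivity_cost(
    endpoint_count=2,
    hours=720,
    gb_processed=Decimal("500"),
    hourly_rate_per_endpoint=Decimal("0.01"),
    rate_per_gb=Decimal("0.01"),
)
print(example)
```

Decimal is used instead of float so that currency amounts do not pick up binary rounding error.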

What is a network connectivity configuration (NCC)?

Serverless network connectivity is managed with network connectivity configurations (NCCs). NCCs are account-level regional constructs that are used to manage private endpoint creation and firewall enablement at scale.

Account admins create NCCs in the account console. An NCC can be attached to one or more workspaces to enable firewalls for resources. An NCC contains a list of stable IP addresses. When an NCC is attached to a workspace, serverless compute in that workspace uses one of those IP addresses to connect to the cloud resource. You can allow list those networks on your resource firewall. See Configure a firewall for serverless compute access.

Creating a resource firewall also affects connectivity from the classic compute plane to your resource. You must also allow list the networks on your resource firewalls to connect to them from classic compute resources.
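
To illustrate what allow listing the NCC networks means in practice, here is a minimal sketch that uses only Python's standard `ipaddress` module. It checks whether a source address falls inside an allow list and reports which NCC networks are not yet covered by a firewall's rules. The function names and all CIDR ranges are hypothetical (the ranges are reserved documentation addresses); the real stable IP addresses come from your NCC, and this is not how any particular resource firewall is implemented.

```python
import ipaddress


def is_source_allowed(source_ip: str, allowed_networks: list[str]) -> bool:
    """Return True if source_ip falls inside any allow-listed network."""
    address = ipaddress.ip_address(source_ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in allowed_networks)


def missing_from_firewall(
    ncc_networks: list[str], firewall_networks: list[str]
) -> list[str]:
    """List the NCC networks that no single firewall rule fully covers."""
    rules = [ipaddress.ip_network(cidr) for cidr in firewall_networks]
    missing = []
    for cidr in ncc_networks:
        network = ipaddress.ip_network(cidr)
        # subnet_of raises TypeError across IP versions, so compare versions first.
        covered = any(
            network.version == rule.version and network.subnet_of(rule)
            for rule in rules
        )
        if not covered:
            missing.append(cidr)
    return missing


# Hypothetical values: documentation-reserved ranges, not real NCC addresses.
ncc_networks = ["192.0.2.0/28", "198.51.100.16/28"]
firewall_rules = ["192.0.2.0/24"]

print(is_source_allowed("192.0.2.5", ncc_networks))
print(missing_from_firewall(ncc_networks, firewall_rules))
```

A check like `missing_from_firewall` can help confirm that every network listed in an NCC has a matching rule before you tighten the firewall on a resource.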

NCC firewall enablement is not supported for Amazon S3 or Amazon DynamoDB. When reading from or writing to Amazon S3 buckets in the same region as your workspace, serverless compute resources access S3 directly using AWS gateway endpoints. This applies when serverless compute reads from and writes to your workspace storage bucket in your AWS account and to other S3 data sources in the same region.
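
The two rules in the preceding paragraph can be written as a small sketch for planning purposes. The function names and the lowercase service identifiers are assumptions made for this example, not Databricks or AWS API values. The helpers encode only what this page states: they say nothing about services the page does not name or about how cross-region S3 traffic is routed.

```python
# Services this page names as unsupported for NCC firewall enablement.
# The lowercase identifiers are hypothetical labels for this sketch.
NCC_FIREWALL_EXCLUSIONS = frozenset({"s3", "dynamodb"})


def ncc_firewall_excluded(service: str) -> bool:
    """Return True if the page names this service as unsupported for NCC firewalls."""
    return service.strip().lower() in NCC_FIREWALL_EXCLUSIONS


def same_region_gateway_access(workspace_region: str, bucket_region: str) -> bool:
    """Return True when the same-region S3 gateway endpoint rule applies.

    A False result only means the same-region rule does not apply; it does
    not say which connectivity method is used instead.
    """
    return workspace_region.strip().lower() == bucket_region.strip().lower()


print(ncc_firewall_excluded("DynamoDB"))
print(same_region_gateway_access("us-east-1", "us-east-1"))
print(same_region_gateway_access("us-east-1", "eu-west-1"))
```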

Video walkthrough

This video provides a deep dive into network connectivity configuration (7 minutes).

Databricks uses S3 gateway endpoints, private IPs, and public IPs to connect to resources based on their location and type. These connectivity methods are generally available unless explicitly stated otherwise.