Create vector search endpoints and indexes

This article describes how to create vector search endpoints and indexes using Mosaic AI Vector Search.

You can create and manage vector search components, such as vector search endpoints and vector search indexes, using the UI, the Python SDK, or the REST API.

For example notebooks illustrating how to create and query vector search endpoints, see Vector search example notebooks. For reference information, see the Python SDK reference.

Requirements

- Unity Catalog enabled workspace.

- Serverless compute enabled. For instructions, see Connect to serverless compute.

- For standard endpoints, the source table must have Change Data Feed enabled. See Use Delta Lake change data feed on Databricks.

- To create a vector search index, you must have CREATE TABLE privileges on the catalog schema where the index will be created.

- To query an index that is owned by another user, you must have additional privileges. See How to query a vector search index.

Permission to create and manage vector search endpoints is configured using access control lists. See Vector search endpoint ACLs.

Installation

To use the vector search SDK, you must install it in your notebook. Use the following code to install the package:

%pip install databricks-vectorsearch

dbutils.library.restartPython()

Then use the following command to import VectorSearchClient:

from databricks.vector_search.client import VectorSearchClient

For information about authentication, see Data protection and authentication.

Create a vector search endpoint

You can create a vector search endpoint using the Databricks UI, the Python SDK, or the REST API.

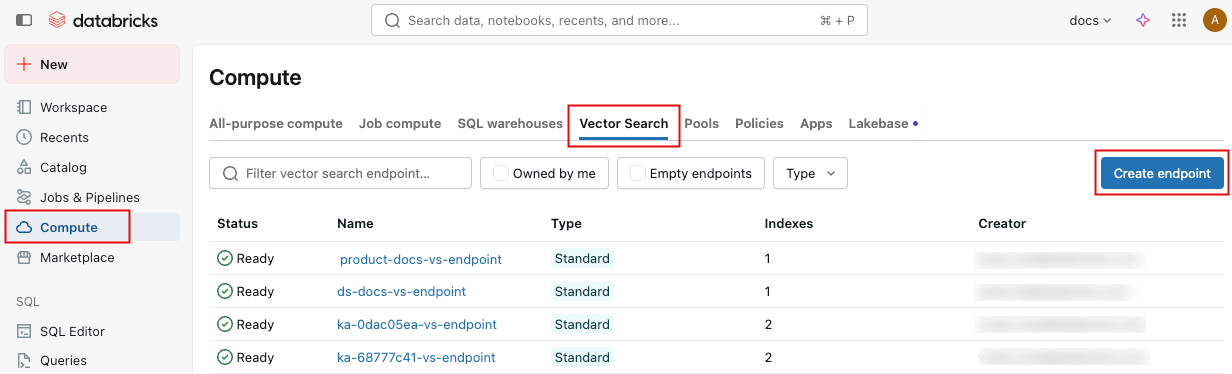

Create a vector search endpoint using the UI

Follow these steps to create a vector search endpoint using the UI.

-

In the left sidebar, click Compute.

-

Click the Vector Search tab and click Create endpoint.

-

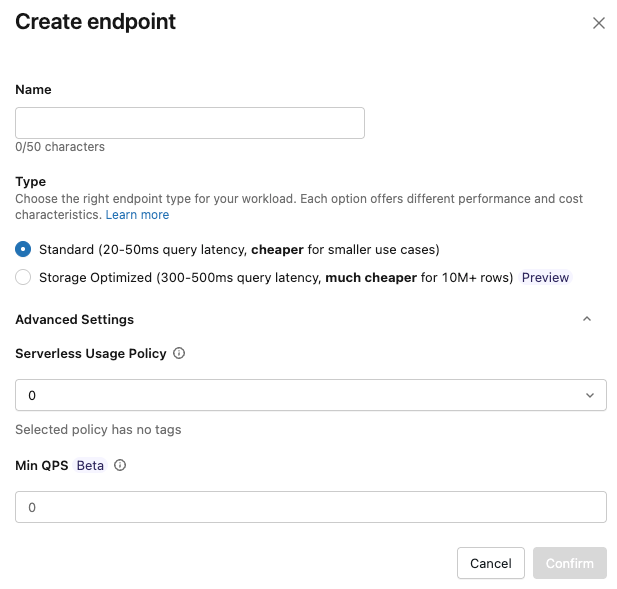

The Create endpoint form opens. Enter a name for this endpoint.

-

In the Type field, select Standard or Storage Optimized. See Endpoint options.

-

(Optional) Under Advanced settings, select a budget policy. See Mosaic AI Vector Search: Budget policies.

-

Click Confirm.

Create a vector search endpoint using the Python SDK

The following example uses the create_endpoint() SDK function to create a vector search endpoint.

# The following line automatically generates a personal access token (PAT) for authentication
client = VectorSearchClient()

# The following line uses the service principal token for authentication
# client = VectorSearchClient(service_principal_client_id=<CLIENT_ID>,service_principal_client_secret=<CLIENT_SECRET>)

client.create_endpoint(
    name="vector_search_endpoint_name",
    endpoint_type="STANDARD"  # or "STORAGE_OPTIMIZED"
)

Create a vector search endpoint using the REST API

See the REST API reference documentation: POST /api/2.0/vector-search/endpoints.
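As a rough illustration of the REST route, the following sketch builds the request without sending it. It assumes that the request body uses the same field names as the create_endpoint() SDK parameters (name and endpoint_type) and that a bearer token is used for authentication. The helper name build_create_endpoint_request, the workspace URL, and the token are placeholders, not part of any Databricks library. Check the REST API reference before relying on the body shape.

```python
import json
import urllib.request


def build_create_endpoint_request(workspace_url, token, name, endpoint_type="STANDARD"):
    """Build (but do not send) a POST request that creates a vector search endpoint."""
    # Assumption: body fields mirror the SDK's create_endpoint() parameters.
    body = json.dumps({"name": name, "endpoint_type": endpoint_type}).encode("utf-8")
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/vector-search/endpoints",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_create_endpoint_request(
    "https://example.cloud.databricks.com",  # placeholder workspace URL
    "dummy-token",  # placeholder token
    "vector_search_endpoint_name",
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# To actually send the request: urllib.request.urlopen(request)
```

Building the request separately from sending it makes it easy to inspect the URL and body before any call reaches the workspace.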

Create an endpoint with a minimum QPS target for high-throughput workloads

This feature is in Beta. Workspace admins can control access to this feature from the Previews page. See Manage Databricks previews.

For high-throughput workloads, you can create an endpoint with a minimum QPS target. This feature is available for standard endpoints only.

To set a minimum QPS target, use the min_qps parameter. See Scale endpoint throughput with high QPS (Beta).

Setting min_qps provisions additional capacity, which increases the cost of the endpoint. You are charged for this additional capacity regardless of actual query traffic. To stop incurring these charges, reset the endpoint using min_qps=-1. Throughput scaling is best-effort and not guaranteed during Beta.

client.create_endpoint(
    name="vector_search_endpoint_name",
    endpoint_type="STANDARD",
    min_qps=500,  # Beta: minimum QPS target for high-throughput workloads
)

To change the minimum QPS on an existing endpoint, use update_endpoint().

from databricks.vector_search.client import VectorSearchClient, MIN_QPS_RESET_TO_DEFAULT

client = VectorSearchClient()

# Set or update minimum QPS

response = client.update_endpoint(name="vector_search_endpoint_name", min_qps=500)

# Check scaling status

scaling_info = response.get("endpoint", {}).get("scaling_info", {})

print(f"State: {scaling_info.get('state')}") # SCALING_CHANGE_IN_PROGRESS or SCALING_CHANGE_APPLIED

# Remove high QPS configuration and return to default

client.update_endpoint(name="vector_search_endpoint_name", min_qps=MIN_QPS_RESET_TO_DEFAULT)

After updating minimum QPS, sync your indexes to apply the new configuration.
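Because a scaling change can take time to apply, you might want to wait for the SCALING_CHANGE_APPLIED state before syncing. The following is a minimal polling sketch. The function wait_for_scaling_applied and its fetch_response argument are hypothetical: fetch_response is any zero-argument callable that returns a dictionary shaped like the update_endpoint() response shown above. Which SDK call you wrap to obtain a fresh response is an assumption left to you.

```python
import time


def wait_for_scaling_applied(fetch_response, timeout_s=600.0, poll_interval_s=10.0, sleep=time.sleep):
    """Poll until scaling_info.state is SCALING_CHANGE_APPLIED, or raise TimeoutError.

    fetch_response is a hypothetical zero-argument callable returning a dict
    shaped like the update_endpoint() response.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        response = fetch_response()
        state = response.get("endpoint", {}).get("scaling_info", {}).get("state")
        if state == "SCALING_CHANGE_APPLIED":
            return state
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Scaling not applied after {timeout_s}s (last state: {state})")
        sleep(poll_interval_s)
```

The sleep function is injectable so that the loop can be exercised in tests without real delays.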

(Optional) Create and configure an endpoint to serve the embedding model

If you choose to have Databricks compute the embeddings, you can use a pre-configured Foundation Model APIs endpoint or create a model serving endpoint to serve the embedding model of your choice. See Pay-per-token Foundation Model APIs or Create foundation model serving endpoints for instructions. For example notebooks, see Vector search example notebooks.

When you configure an embedding endpoint, Databricks recommends that you remove the default selection of Scale to zero. Serving endpoints can take a couple of minutes to warm up, and the initial query on an index with a scaled-down endpoint might time out.

The vector search index initialization might time out if the embedding endpoint isn't configured appropriately for the dataset. You should only use CPU endpoints for small datasets and tests. For larger datasets, use a GPU endpoint for optimal performance.

Create a vector search index

You can create a vector search index using the UI, the Python SDK, or the REST API. The UI is the simplest approach.

There are two types of indexes:

- Delta Sync Index automatically syncs with a source Delta table, incrementally updating the index as the underlying data in the Delta table changes.

- Direct Vector Access Index supports direct read and write of vectors and metadata. You are responsible for updating this index using the REST API or the Python SDK. This type of index cannot be created using the UI; you must use the REST API or the SDK.

The column name _id is reserved. If your source table has a column named _id, rename it before creating a vector search index.
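A small pre-check can catch the reserved column before index creation fails. The sketch below is illustrative only: plan_reserved_column_rename and the replacement name source_id are hypothetical, and the check assumes an exact, case-sensitive match on _id.

```python
RESERVED_COLUMN = "_id"


def plan_reserved_column_rename(columns, replacement="source_id"):
    """Return a {old_name: new_name} map for the reserved column, or {} if none is needed."""
    if RESERVED_COLUMN not in columns:
        return {}
    if replacement in columns:
        raise ValueError(f"Replacement name {replacement!r} already exists in the table")
    return {RESERVED_COLUMN: replacement}


print(plan_reserved_column_rename(["_id", "text", "text_vector"]))
print(plan_reserved_column_rename(["id", "text", "text_vector"]))
```

The returned map can then drive whatever rename mechanism you use on the source table.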

Create index using the UI

-

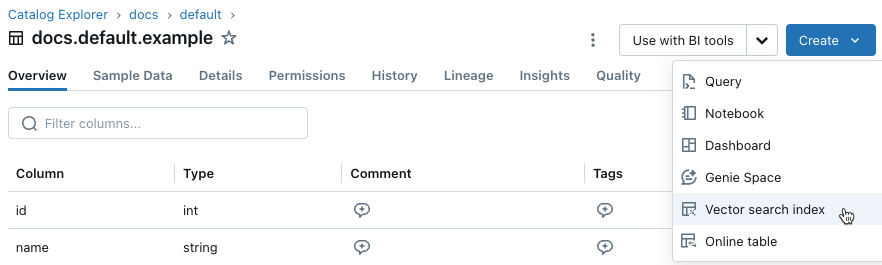

In the left sidebar, click Catalog to open the Catalog Explorer UI.

-

Navigate to the Delta table you want to use.

-

Click the Create button at the upper-right, and select Vector search index from the drop-down menu.

-

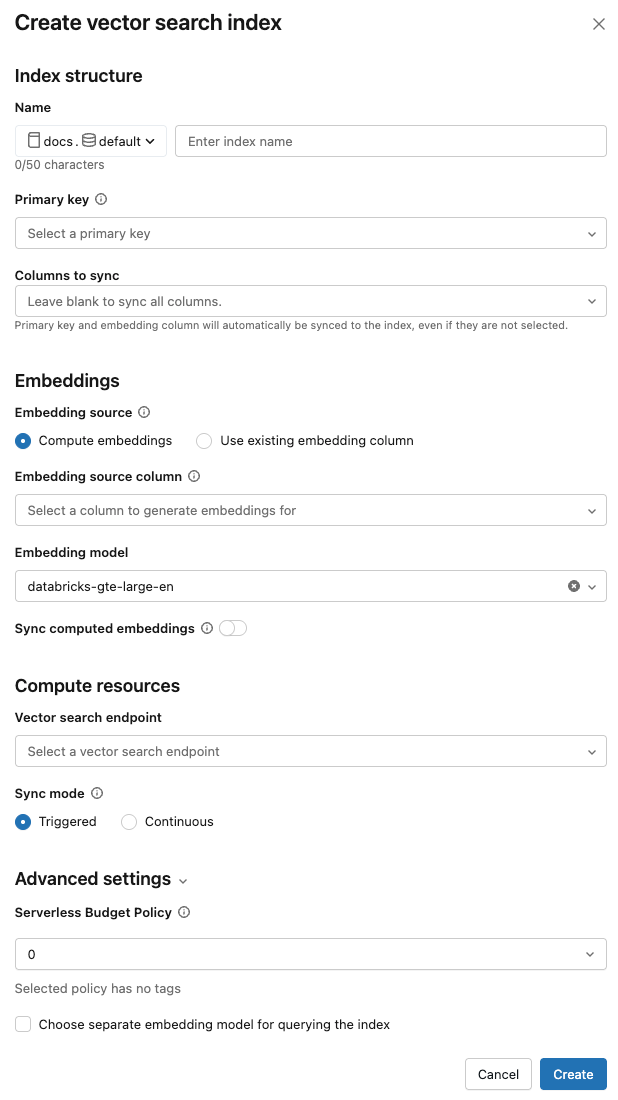

Use the selectors in the dialog to configure the index.

Name: Name to use for the index in Unity Catalog. The name requires a three-level namespace, <catalog>.<schema>.<name>. Only alphanumeric characters and underscores are allowed.

Primary key: Column to use as a primary key.

Columns to sync: Select the columns to sync with the vector index. If you leave this field blank, all columns from the source table are synced with the index. The primary key column and embedding source column or embedding vector column are always synced.

Embedding source: Indicate if you want Databricks to compute embeddings for a text column in the Delta table (Compute embeddings), or if your Delta table contains precomputed embeddings (Use existing embedding column).

  - If you selected Compute embeddings, select the column that you want embeddings computed for and the embedding model to use for the computation. Only text columns are supported.

    - For production applications using standard endpoints, Databricks recommends using the foundation model databricks-gte-large-en with a provisioned throughput serving endpoint.

    - For production applications using storage-optimized endpoints with Databricks-hosted models, use the model name directly (for example, databricks-gte-large-en) as the embedding model endpoint. Storage-optimized endpoints use ai_query with batch inference at ingestion time, providing high throughput for the embedding job. If you prefer to use a provisioned throughput endpoint for querying, specify it in the model_endpoint_name_for_query field when you create the index.

  - If you selected Use existing embedding column, select the column that contains the precomputed embeddings and the embedding dimension. The format of the precomputed embedding column should be array[float]. For storage-optimized endpoints, the embedding dimension must be evenly divisible by 16.

Sync computed embeddings: Toggle this setting to save the generated embeddings to a Unity Catalog table. For more information, see Save generated embedding table.

Vector search endpoint: Select the vector search endpoint to store the index.

Sync mode: Continuous keeps the index in sync with seconds of latency. However, it costs more, because a compute cluster is provisioned to run the continuous sync streaming pipeline.

- For standard endpoints, both Continuous and Triggered perform incremental updates, so only data that has changed since the last sync is processed.

- For storage-optimized endpoints, every sync partially rebuilds the index. For managed indexes on subsequent syncs, any generated embeddings where the source row has not changed are reused and do not need to be recomputed. See Storage-optimized endpoints limitations.

With Triggered sync mode, you use the Python SDK or the REST API to start the sync. See Update a Delta Sync Index.

For storage-optimized endpoints, only Triggered sync mode is supported.

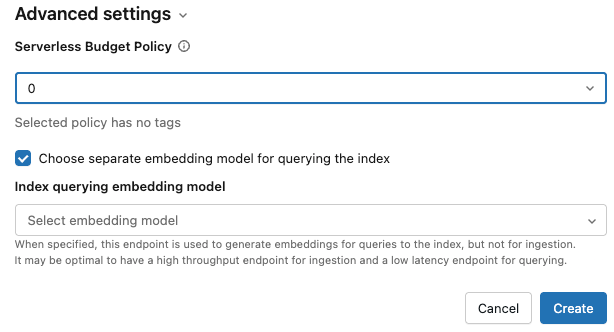

Advanced Settings: (Optional)

  - You can apply a budget policy to the index. See Mosaic AI Vector Search: Budget policies.

  - If you selected Compute embeddings, you can specify a separate embedding model to query your vector search index. This can be useful if you need a high throughput endpoint for ingestion but a lower latency endpoint for querying the index. The model specified in the Embedding model field is always used for ingestion and is also used for querying, unless you specify a different model here. To specify a different model, click Choose separate embedding model for querying the index and select a model from the drop-down menu.

- When you have finished configuring the index, click Create.
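Several of the rules in the steps above can be checked before you submit the form or call the SDK. The following sketch collects three of them: the three-level name with only alphanumeric characters and underscores, Triggered-only sync for storage-optimized endpoints, and the divisible-by-16 embedding dimension for storage-optimized endpoints. The function check_index_settings is hypothetical, it treats "alphanumeric" as ASCII letters and digits (an assumption), and it uses the string values "STORAGE_OPTIMIZED" and "TRIGGERED" that appear in the SDK examples in this article.

```python
import re

# Assumption: "alphanumeric" means ASCII letters and digits.
INDEX_NAME_PATTERN = re.compile(r"[A-Za-z0-9_]+\.[A-Za-z0-9_]+\.[A-Za-z0-9_]+")


def check_index_settings(index_name, endpoint_type, pipeline_type, embedding_dimension=None):
    """Return a list of problems; an empty list means these checks passed."""
    problems = []
    if not INDEX_NAME_PATTERN.fullmatch(index_name):
        problems.append("index name must be <catalog>.<schema>.<name> with only alphanumerics and underscores")
    if endpoint_type == "STORAGE_OPTIMIZED":
        if pipeline_type != "TRIGGERED":
            problems.append("storage-optimized endpoints support only Triggered sync mode")
        if embedding_dimension is not None and embedding_dimension % 16 != 0:
            problems.append("embedding dimension must be evenly divisible by 16")
    return problems


print(check_index_settings("vector_search_demo.vector_search.en_wiki_index", "STORAGE_OPTIMIZED", "TRIGGERED", 1024))
print(check_index_settings("bad-name", "STORAGE_OPTIMIZED", "CONTINUOUS", 1000))
```

These checks are a convenience only. The service remains the source of truth for what is accepted.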

Create index using the Python SDK

The following example creates a Delta Sync Index with embeddings computed by Databricks. For details, see the Python SDK reference.

This example also shows the optional parameter model_endpoint_name_for_query, which specifies a separate embedding model serving endpoint to be used for querying the index.

client = VectorSearchClient()

index = client.create_delta_sync_index(
    endpoint_name="vector_search_demo_endpoint",
    source_table_name="vector_search_demo.vector_search.en_wiki",
    index_name="vector_search_demo.vector_search.en_wiki_index",
    pipeline_type="TRIGGERED",
    primary_key="id",
    embedding_source_column="text",
    # This model is used for ingestion, and is also used for querying unless model_endpoint_name_for_query is specified.
    embedding_model_endpoint_name="e5-small-v2",
    # Optional. If specified, used only for querying the index.
    model_endpoint_name_for_query="e5-mini-v2"
)

The following example creates a Delta Sync Index with self-managed embeddings.

client = VectorSearchClient()

index = client.create_delta_sync_index(
    endpoint_name="vector_search_demo_endpoint",
    source_table_name="vector_search_demo.vector_search.en_wiki",
    index_name="vector_search_demo.vector_search.en_wiki_index",
    pipeline_type="TRIGGERED",
    primary_key="id",
    embedding_dimension=1024,
    embedding_vector_column="text_vector"
)

By default, all columns from the source table are synced with the index. To select a subset of columns to sync, use columns_to_sync. The primary key and embedding columns are always included in the index.

To sync only the primary key and the embedding column, you must specify them in columns_to_sync as shown:

index = client.create_delta_sync_index(

...

columns_to_sync=["id", "text_vector"] # to sync only the primary key and the embedding column

)

To sync additional columns, specify them as shown. You do not need to include the primary key and the embedding column, as they are always synced.

index = client.create_delta_sync_index(

...

columns_to_sync=["revisionId", "text"] # to sync the `revisionId` and `text` columns in addition to the primary key and embedding column.

)
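The columns_to_sync rules described above can be modeled in a few lines. This sketch does not call the SDK. It only mirrors the stated behavior: a blank selection syncs every column, and the primary key and embedding column are always included. The function effective_synced_columns and the title column are hypothetical, and returning columns in source-table order is an illustrative choice.

```python
def effective_synced_columns(source_columns, primary_key, embedding_column, columns_to_sync=None):
    """Return the columns expected in the index, in source-table order."""
    if not columns_to_sync:
        # Blank selection: all source columns are synced.
        return list(source_columns)
    unknown = set(columns_to_sync) - set(source_columns)
    if unknown:
        raise ValueError(f"Columns not found in source table: {sorted(unknown)}")
    # The primary key and embedding column are always synced.
    wanted = set(columns_to_sync) | {primary_key, embedding_column}
    return [column for column in source_columns if column in wanted]


source = ["id", "revisionId", "title", "text", "text_vector"]
print(effective_synced_columns(source, "id", "text_vector", ["revisionId", "text"]))
print(effective_synced_columns(source, "id", "text_vector", ["id", "text_vector"]))
```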

The following example creates a Direct Vector Access Index.

client = VectorSearchClient()

index = client.create_direct_access_index(
    endpoint_name="storage_endpoint",
    index_name=f"{catalog_name}.{schema_name}.{index_name}",
    primary_key="id",
    embedding_dimension=1024,
    embedding_vector_column="text_vector",
    schema={
        "id": "int",
        "field2": "string",
        "field3": "float",
        "text_vector": "array<float>"
    }
)

Create index using the REST API

See the REST API reference documentation: POST /api/2.0/vector-search/indexes.

Save generated embedding table

If Databricks generates the embeddings, you can save the generated embeddings to a table in Unity Catalog. This table is created in the same schema as the vector index and is linked from the vector index page.

The name of the table is the name of the vector search index, appended by _writeback_table. The name is not editable.

You can access and query the table like any other table in Unity Catalog. However, you should not drop or modify the table, as it is not intended to be manually updated. The table is deleted automatically if the index is deleted.
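Because the writeback table name is derived from the index name, you can compute it rather than hard-coding it. The helper below is a hypothetical convenience that assumes the suffix is appended to the fully qualified index name, which follows from the table living in the same schema as the index.

```python
WRITEBACK_SUFFIX = "_writeback_table"


def writeback_table_name(index_name):
    """Return the writeback table name for a vector search index name."""
    return f"{index_name}{WRITEBACK_SUFFIX}"


def is_writeback_table(table_name):
    """Return True if the name looks like a writeback table that should not be modified."""
    return table_name.endswith(WRITEBACK_SUFFIX)


print(writeback_table_name("vector_search_demo.vector_search.en_wiki_index"))
```

A check like is_writeback_table can be used in cleanup scripts to skip tables that should not be dropped or modified manually.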

Update a vector search index

Update a Delta Sync Index

Indexes created with Continuous sync mode automatically update when the source Delta table changes. If you are using Triggered sync mode, you can start the sync using the UI, the Python SDK, or the REST API.

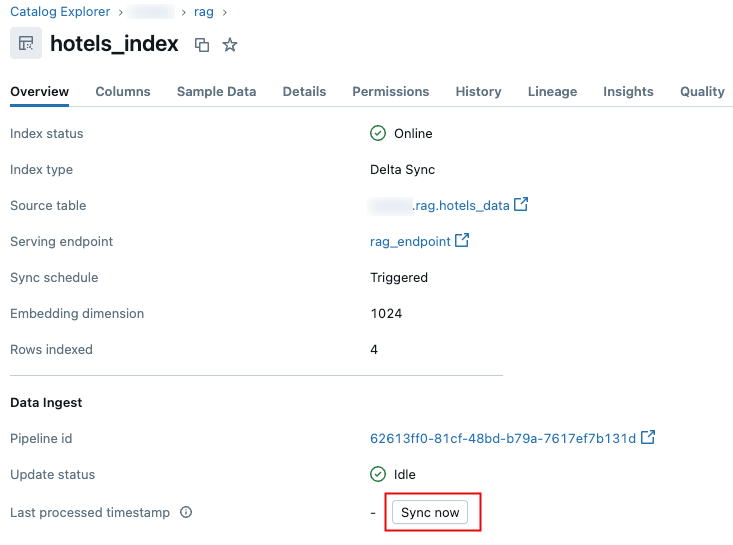

Databricks UI

- In Catalog Explorer, navigate to the vector search index.

- On the Overview tab, in the Data Ingest section, click Sync now.

Python SDK

For details, see the Python SDK reference.

client = VectorSearchClient()

index = client.get_index(index_name="vector_search_demo.vector_search.en_wiki_index")

index.sync()
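If you need to sync several Triggered indexes, for example after changing the minimum QPS on an endpoint, the same two calls can be wrapped in a loop. The sketch below uses only get_index(index_name=...) and sync() as shown above. The function sync_indexes is a hypothetical helper, and collecting errors instead of stopping at the first failure is a design choice, not documented SDK behavior.

```python
def sync_indexes(client, index_names):
    """Trigger a sync for each index and return {index_name: exception or None}."""
    results = {}
    for index_name in index_names:
        try:
            client.get_index(index_name=index_name).sync()
            results[index_name] = None
        except Exception as exc:  # keep going so one failure does not block the rest
            results[index_name] = exc
    return results
```

For example, sync_indexes(client, ["vector_search_demo.vector_search.en_wiki_index"]) returns a dictionary whose values are None for every index whose sync was started without an error.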

REST API

See the REST API reference documentation: POST /api/2.0/vector-search/indexes/{index_name}/sync.

Update a Direct Vector Access Index

You can use the Python SDK or the REST API to insert, update, or delete data from a Direct Vector Access Index.

Python SDK

For details, see the Python SDK reference.

index.upsert([
    {
        "id": 1,
        "field2": "value2",
        "field3": 3.0,
        "text_vector": [1.0] * 1024
    },
    {
        "id": 2,
        "field2": "value2",
        "field3": 3.0,
        "text_vector": [1.1] * 1024
    }
])

REST API

See the REST API reference documentation: POST /api/2.0/vector-search/indexes.

For production applications, Databricks recommends using service principals instead of personal access tokens. Doing so can improve performance by up to 100 ms per query.

The following code example illustrates how to update an index using a service principal.

export SP_CLIENT_ID=...

export SP_CLIENT_SECRET=...

export INDEX_NAME=...

export WORKSPACE_URL=https://...

export WORKSPACE_ID=...

# Set authorization details to generate OAuth token

export AUTHORIZATION_DETAILS='{"type":"unity_catalog_permission","securable_type":"table","securable_object_name":"'"$INDEX_NAME"'","operation": "WriteVectorIndex"}'

# Generate OAuth token

export TOKEN=$(curl -X POST --url $WORKSPACE_URL/oidc/v1/token -u "$SP_CLIENT_ID:$SP_CLIENT_SECRET" --data 'grant_type=client_credentials' --data 'scope=all-apis' --data-urlencode 'authorization_details=['"$AUTHORIZATION_DETAILS"']' | jq -r .access_token)

# Get index URL

export INDEX_URL=$(curl -X GET -H 'Content-Type: application/json' -H "Authorization: Bearer $TOKEN" --url $WORKSPACE_URL/api/2.0/vector-search/indexes/$INDEX_NAME | jq -r '.status.index_url')

# Upsert data into vector search index.

curl -X POST -H 'Content-Type: application/json' -H "Authorization: Bearer $TOKEN" --url https://$INDEX_URL/upsert-data --data '{"inputs_json": "[...]"}'

# Delete data from vector search index

curl -X DELETE -H 'Content-Type: application/json' -H "Authorization: Bearer $TOKEN" --url https://$INDEX_URL/delete-data --data '{"primary_keys": [...]}'
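The nested shell quoting around AUTHORIZATION_DETAILS is easy to get wrong. As an alternative illustration, the following Python sketch builds the same form fields that the token request above sends, using the values shown in the shell example. It only constructs the URL-encoded body and does not contact the workspace. The function build_token_request_form is hypothetical.

```python
import json
from urllib.parse import urlencode


def build_token_request_form(index_name, operation="WriteVectorIndex"):
    """Return the URL-encoded form body for the OAuth token request shown above."""
    authorization_details = [
        {
            "type": "unity_catalog_permission",
            "securable_type": "table",
            "securable_object_name": index_name,
            "operation": operation,
        }
    ]
    return urlencode(
        {
            "grant_type": "client_credentials",
            "scope": "all-apis",
            # The details are sent as a JSON-encoded list in a single form field.
            "authorization_details": json.dumps(authorization_details),
        }
    )


print(build_token_request_form("vector_search_demo.vector_search.en_wiki_index"))
```

Letting json.dumps and urlencode handle escaping avoids the manual quote juggling needed in the shell version.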

The following code example illustrates how to update an index using a personal access token (PAT).

export TOKEN=...

export INDEX_NAME=...

export WORKSPACE_URL=https://...

# Upsert data into vector search index.

curl -X POST -H 'Content-Type: application/json' -H "Authorization: Bearer $TOKEN" --url $WORKSPACE_URL/api/2.0/vector-search/indexes/$INDEX_NAME/upsert-data --data '{"inputs_json": "..."}'

# Delete data from vector search index

curl -X DELETE -H 'Content-Type: application/json' -H "Authorization: Bearer $TOKEN" --url $WORKSPACE_URL/api/2.0/vector-search/indexes/$INDEX_NAME/delete-data --data '{"primary_keys": [...]}'
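In the curl examples above, the inputs_json value is itself a JSON-encoded string, so rows must be serialized twice: once into the string, and once as part of the request body. The following sketch shows one way to build those bodies in Python. The helpers build_upsert_payloads and build_delete_payload are hypothetical, and the batch_size parameter is an illustrative choice rather than a documented limit.

```python
import json


def build_upsert_payloads(rows, batch_size=100):
    """Split rows into batches and return one JSON request body per batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    payloads = []
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        # inputs_json holds the rows as a JSON string, not as a nested array.
        payloads.append(json.dumps({"inputs_json": json.dumps(batch)}))
    return payloads


def build_delete_payload(primary_keys):
    """Return the JSON request body for deleting rows by primary key."""
    return json.dumps({"primary_keys": list(primary_keys)})


rows = [
    {"id": 1, "field2": "value2", "field3": 3.0, "text_vector": [1.0] * 4},
    {"id": 2, "field2": "value2", "field3": 3.0, "text_vector": [1.1] * 4},
    {"id": 3, "field2": "value2", "field3": 3.0, "text_vector": [1.2] * 4},
]
for payload in build_upsert_payloads(rows, batch_size=2):
    print(payload)
print(build_delete_payload([1, 2]))
```

The short four-element vectors keep the printed output readable. Real rows must match the embedding dimension of the index.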

How to make schema changes with no downtime

If the schema of existing columns in the source table changes, you must rebuild the index. If the writeback table is enabled, you must also rebuild the index when new columns are added to the source table. If the writeback table is not enabled, new columns do not require rebuilding the index.

Follow these steps to rebuild and deploy the index with no downtime:

- Perform the schema change on your source table.

- Create a new index.

- After the new index is ready, switch traffic to the new index.

- Delete the original index.
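The ordering of these steps matters: the original index should be deleted only after the new index is ready and traffic has moved. The following sketch encodes that ordering with injected callables so that it stays independent of any particular SDK call. All four callables and the function rebuild_with_no_downtime are hypothetical, and the schema change on the source table is assumed to have been made already.

```python
import time


def rebuild_with_no_downtime(create_new_index, is_ready, switch_traffic, delete_old_index,
                             timeout_s=3600.0, poll_interval_s=30.0, sleep=time.sleep):
    """Create a new index, wait until it is ready, switch traffic, then delete the old index."""
    create_new_index()
    deadline = time.monotonic() + timeout_s
    while not is_ready():
        if time.monotonic() >= deadline:
            # The original index is untouched, so queries keep working.
            raise TimeoutError("New index is not ready; original index left in place")
        sleep(poll_interval_s)
    switch_traffic()
    delete_old_index()
```

If the new index never becomes ready, the function raises before switching traffic, so the original index continues to serve queries.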