Create a training run using the Foundation Model Fine-tuning UI

This feature is in Public Preview in us-east-1 and us-west-2.

This article describes how to create and configure a training run using the UI for Foundation Model Fine-tuning (now part of Mosaic AI Model Training). You can also create a run using the API; for instructions, see Create a training run using the Foundation Model Fine-tuning API.

Requirements

See Requirements.

Create a training run using the UI

Follow these steps to create a training run using the UI.

-

In the left sidebar, click Experiments.

-

On the Foundation Model Fine-tuning card, click Create Mosaic AI Model Experiment.

-

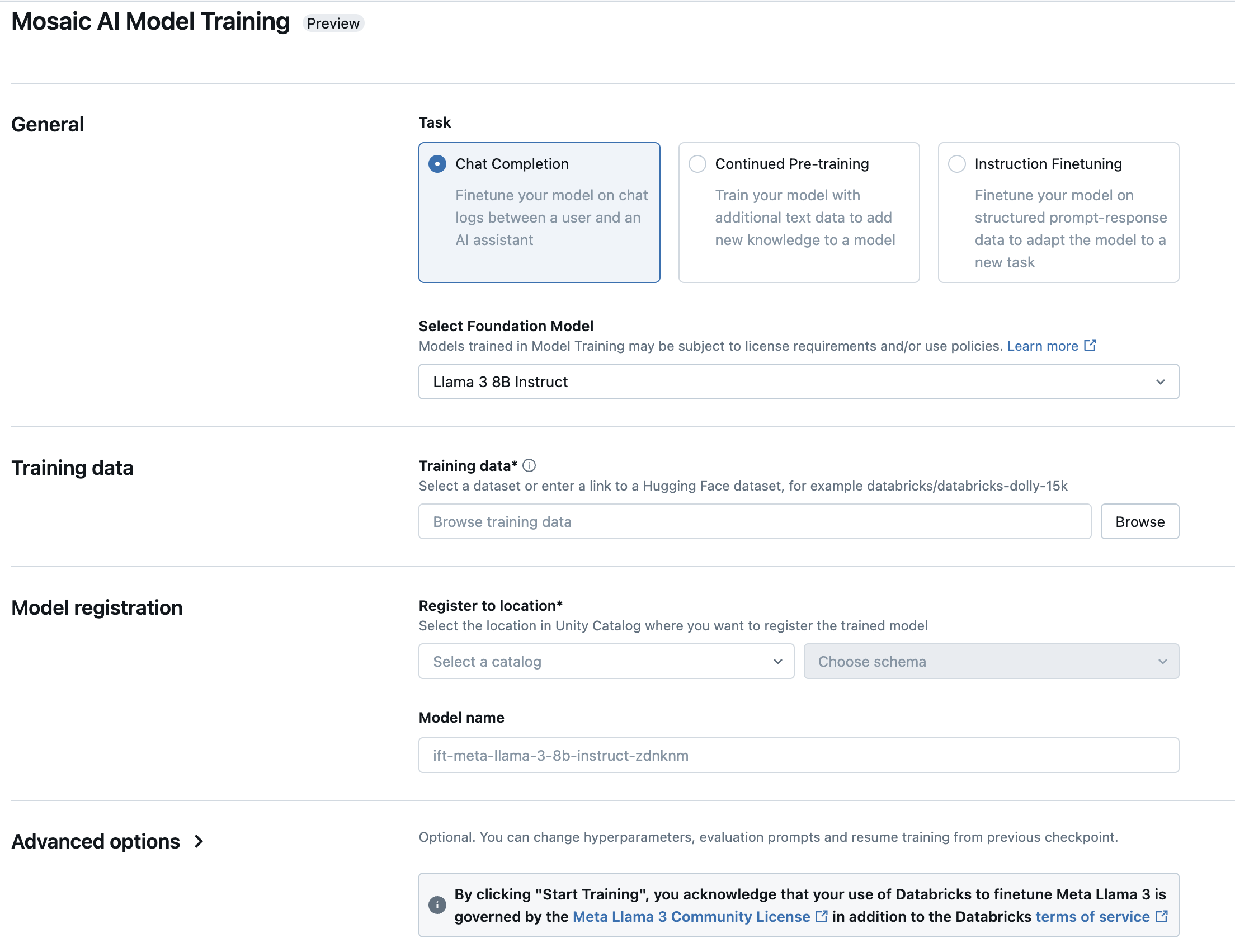

The Foundation Model Fine-tuning form opens. Items marked with an asterisk are required. Make your selections, and then click Start Training.

Type: Select the task to perform.

| Task | Description |
|---|---|
| Instruction fine-tuning | Continue training a foundation model with prompt-and-response input to optimize the model for a specific task. |
| Continued pre-training | Continue training a foundation model to give it domain-specific knowledge. |
| Chat completion | Continue training a foundation model with chat logs to optimize it for Q&A or conversation applications. |
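Each task type expects differently shaped training records. The sketch below is illustrative only: it assumes `prompt`/`response` fields for instruction fine-tuning, a `messages` list of role/content pairs for chat completion, and a single `text` field for continued pre-training. The task keys and field names are hypothetical labels, not values confirmed by the UI or API, so check the data requirements for your task before building a real dataset.

```python
import json

# Hypothetical example records. The field names ("prompt", "response",
# "messages", "role", "content", "text") are assumptions for illustration.
INSTRUCTION_RECORD = {
    "prompt": "List the drug names: The patient took aspirin and ibuprofen.",
    "response": "aspirin, ibuprofen",
}
CHAT_RECORD = {
    "messages": [
        {"role": "user", "content": "What does fine-tuning do?"},
        {"role": "assistant", "content": "It continues training a pretrained model on new data."},
    ]
}
PRETRAINING_RECORD = {"text": "Raw, unlabeled domain text such as an internal manual."}

ALLOWED_ROLES = {"system", "user", "assistant"}  # assumed role names


def validate_record(task: str, record: dict) -> bool:
    """Return True if `record` matches the assumed shape for `task`."""
    if task == "instruction_finetuning":
        return isinstance(record.get("prompt"), str) and isinstance(record.get("response"), str)
    if task == "chat_completion":
        messages = record.get("messages")
        return (
            isinstance(messages, list)
            and len(messages) > 0
            and all(
                isinstance(m, dict)
                and m.get("role") in ALLOWED_ROLES
                and isinstance(m.get("content"), str)
                for m in messages
            )
        )
    if task == "continued_pretraining":
        return isinstance(record.get("text"), str)
    raise ValueError(f"unknown task: {task!r}")


# Print each record as one JSON line together with its validation result.
for task, record in [
    ("instruction_finetuning", INSTRUCTION_RECORD),
    ("chat_completion", CHAT_RECORD),
    ("continued_pretraining", PRETRAINING_RECORD),
]:
    print(task, validate_record(task, record), json.dumps(record))
```

Running a check like this over a dataset before you start training can surface malformed rows early, instead of after a run fails.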

Select foundation model: Select the model to tune or train. For a list of supported models, see Supported models.

Training data: Click Browse to select a table in Unity Catalog, or enter the full URL for a Hugging Face dataset. For data size recommendations, see Recommended data size for model training.

If you select a table in Unity Catalog, you must also select the compute used to read the table.
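The Training data field accepts two kinds of input, and only a Unity Catalog table also requires compute. The helper below is a hypothetical sketch of that distinction: it assumes Hugging Face dataset URLs start with `https://huggingface.co/datasets/` and that a table is given as a simple `catalog.schema.table` name. Real Unity Catalog names can be more varied (for example, quoted identifiers), so treat the pattern as a simplification.

```python
import re

# Assumed prefix for Hugging Face dataset URLs (illustrative).
HF_PREFIX = "https://huggingface.co/datasets/"
# Simplified three-level Unity Catalog table name: catalog.schema.table.
UC_TABLE = re.compile(r"^\w+\.\w+\.\w+$")


def classify_training_data(source: str) -> tuple[str, bool]:
    """Return (source_kind, needs_compute) for a Training data entry."""
    source = source.strip()
    if source.startswith(HF_PREFIX) and len(source) > len(HF_PREFIX):
        return "hugging_face", False
    if UC_TABLE.match(source):
        # Reading a Unity Catalog table also requires selecting compute.
        return "unity_catalog_table", True
    raise ValueError(f"not a table name or Hugging Face dataset URL: {source!r}")


print(classify_training_data("main.ml_data.ner_train"))
print(classify_training_data("https://huggingface.co/datasets/example-org/example-dataset"))
```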

Register to location: Select the Unity Catalog catalog and schema from the drop-down menus. The trained model is saved to this location.

Model name: The model is saved with this name in the catalog and schema you specified. The field is prefilled with a default name, which you can change.
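Together, Register to location and Model name determine the model's three-level Unity Catalog name. A minimal sketch, assuming only that the catalog, schema, and model name are joined with dots; the helper name and the example values are hypothetical:

```python
def registered_model_name(catalog: str, schema: str, model_name: str) -> str:
    """Combine the Register to location and Model name fields into a
    three-level Unity Catalog name: <catalog>.<schema>.<model>."""
    for part in (catalog, schema, model_name):
        # Simplified check (assumption): each component is non-empty and has no dots.
        if not part or "." in part:
            raise ValueError(f"invalid name component: {part!r}")
    return f"{catalog}.{schema}.{model_name}"


print(registered_model_name("main", "ml_models", "ner_model"))
```

With these example values, the trained model would be found in Unity Catalog as `main.ml_models.ner_model`.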

Advanced options: For more customization, you can configure optional settings for evaluation and hyperparameter tuning, or train from an existing proprietary model.

| Setting | Description |
|---|---|
| Training duration | Duration of the training run, specified in epochs (for example, `10ep`) or tokens (for example, `1000000tok`). Default is `1ep`. |
| Learning rate | The learning rate for model training. All models are trained using the AdamW optimizer with learning rate warmup. The default learning rate may vary per model. To get the highest-quality models, we suggest running a hyperparameter sweep over different learning rates and training durations. |
| Context length | The maximum sequence length of a data sample. Data samples longer than this setting are truncated. The default depends on the selected model. |
| Evaluation data | Click Browse to select a table in Unity Catalog, or enter the full URL for a Hugging Face dataset. If you leave this field blank, no evaluation is performed. |
| Model evaluation prompts | Enter optional prompts to use to evaluate the model. |
| Experiment name | By default, a new, automatically generated name is assigned to each run. You can optionally enter a custom name or select an existing experiment from the drop-down list. |
| Custom weights | By default, training begins with the original weights of the selected model. To start from a checkpoint produced by the fine-tuning API, enter the path to the MLflow artifact folder that contains the checkpoint. |

NOTE: If you trained a model before 3/26/2025, you can no longer continue training from those model checkpoints. Models from previously completed training runs can still be served with provisioned throughput.
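The training duration format and the suggested sweep over learning rates and training durations can be sketched as follows. This is a hypothetical helper, not part of the UI or API: it accepts only whole numbers followed by `ep` or `tok`, the dictionary keys simply mirror the form's field labels, and the learning rates shown are placeholder values, not recommended defaults. Each settings pair would correspond to one training run that you create through the form.

```python
import itertools
import re

# Duration format from the table: an integer followed by "ep" (epochs)
# or "tok" (tokens), for example "10ep" or "1000000tok".
# Accepting only whole numbers is an assumption of this sketch.
_DURATION = re.compile(r"^(\d+)(ep|tok)$")


def parse_duration(value: str) -> tuple[int, str]:
    """Split a training duration such as '10ep' into (10, 'ep')."""
    match = _DURATION.match(value.strip())
    if match is None:
        raise ValueError(f"expected <int>ep or <int>tok, got {value!r}")
    amount, unit = int(match.group(1)), match.group(2)
    if amount <= 0:
        raise ValueError("training duration must be positive")
    return amount, unit


def sweep_grid(learning_rates, durations):
    """Return one settings dict per (learning rate, duration) pair.

    The keys are hypothetical labels mirroring the UI fields, not API names.
    """
    for duration in durations:
        parse_duration(duration)  # fail fast on malformed durations
    return [
        {"learning_rate": lr, "training_duration": duration}
        for lr, duration in itertools.product(learning_rates, durations)
    ]


# Placeholder values: two learning rates x two durations = four runs.
runs = sweep_grid([1e-5, 5e-6], ["1ep", "3ep"])
for settings in runs:
    print(settings)
```

Because the grid grows multiplicatively, a small sweep (a few learning rates and durations) keeps the number of runs manageable while still showing which settings produce the best model.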

Next steps

After your training run is complete, you can review metrics in MLflow and deploy your model for inference. See steps 5 through 7 of Tutorial: Create and deploy a Foundation Model Fine-tuning run.

See the Instruction fine-tuning: Named Entity Recognition demo notebook for an instruction fine-tuning example that walks through data preparation, training run configuration, and deployment.