Configure the serverless environment

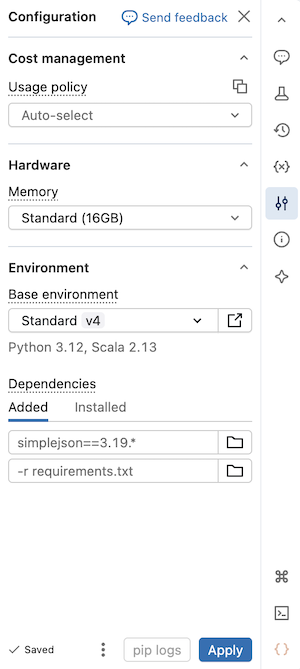

This article explains how to use a serverless notebook's Environment side panel to configure dependencies, serverless usage policies, memory, and base environment. This panel provides a single place to manage the notebook's serverless settings. Settings configured in this panel only apply when the notebook is connected to serverless compute.

To expand the Environment side panel, click on the button to the right of the notebook.

Use serverless GPU compute

Serverless GPU compute is in Beta.

Use the following steps to use serverless GPU compute on your Databricks notebook:

- From a notebook, click the Connect drop-down menu at the top and select Serverless GPU.

- Open the Environment side panel.

- Select A10 or H100 from the Accelerator field.

- Under Base environment, select Standard for the default environment or AI for the AI-optimized environment with pre-installed machine learning libraries.

- Click Apply, then click Confirm to apply serverless GPU compute to your notebook environment.

For more details, see Serverless GPU compute.

Use high memory serverless compute

This feature is in Public Preview.

If you run into out-of-memory errors in your notebook, you can configure the notebook to use a higher memory size. This setting increases the size of the REPL memory used when running code in the notebook. It does not affect the memory size of the Spark session. Serverless usage with high memory has a higher DBU emission rate than standard memory.

- In the notebook UI, click the Environment side panel.

- Under Memory, select High memory.

- Click Apply.

This setting also applies to notebook job tasks, which run using the notebook's memory preferences. Updating the memory preference in the notebook affects the next job run.

Select a serverless usage policy

This feature is in Public Preview.

Serverless usage policies allow your organization to apply custom tags on serverless usage for granular billing attribution.

If your workspace uses serverless usage policies to attribute serverless usage, you can select the serverless usage policy you want to apply to the notebook. If a user is assigned to only one serverless usage policy, that policy is selected by default.

You can select the serverless usage policy after your notebook is connected to serverless compute by using the Environment side panel:

- In the notebook UI, click the Environment side panel.

- Under Usage policy, select the serverless usage policy you want to apply to your notebook.

- Click Apply.

When this setup is complete, all notebook usage inherits the serverless usage policy's custom tags.

If your notebook originates from a Git repository or does not have an assigned serverless usage policy, it defaults to your last chosen serverless usage policy when it is next attached to serverless compute.

Select a base environment

A base environment determines the pre-installed libraries and environment version available for your serverless notebook. The Base environment selector in the Environment side panel provides a unified interface for selecting your environment. To see details on each environment version, see Serverless environment versions. Databricks recommends using the latest version to get the most up-to-date notebook features.

The Base environment selector includes the following options:

- Standard: The default base environment with Databricks-provided libraries.

- AI: An AI-optimized base environment with pre-installed machine learning libraries. This option appears only when an accelerator (GPU) is selected.

- More: Expands to show additional options:

  - Previous versions of Standard and AI environments.

  - Custom: Allows you to specify a custom environment using a YAML file.

- Workspace environments: Lists all compatible base environments configured for your workspace by an administrator.

To select a base environment:

- In the notebook UI, click the Environment side panel.

- Under Base environment, select an environment from the dropdown menu.

- Click Apply.

Add dependencies to the notebook

Because serverless does not support compute policies or init scripts, you must add custom dependencies using the Environment side panel. You can add dependencies individually or use a shareable base environment to install multiple dependencies.

To individually add a dependency:

- In the notebook UI, click the Environment side panel.

- In the Dependencies section, click Add Dependency and enter the path of the dependency in the field. You can specify a dependency in any format that is valid in a requirements.txt file. Python wheel files or Python projects (for example, the directory containing a pyproject.toml or a setup.py) can be located in workspace files or Unity Catalog volumes.

  - If using a workspace file, the path should be absolute and start with /Workspace/.

  - If using a file in a Unity Catalog volume, the path should be in the following format: /Volumes/<catalog>/<schema>/<volume>/<path>.whl.

- Click Apply. This installs the dependencies in the notebook virtual environment and restarts the Python process.
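The path rules in the steps above can be sketched as a small pre-check that you run before pasting a path into the Dependencies field. This is a minimal sketch, not a Databricks API: `check_dependency_path` is a hypothetical helper, and it assumes that any value not starting with `/` is an ordinary requirements.txt-style specifier.

```python
import re

# Pattern taken from the volume path format described above:
# /Volumes/<catalog>/<schema>/<volume>/<path>.whl
_VOLUME_WHEEL = re.compile(r"^/Volumes/[^/]+/[^/]+/[^/]+/.+\.whl$")


def check_dependency_path(path: str) -> str:
    """Classify a dependency entry (hypothetical helper, not a Databricks API)."""
    if path.startswith("/Workspace/"):
        # Workspace files must use an absolute path under /Workspace/.
        return "workspace file"
    if path.startswith("/Volumes/"):
        if _VOLUME_WHEEL.match(path):
            return "volume wheel"
        raise ValueError(f"Volume path does not match the expected format: {path}")
    if path.startswith("/"):
        raise ValueError(f"Absolute paths should start with /Workspace/ or /Volumes/: {path}")
    # Assumption: anything else is a requirements.txt-style specifier.
    return "requirement specifier"


print(check_dependency_path("/Workspace/helper_utils"))
print(check_dependency_path("/Volumes/main/default/libs/pkg-0.1.0-py3-none-any.whl"))
```

The catalog, schema, volume, and wheel names in the usage lines are placeholders chosen for illustration.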

Do not install PySpark or any library that installs PySpark as a dependency on your serverless notebooks. Doing so will stop your session and result in an error. If this occurs, remove the library and reset your environment.

To view installed dependencies, click the Installed tab in the Environment side panel. The pip installation logs for the notebook environment are also available by clicking pip logs at the bottom of the panel.
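If you prefer to check installed packages from a notebook cell rather than the Installed tab, the following sketch lists them with Python's standard `importlib.metadata` module. It reports whatever is installed in the Python environment the cell runs in; `installed_packages` is a hypothetical helper name, and the sketch assumes this environment is the notebook virtual environment.

```python
from importlib.metadata import distributions


def installed_packages() -> dict[str, str]:
    """Return {package name: version} for the current Python environment."""
    return {
        dist.metadata["Name"]: dist.version
        for dist in distributions()
        if dist.metadata["Name"]  # skip entries with missing metadata
    }


# Print the packages in a pip-freeze-like format.
for name, version in sorted(installed_packages().items()):
    print(f"{name}=={version}")
```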

Create a custom environment specification

You can create and reuse custom environment specifications.

- In a serverless notebook, select an environment version and add any dependencies you want to install.

- Click the kebab menu icon at the bottom of the environment panel, then click Export environment.

- Save the specification as a Workspace file or in a Unity Catalog volume.

To use your custom environment specification in a notebook, select Custom from the Base environment dropdown menu, then use the folder icon to select your YAML file.
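To give a sense of what such a YAML specification might contain, the sketch below renders one from Python. The key names `environment_version` and `dependencies`, the version value, and the helper `render_environment_spec` are assumptions for illustration; compare the output with a file produced by Export environment before relying on it.

```python
def render_environment_spec(environment_version: str, dependencies: list[str]) -> str:
    """Render a minimal YAML environment spec (assumed layout, see lead-in)."""
    lines = [f'environment_version: "{environment_version}"', "dependencies:"]
    # Each dependency becomes one YAML list item, in requirements.txt style.
    lines += [f"  - {dep}" for dep in dependencies]
    return "\n".join(lines) + "\n"


spec = render_environment_spec(
    "2",  # placeholder version, pick the one your workspace offers
    ["/Workspace/helper_utils", "requests==2.31.0"],
)
print(spec)
```

You would then save the printed text as a workspace file or in a Unity Catalog volume and select it through the Custom option.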

Create common utilities to share across your workspace

The following example shows you how to store a common utility in a workspace file and add it as a dependency in your serverless notebook:

- Create a folder with the following structure. Verify that consumers of your project have appropriate access to the file path:

  ```
  helper_utils/
  ├── helpers/
  │   └── __init__.py  # your common functions live here
  ├── pyproject.toml
  ```

- Populate pyproject.toml like this:

  ```toml
  [project]
  name = "common_utils"
  version = "0.1.0"
  ```

- Add a function to the __init__.py file. For example:

  ```python
  def greet(name: str) -> str:
      return f"Hello, {name}!"
  ```

- In the notebook UI, click the Environment side panel.

- In the Dependencies section, click Add Dependency, then enter the path of your util file. For example: /Workspace/helper_utils.

- Click Apply.

You can now use the function in your notebook:

```python
from helpers import greet

print(greet('world'))
```

This outputs:

```
Hello, world!
```

Reset the environment dependencies

If your notebook is connected to serverless compute, Databricks automatically caches the content of the notebook's virtual environment. This means you generally do not need to reinstall the Python dependencies specified in the Environment side panel when you open an existing notebook, even if it has been disconnected due to inactivity.

Python virtual environment caching also applies to jobs. When a job is run, any task of the job that shares the same set of dependencies as a completed task in that run is faster, as required dependencies are already available.

If you change the implementation of a custom Python package used in a job on serverless, you must also update its version number so that jobs can pick up the latest implementation.
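One way to make the version update hard to forget is to script it. The following is a minimal sketch that increments the patch number in a pyproject.toml string; `bump_patch_version` is a hypothetical helper, and it assumes the version is written as a plain `version = "X.Y.Z"` line.

```python
import re

# Matches a line such as: version = "0.1.0"
_VERSION_LINE = re.compile(r'^(version\s*=\s*")(\d+)\.(\d+)\.(\d+)(")', re.MULTILINE)


def bump_patch_version(pyproject_text: str) -> str:
    """Increment the patch number of the first version line found."""

    def _bump(match: re.Match) -> str:
        major, minor, patch = match.group(2), match.group(3), int(match.group(4))
        return f"{match.group(1)}{major}.{minor}.{patch + 1}{match.group(5)}"

    new_text, count = _VERSION_LINE.subn(_bump, pyproject_text, count=1)
    if count == 0:
        raise ValueError("No version line of the form version = \"X.Y.Z\" found")
    return new_text


example = '[project]\nname = "common_utils"\nversion = "0.1.0"\n'
print(bump_patch_version(example))
```

Run against the example pyproject.toml from the common utilities section, this changes the version line from 0.1.0 to 0.1.1 and leaves the rest of the file untouched.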

To clear the environment cache and perform a fresh install of the dependencies specified in the Environment side panel of a notebook attached to serverless compute, click the arrow next to Apply and then click Reset to defaults.

If you install packages that break or change the core notebook or Apache Spark environment, remove the offending packages and then reset the environment. Starting a new session does not clear the entire environment cache.

Configure default Python package repositories

Workspace admins can configure private or authenticated package repositories within workspaces as the default pip configuration for both serverless notebooks and serverless jobs. This allows users to install packages from internal Python repositories without explicitly defining index-url or extra-index-url.

For instructions, workspace admins can reference Configure default Python package repositories.

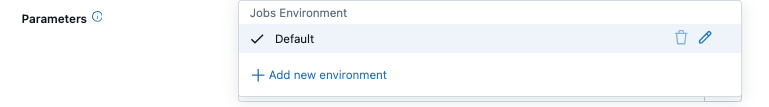

Configure environment for job tasks

For job task types such as notebook, Python script, Python wheel, JAR, or dbt tasks, the library dependencies are inherited from the serverless environment version. To view the list of installed libraries, see the Installed Python libraries or Installed Java and Scala libraries section of the environment version you are using. If a task requires a library that is not installed, you can install the library from workspace files, Unity Catalog volumes, or public package repositories.

For notebook tasks where the notebook already has an environment configured, you can run the task using the notebook's environment or override it by selecting a job-level environment instead.

Using serverless compute for JAR tasks is in Beta.

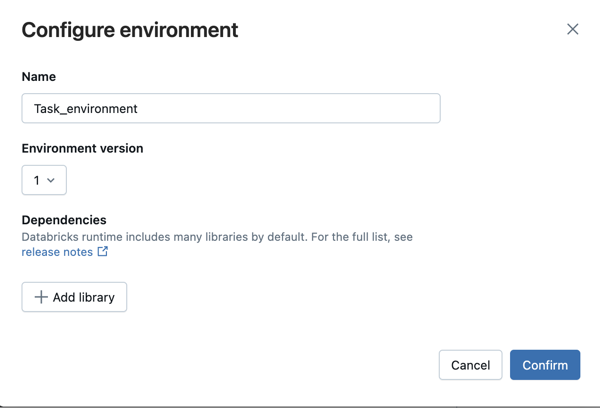

To add a library when you create or edit a job task:

- In the Environment and Libraries dropdown menu, click the icon next to the Default environment or click + Add new environment.

- Select the environment version from the Environment version drop-down. See Serverless environment versions. Databricks recommends picking the latest version to get the most up-to-date features.

- In the Configure environment dialog, click + Add library.

- Select the type of dependency from the dropdown menu under Libraries.

- In the File Path text box, enter the path to the library.

  - For a Python Wheel in a workspace file, the path should be absolute and start with /Workspace/.

  - For a Python Wheel in a Unity Catalog volume, the path should be /Volumes/<catalog>/<schema>/<volume>/<path>.whl.

  - For a requirements.txt file, select PyPI and enter -r /path/to/requirements.txt.

- Click Confirm or + Add library to add another library.

- If you're adding a task, click Create task. If you're editing a task, click Save task.
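If you define jobs programmatically rather than through the UI, the same environment settings can be expressed as data. The sketch below builds one environment entry as a Python dictionary. The field names (`environments`, `environment_key`, `spec`, `environment_version`, `dependencies`) are assumptions modeled on the Jobs API and should be checked against the current API reference; `build_job_environment`, the version value, and the paths are hypothetical.

```python
import json


def build_job_environment(environment_key: str,
                          environment_version: str,
                          dependencies: list[str]) -> dict:
    """Build one job environment entry (assumed field names, see lead-in)."""
    return {
        "environment_key": environment_key,
        "spec": {
            "environment_version": environment_version,
            # Same formats as the File Path box: wheel paths or "-r <file>".
            "dependencies": list(dependencies),
        },
    }


env = build_job_environment(
    "default",
    "2",  # placeholder version
    ["/Workspace/helper_utils", "-r /Workspace/path/to/requirements.txt"],
)
print(json.dumps({"environments": [env]}, indent=2))
```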

Base environments for job tasks

Serverless jobs support custom base environments defined with YAML files for Python, Python wheel, and notebook tasks. For notebook tasks, you can either select a custom base environment in the job’s environment configuration or use the notebook’s own environment settings, which support both workspace environments and custom base environments. In all cases, only the dependencies required for the task are installed at runtime.