Upgrade Hive tables and views to Unity Catalog

This article describes how to upgrade tables and views registered in your existing workspace-local Hive metastore to Unity Catalog.

As an alternative to the table migration processes described in this article, you can use Hive metastore federation to create a catalog in Unity Catalog that mirrors your Hive metastore. See Hive metastore federation: enable Unity Catalog to govern tables registered in a Hive metastore.

You can upgrade a Hive table either to a managed table or external table in Unity Catalog.

-

Managed tables are the preferred way to create tables in Unity Catalog. Unity Catalog fully manages their lifecycle, file layout, and storage. Unity Catalog also optimizes their performance automatically. Managed tables always use the Delta table format.

Managed tables reside in a managed storage location that you reserve for Unity Catalog. Because of this storage requirement, you must use CLONE or CREATE TABLE AS SELECT (CTAS) if you want to copy existing Hive tables to Unity Catalog as managed tables.

-

External tables are tables whose data lifecycle, file layout, and storage location are not managed by Unity Catalog. Multiple data formats are supported for external tables.

Typically you use external tables only when you also need direct access to data using non-Databricks compute (that is, not using Databricks clusters or Databricks SQL warehouses). External tables are also convenient in migration scenarios, because you can register existing data in Unity Catalog quickly without having to that copy data. This is thanks to the fact that data in external tables doesn't have to reside in reserved managed storage.

For more information about managed and external tables in Unity Catalog, see Databricks tables.

Hive to Unity Catalog migration options

When you are ready to migrate Hive tables to Unity Catalog, you have several options, depending on your use case:

Migration tool | Description | Hive table requirements | Unity Catalog table created | Why should I use it? |

|---|---|---|---|---|

A comprehensive set of command-line utilities and other tools that assess your workspace's readiness for Unity Catalog migration and perform workflows that migrate identities, permissions, storage locations, and tables to Unity Catalog. UCX is available on GitHub at databrickslabs/ucx. | Managed or external Hive tables | Managed or external | You want a comprehensive workspace upgrade planning tool that goes beyond upgrading Hive tables to Unity Catalog. You want to upgrade workspaces that have large amounts of data in the Hive metastore. You are comfortable running scripts. If you want to perform a bulk upgrade of Hive tables to Unity Catalog managed tables, this is your only option. UCX, like all Databricks Labs projects, is a public GitHub repo and not supported directly by Databricks. | |

A Catalog Explorer feature that enables you to bulk-copy entire schemas (databases) and multiple managed and external tables from your Hive metastore to the Unity Catalog metastore as external tables. The upgrade wizard performs the | Managed or external Hive tables | External only | You want to quickly upgrade your Hive tables to external tables in Unity Catalog, and you prefer a visual interface. The ability to schedule regular syncs when the source Hive table changes makes it a useful tool for managing a “hybrid” Hive and Unity Catalog workspace during the transition to Unity Catalog. | |

| Managed or external Hive tables | External only | You want to quickly upgrade your Hive tables to external tables in Unity Catalog, and you prefer to use SQL commands rather than a visual interface. Scheduling regular Because you cannot use | |

Deep clones copy source table data and metadata to the clone target. | Managed Hive tables that are in Delta format. | Managed only | You want to migrate Hive managed tables to Unity Catalog managed tables to take full advantage of Unity Catalog data governance, and your Hive tables meet the criteria listed in the “Hive table requirements” cell. If your Hive tables do not meet the “Hive table requirements”, you can use the CREATE TABLE AS SELECT SQL command to upgrade a Hive table to a Unity Catalog managed table. However |

This article describes how to perform all but the UCX-driven upgrade process. Databricks recommends UCX for most workspace upgrade scenarios. However, for simpler use cases, you might prefer one or more of the tools described here.

Before you begin

This section describes some of the impacts of migration that you should be prepared for, along with permissions and compute requirements.

Understand the impact

You should be aware that when you modify your workloads to use the new Unity Catalog tables, you might need to change some behaviors:

- Unity Catalog manages partitions differently than Hive. Hive commands that directly manipulate partitions are not supported on tables managed by Unity Catalog.

- Table history is not migrated when you run

CREATE TABLE CLONE. Any tables in the Hive metastore that you clone to Unity Catalog are treated as new tables. You cannot perform Delta Lake time travel or other operations that rely on pre-migration history.

For more information, see Work with the legacy Hive metastore alongside Unity Catalog.

Requirements

To perform migrations, you must have:

-

A workspace that that has a Unity Catalog metastore and at least one Unity Catalog catalog. See Get started with Unity Catalog.

-

Privileges on the Unity Catalog catalogs to which you are migrating tables. These privilege requirements are enumerated at the start of each procedure covered in this article.

-

For migration to Unity Catalog external tables: storage credentials and external locations defined in Unity Catalog, and the

CREATE EXTERNAL TABLEprivilege on the external location. -

Access to Databricks compute that meets both of the following requirements:

- Supports Unity Catalog (SQL warehouses or compute resources that use standard or dedicated access mode).

- Allows access to the tables in the Hive metastore.

Because compute resources that use standard access mode are enabled for legacy table access control by default, that means that if you use that access mode, you must have table access control privileges on the Hive metastore that you are migrating from. You can grant yourself access using the following SQL command:

SQLGRANT ALL PRIVILEGES ON catalog hive_metastore TO `<user>`Alternatively, you can use a compute resource in dedicated access mode.

noteFor demos of updating compute for Unity Catalog, see:

For more information about managing privileges on objects in the Hive metastore, see Hive metastore privileges and securable objects (legacy). For more information about managing privileges on objects in the Unity Catalog metastore, see Manage privileges in Unity Catalog.

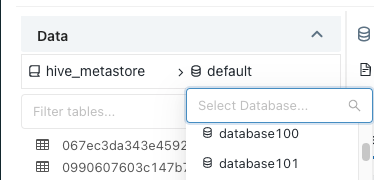

Identify tables that are managed by the Hive metastore

To determine whether a table is currently registered in Unity Catalog, check the catalog name. Tables in the catalog hive_metastore are registered in the workspace-local Hive metastore. Any other catalogs listed are governed by Unity Catalog.

To view the tables in the hive_metastore catalog using Catalog Explorer:

- Click

Catalog in the sidebar.

- In the catalog pane, browse to the

hive_metastorecatalog and expand the schema nodes.

You can also search for a specific table using the filter field in the Catalog pane.

Upgrade a schema or tables from the Hive metastore to Unity Catalog external tables using the upgrade wizard

You can copy complete schemas (databases) and multiple external or managed tables from your Databricks default Hive metastore to the Unity Catalog metastore using the Catalog Explorer upgrade wizard. The upgraded tables will be external tables in Unity Catalog.

For help deciding when to use the upgrade wizard, see Hive to Unity Catalog migration options.

Requirements

Data format requirements:

Compute requirements:

- A compute resource that supports Unity Catalog. See Before you begin.

Unity Catalog object and permission requirements:

- A storage credential for an IAM role that authorizes Unity Catalog to access the tables' location path.

- An external location that references the storage credential you just created and the path to the data on your cloud tenant.

CREATE EXTERNAL TABLEpermission on the external locations of the tables to be upgraded.

Hive table access requirements:

- If your compute uses standard access mode, you need access to the tables in the Hive metastore, granted using legacy table access control. See Before you begin.

Upgrade process

-

Click

Catalog in the sidebar to open the Catalog Explorer.

-

Select

hive_metastoreas your catalog and select the schema (database) that you want to upgrade.

-

Click Upgrade at the top right of the schema detail view.

-

Select all of the tables that you want to upgrade and click Next.

Only external tables in formats supported by Unity Catalog can be upgraded using the upgrade wizard. See Work with external tables.

-

Set the destination catalog, schema (database), and owner for each table.

Users will be able to access the newly created table in the context of their privileges on the catalog and schema.

Table owners have all privileges on the table, including

SELECTandMODIFY. If you don't select an owner, the managed tables are created with you as the owner. Databricks generally recommends that you grant table ownership to groups. To learn more about object ownership in Unity Catalog, see Manage Unity Catalog object ownership.To assign the same catalog and schema to multiple tables, select the tables and click the Set destination button.

To assign the same owner to multiple tables, select the tables and click the Set owner button.

-

Review the table configurations. To modify them, click the Previous button.

-

Click Run upgrade to run the upgrade immediately or click Create notebook for upgrade.

If you create a notebook for upgrade, name your notebook and choose a path to save it. Then run the notebook.

When the upgrade is done, each table's metadata has been copied from Hive metastore to Unity Catalog. These tables are marked as upgraded in the upgrade wizard.

-

Define fine-grained access control using the Permissions tab of each new table.

-

(Optional) Add comments to each upgraded Hive table that points users to the new Unity Catalog table.

Return to the original table in the

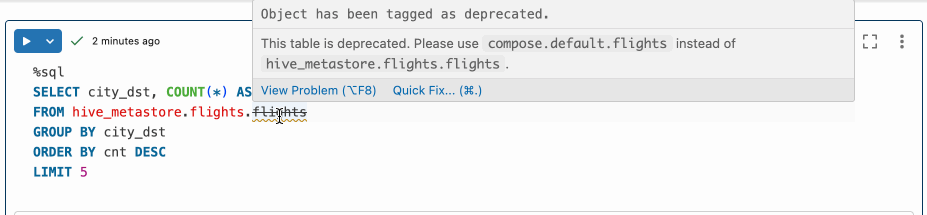

hive.metastorecatalog to add the table comment.If you use the following syntax in the table comment, notebooks and SQL query editor queries that reference the deprecated Hive table will display the deprecated table name using strikethrough text, display the comment as a warning, and provide a Quick Fix link to Databricks Assistant, which can update your code to reference the new table.

This table is deprecated. Please use catalog.default.table instead of hive_metastore.schema.table.See Add comments to indicate that a Hive table has been migrated.

-

Modify your workloads to use the new tables.

If you added a comment to the original Hive table like the one listed in the optional previous step, you can use the Quick Fix link and Databricks Assistant to help you find and modify workloads.

noteIf you no longer need the old tables, you can drop them from the Hive metastore. Dropping an external table does not modify the data files on your cloud tenant.

Upgrade a Hive table to a Unity Catalog external table using SYNC

You can use the SYNC SQL command to copy external tables in your Hive metastore to external tables in Unity Catalog. You can sync individual tables or entire schemas.

You can also use SYNC to copy Hive managed tables that are stored outside of Databricks workspace storage (sometimes called DBFS root) to external tables in Unity Catalog. You cannot use it to copy Hive managed tables stored in workspace storage. To copy those tables, use CREATE TABLE CLONE instead.

The SYNC command performs a write operation to each source table it upgrades to add additional table properties for bookkeeping, including a record of the target Unity Catalog external table.

SYNC can also be used to update existing Unity Catalog tables when the source tables in the Hive metastore are changed. This makes it a good tool for transitioning to Unity Catalog gradually.

- For a demo of using

SYNCto upgrade external tables, see Unity Catalog - External Table on HMS. - For a demo of using

SYNCto upgrade managed tables, see Unity Catalog - Managed Table on HMS.

For details, see SYNC. For help deciding when to use the upgrade wizard, see Hive to Unity Catalog migration options.

Requirements

Data format requirements:

Compute requirements:

- A compute resource that supports Unity Catalog. See Before you begin.

Unity Catalog object and permission requirements:

- A storage credential for an IAM role that authorizes Unity Catalog to access the tables' location path.

- An external location that references the storage credential you just created and the path to the data on your cloud tenant.

CREATE EXTERNAL TABLEpermission on the external locations of the tables to be upgraded.

Hive table access requirements:

- If your compute uses standard access mode, you need access to the tables in the Hive metastore, granted using legacy table access control. See Before you begin.

Upgrade process

To upgrade tables in your Hive metastore to Unity Catalog external tables using SYNC:

-

In a notebook or the SQL query editor, run one of the following:

Sync an external Hive table:

SQLSYNC TABLE <uc-catalog>.<uc-schema>.<new-table> FROM hive_metastore.<source-schema>.<source-table>

SET OWNER <principal>;Sync an external Hive schema and all of its tables:

SQLSYNC SCHEMA <uc-catalog>.<new-schema> FROM hive_metastore.<source-schema>

SET OWNER <principal>;Sync a managed Hive table that is stored outside of Databricks workspace storage:

SQLSYNC TABLE <uc-catalog>.<uc-schema>.<new-table> AS EXTERNAL FROM hive_metastore.<source-schema>.<source-table>

SET OWNER <principal>;Sync a schema that contains managed Hive tables that are stored outside of Databricks workspace storage:

SQLSYNC SCHEMA <uc-catalog>.<new-schema> AS EXTERNAL FROM hive_metastore.<source-schema>

SET OWNER <principal>; -

Grant account-level users or groups access to the new table. See Manage privileges in Unity Catalog.

-

(Optional) Add a comment to the original Hive table that points users to the new Unity Catalog table.

Return to the original table in the

hive.metastorecatalog to add the table comment. To learn how to add table comments using Catalog Explorer, see Add comments to data and AI assets. To learn how to add table comments using SQL statements in a notebook or the SQL query editor, see COMMENT ON.If you use the following syntax in the table comment, notebooks and SQL query editor queries that reference the deprecated Hive table will display the deprecated table name using strikethrough text, display the comment as a warning, and provide a Quick Fix link to Databricks Assistant, which can update your code to reference the new table.

This table is deprecated. Please use catalog.default.table instead of hive_metastore.schema.table.See Add comments to indicate that a Hive table has been migrated.

-

After the table is migrated, users should update their existing queries and workloads to use the new table.

If you added a comment to the original Hive table like the one listed in the optional previous step, you can use the Quick Fix link and Databricks Assistant to help you find and modify workloads.

-

Before you drop the old table, test for dependencies by revoking access to it and re-running related queries and workloads.

Don't drop the old table if you are still relying on deprecation comments to help you find and update existing code that references the old table. Likewise, don't drop the old table if that table has changed since your original sync:

SYNCcan be used to update existing Unity Catalog tables with changes from source Hive tables.

Upgrade a Hive managed table to a Unity Catalog managed table using CLONE

Use CREATE TABLE CLONE to upgrade managed Delta tables in your Hive metastore to managed tables in Unity Catalog. You can clone individual tables. You must use deep clones when you clone tables from the legacy Hive metastore to Unity Catalog.

For help deciding when to use CLONE, see Hive to Unity Catalog migration options. For more information about CLONE, see Clone a table on Databricks.

Requirements

Data format requirements:

- Managed Hive tables in Delta format.

Compute requirements:

- A compute resource that supports Unity Catalog. See Before you begin.

Permission requirements:

- The

USE CATALOGandUSE SCHEMAprivileges on the catalog and schema that you add the table to, along withCREATE TABLEon the schema, or you must be the owner of the catalog or schema. See Unity Catalog privileges and securable objects. - If your compute uses standard access mode, you need access to the tables in the Hive metastore, granted using legacy table access control. See Before you begin.

Upgrade process

To upgrade managed tables in your Hive metastore to managed tables in Unity Catalog:

-

In a notebook or the SQL query editor, run one of the following:

Deep clone a managed table in the Hive metastore:

SQLCREATE OR REPLACE TABLE <uc-catalog>.<uc-schema>.<new-table>

DEEP CLONE hive_metastore.<source-schema>.<source-table>;For information about additional parameters, including table properties, see CREATE TABLE CLONE.

-

Grant account-level users or groups access to the new table. See Manage privileges in Unity Catalog.

-

(Optional) Add a comment to the original Hive table that points users to the new Unity Catalog table.

Return to the original table in the

hive.metastorecatalog to add the table comment. To learn how to add table comments using Catalog Explorer, see Add comments to data and AI assets. To learn how to add table comments using SQL statements in a notebook or the SQL query editor, see COMMENT ON.If you use the following syntax in the table comment, notebooks and SQL query editor queries that reference the deprecated Hive table will display the deprecated table name using strikethrough text, display the comment as a warning, and provide a Quick Fix link to Databricks Assistant, which can update your code to reference the new table.

This table is deprecated. Please use catalog.default.table instead of hive_metastore.schema.table.See Add comments to indicate that a Hive table has been migrated.

-

After the table is migrated, users should update their existing queries and workloads to use the new table.

If you added a comment to the original Hive table like the one listed in the optional previous step, you can use the Quick Fix link and Databricks Assistant to help you find and modify workloads.

-

Before you drop the old table, test for dependencies by revoking access to it and re-running related queries and workloads.

Don't drop the old table if you are still relying on deprecation comments to help you find and update existing code that references the old table. Likewise, don't drop the old table if you performed a shallow clone. Shallow clones reference data from the source Hive table.

Upgrade a Hive table to a Unity Catalog managed table using CREATE TABLE AS SELECT

If you cannot use or prefer not to use CREATE TABLE CLONE to migrate a table in your Hive metastore to a managed table in Unity Catalog, you can create a new managed table in Unity Catalog by querying the Hive table using CREATE TABLE AS SELECT. For information about the differences between CREATE TABLE CLONE and CREATE TABLE AS SELECT, see Hive to Unity Catalog migration options.

For a demo of upgrading a managed table using CREATE TABLE AS SELECT, see Managed Tables On HMS Without SYNC.

Requirements

Compute requirements:

- A compute resource that supports Unity Catalog. See Before you begin.

Permission requirements:

- The

USE CATALOGandUSE SCHEMAprivileges on the catalog and schema that you add the table to, along withCREATE TABLEon the schema, or you must be the owner of the catalog or schema. See Unity Catalog privileges and securable objects. - If your compute uses standard access mode, you need access to the tables in the Hive metastore, granted using legacy table access control. See Before you begin.

Upgrade process

To upgrade a table in your Hive metastore to a managed table in Unity Catalog using CREATE TABLE AS SELECT:

-

Create a new Unity Catalog table by querying the existing table. Replace the placeholder values:

<uc-catalog>: The Unity Catalog catalog for the new table.<uc-schema>: The Unity Catalog schema for the new table.<new-table>: A name for the Unity Catalog table.<source-schema>: The schema for the Hive table, such asdefault.<source-table>: The name of the Hive table.

- SQL

- Python

- R

- Scala

SQLCREATE TABLE <uc-catalog>.<new-schema>.<new-table>

AS SELECT * FROM hive_metastore.<source-schema>.<source-table>;Pythondf = spark.table("hive_metastore.<source-schema>.<source-table>")

df.write.saveAsTable(

name = "<uc-catalog>.<uc-schema>.<new-table>"

)R%r

library(SparkR)

df = tableToDF("hive_metastore.<source-schema>.<source-table>")

saveAsTable(

df = df,

tableName = "<uc-catalog>.<uc-schema>.<new-table>"

)Scalaval df = spark.table("hive_metastore.<source-schema>.<source-table>")

df.write.saveAsTable(

tableName = "<uc-catalog>.<uc-schema>.<new-table>"

)If you want to migrate only some columns or rows, modify the

SELECTstatement.noteThe commands presented here create a managed table in which data is copied into a dedicated managed storage location. If instead you want to create an external table, where the table is registered in Unity Catalog without moving the data in cloud storage, see Upgrade a schema or tables from the Hive metastore to Unity Catalog external tables using the upgrade wizard. See also Specify a managed storage location in Unity Catalog.

-

Grant account-level users or groups access to the new table. See Manage privileges in Unity Catalog.

-

(Optional) Add a comment to the original Hive table that points users to the new Unity Catalog table.

Return to the original table in the

hive.metastorecatalog to add the table comment. To learn how to add table comments using Catalog Explorer, see Add comments to data and AI assets. To learn how to add table comments using SQL statements in a notebook or the SQL query editor, see COMMENT ON.If you use the following syntax in the table comment, notebooks and SQL query editor queries that reference the deprecated Hive table will display the deprecated table name using strikethrough text, display the comment as a warning, and provide a Quick Fix link to Databricks Assistant, which can update your code to reference the new table.

This table is deprecated. Please use catalog.default.table instead of hive_metastore.schema.table.See Add comments to indicate that a Hive table has been migrated.

-

After the table is migrated, users should update their existing queries and workloads to use the new table.

If you added a comment to the original Hive table like the one listed in the optional previous step, you can use the Quick Fix link and Databricks Assistant to help you find and modify workloads.

-

Before you drop the old table, test for dependencies by revoking access to it and re-running related queries and workloads.

Don't drop the old table if you are still relying on deprecation comments to help you find and update existing code that references the old table.

Upgrade a view to Unity Catalog

After you upgrade all of a view's referenced tables to the same Unity Catalog metastore, you can create a new view that references the new tables.

Add comments to indicate that a Hive table has been migrated

When you add a comment to the deprecated Hive table that points users to the new Unity Catalog table, notebooks and SQL query editor queries that reference the deprecated Hive table will display the deprecated table name using strikethrough text, display the comment as a warning, and provide a Quick Fix link to Databricks Assistant, which can update your code to reference the new table.

Your comment must use the following format:

This table is deprecated. Please use catalog.default.table instead of hive_metastore.schema.table.

To learn how to add table comments using Catalog Explorer, see Add comments to data and AI assets. To learn how to add table comments using SQL statements in a notebook or the SQL query editor, see COMMENT ON.

Use Databricks Assistant to update a deprecated table reference

If you see strikethrough text on a table name in a notebook cell or statement in the SQL query editor, hover over the table name to reveal a warning notice. If that warning notice describes the table as deprecated and displays the new table name, click Quick Fix, followed by Fix Deprecation. Databricks Assistant opens, offering to replace the deprecated table name with the new Unity Catalog table name. Follow the prompts to complete the task.

See also Use Databricks Assistant.