View billable usage (legacy)

This documentation has been retired and might not be updated. The products, services, or technologies mentioned in this content are no longer supported. To view current admin documentation, see Manage your Databricks account.

This page is for legacy workspaces. For the E2 version of this page, see Usage dashboards. Legacy workspaces will be retired on December 31st, 2023. For more information, see End-of-life for legacy workspaces.

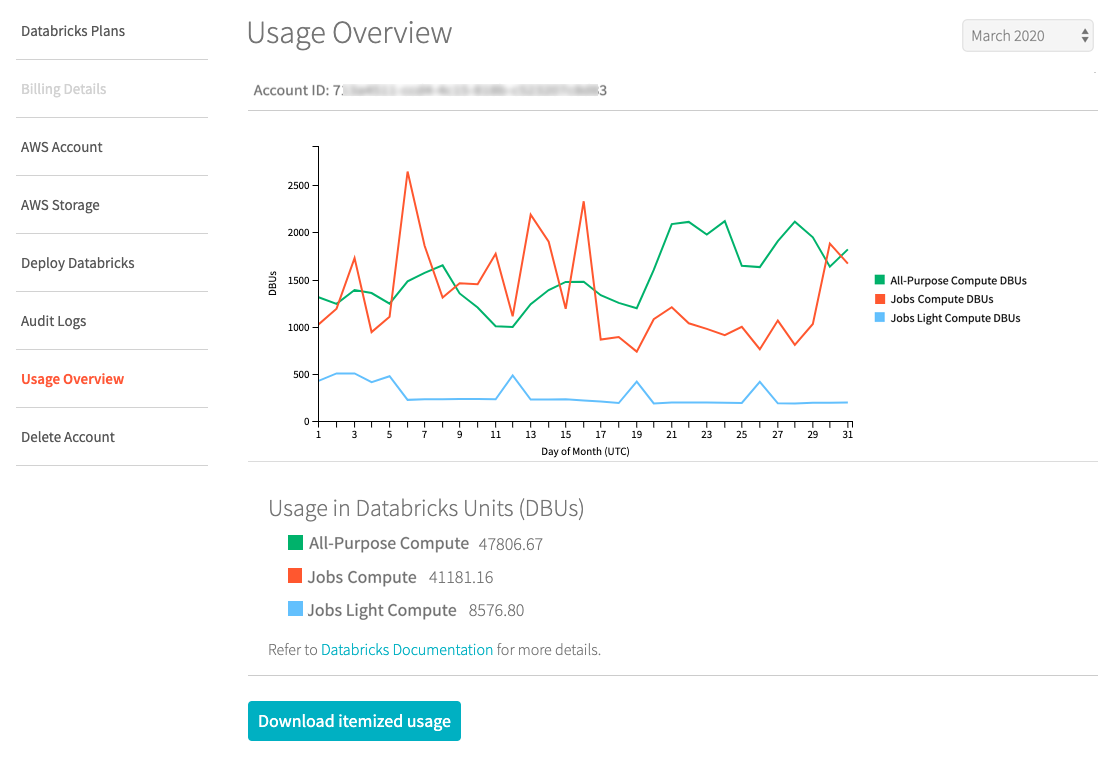

The Usage Overview tab in the Databricks account console lets you:

- View historical account usage in Databricks Units (DBUs), grouped by workload type (All-Purpose Compute, Jobs Compute, Jobs Compute Light).

- Download a CSV file that contains itemized usage details by cluster. If you want to automate delivery of these files to an S3 bucket, see Deliver and access billable usage logs (legacy).

  Note: The downloadable CSV file includes personal data. As always, handle it with care.

- View your Databricks account ID.

View the usage graph

- Log in to the account console. See Access the account console (legacy).

- Click the Usage Overview tab.

- Select a <month year> to see historical account usage.

Download usage as a CSV file

To get a CSV file containing detailed usage data, you can either:

- Download it from the account console by going to the Usage Overview tab and clicking the Download itemized usage button.

- Download it using the billable usage log download API.
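As a rough illustration of the API option, the sketch below builds a download request using only the Python standard library. The host name, the endpoint path, the `start_month`, `end_month`, and `personal_data` query parameters, and the bearer-token header are assumptions based on the commonly documented form of the billable usage download API. Verify them against the API reference for your account before relying on them. The helper name `build_usage_download_request` is hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: the account-level API host. Confirm the host for your account.
ACCOUNTS_HOST = "https://accounts.cloud.databricks.com"


def build_usage_download_request(account_id, start_month, end_month, token,
                                 personal_data=False):
    """Build (but do not send) a request for the billable usage CSV.

    Months are strings in YYYY-MM form. The query parameter names and the
    bearer-token authentication are assumptions, not confirmed by this page.
    """
    query = urlencode({
        "start_month": start_month,
        "end_month": end_month,
        # Sent as "true"/"false"; controls whether personal data is included.
        "personal_data": str(personal_data).lower(),
    })
    url = f"{ACCOUNTS_HOST}/api/2.0/accounts/{account_id}/usage/download?{query}"
    return Request(url, headers={"Authorization": f"Bearer {token}"})
```

To fetch the file, you could pass the returned object to `urllib.request.urlopen` and write the response body to disk. Because the CSV can include personal data, store the result somewhere with appropriate access controls.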

For the CSV file schema, see CSV file schema. You can import this file into Databricks for analysis.

Deliver billable usage logs to your own S3 bucket

You can configure automatic delivery of billable usage CSV files into an S3 bucket in your AWS account. See Deliver and access billable usage logs (legacy).

Automatic log delivery lets you control who can access these details. Users who are authorized for this data no longer depend on the account owner regularly opening the account console, downloading a CSV file, and sharing it.

For the CSV schema, see CSV file schema.

For information about how to analyze these files using Databricks, see Analyze usage data in Databricks.
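For a quick check outside Databricks, a downloaded file can also be summarized with plain Python. The sketch below totals DBUs per SKU, which roughly mirrors the per-workload grouping in the usage graph. It assumes the CSV has `sku` and `dbus` columns. Confirm the column names against the CSV file schema. The sample rows are invented for illustration, and the function name `dbus_by_sku` is hypothetical.

```python
import csv
import io
from collections import defaultdict


def dbus_by_sku(csv_text):
    """Sum the `dbus` column per `sku` value.

    Assumes the CSV has a header row containing `sku` and `dbus` columns.
    """
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["sku"]] += float(row["dbus"])
    return dict(totals)


# Invented sample data. A real file has more columns and many more rows.
sample = """workspaceId,clusterId,sku,dbus
1,c1,STANDARD_ALL_PURPOSE_COMPUTE,1.5
1,c2,STANDARD_JOBS_COMPUTE,2.0
2,c3,STANDARD_ALL_PURPOSE_COMPUTE,0.5
"""

totals = dbus_by_sku(sample)
```

For a real file, read its contents with `open(path, newline="").read()` and pass the text to `dbus_by_sku`.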