Share feature tables across workspaces (legacy)

- This documentation has been retired and might not be updated.

- Databricks recommends using Feature Engineering in Unity Catalog to share feature tables across workspaces. The approach in this article is deprecated.

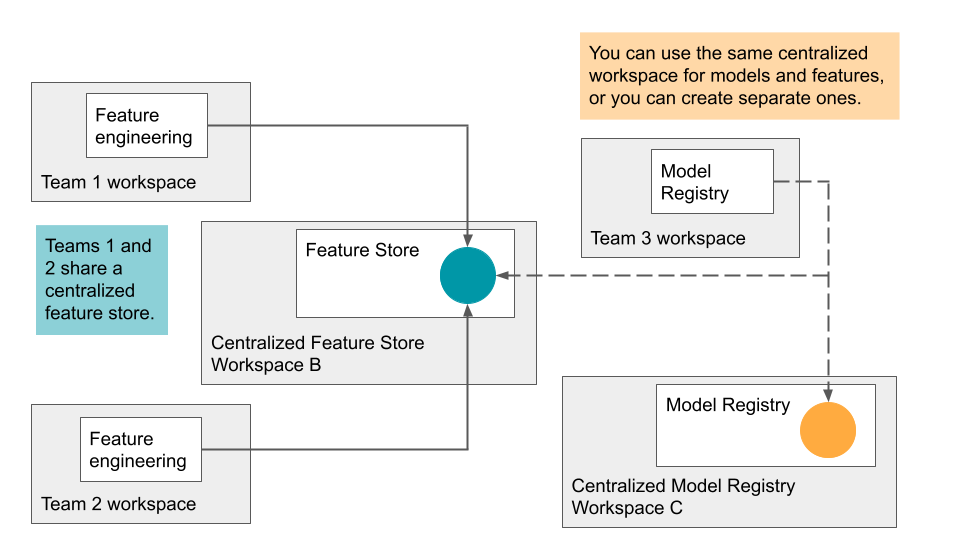

Databricks supports sharing feature tables across multiple workspaces. For example, from your own workspace, you can create, write to, or read from a feature table in a centralized feature store. This is useful when multiple teams share access to feature tables or when your organization has multiple workspaces to handle different stages of development.

For a centralized feature store, Databricks recommends that you designate a single workspace to store all feature store metadata, and create accounts for each user who needs access to the feature store.

If your teams also share models across workspaces, you can dedicate the same centralized workspace to both feature tables and models, or designate a different centralized workspace for each.

Access to the centralized feature store is controlled by tokens. Each user or script that needs access creates a personal access token in the centralized feature store and copies that token into the secret manager of their local workspace. Each API request sent to the centralized feature store workspace must include the access token; the Feature Store client provides a simple mechanism to specify the secrets to be used when performing cross-workspace operations.

As a security best practice when you authenticate with automated tools, systems, scripts, and apps, Databricks recommends that you use OAuth tokens.

If you use personal access token authentication, Databricks recommends using personal access tokens belonging to service principals instead of workspace users. To create tokens for service principals, see Manage tokens for a service principal.

Requirements

Using a feature store across workspaces requires:

- Feature Store client v0.3.6 and above.

- Both workspaces must have access to the raw feature data. They must share the same external Hive metastore and have access to the same DBFS storage.

- If IP access lists are enabled, the workspaces' IP addresses must be on the access lists.

Set up the API token for a remote registry

In this section, “Workspace B” refers to the centralized or remote feature store workspace.

- In Workspace B, create an access token.

- In your local workspace, create secrets to store the access token and information about Workspace B:

  - Create a secret scope: `databricks secrets create-scope --scope <scope>`.

  - Pick a unique identifier for Workspace B, shown here as `<prefix>`. Then create three secrets with the specified key names:

    - `databricks secrets put --scope <scope> --key <prefix>-host`: Enter the hostname of Workspace B. Use the following Python commands to get the hostname of a workspace:

      ```python
      import mlflow

      host_url = mlflow.utils.databricks_utils.get_webapp_url()
      host_url
      ```

    - `databricks secrets put --scope <scope> --key <prefix>-token`: Enter the access token from Workspace B.

    - `databricks secrets put --scope <scope> --key <prefix>-workspace-id`: Enter the workspace ID for Workspace B, which can be found in the URL of any page.

You might want to share the secret scope with other users, since there is a limit on the number of secret scopes per workspace.
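To make the naming convention concrete, the following minimal sketch prints the scope and secret commands for a given scope and prefix, and pulls the workspace ID out of a workspace URL. It assumes the legacy Databricks CLI syntax used in the steps above and assumes the workspace ID appears as the `o=` query parameter of the URL. The helpers `secret_commands` and `workspace_id_from_url` and the example names are hypothetical, not part of any Databricks library.

```python
from typing import List, Optional
from urllib.parse import parse_qs, urlparse

# Key suffixes from the steps above; each full key is "<prefix>-<suffix>".
SECRET_SUFFIXES = ("host", "token", "workspace-id")


def secret_commands(scope: str, prefix: str) -> List[str]:
    """Return the legacy CLI commands that create the scope and its three secrets."""
    commands = [f"databricks secrets create-scope --scope {scope}"]
    for suffix in SECRET_SUFFIXES:
        commands.append(f"databricks secrets put --scope {scope} --key {prefix}-{suffix}")
    return commands


def workspace_id_from_url(url: str) -> Optional[str]:
    """Return the o= query parameter (assumed to be the workspace ID), or None."""
    values = parse_qs(urlparse(url).query).get("o")
    return values[0] if values else None


if __name__ == "__main__":
    # Hypothetical scope and prefix names, for illustration only.
    for command in secret_commands("shared-feature-store", "workspace-b"):
        print(command)
    print(workspace_id_from_url("https://example.cloud.databricks.com/?o=1234567890#"))
```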

Specify a remote feature store

Based on the secret scope and name prefix you created for the remote feature store workspace, you can construct a feature store URI of the form:

```python
feature_store_uri = f'databricks://<scope>:<prefix>'
```

Then, specify the URI explicitly when you instantiate a FeatureStoreClient:

```python
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(feature_store_uri=feature_store_uri)
```
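The URI itself only names a secret scope and a key prefix; the three secrets you created earlier hold the actual connection details. The following sketch spells out that mapping for a `databricks://<scope>:<prefix>` string. `secret_keys_for_uri` is a hypothetical helper that mirrors the naming convention described in this article; it is not the parser that the Feature Store client or MLflow actually uses.

```python
from typing import Dict, Tuple

SCHEME = "databricks://"


def secret_keys_for_uri(uri: str) -> Tuple[str, Dict[str, str]]:
    """Split databricks://<scope>:<prefix> into the scope and the secret keys it names."""
    if not uri.startswith(SCHEME):
        raise ValueError(f"expected a URI starting with {SCHEME!r}, got {uri!r}")
    scope, sep, prefix = uri[len(SCHEME):].partition(":")
    if not sep or not scope or not prefix:
        raise ValueError(f"expected databricks://<scope>:<prefix>, got {uri!r}")
    # Each value is a key inside `scope` holding one piece of Workspace B information.
    keys = {
        "host": f"{prefix}-host",
        "token": f"{prefix}-token",
        "workspace_id": f"{prefix}-workspace-id",
    }
    return scope, keys


if __name__ == "__main__":
    # Hypothetical scope and prefix names, for illustration only.
    print(secret_keys_for_uri("databricks://shared-feature-store:workspace-b"))
```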

Create a database for feature tables in the shared DBFS location

Before you create feature tables in the remote feature store, you must create a database to store them. The database must exist in the shared DBFS location.

For example, to create a database named recommender in the shared location /mnt/shared, use the following command:

```sql
%sql
CREATE DATABASE IF NOT EXISTS recommender LOCATION '/mnt/shared'
```
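If you prefer to issue the statement from Python rather than a `%sql` cell, a sketch like the following builds the same statement and hands it to a SQL runner. In a Databricks notebook you would pass `spark.sql`; the runner is injected here so the helper can be exercised without a cluster. The helper name `create_shared_database` and its simple identifier check are assumptions for illustration.

```python
import re
from typing import Callable


def create_shared_database(run_sql: Callable[[str], object], name: str, location: str) -> str:
    """Create database `name` at `location` (for example a shared DBFS mount) if missing."""
    # Accept only simple identifiers so the name can be interpolated safely (assumption).
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", name):
        raise ValueError(f"unsupported database name: {name!r}")
    if "'" in location:
        raise ValueError("location must not contain single quotes")
    statement = f"CREATE DATABASE IF NOT EXISTS {name} LOCATION '{location}'"
    run_sql(statement)
    return statement


# In a notebook (hypothetical usage):
# create_shared_database(spark.sql, "recommender", "/mnt/shared")
```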

Create a feature table in the remote feature store

The API to create a feature table in a remote feature store depends on the version of the Feature Store client you are using.

v0.3.6 and above

Use the FeatureStoreClient.create_table API:

```python
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(feature_store_uri=f'databricks://<scope>:<prefix>')

# customer_features_df is a Spark DataFrame containing the features to store.
fs.create_table(
    name='recommender.customer_features',
    primary_keys='customer_id',
    schema=customer_features_df.schema,
    description='Customer-keyed features'
)
```

v0.3.5 and below

Use the FeatureStoreClient.create_feature_table API:

```python
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(feature_store_uri=f'databricks://<scope>:<prefix>')

# customer_features_df is a Spark DataFrame containing the features to store.
fs.create_feature_table(
    name='recommender.customer_features',
    keys='customer_id',
    schema=customer_features_df.schema,
    description='Customer-keyed features'
)
```
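Because the two versions differ in both the method name and the key argument (`primary_keys` versus `keys`), code that must run against either client can branch on the version. The following is a sketch under that assumption: `create_remote_table` and `parse_version` are hypothetical helpers, the version string is passed in explicitly (for example from `importlib.metadata.version("databricks-feature-store")`, assuming the client was installed under that package name), and `fs` is any client exposing the methods shown above.

```python
import re
from typing import Any, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '0.3.6' into (0, 3, 6), keeping only the leading digits of each component."""
    parts = []
    for piece in version.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    return tuple(parts)


def create_remote_table(fs: Any, client_version: str, name: str, key: str,
                        schema: Any, description: str) -> Any:
    """Create a feature table with the API that matches the client version."""
    if parse_version(client_version) >= (0, 3, 6):
        # v0.3.6 and above: create_table takes primary_keys.
        return fs.create_table(name=name, primary_keys=key, schema=schema,
                               description=description)
    # v0.3.5 and below: create_feature_table takes keys.
    return fs.create_feature_table(name=name, keys=key, schema=schema,
                                   description=description)
```

Comparing integer tuples rather than raw strings keeps "0.10.0" correctly ordered after "0.3.6".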

For examples of other Feature Store methods, see Notebook example: Share feature tables across workspaces.

Use a feature table from the remote feature store

You can read a feature table in the remote feature store with the FeatureStoreClient.read_table method by first specifying feature_store_uri when you instantiate the client:

```python
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(feature_store_uri=f'databricks://<scope>:<prefix>')

customer_features_df = fs.read_table(
    name='recommender.customer_features',
)
```

The following helper methods for accessing feature tables are also supported with a remote feature store:

- fs.read_table()

- fs.get_feature_table() (v0.3.5 and below)

- fs.get_table() (v0.3.6 and above)

- fs.write_table()

- fs.publish_table()

- fs.create_training_set()
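Since the metadata getter is named get_table in v0.3.6 and above but get_feature_table in v0.3.5 and below, a small wrapper can use whichever method the installed client provides. This is a sketch: `get_remote_table` is a hypothetical helper that relies only on Python's `getattr` and the two method names listed above.

```python
from typing import Any


def get_remote_table(fs: Any, name: str) -> Any:
    """Fetch feature table metadata with whichever getter the client supports."""
    getter = getattr(fs, "get_table", None)  # v0.3.6 and above
    if getter is None:
        # v0.3.5 and below; raises AttributeError if neither method exists.
        getter = getattr(fs, "get_feature_table")
    return getter(name)
```

Checking for the method directly, instead of parsing a version string, keeps the wrapper working even if the version metadata is unavailable.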

Use a remote model registry

In addition to specifying a remote feature store URI, you may also specify a remote model registry URI to share models across workspaces.

To specify a remote model registry for model logging or scoring, you can use a model registry URI to instantiate a FeatureStoreClient.

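```python
import mlflow.sklearn
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(model_registry_uri=f'databricks://<scope>:<prefix>')

# model is a trained scikit-learn model; training_set is the TrainingSet
# returned by fs.create_training_set() and used to train it.
fs.log_model(
    model,
    "recommendation_model",
    flavor=mlflow.sklearn,
    training_set=training_set,
    registered_model_name="recommendation_model"
)
```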

Using feature_store_uri and model_registry_uri, you can train a model using any local or remote feature table, and then register the model in any local or remote model registry.

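```python
from databricks.feature_store import FeatureStoreClient

# Feature tables and models can live in the same or in different remote workspaces.
fs = FeatureStoreClient(
    feature_store_uri=f'databricks://<scope>:<prefix>',
    model_registry_uri=f'databricks://<scope>:<prefix>'
)
```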
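Omitting either argument leaves that component at its default. The sketch below builds the keyword arguments for FeatureStoreClient from optional `(scope, prefix)` pairs, so one code path can cover every local/remote combination. `client_kwargs` is a hypothetical helper, and it assumes, as the examples above suggest, that leaving an argument out means the local workspace is used.

```python
from typing import Dict, Optional, Tuple

# (secret scope, key prefix) for a remote workspace, or None to stay local.
Remote = Optional[Tuple[str, str]]


def client_kwargs(feature_store: Remote = None, model_registry: Remote = None) -> Dict[str, str]:
    """Build FeatureStoreClient keyword arguments; None leaves that part local."""
    kwargs: Dict[str, str] = {}
    if feature_store is not None:
        scope, prefix = feature_store
        kwargs["feature_store_uri"] = f"databricks://{scope}:{prefix}"
    if model_registry is not None:
        scope, prefix = model_registry
        kwargs["model_registry_uri"] = f"databricks://{scope}:{prefix}"
    return kwargs


# Hypothetical usage in a notebook:
# fs = FeatureStoreClient(**client_kwargs(feature_store=("shared", "workspace-b")))
```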

Notebook example: Share feature tables across workspaces

The following notebook shows how to work with a centralized feature store.