Create and work with output tables in Databricks Clean Rooms

This page introduces output tables, which are temporary read-only tables generated by a notebook run and shared to the notebook runner's Unity Catalog metastore. This article describes how to use a notebook to create output tables and how runners can read these output tables in their Unity Catalog metastore.

Overview of output tables

Output tables let you temporarily save the output of notebooks that are run in a clean room to an output catalog in your Unity Catalog metastore, where you can make the data available to members of your team who don't have the ability to run the notebooks themselves. You can also use Lakeflow Jobs to run notebooks and perform tasks on output tables. Combined with the Clean Room notebook task type and support for task values, output tables let you create complex workflows that depend on Clean room notebooks.

Output tables are read-only.

Only the specific principal (user, group, or service principal) who runs the notebook has default read access to the output table. There is no write access. A metastore admin can grant read access to other principals in their Databricks account, using standard Unity Catalog privileges.

Output tables are stored for 30 days in the central clean room's default storage location and shared to the runner's metastore using Delta Sharing. If you want to keep an output table for more than 30 days, you must copy it to local storage.

Each notebook run creates a new schema in the output catalog. New runs cannot append an existing output table.

Collaborators in Databricks on all three clouds—AWS, Azure, and Google Cloud—can share notebooks that create output tables and can read output tables that are generated when they run shared notebooks.

Create an output table

To create an output table, use the parameters cr_output_catalog and cr_output_schema in the three-part table namespace. Each run of the notebook produces a new schema.

In the following example, the notebook cell creates an output table called overlapping_users in the runner's output catalog that lists the users whose email address shows up in both the collaborator.advertiser.profiles and creator.publisher.profiles tables.

CREATE TABLE identifier(:cr_output_catalog || '.' || :cr_output_schema || '.overlapping_users') AS

SELECT collab_profiles.*

FROM collaborator.advertiser.profiles AS collab_profiles

JOIN creator.publisher.profiles AS creator_profiles

ON collab_profiles.email = creator_profiles.email

Read an output table

Output tables appear in a shared catalog in the notebook runner's metastore. In the Catalog Explorer Catalog pane, they appear in the Shared catalogs list.

Reading an output table is like reading any other table in Unity Catalog. You must have SELECT on the table, USE CATALOG on the shared output catalog, and USE SCHEMA on the automatically-generated schema. The user who ran the notebook that created the table has these permissions by default.

Deleting a clean room removes all output tables and historical data from the output catalog.

Before you begin

This section describes cloud, configuration, and compute requirements for reading output tables.

Shared output catalog requirement

Before you can read output tables, a user must create the catalog that holds them. You only need to do this once per clean room. The owner of the clean room has permission to read and manage the output catalog by default.

Permissions required: EXECUTE CLEAN ROOM TASK

- In your Databricks workspace, click

Catalog.

- Click the Clean Rooms > button.

- Select the clean room from the list.

- In the right pane, under Output, click Create catalog.

- Enter an Output catalog name or accept the default, which is

<clean-room-name>_output.

The output catalog appears in the list of Shared catalogs in your Catalog Explorer Catalog pane. Each clean room that you participate in can have one shared output catalog in your metastore.

Compute requirements

Queries on output tables require serverless compute. See Connect to serverless compute.

Permissions required to read an output table

The user who ran the notebook that created the output table and the owner of the clean room have permission to read and manage the output table by default. All other users must have the following permissions granted to them:

SELECTon the tableUSE CATALOGon the output catalogUSE SCHEMAon the output schema

Run the notebook

To generate shared output tables in your output catalog, a user with access to the clean room must run the notebook. See Run notebooks in clean rooms. Each notebook run creates a new output schema and table.

You can use Lakeflow Jobs to run notebooks and perform tasks on output tables, enabling complex workflows. See Use Lakeflow Jobs to run clean room notebooks.

Find and view an output table

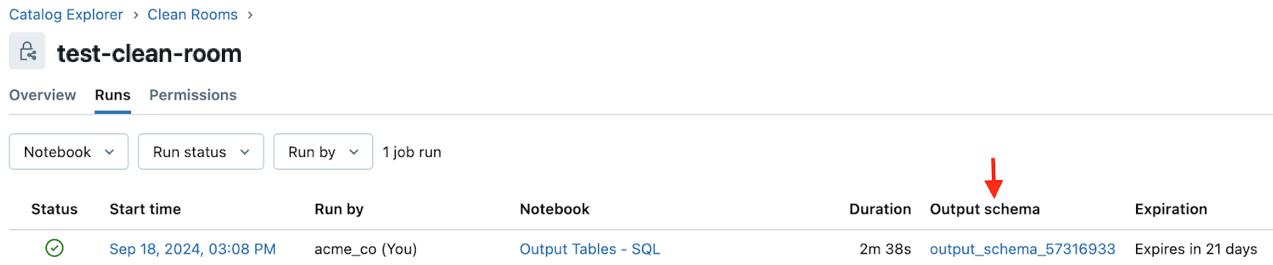

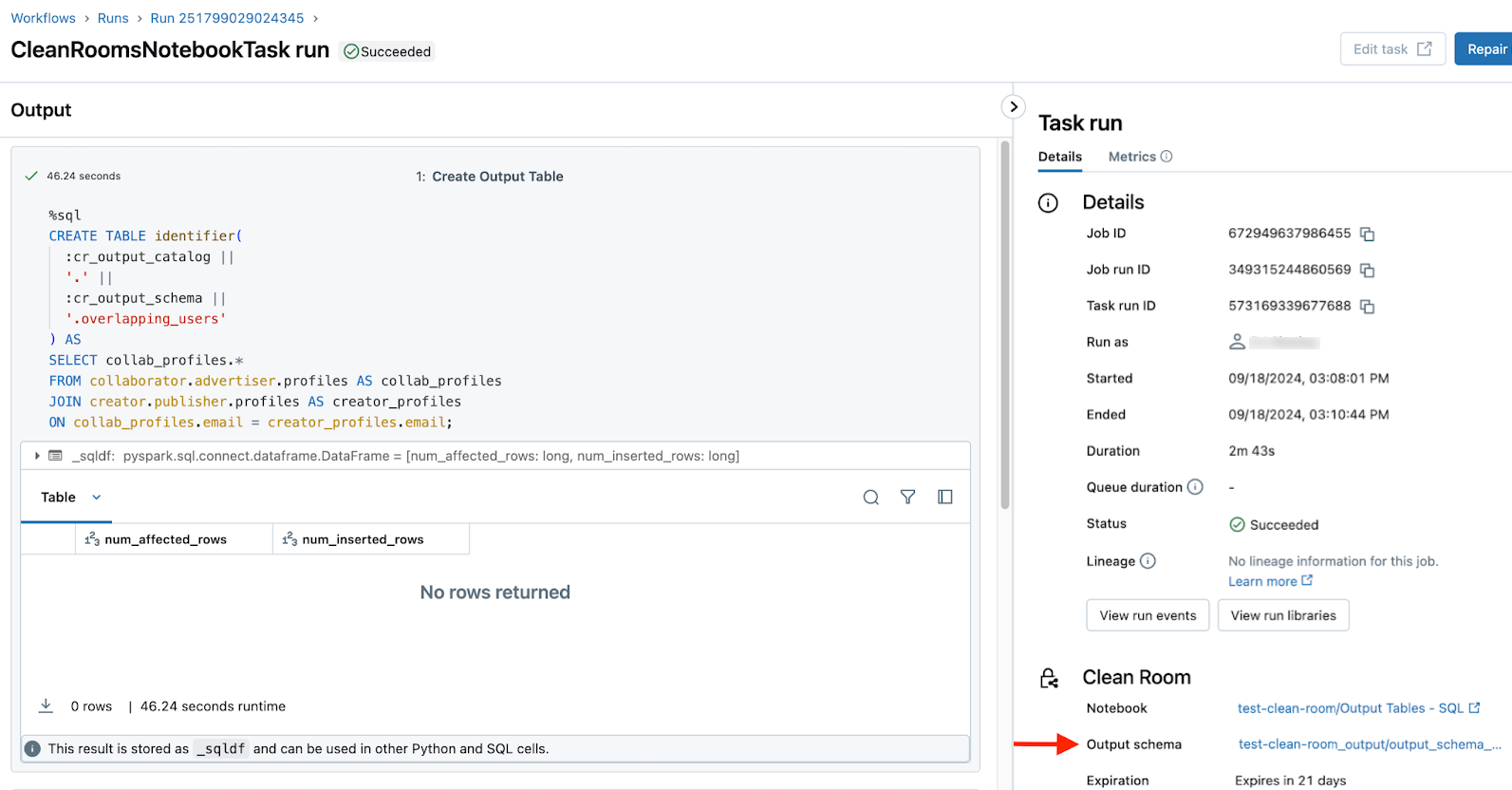

The user who runs the notebook that creates the output table can find a link to the output table on the notebook run history and run details pages in the Clean Rooms UI. In both cases, the link is in the Output schema field. See Monitor clean room notebook runs.

Run history:

Run details:

You can also find the output catalog in the list of Shared catalogs in your Catalog Explorer Catalog pane.

Limitations

In addition to the requirements listed in Overview of output tables and Before you begin, output tables have the following limitations:

- Output tables are only supported when the clean room was created after the output table feature was released.

- Only tables are supported. Volumes and views, for example, are not.

- You are limited in the number of output tables each notebook can support. See Resource limits.