Compute configuration reference

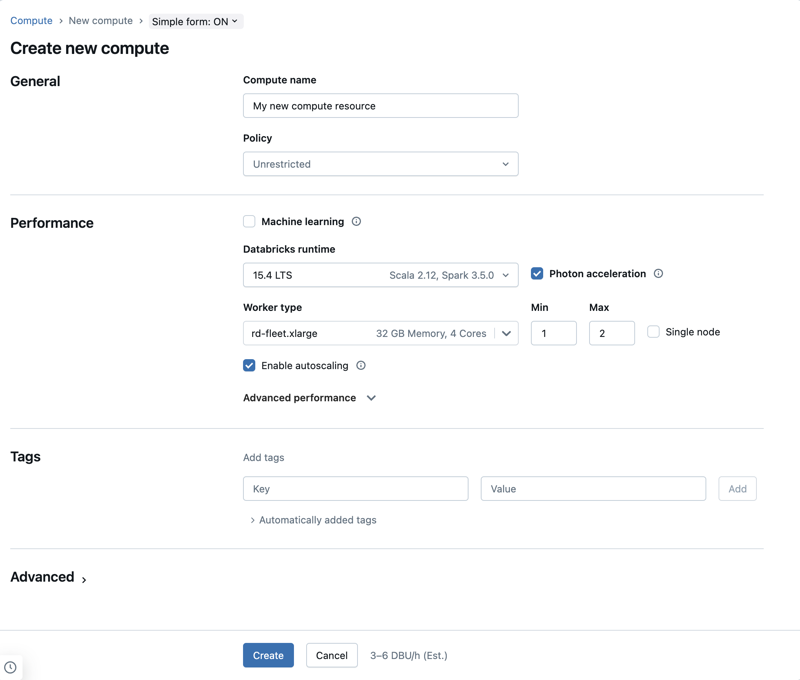

The organization of this article assumes you are using the simple form compute UI. For an overview of the simple form updates, see Use the simple form to manage compute.

This article explains the configuration settings available when creating a new all-purpose or job compute resource. Most users create compute resources using their assigned policies, which limits the configurable settings. If you don't see a particular setting in your UI, it's because the policy you've selected does not allow you to configure that setting.

For recommendations on configuring compute for your workload, see Compute configuration recommendations.

The configurations and management tools described in this article apply to both all-purpose and job compute. For more considerations on configuring job compute, see Configure compute for jobs.

Create a new all-purpose compute resource

To create a new all-purpose compute resource:

- In the workspace sidebar, click Compute.

- Click the Create compute button.

- Configure the compute resource.

- Click Create.

Your new compute resource automatically starts spinning up and is ready to use shortly.
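The same result can be scripted against the Clusters API referenced later in this article. The following Python sketch only assembles a create-request body and makes no network call. The field names (cluster_name, spark_version, node_type_id, driver_node_type_id, num_workers, autotermination_minutes, policy_id) are assumed to match the Clusters API, and every value shown is a hypothetical placeholder. Check both against the Clusters API reference before use.

```python
import json


def build_create_request(cluster_name, spark_version, node_type_id, num_workers,
                         autotermination_minutes=60, driver_node_type_id=None,
                         policy_id=None):
    """Assemble a Clusters API create-request body (no request is sent)."""
    body = {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": num_workers,
        # 60 minutes is an arbitrary example value, not a recommendation.
        "autotermination_minutes": autotermination_minutes,
    }
    # The driver defaults to the worker node type, so only send an override.
    if driver_node_type_id is not None:
        body["driver_node_type_id"] = driver_node_type_id
    # Users without unrestricted cluster creation create compute through a policy.
    if policy_id is not None:
        body["policy_id"] = policy_id
    return body


# Hypothetical values for illustration only.
request = build_create_request(
    cluster_name="example-all-purpose",
    spark_version="15.4.x-scala2.12",
    node_type_id="m5d.xlarge",
    num_workers=2,
)
print(json.dumps(request, indent=2))
```

Optional fields are omitted rather than sent as null, so the server-side defaults described in this article still apply.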

Compute policy

Policies are a set of rules used to limit the configuration options available to users when they create compute resources. If a user doesn't have the Unrestricted cluster creation entitlement, then they can only create compute resources using their granted policies.

To create compute resources according to a policy, select a policy from the Policy drop-down menu.

By default, all users have access to the Personal Compute policy, allowing them to create single-machine compute resources. If you need access to Personal Compute or any additional policies, reach out to your workspace admin.

Performance settings

The following settings appear under the Performance section of the simple form compute UI:

- Databricks Runtime versions

- Use Photon acceleration

- Worker node type

- Single-node compute

- Enable autoscaling

- Advanced performance settings

Databricks Runtime versions

Databricks Runtime is the set of core components that run on your compute. Select the runtime using the Databricks Runtime Version drop-down menu. For details on specific Databricks Runtime versions, see Databricks Runtime release notes versions and compatibility. All versions include Apache Spark. Databricks recommends the following:

- For all-purpose compute, use the most current version to ensure you have the latest optimizations and the most up-to-date compatibility between your code and preloaded packages.

- For job compute running operational workloads, consider using the Long Term Support (LTS) Databricks Runtime version. Using the LTS version will ensure you don't run into compatibility issues and can thoroughly test your workload before upgrading.

- For data science and machine learning use cases, consider a Databricks Runtime ML version.

Use Photon acceleration

Photon is enabled by default on compute running Databricks Runtime 9.1 LTS and above.

To enable or disable Photon acceleration, select or clear the Use Photon Acceleration checkbox. To learn more about Photon, see What is Photon?.

Worker node type

A compute resource consists of one driver node and zero or more worker nodes. You can pick separate cloud provider instance types for the driver and worker nodes, although by default the driver node uses the same instance type as the worker node. The driver node setting is underneath the Advanced performance section.

Different families of instance types fit different use cases, such as memory-intensive or compute-intensive workloads. You can also select a pool to use as the worker or driver node.

Do not use a pool with spot instances as your driver type. Select an on-demand driver type to prevent your driver from being reclaimed. See Connect to pools.

In multi-node compute, worker nodes run the Spark executors and other services required for a properly functioning compute resource. When you distribute your workload with Spark, all of the distributed processing happens on worker nodes. Databricks runs one executor per worker node. Therefore, the terms executor and worker are used interchangeably in the context of the Databricks architecture.

To run a Spark job, you need at least one worker node. If the compute resource has zero workers, you can run non-Spark commands on the driver node, but Spark commands will fail.

Flexible node types

If your workspace has flexible node types enabled, you can use flexible node types for your compute resource. Flexible node types allow your compute resource to fall back to alternative, compatible instance types when your specified instance type is unavailable. This behavior improves compute launch reliability by reducing capacity failures during compute launches. See Improve compute launch reliability using flexible node types.

Worker node IP addresses

Databricks launches worker nodes with two private IP addresses each. The node's primary private IP address hosts Databricks internal traffic. The secondary private IP address is used by the Spark container for intra-cluster communication. This model allows Databricks to provide isolation between multiple compute resources in the same workspace.

GPU instance types

For computationally challenging tasks that demand high performance, like those associated with deep learning, Databricks supports compute resources that are accelerated with graphics processing units (GPUs). For more information, see GPU-enabled compute.

Databricks no longer supports spinning up compute using Amazon EC2 P2 instances.

AWS Graviton instance types

Databricks supports AWS Graviton instances. These instances use AWS-designed Graviton processors that are built on top of the Arm64 instruction set architecture. AWS claims that instance types with these processors have the best price-to-performance ratio of any instance type on Amazon EC2. To use Graviton instance types, select one of the available AWS Graviton instance types for the Worker type, Driver type, or both.

Databricks supports AWS Graviton-enabled compute:

- On Databricks Runtime 9.1 LTS and above for non-Photon, and Databricks Runtime 10.2 (EoS) and above for Photon.

- For Databricks Runtime for Machine Learning, on Databricks Runtime 15.4 LTS for Machine Learning.

- In all AWS Regions. Note, however, that not all instance types are available in all Regions. If you select an instance type that is not available in the Region for a workspace, compute creation fails.

- For AWS Graviton2 and Graviton3 processors.

Lakeflow Spark Declarative Pipelines is not supported on Graviton-enabled compute.

ARM64 ISA limitations

- Floating point precision changes: typical operations like adding, subtracting, multiplying, and dividing have no change in precision. For trigonometric functions such as sin and cos, the upper bound on the precision difference compared to Intel instances is 1.11e-16.

- Third-party support: the change in ISA may have some impact on support for third-party tools and libraries.

- Mixed-instance compute: Databricks does not support mixing AWS Graviton and non-AWS Graviton instance types, as each type requires a different Databricks Runtime.

Graviton limitations

The following features do not support AWS Graviton instance types:

- Python UDFs on Databricks Runtime versions below 15.2 (Python UDFs on Graviton instances are available on Databricks Runtime 15.2 and above)

- Databricks Container Services

- Lakeflow Spark Declarative Pipelines

- Databricks SQL

- Databricks on AWS GovCloud

- Access to workspace files, including those in Git folders, from web terminals

AWS Fleet instance types

If your workspace was created before May 2023, its IAM role's permissions might need to be updated to allow access to fleet instance types. For more information, see Enable fleet instance types.

A fleet instance type is a variable instance type that automatically resolves to the best available instance type of the same size.

For example, if you select the fleet instance type m-fleet.xlarge, your node will resolve to whichever .xlarge, general purpose instance type has the best spot capacity and price at that moment. The instance type your compute resource resolves to will always have the same memory and number of cores as the fleet instance type you chose.

Fleet instance types use AWS's Spot Placement Score API to choose the availability zone that is best and most likely to succeed for your compute resource at startup time.

Fleet limitations

- If you update the spot instance bid percentage using the API or JSON, it has no effect when the worker node type is set to a fleet instance type. This is because there is no single on-demand instance to use as a reference point for the spot price.

- Fleet instances do not support GPU instances.

- A small percentage of older workspaces do not yet support fleet instance types. If this is the case for your workspace, you'll see an error indicating this when attempting to create compute or an instance pool using a fleet instance type. We're working to bring support to these remaining workspaces.

Single-node compute

The Single node checkbox allows you to create a single node compute resource.

Single node compute is intended for jobs that use small amounts of data or non-distributed workloads such as single-node machine learning libraries. Multi-node compute should be used for larger jobs with distributed workloads.

Single node properties

A single node compute resource has the following properties:

- Runs Spark locally.

- Driver acts as both master and worker, with no worker nodes.

- Spawns one executor thread per logical core in the compute resource, minus 1 core for the driver.

- Saves all stderr, stdout, and log4j log outputs in the driver log.

- Can't be converted to a multi-node compute resource.
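As a rough illustration of these properties, the sketch below does two things: it builds a single node request body and computes the executor thread count described above. The spark_conf keys and the ResourceClass tag reflect the classic way of requesting single node compute through the API. Newer API versions may expose a dedicated flag instead, so treat these fields as assumptions to verify.

```python
def single_node_request(spark_version, node_type_id):
    """Sketch of a single node request body (values supplied by the caller)."""
    return {
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # No worker nodes: the driver acts as both master and worker.
        "num_workers": 0,
        "spark_conf": {
            "spark.databricks.cluster.profile": "singleNode",
            # Run Spark locally on the driver, using all of its cores.
            "spark.master": "local[*]",
        },
        "custom_tags": {"ResourceClass": "SingleNode"},
    }


def executor_threads(logical_cores):
    """One executor thread per logical core, minus 1 core reserved for the driver."""
    return logical_cores - 1


print(executor_threads(8))  # an 8-core node gets 7 executor threads
```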

Selecting single or multi node

Consider your use case when deciding between single or multi-node compute:

- Large-scale data processing will exhaust the resources on a single node compute resource. For these workloads, Databricks recommends using multi-node compute.

- A multi-node compute resource can't be scaled to 0 workers. Use single node compute instead.

- GPU scheduling is not enabled on single node compute.

- On single-node compute, Spark cannot read Parquet files with a UDT column. The following error message results:

  The Spark driver has stopped unexpectedly and is restarting. Your notebook will be automatically reattached.

  To work around this problem, disable the native Parquet reader:

  spark.conf.set("spark.databricks.io.parquet.nativeReader.enabled", False)

Enable autoscaling

When Enable autoscaling is checked, you can provide a minimum and maximum number of workers for the compute resource. Databricks then chooses the appropriate number of workers required to run your job.

To set the minimum and the maximum number of workers your compute resource will autoscale between, use the Min and Max fields next to the Worker type dropdown.

If you don't enable autoscaling, you must enter a fixed number of workers in the Workers field next to the Worker type dropdown.

When the compute resource is running, the compute details page displays the number of allocated workers. You can compare the number of allocated workers with the worker configuration and make adjustments as needed.
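The UI offers either Min and Max fields or a single Workers field. Below is a minimal Python sketch of the corresponding request-body fragment. It assumes the Clusters API's autoscale object (min_workers, max_workers) and the num_workers field. The sketch treats the two as alternatives, mirroring the UI. It also rejects an autoscaling minimum of 0, because multi-node compute can't be scaled to 0 workers.

```python
def worker_settings(min_workers=None, max_workers=None, num_workers=None):
    """Return the worker portion of a request body: autoscaling or fixed size."""
    autoscaling = min_workers is not None or max_workers is not None
    if autoscaling and num_workers is not None:
        raise ValueError("use either Min/Max workers or a fixed worker count")
    if autoscaling:
        if min_workers is None or max_workers is None:
            raise ValueError("autoscaling needs both a minimum and a maximum")
        # Multi-node compute can't be scaled to 0 workers.
        if not 1 <= min_workers <= max_workers:
            raise ValueError("require 1 <= min_workers <= max_workers")
        return {"autoscale": {"min_workers": min_workers, "max_workers": max_workers}}
    if num_workers is None:
        raise ValueError("without autoscaling, a fixed number of workers is required")
    return {"num_workers": num_workers}


print(worker_settings(min_workers=2, max_workers=8))
print(worker_settings(num_workers=4))
```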

Benefits of autoscaling

With autoscaling, Databricks dynamically reallocates workers to account for the characteristics of your job. Certain parts of your pipeline may be more computationally demanding than others, and Databricks automatically adds additional workers during these phases of your job (and removes them when they're no longer needed).

Autoscaling makes it easier to achieve high utilization because you don't need to provision the compute to match a workload. This applies especially to workloads whose requirements change over time (like exploring a dataset during the course of a day), but it can also apply to a one-time shorter workload whose provisioning requirements are unknown. Autoscaling thus offers two advantages:

- Workloads can run faster compared to a constant-sized under-provisioned compute resource.

- Autoscaling can reduce overall costs compared to a statically-sized compute resource.

Depending on the constant size of the compute resource and the workload, autoscaling gives you one or both of these benefits at the same time. The compute size can go below the minimum number of workers selected when the cloud provider terminates instances. In this case, Databricks continuously retries to re-provision instances in order to maintain the minimum number of workers.

Autoscaling is not available for spark-submit jobs.

Compute autoscaling has limitations when scaling down cluster size for Structured Streaming workloads. Databricks recommends using Lakeflow Spark Declarative Pipelines with enhanced autoscaling for streaming workloads. See Optimize the cluster utilization of Lakeflow Spark Declarative Pipelines with Autoscaling.

How autoscaling behaves

Workspaces on the Premium plan or above use optimized autoscaling. Workspaces on the Standard plan use standard autoscaling.

Optimized autoscaling has the following characteristics:

- Scales up from min to max in 2 steps.

- Can scale down, even if the compute resource is not idle, by looking at the shuffle file state.

- Scales down based on a percentage of current nodes.

- On job compute, scales down if the compute resource is underutilized over the last 40 seconds.

- On all-purpose compute, scales down if the compute resource is underutilized over the last 150 seconds.

- The spark.databricks.aggressiveWindowDownS Spark configuration property specifies, in seconds, how often the compute makes down-scaling decisions. Increasing the value causes the compute to scale down more slowly. The maximum value is 600.
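To slow down scale-down decisions, you set this property as a Spark configuration entry. The sketch below builds that entry and enforces the documented maximum of 600. The lower bound of 1 second is an assumption of this sketch, not a documented limit.

```python
MAX_WINDOW_DOWN_S = 600  # documented maximum for this property


def window_down_conf(seconds):
    """Return a spark_conf entry that sets how often down-scaling decisions are made."""
    # The lower bound of 1 second is an assumption made by this sketch.
    if not 1 <= seconds <= MAX_WINDOW_DOWN_S:
        raise ValueError(f"expected 1..{MAX_WINDOW_DOWN_S} seconds, got {seconds}")
    # Spark configuration values are passed as strings.
    return {"spark.databricks.aggressiveWindowDownS": str(seconds)}


print(window_down_conf(300))
```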

Standard autoscaling is used in standard plan workspaces. Standard autoscaling has the following characteristics:

- Starts by adding 8 nodes. Then scales up exponentially, taking as many steps as required to reach the max.

- Scales down when 90% of the nodes are not busy for 10 minutes and the compute has been idle for at least 30 seconds.

- Scales down exponentially, starting with 1 node.

Autoscaling with pools

If you are attaching your compute resource to a pool, consider the following:

- Make sure the compute size requested is less than or equal to the minimum number of idle instances in the pool. If it is larger, compute startup time will be equivalent to compute that doesn't use a pool.

- Make sure the maximum compute size is less than or equal to the maximum capacity of the pool. If it is larger, the compute creation will fail.
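The two checks above reduce to simple comparisons, sketched here in Python. Several choices in the sketch are assumptions: it treats the size requested at startup as the autoscaling minimum, and it counts worker instances only. The parameter names are hypothetical.

```python
def check_pool_fit(min_workers, max_workers, pool_min_idle, pool_max_capacity):
    """Return warnings for autoscaling compute attached to a pool.

    Assumes the size requested at startup is min_workers and counts
    worker instances only.
    """
    warnings = []
    if min_workers > pool_min_idle:
        warnings.append("startup time will match compute that doesn't use a pool")
    if max_workers > pool_max_capacity:
        warnings.append("compute creation will fail: max size exceeds pool capacity")
    return warnings


print(check_pool_fit(min_workers=2, max_workers=8, pool_min_idle=4, pool_max_capacity=10))
```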

Autoscaling example

If you reconfigure a static compute resource to autoscale, Databricks immediately resizes the compute resource within the minimum and maximum bounds and then starts autoscaling. As an example, the following table demonstrates what happens to a compute resource with a certain initial size if you reconfigure the compute resource to autoscale between 5 and 10 nodes.

| Initial size | Size after reconfiguration |
|---|---|
| 6 | 6 |
| 12 | 10 |
| 3 | 5 |
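The table follows from clamping the current size into the new autoscaling range. The following short Python sketch reproduces it. The function name is hypothetical.

```python
def size_after_reconfiguration(initial_size, min_workers, max_workers):
    """Clamp the current size into the new autoscaling range [min_workers, max_workers]."""
    return max(min_workers, min(initial_size, max_workers))


for initial in (6, 12, 3):
    print(initial, size_after_reconfiguration(initial, 5, 10))
# prints:
# 6 6
# 12 10
# 3 5
```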

Advanced performance settings

The following settings appear under the Advanced performance section in the simple form compute UI.

Spot instances

You can specify whether to use spot instances by selecting the Use spot instances checkbox under Advanced performance. See AWS spot pricing.

The first instance will always be on-demand (the driver node is always on-demand) and subsequent instances will be spot instances.
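In the Clusters API, this behavior is assumed to be expressed through aws_attributes. The sketch relies on two fields. The first_on_demand field is the number of leading nodes, driver first, placed on on-demand instances. The availability field sets the purchase option for the rest. Using SPOT_WITH_FALLBACK as the availability value is an illustrative choice, not necessarily what the checkbox sets.

```python
def node_purchase_plan(num_workers, first_on_demand=1):
    """Count on-demand versus spot nodes; nodes are ordered driver first."""
    total_nodes = num_workers + 1  # one driver plus the workers
    on_demand = min(first_on_demand, total_nodes)
    return {"on_demand": on_demand, "spot": total_nodes - on_demand}


# Illustrative request-body fragment; verify the accepted values in the API reference.
aws_attributes = {
    "first_on_demand": 1,  # the driver is always on-demand
    "availability": "SPOT_WITH_FALLBACK",
}

print(node_purchase_plan(num_workers=4))  # {'on_demand': 1, 'spot': 4}
```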

Automatic termination

You can set auto termination for compute under the Advanced performance section. During compute creation, specify an inactivity period in minutes after which you want the compute resource to terminate.

If the difference between the current time and the last command run on the compute resource is more than the inactivity period specified, Databricks automatically terminates that compute resource. For more information on compute termination, see Terminate a compute.
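The termination rule compares elapsed idle time with the configured period. Below is a minimal sketch using Python's datetime module. The function name is hypothetical. In the Clusters API, the period is assumed to be the autotermination_minutes field.

```python
from datetime import datetime, timedelta


def should_auto_terminate(last_command_at, now, inactivity_minutes):
    """True once the time since the last command exceeds the inactivity period."""
    return now - last_command_at > timedelta(minutes=inactivity_minutes)


last_run = datetime(2024, 1, 1, 9, 0)
print(should_auto_terminate(last_run, datetime(2024, 1, 1, 9, 45), 60))  # False
print(should_auto_terminate(last_run, datetime(2024, 1, 1, 10, 5), 60))  # True
```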

Driver type

You can select the driver type under the Advanced performance section. The driver node maintains state information of all notebooks attached to the compute resource. The driver node also maintains the SparkContext, interprets all the commands you run from a notebook or a library on the compute resource, and runs the Apache Spark master that coordinates with the Spark executors.

The default value of the driver node type is the same as the worker node type. You can choose a larger driver node type with more memory if you plan to collect() a lot of data from Spark workers and analyze it in the notebook.

Since the driver node maintains all of the state information of the notebooks attached, make sure to detach unused notebooks from the driver node.

Tags

Tags allow you to easily monitor the cost of compute resources used by various groups in your organization. Specify tags as key-value pairs when you create compute, and Databricks applies these tags to cloud resources like VMs and disk volumes, as well as the Databricks usage logs.

For compute launched from pools, the custom tags are only applied to DBU usage reports and do not propagate to cloud resources.

For detailed information about how pool and compute tag types work together, see Use tags to attribute and track usage.

To add tags to your compute resource:

- In the Tags section, add a key-value pair for each custom tag.

- Click Add.

Advanced settings

The following settings appear under the Advanced section of the simple form compute UI:

- Access modes

- Instance profiles

- Availability zones

- AWS Capacity Blocks

- Enable autoscaling local storage

- EBS volumes

- Local disk encryption

- Spark configuration

- Environment variables

- Compute log delivery

Access modes

Access mode is a security feature that determines who can use the compute resource and the data they can access using the compute resource. Every compute resource in Databricks has an access mode. Access mode settings are found under the Advanced section of the simple form compute UI.

Access mode selection is Auto by default, meaning the access mode is automatically chosen for you based on your selected Databricks Runtime. Auto defaults to Standard unless a machine learning runtime or a Databricks Runtime version lower than 14.3 is selected, in which case Dedicated is used.
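The Auto rule can be written as a small decision function. This Python sketch takes two inputs: the runtime as a hypothetical "major.minor" string such as "15.4", and a flag for machine learning runtimes. It mirrors the rule stated above and is not an official Databricks API.

```python
def auto_access_mode(runtime_version, is_ml_runtime=False):
    """Resolve the Auto access mode for a runtime given as "major.minor"."""
    major, minor = (int(part) for part in runtime_version.split(".")[:2])
    if is_ml_runtime or (major, minor) < (14, 3):
        return "Dedicated"
    return "Standard"


print(auto_access_mode("15.4"))                      # Standard
print(auto_access_mode("13.3"))                      # Dedicated
print(auto_access_mode("15.4", is_ml_runtime=True))  # Dedicated
```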

Databricks recommends that you use standard access mode unless your required functionality is not supported.

| Access Mode | Description | Supported Languages |
|---|---|---|
| Standard | Can be used by multiple users with data isolation among users. | Python, SQL, Scala |
| Dedicated | Can be assigned to and used by a single user or group. | Python, SQL, Scala, R |

For detailed information about the functionality support for each of these access modes, see Standard compute requirements and limitations and Dedicated compute requirements and limitations.

In Databricks Runtime 13.3 LTS and above, init scripts and libraries are supported by all access modes. Requirements and levels of support vary. See Where can init scripts be installed? and Compute-scoped libraries.

Instance profiles

Databricks recommends using Unity Catalog external locations to connect to S3 instead of instance profiles. Unity Catalog simplifies the security and governance of your data by providing a central place to administer and audit data access across multiple workspaces in your account. See Connect to cloud object storage using Unity Catalog.

To securely access AWS resources without using AWS keys, you can launch Databricks compute with instance profiles. See Tutorial: Configure S3 access with an instance profile for information about how to create and configure instance profiles. Once you have created an instance profile, you select it in the Instance Profile drop-down list.

After you launch your compute resource, verify that you can access the S3 bucket using the following command. If the command succeeds, that compute resource can access the S3 bucket.

dbutils.fs.ls("s3a://<s3-bucket-name>/")

Once a compute resource launches with an instance profile, anyone who has attach permissions to this compute resource can access the underlying resources controlled by this role. To guard against unwanted access, use Compute permissions to restrict permissions to the compute resource.

Availability zones

To find the availability zone setting, open the Advanced section and click the Instances tab. This setting lets you specify which availability zone (AZ) you want the compute resource to use. By default, this setting is set to auto, where the AZ is automatically selected based on available IPs in the workspace subnets. Auto-AZ retries in other availability zones if AWS returns insufficient capacity errors.

Auto-AZ works only at compute startup. After the compute resource launches, all the nodes stay in the original availability zone until the compute resource is terminated or restarted.

Choosing a specific AZ for the compute resource is useful primarily if your organization has purchased reserved instances in specific availability zones. Read more about AWS availability zones in the AWS documentation.

HA (high availability zone) is a deprecated AZ setting. HA resolves to auto in the background.

AWS Capacity Blocks

AWS Capacity Blocks allow you to reserve compute capacity for a specific time in a specific AZ. To use Capacity Blocks with Databricks compute, configure your compute resource with a specific tag and availability zone.

- Purchase a Capacity Block in your AWS portal. For details, see the AWS documentation on purchasing a Capacity Block.

- Ensure the total number of instances is sufficient for your expected usage.

- Note the availability zone AWS assigns to your Capacity Block reservation.

- Verify your workspace has a subnet in the same availability zone as the Capacity Block.

- Copy the Capacity Block ID from AWS for use in the Databricks configuration.

- When the Capacity Block reservation activation time comes, create a Databricks compute resource with the following settings:

  - Add a tag with the following:

    - Key: X-Databricks-AwsCapacityBlockId

    - Value: Your Capacity Block ID from AWS

  - Configure all nodes to use on-demand instances: under Advanced performance, clear the Use spot instances checkbox.

  - Under Advanced > Instances, select the specific availability zone assigned to your Capacity Block.

- Complete your compute configuration and click Create.

Capacity Blocks must be active before you can launch compute resources using them. Check your Capacity Block reservation activation time on the AWS portal.
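If you create this compute through the Clusters API instead of the UI, the tag, on-demand, and availability zone settings map to request-body fields. The sketch below assumes the custom_tags field and the aws_attributes fields availability and zone_id. The Capacity Block ID and zone are hypothetical placeholders.

```python
CAPACITY_BLOCK_TAG = "X-Databricks-AwsCapacityBlockId"


def capacity_block_settings(capacity_block_id, zone_id):
    """Request-body fragment for compute that runs on an AWS Capacity Block."""
    return {
        "custom_tags": {CAPACITY_BLOCK_TAG: capacity_block_id},
        "aws_attributes": {
            # All nodes on-demand, the API counterpart of clearing "Use spot instances".
            "availability": "ON_DEMAND",
            # Must be the availability zone AWS assigned to the Capacity Block.
            "zone_id": zone_id,
        },
    }


# Hypothetical reservation ID and zone.
print(capacity_block_settings("cr-0123456789abcdef0", "us-east-1a"))
```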

Enable autoscaling local storage

To configure Enable autoscaling local storage, open the Advanced section and click the Instances tab.

If you don't want to allocate a fixed number of EBS volumes at compute creation time, use autoscaling local storage. With autoscaling local storage, Databricks monitors the amount of free disk space available on your compute's Spark workers. If a worker begins to run too low on disk, Databricks automatically attaches a new EBS volume to the worker before it runs out of disk space. EBS volumes are attached up to a limit of 5 TB of total disk space per instance (including the instance's local storage).

The EBS volumes attached to an instance are detached only when the instance is returned to AWS. That is, EBS volumes are never detached from an instance as long as it is part of a running compute resource. To scale down EBS usage, Databricks recommends using this feature on compute configured with autoscaling or automatic termination.

Databricks uses Amazon EBS GP3 volumes to extend the local storage of an instance. The default AWS capacity limit for these volumes is 50 TiB. To avoid hitting this limit, administrators should request an increase in this limit based on their usage requirements.

EBS volumes

This section describes the default EBS volume settings for worker nodes, how to add shuffle volumes, and how to configure compute so that Databricks automatically allocates EBS volumes.

To configure EBS volumes, your compute must not be enabled for autoscaling local storage. Click the Instances tab under Advanced in the compute configuration and select an option in the EBS Volume Type dropdown list.

Default EBS volumes

Databricks provisions EBS volumes for every worker node as follows:

- A 30 GB encrypted EBS instance root volume used by the host operating system and Databricks internal services.

- A 150 GB encrypted EBS container root volume used by the Spark worker. This hosts Spark services and logs.

- (HIPAA only) a 75 GB encrypted EBS worker log volume that stores logs for Databricks internal services.
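These defaults make it straightforward to estimate the baseline EBS footprint of your workers when checking AWS EBS limits. The following Python sketch shows that arithmetic. It counts only the default per-worker volumes listed above, not shuffle or autoscaling volumes.

```python
INSTANCE_ROOT_GB = 30     # host OS and Databricks internal services
CONTAINER_ROOT_GB = 150   # Spark worker services and logs
HIPAA_LOG_GB = 75         # HIPAA-only worker log volume


def default_ebs_gb(num_workers, hipaa=False):
    """Total default EBS capacity, in GB, provisioned across all worker nodes."""
    per_worker = INSTANCE_ROOT_GB + CONTAINER_ROOT_GB
    if hipaa:
        per_worker += HIPAA_LOG_GB
    return num_workers * per_worker


print(default_ebs_gb(10))              # 1800
print(default_ebs_gb(10, hipaa=True))  # 2550
```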

Add EBS shuffle volumes

To add shuffle volumes, select General Purpose SSD in the EBS Volume Type dropdown list.

By default, Spark shuffle outputs go to the instance local disk. For instance types that do not have a local disk, or if you want to increase your Spark shuffle storage space, you can specify additional EBS volumes. This is particularly useful to prevent out-of-disk space errors when you run Spark jobs that produce large shuffle outputs.

Databricks encrypts these EBS volumes for both on-demand and spot instances. Read more about AWS EBS volumes.

Optionally encrypt Databricks EBS volumes with a customer-managed key

Optionally, you can encrypt compute EBS volumes with a customer-managed key.

See Customer-managed keys for encryption.

AWS EBS limits

Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all workers in all your deployed compute. For information on the default EBS limits and how to change them, see Amazon Elastic Block Store (EBS) Limits.

AWS EBS SSD volume type

Select either gp2 or gp3 for your AWS EBS SSD volume type. To do this, see Manage SSD storage. Databricks recommends you switch to gp3 for its cost savings compared to gp2.

By default, the Databricks configuration sets the gp3 volume's IOPS and throughput to match the maximum performance of a gp2 volume with the same volume size.

For technical information about gp2 and gp3, see Amazon EBS volume types.

Local disk encryption

This feature is in Public Preview.

Some instance types you use to run compute may have locally attached disks. Databricks may store shuffle data or ephemeral data on these locally attached disks. To ensure that all data at rest is encrypted for all storage types, including shuffle data that is stored temporarily on your compute resource's local disks, you can enable local disk encryption.

Your workloads may run more slowly because of the performance impact of reading and writing encrypted data to and from local volumes.

When local disk encryption is enabled, Databricks generates an encryption key locally that is unique to each compute node and is used to encrypt all data stored on local disks. The scope of the key is local to each compute node and is destroyed along with the compute node itself. During its lifetime, the key resides in memory for encryption and decryption and is stored encrypted on the disk.

To enable local disk encryption, you must use the Clusters API. During compute creation or edit, set enable_local_disk_encryption to true.
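The following minimal Python sketch adds that field to an existing request body without mutating the original. The other fields shown are hypothetical placeholders.

```python
import json


def with_local_disk_encryption(request_body):
    """Return a copy of a create or edit request body with local disk encryption on."""
    updated = dict(request_body)  # shallow copy; the caller's dict is left unchanged
    updated["enable_local_disk_encryption"] = True
    return updated


base = {"cluster_name": "example", "spark_version": "15.4.x-scala2.12", "num_workers": 2}
print(json.dumps(with_local_disk_encryption(base), indent=2))
```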

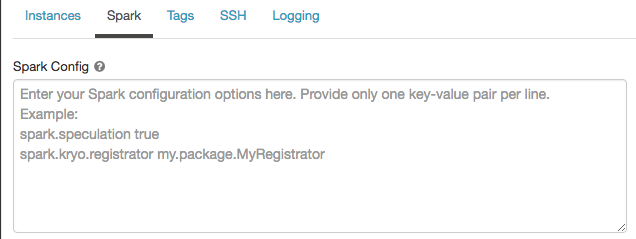

Spark configuration

To fine-tune Spark jobs, you can provide custom Spark configuration properties.

- On the compute configuration page, click the Advanced toggle.

- Click the Spark tab.

- In Spark config, enter the configuration properties as one key-value pair per line.

When you configure compute using the Clusters API, set Spark properties in the spark_conf field in the Create cluster API or Update cluster API.

To enforce Spark configurations on compute, workspace admins can use compute policies.
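You may keep a Spark config written for the UI, with one key-value pair per line, and want to reuse it with the API. In that case you can convert it to a spark_conf dictionary. This Python sketch assumes that each key is separated from its value by whitespace. The function name and example properties are illustrative.

```python
def parse_spark_config(text):
    """Convert UI-style Spark config text into a spark_conf dictionary."""
    conf = {}
    for line_number, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.strip()
        if not line:
            continue  # ignore blank lines
        parts = line.split(None, 1)  # split on the first run of whitespace
        if len(parts) != 2:
            raise ValueError(f"line {line_number}: expected '<key> <value>'")
        key, value = parts
        conf[key] = value
    return conf


ui_text = """
spark.speculation true
spark.sql.shuffle.partitions 200
"""
print(parse_spark_config(ui_text))
```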

Retrieve a Spark configuration property from a secret

Databricks recommends storing sensitive information, such as passwords, in a secret instead of plaintext. To reference a secret in the Spark configuration, use the following syntax:

spark.<property-name> {{secrets/<scope-name>/<secret-name>}}

For example, to set a Spark configuration property called password to the value of the secret stored in secrets/acme-app/password:

spark.password {{secrets/acme-app/password}}

For more information, see Manage secrets.
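A small Python helper that builds and parses this reference syntax can help avoid typos in generated configurations. The helper names are hypothetical. The parser only accepts the exact {{secrets/<scope-name>/<secret-name>}} form shown above.

```python
import re

SECRET_REF = re.compile(r"^\{\{secrets/([^/{}\s]+)/([^/{}\s]+)\}\}$")


def secret_ref(scope, key):
    """Format a secret reference such as {{secrets/acme-app/password}}."""
    return "{{secrets/" + scope + "/" + key + "}}"


def spark_secret_line(prop, scope, key):
    """Build a Spark config line whose value is read from a secret."""
    if not prop.startswith("spark."):
        raise ValueError("Spark configuration properties start with 'spark.'")
    return f"{prop} {secret_ref(scope, key)}"


def parse_secret_ref(value):
    """Return (scope, key) for a secret reference, or None for a plain value."""
    match = SECRET_REF.match(value)
    return match.groups() if match else None


print(spark_secret_line("spark.password", "acme-app", "password"))
# spark.password {{secrets/acme-app/password}}
```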

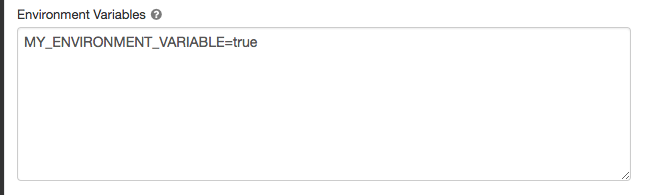

Environment variables

Configure custom environment variables that you can access from init scripts running on the compute resource. Databricks also provides predefined environment variables that you can use in init scripts. You cannot override these predefined environment variables.

- On the compute configuration page, click the Advanced toggle.

- Click the Spark tab.

- Set the environment variables in the Environment variables field.

You can also set environment variables using the spark_env_vars field in the Create cluster API or Update cluster API.
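Below is a minimal Python sketch of the spark_env_vars field. It restricts names to letters, digits, and underscores. That restriction is an assumption made here so that init scripts written in a shell can read the variables, not a documented rule. The variable names shown are hypothetical.

```python
import re

VALID_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def env_vars_field(variables):
    """Build the spark_env_vars field, converting every value to a string."""
    for name in variables:
        # Shell-safe names: an assumption of this sketch, not a documented rule.
        if not VALID_NAME.match(name):
            raise ValueError(f"invalid environment variable name: {name!r}")
    return {"spark_env_vars": {name: str(value) for name, value in variables.items()}}


print(env_vars_field({"APP_ENV": "staging", "BATCH_SIZE": 500}))
```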

Compute log delivery

When you create an all-purpose or job compute resource, you can specify a location to deliver the cluster logs for the Spark driver node, worker nodes, and events. Logs are delivered every five minutes and archived hourly in your chosen destination. Databricks delivers all logs generated up until the compute resource is terminated.

To configure the log delivery location:

- On the compute page, click the Advanced toggle.

- Click the Logging tab.

- Select a destination type.

- Enter the Log path.

To store the logs, Databricks creates a subfolder in your chosen log path named after the compute's cluster_id.

For example, if the specified log path is /Volumes/catalog/schema/volume, logs for cluster ID 06308418893214 are delivered to:

/Volumes/catalog/schema/volume/06308418893214
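The delivery folder is the log path joined with the cluster ID. The following Python sketch uses posixpath, which keeps forward slashes on every platform. The function name is hypothetical.

```python
import posixpath


def cluster_log_dir(log_path, cluster_id):
    """Folder that receives logs for one compute resource."""
    # Remove any trailing slashes before joining.
    return posixpath.join(log_path.rstrip("/"), cluster_id)


print(cluster_log_dir("/Volumes/catalog/schema/volume", "06308418893214"))
# /Volumes/catalog/schema/volume/06308418893214
```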

Delivering logs to volumes is in Public Preview and is only supported on Unity Catalog-enabled compute with Standard or Dedicated access mode. In Standard access mode, verify the cluster owner can upload files to the volume. In Dedicated access mode, ensure the assigned user or group can upload files to the volume. See the Create, delete, or update files operation in Privileges for Unity Catalog volumes.

S3 bucket destinations

If you choose an S3 destination, you must configure the compute resource with an instance profile that can access the bucket.

This instance profile must have both the PutObject and PutObjectAcl permissions. The following example IAM policy is included for your convenience. See Tutorial: Configure S3 access with an instance profile for instructions on how to set up an instance profile.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::<my-s3-bucket>"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:PutObjectAcl", "s3:GetObject", "s3:DeleteObject"],
      "Resource": ["arn:aws:s3:::<my-s3-bucket>/*"]
    }
  ]
}

This feature is also available in the REST API. See the Clusters API.
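Before attaching an instance profile, you may want to confirm that its policy grants the two required actions on the bucket's objects. The Python sketch below parses a policy document like the S3 example and checks only exact Resource ARN matches in Allow statements. It ignores wildcards, Deny statements, and conditions. It is a rough sanity check, not a replacement for IAM policy evaluation. The bucket name is a hypothetical placeholder.

```python
import json

REQUIRED_ACTIONS = {"s3:PutObject", "s3:PutObjectAcl"}


def _as_list(value):
    """IAM allows either a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def allowed_object_actions(policy_json, bucket):
    """Collect actions that Allow statements grant on objects in the bucket."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    object_arn = f"arn:aws:s3:::{bucket}/*"
    actions = set()
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        if object_arn in _as_list(statement.get("Resource", [])):
            actions.update(_as_list(statement.get("Action", [])))
    return actions


def can_deliver_logs(policy_json, bucket):
    """True if PutObject and PutObjectAcl are granted on the bucket's objects."""
    return REQUIRED_ACTIONS <= allowed_object_actions(policy_json, bucket)


example_policy = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:ListBucket"],
         "Resource": ["arn:aws:s3:::my-log-bucket"]},
        {"Effect": "Allow",
         "Action": ["s3:PutObject", "s3:PutObjectAcl", "s3:GetObject", "s3:DeleteObject"],
         "Resource": ["arn:aws:s3:::my-log-bucket/*"]},
    ],
})
print(can_deliver_logs(example_policy, "my-log-bucket"))  # True
```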