Optimize the cluster utilization of Lakeflow Spark Declarative Pipelines with Autoscaling

This article discusses how to use enhanced autoscaling to optimize your pipelines on Databricks.

Enhanced autoscaling is enabled by default for all new pipelines. Serverless pipelines also use vertical autoscaling. See What is vertical autoscaling?.

For serverless pipelines, enhanced autoscaling is always on and cannot be disabled. See Configure a serverless pipeline.

What is enhanced autoscaling?

Databricks enhanced autoscaling optimizes cluster utilization by automatically allocating cluster resources based on workload volume, with minimal impact on the data processing latency of your pipelines.

Enhanced autoscaling improves on the Databricks cluster autoscaling functionality with the following features:

- Enhanced autoscaling optimizes streaming workloads and improves the performance of batch workloads. It reduces costs by adding or removing machines as the workload changes.

- Enhanced autoscaling proactively shuts down under-utilized nodes while guaranteeing there are no failed tasks during shutdown. The existing cluster autoscaling feature scales down a node only if it is idle.

Enhanced autoscaling is the default autoscaling mode when you create a new pipeline in the pipelines UI. You can enable enhanced autoscaling for existing pipelines by editing the pipeline settings in the UI. You can also enable enhanced autoscaling when you create or edit pipelines with the pipelines REST API.

Which metrics does enhanced autoscaling use to make a scale-up or scale-down decision?

Enhanced autoscaling uses two metrics to decide on scaling up or scaling down:

- Task slot utilization: This is the average ratio of the number of busy task slots to the total task slots available in the cluster.

- Task queue size: This is the number of tasks waiting to be executed in task slots.
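The two metrics above can be sketched in a few lines of Python. This is only an illustration of the definitions: the `ClusterSnapshot` structure, the `suggest_action` rule, and the `low_utilization` threshold are assumptions made for this example and do not describe how Databricks actually makes scaling decisions.

```python
from dataclasses import dataclass


@dataclass
class ClusterSnapshot:
    """One observation of the cluster (hypothetical structure for illustration)."""
    busy_task_slots: int
    total_task_slots: int
    queued_tasks: int


def task_slot_utilization(snapshots):
    """Average ratio of busy task slots to total task slots across snapshots."""
    ratios = [s.busy_task_slots / s.total_task_slots for s in snapshots]
    return sum(ratios) / len(ratios)


def task_queue_size(snapshots):
    """Number of tasks waiting for a task slot in the most recent snapshot."""
    return snapshots[-1].queued_tasks


def suggest_action(snapshots, low_utilization=0.5):
    """Toy decision rule; the threshold is an assumption, not a Databricks value."""
    if task_queue_size(snapshots) > 0:
        return "scale_up"
    if task_slot_utilization(snapshots) < low_utilization:
        return "scale_down"
    return "hold"


snapshots = [
    ClusterSnapshot(busy_task_slots=8, total_task_slots=16, queued_tasks=0),
    ClusterSnapshot(busy_task_slots=4, total_task_slots=16, queued_tasks=0),
]
print(task_slot_utilization(snapshots))  # 0.375
print(suggest_action(snapshots))         # scale_down
```

In this toy rule, a non-empty task queue suggests adding workers, while an empty queue combined with low average slot utilization suggests removing them.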

Enable enhanced autoscaling for a pipeline

Enhanced autoscaling is the default autoscaling mode when you create a new pipeline in the pipelines UI. You can enable enhanced autoscaling for existing pipelines by editing the pipeline settings in the UI. You can also enable enhanced autoscaling when you edit a pipeline with the Lakeflow Pipelines Editor.

To use enhanced autoscaling, do one of the following:

- Set Cluster mode to Enhanced autoscaling when editing pipeline settings in the Lakeflow Pipelines Editor.

- Add the autoscale setting to the pipeline cluster configuration and set the mode field to ENHANCED. See Configure classic compute for pipelines.

Use the following guidelines when configuring enhanced autoscaling for production pipelines:

- Leave the

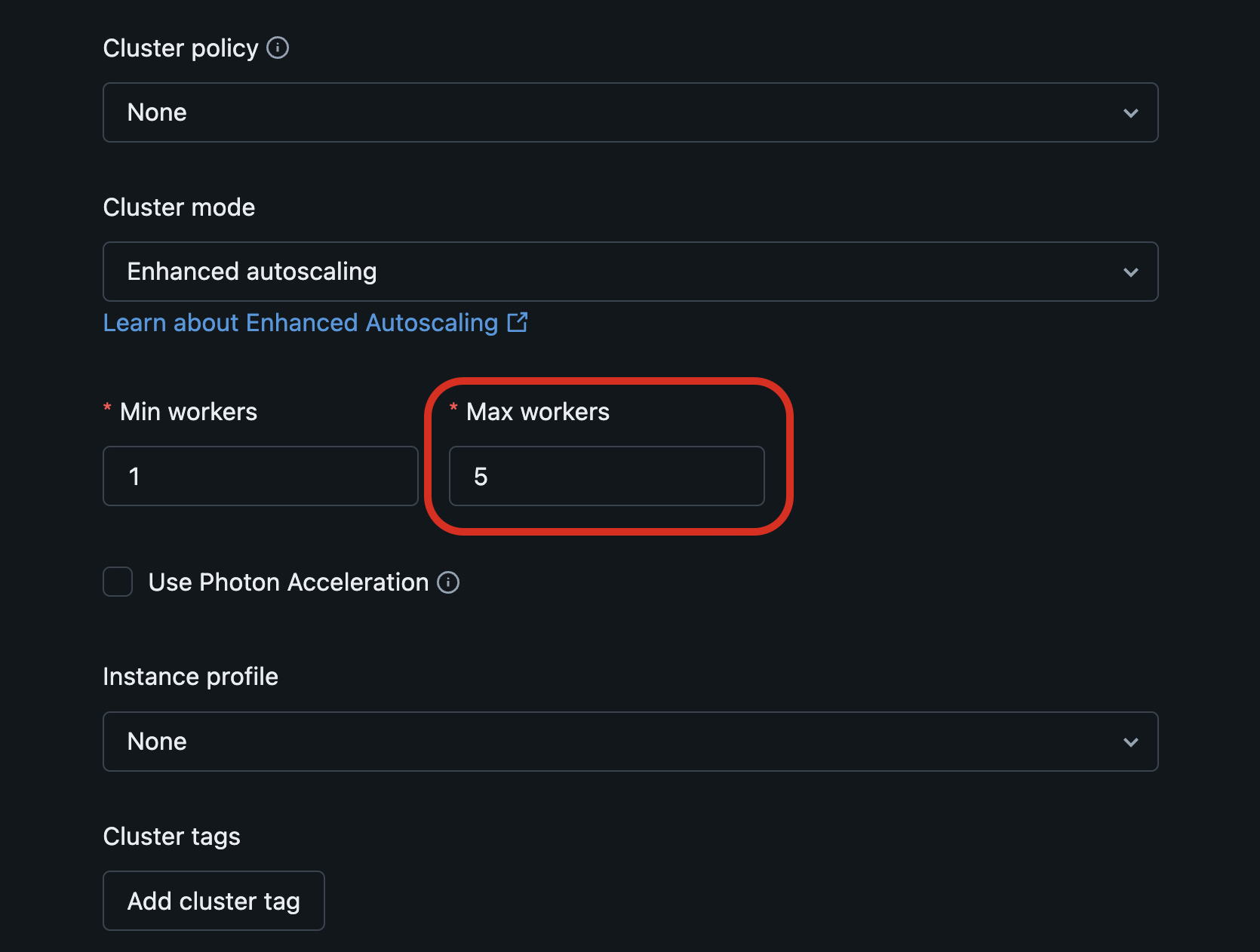

Min workerssetting at the default. - Set the

Max workerssetting to a value based on budget and pipeline priority.

The following example configures an enhanced autoscaling cluster with a minimum of 5 workers and a maximum of 10 workers. max_workers must be greater than or equal to min_workers.

- Enhanced autoscaling is available for updates clusters only. Legacy autoscaling is used for maintenance clusters.

- The autoscale configuration has two modes:

  - LEGACY: Use cluster autoscaling.

  - ENHANCED: Use enhanced autoscaling.

{
  "clusters": [
    {
      "autoscale": {
        "min_workers": 5,
        "max_workers": 10,
        "mode": "ENHANCED"
      }
    }
  ]
}
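If you generate pipeline settings programmatically, it can help to validate the autoscale block before submitting it. The following is a minimal sketch: the helper name `build_autoscale_cluster` is hypothetical, and it checks only the two constraints stated in this article (the allowed modes, and max_workers being greater than or equal to min_workers).

```python
import json

# The two autoscale modes described in this article.
VALID_MODES = {"LEGACY", "ENHANCED"}


def build_autoscale_cluster(min_workers, max_workers, mode="ENHANCED"):
    """Return a settings fragment with one autoscaling cluster (hypothetical helper)."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}, got {mode!r}")
    if max_workers < min_workers:
        raise ValueError("max_workers must be greater than or equal to min_workers")
    return {
        "clusters": [
            {
                "autoscale": {
                    "min_workers": min_workers,
                    "max_workers": max_workers,
                    "mode": mode,
                }
            }
        ]
    }


settings = build_autoscale_cluster(min_workers=5, max_workers=10)
print(json.dumps(settings, indent=2))
```

Calling the helper with `min_workers=5` and `max_workers=10` produces the same fragment as the example configuration, and an invalid range raises an error before any request is sent.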

If the pipeline is configured for continuous execution, it restarts automatically after the autoscaling configuration changes. Expect a short period of increased latency after the restart. After that period, the cluster size is updated based on your autoscale configuration, and pipeline latency should return to its previous level.

Limit costs for pipelines that use enhanced autoscaling

You cannot configure workers for serverless pipelines.

Setting the Max workers parameter in the pipelines Compute pane sets an upper bound for autoscaling. Reducing the number of available workers might increase latency for some workloads but prevents compute resource costs from bursting during compute-intensive operations.

Databricks recommends tuning the Max workers setting to balance the cost-latency trade-off for your particular needs.
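The trade-off can be illustrated with a small calculation. In this sketch, the hourly demand figures are invented for illustration, and the model (each hour the autoscaler is granted the smaller of the desired worker count and Max workers) is a simplifying assumption rather than a description of the real autoscaler.

```python
def capped_usage(desired_workers_per_hour, max_workers):
    """Return (worker-hours granted, worker-hours of unmet demand) under a cap."""
    granted = [min(d, max_workers) for d in desired_workers_per_hour]
    unmet = [d - g for d, g in zip(desired_workers_per_hour, granted)]
    return sum(granted), sum(unmet)


# Hypothetical number of workers the workload would like in each hour.
demand = [2, 4, 12, 20, 6]

for cap in (8, 16):
    worker_hours, unmet = capped_usage(demand, cap)
    print(f"max_workers={cap}: worker_hours={worker_hours}, unmet={unmet}")
```

A lower cap bounds the worker-hours you pay for, while the unmet demand is work that has to wait, which shows up as higher latency.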

Monitor enhanced autoscaling enabled classic pipelines

You can use the event log in the pipeline user interface to monitor enhanced autoscaling metrics for classic pipelines. Enhanced autoscaling events have the autoscale event type. The following are example events:

| Event | Message |
|---|---|
| Cluster resize request started | |
| Cluster resize request succeeded | |
| Cluster resize request partially succeeded | |
| Cluster resize request failed | |

You can also view enhanced autoscaling events by directly querying the event log:

- To query the event log for backlog metrics, see Monitor data backlog for optimizing streaming duration.

- To monitor cluster resizing requests and responses during enhanced autoscaling operations, see Monitor autoscaling events for optimizing classic compute.
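As a rough illustration of this kind of monitoring, the sketch below filters exported event records down to the autoscale event type and counts resize outcomes. The records, the `event_type` and `event` field names, and the helper functions are assumptions made for this example; consult the linked articles for the actual event log schema and queries.

```python
from collections import Counter

# Hypothetical records: field names and values are assumptions for
# illustration and do not reproduce the real event log schema.
events = [
    {"event_type": "autoscale", "event": "Cluster resize request started"},
    {"event_type": "flow_progress", "event": "Flow progress update"},
    {"event_type": "autoscale", "event": "Cluster resize request succeeded"},
    {"event_type": "autoscale", "event": "Cluster resize request started"},
    {"event_type": "autoscale", "event": "Cluster resize request failed"},
]


def autoscale_events(records):
    """Keep only the records whose event type is autoscale."""
    return [r for r in records if r.get("event_type") == "autoscale"]


def summarize(records):
    """Count autoscale events by event name."""
    return Counter(r["event"] for r in autoscale_events(records))


summary = summarize(events)
for name, count in summary.items():
    print(f"{name}: {count}")
```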

What is vertical autoscaling?

Serverless pipelines add to the horizontal autoscaling provided by Databricks enhanced autoscaling by automatically allocating the most cost-efficient instance types that can run your pipeline without failing because of out-of-memory errors. Vertical autoscaling scales up when larger instance types are required to run a pipeline update and scales down when it determines that the update can run on smaller instance types. It determines whether driver nodes, worker nodes, or both should be scaled up or down.

Vertical autoscaling is used for all serverless pipelines, including pipelines used by Databricks SQL materialized views and streaming tables.

Vertical autoscaling works by detecting pipeline updates that have failed because of out-of-memory errors. When these failures are detected, vertical autoscaling allocates larger instance types based on the out-of-memory data collected from the failed update. In production mode, a new update that uses the new compute resources is started automatically. In development mode, the new compute resources are used when you manually start a new update.

If vertical autoscaling detects that the memory of the allocated instances is consistently underutilized, it scales down the instance types used in the next pipeline update.
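The behavior described in this section can be summarized as a simple decision rule. The sketch below is a conceptual model only: the instance size ladder, the `underutilized_below` threshold, and the `next_instance_type` function are hypothetical and do not reflect how Databricks selects instance types.

```python
# Hypothetical ladder of instance sizes, from smallest to largest.
INSTANCE_LADDER = ["small", "medium", "large", "xlarge"]


def next_instance_type(current, failed_with_oom, memory_utilization_history,
                       underutilized_below=0.4):
    """Pick the instance size for the next update (toy model of vertical autoscaling)."""
    index = INSTANCE_LADDER.index(current)
    if failed_with_oom:
        # Scale up after an out-of-memory failure, staying within the ladder.
        return INSTANCE_LADDER[min(index + 1, len(INSTANCE_LADDER) - 1)]
    consistently_low = bool(memory_utilization_history) and all(
        u < underutilized_below for u in memory_utilization_history
    )
    if consistently_low:
        # Scale down when memory is underutilized in every recent observation.
        return INSTANCE_LADDER[max(index - 1, 0)]
    return current


print(next_instance_type("medium", True, []))                 # large
print(next_instance_type("medium", False, [0.2, 0.3, 0.25]))  # small
print(next_instance_type("medium", False, [0.2, 0.7, 0.25]))  # medium
```

Requiring every recent observation to be below the threshold is one way to model "consistently underutilized"; a single high reading keeps the current size.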