Managed evaluation datasets — subject matter expert (SME) user guide

This feature is in Private Preview. To try it, reach out to your Databricks contact.

This page describes how subject matter experts (SMEs) use the managed evaluations UI. The managed evaluations UI is designed to help SMEs do the following:

- Review a set of chats that test different aspects of the AI agent's functionality.

- Provide information to help the AI judge evaluate the AI agent's responses to those chats.

For more information about Mosaic AI Agent Evaluation and the AI judges it provides, see Mosaic AI Agent Evaluation (MLflow 2) and How quality, cost, and latency are assessed by Agent Evaluation (MLflow 2).

Review chats

The first step is to review a set of chats that the developer has provided for testing the AI agent. These chats form the basis of an evaluation set.

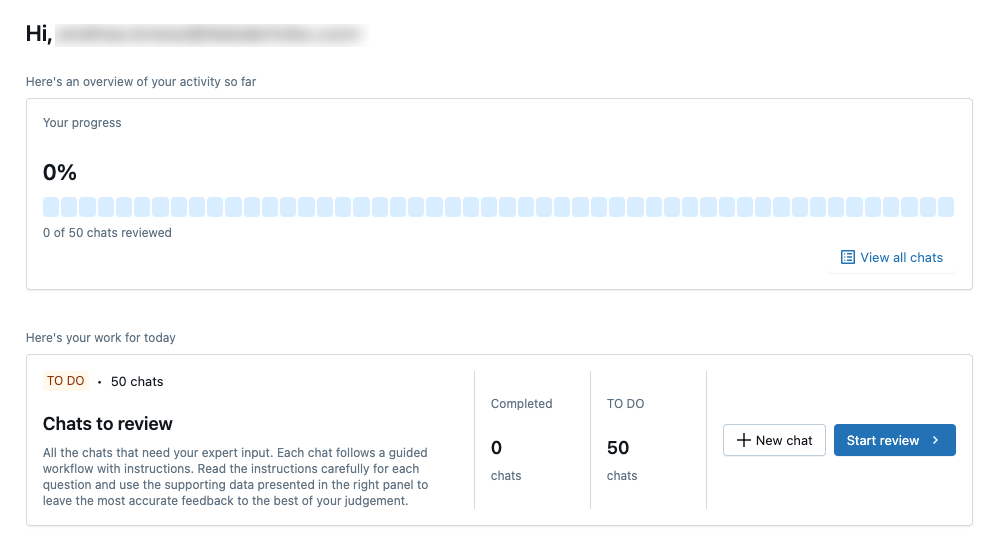

When you click the link to the app, a screen similar to the following appears:

You can see the overall progress of the review. The progress bar shows the number of chats you have reviewed and the total number of chats in the set.

-

Click Start review.

-

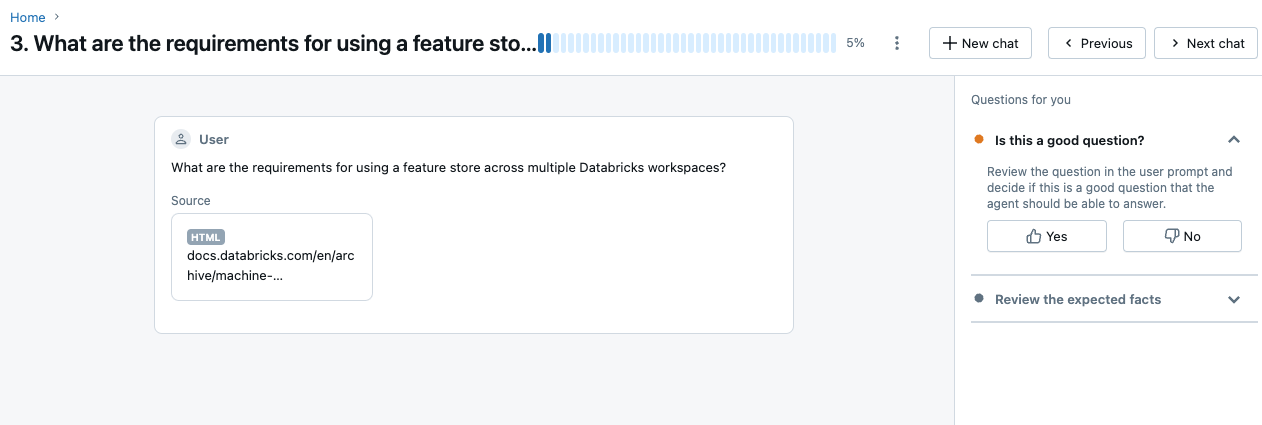

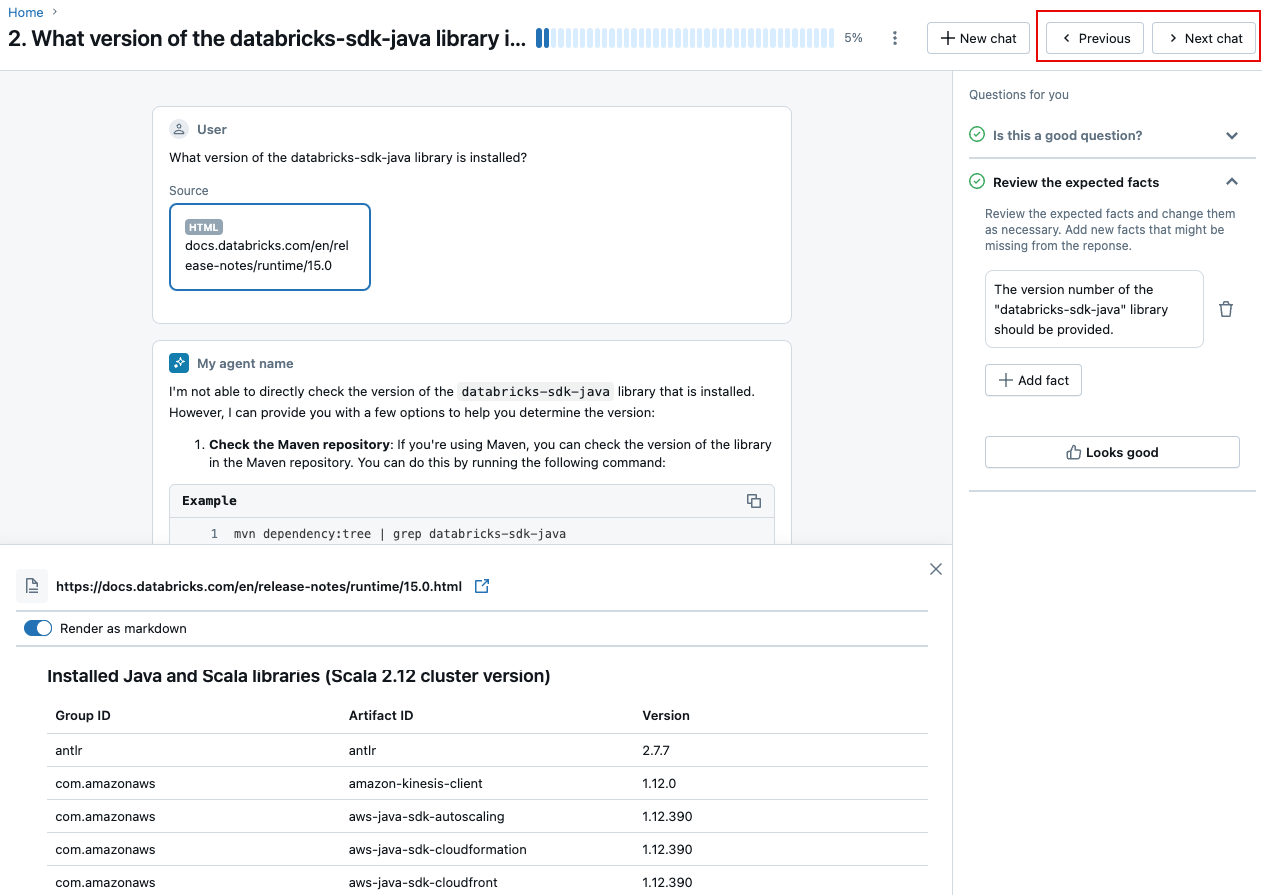

A new page opens, showing the chat interface on the left side, and a list of questions for you to review on the right side.

-

If the chat request is synthesized from a document, you can click the source document card to view the content of the source document.

-

Answer all the questions on the right side of the screen. For more details, see Review a chat. Changes you make are saved automatically.

-

When you are done with the review of this chat:

- It automatically moves to the next chat if there is one.

- To return to the home page, click

at the upper-left of the screen.

- To navigate to the previous or next chat, click Previous or Next chat at the upper-right of the page.

Review a chat

Is this a good question?

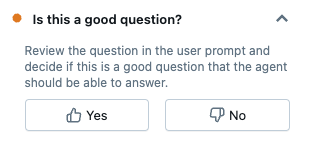

When you review a chat, the first step is to decide whether the question is a good test of the AI agent's capabilities.

If you think the question is not a good test, click No to reject it and skip the rest of the review steps.

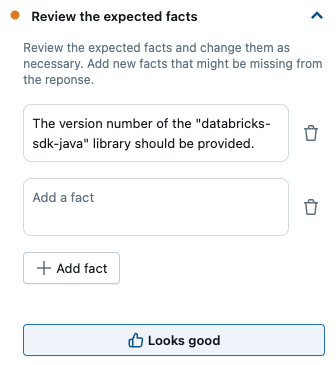

Review the expected facts

In this step, review and edit the list of expected facts that the AI agent should use to answer the question.

- Review the existing facts, if there are any. You can edit the text directly if necessary. To remove a fact, click the trash can icon.

- To add a new fact, click Add fact.

For important guidelines on how to provide expected facts, see expected_facts guidelines.

- When you have completed your review, click Looks good.
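To give a concrete picture of what the reviewed facts might look like as data, here is a minimal Python sketch. It assumes each reviewed chat is stored as a record with a question and a list of expected facts. The class name `ReviewedChat`, the `request` field, the helper methods, and the sample question and facts are all hypothetical illustrations, not the app's actual schema or API. Only the name `expected_facts` comes from this page.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReviewedChat:
    """Hypothetical record for one reviewed chat (not the app's real schema)."""

    request: str  # assumed field name for the question shown in the chat
    expected_facts: List[str] = field(default_factory=list)

    def add_fact(self, text: str) -> None:
        """Rough analogue of 'Add fact': keep non-empty, non-duplicate facts."""
        cleaned = text.strip()
        if cleaned and cleaned not in self.expected_facts:
            self.expected_facts.append(cleaned)

    def remove_fact(self, index: int) -> None:
        """Rough analogue of the trash can icon: drop the fact at this position."""
        del self.expected_facts[index]


# Made-up sample content, for illustration only.
chat = ReviewedChat(
    request="How do I reset my password?",
    expected_facts=["Passwords are reset from the account settings page."],
)
chat.add_fact("A confirmation email is sent after the reset.")
chat.add_fact("   ")  # ignored: empty after stripping whitespace
chat.remove_fact(0)   # remove the first fact
print(chat.expected_facts)
```

In this sketch each fact is one short, self-contained statement, so it can be edited or removed independently of the others, as in the review screen.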