Create an AI agent

This article introduces the process of creating AI agents on Databricks and outlines the available methods for doing so.

To learn more about agents, see Agent system design patterns.

Automatically build an agent with Agent Bricks

Agent Bricks provides a simple way to build and optimize high-quality, domain-specific AI agent systems for common AI use cases. Specify your use case and data, and Agent Bricks automatically builds several AI agent systems that you can then refine further. See Agent Bricks.

Author an agent in code

Mosaic AI Agent Framework and MLflow provide tools to help you author enterprise-ready agents in Python.

Databricks supports authoring agents with third-party libraries such as LangGraph/LangChain, OpenAI, and LlamaIndex, or with custom Python implementations.

To get started quickly, see Get started with AI agents. For more details on authoring agents with different frameworks and advanced features, see Author an AI agent and deploy it on Databricks Apps.
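To make "custom Python implementations" concrete, here is a minimal, framework-free sketch of the loop most agents run: on each step the model either requests a tool call or returns a final answer. The names `stub_llm`, `add_numbers`, `TOOLS`, and `run_agent` are hypothetical. `stub_llm` stands in for a real LLM endpoint call, and the message format is illustrative only; it is not the ResponsesAgent schema or any library's API.

```python
import json
from typing import Callable

# Hypothetical tool. In a real agent this might query a table, call an API, etc.
def add_numbers(a: float, b: float) -> float:
    return a + b

# Registry mapping tool names (as the model refers to them) to Python callables.
TOOLS: dict[str, Callable[..., object]] = {"add_numbers": add_numbers}

def stub_llm(messages: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM call.

    It requests one tool call after a user message, then answers
    using the tool result. A real model would decide this itself.
    """
    last = messages[-1]
    if last["role"] == "user":
        return {"tool_call": {"name": "add_numbers",
                              "arguments": json.dumps({"a": 2, "b": 3})}}
    if last["role"] == "tool":
        return {"content": f"The sum is {last['content']}."}
    return {"content": "I am not sure."}

def run_agent(user_message: str, max_steps: int = 5) -> str:
    """Run the tool-calling loop until the model returns a final answer."""
    messages: list[dict] = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        reply = stub_llm(messages)
        if "tool_call" in reply:
            call = reply["tool_call"]
            args = json.loads(call["arguments"])
            result = TOOLS[call["name"]](**args)
            # Record both the request and the tool output so the model sees them next step.
            messages.append({"role": "assistant", "tool_call": call})
            messages.append({"role": "tool", "content": str(result)})
        else:
            return reply["content"]
    return "Stopped: step limit reached."

print(run_agent("What is 2 + 3?"))  # prints "The sum is 5."
```

The `max_steps` cap is a design choice that keeps a misbehaving model from looping forever; frameworks such as LangGraph provide their own equivalents of this loop.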

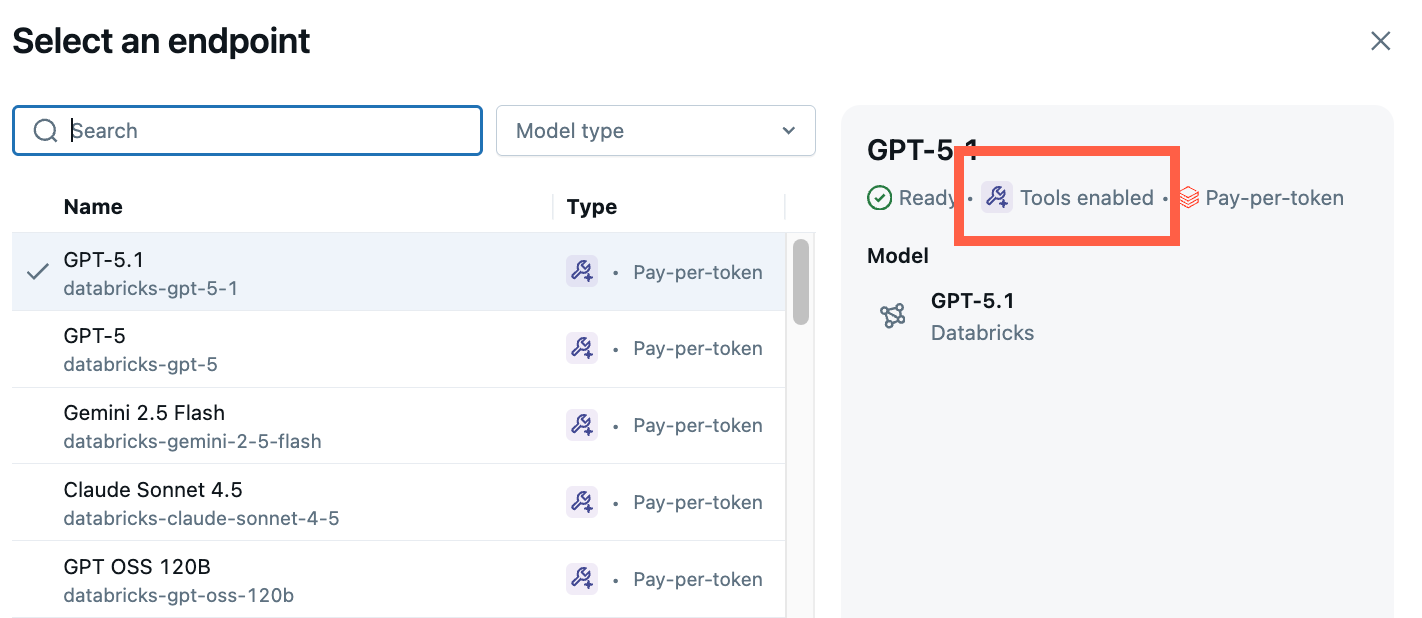

Prototype agents with AI Playground

AI Playground is the easiest way to create an agent on Databricks. It lets you select from various LLMs and quickly add tools to the LLM using a low-code UI. You can chat with the agent to test its responses, then export the agent to code for deployment or further development.

See Get started: Query LLMs and prototype AI agents with no code.

Understand model signatures to ensure compatibility with Databricks features

Databricks uses MLflow Model Signatures to define agents' input and output schemas. Product features like AI Playground assume that your agent uses one of a set of supported model signatures.

If you follow the recommended approach of authoring agents with the ResponsesAgent interface, MLflow automatically infers a signature for your agent that is compatible with Databricks product features.

Otherwise, your agent must adhere to one of the other signatures described in Legacy input and output agent schema (Model Serving) to remain compatible with Databricks features.
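The sketch below illustrates the difference between a chat-completions-style payload (a `messages` list) and the Responses-style payloads that ResponsesAgent works with (an `input` list in, an `output` list of items out). Both helper functions are hypothetical, and the output item fields (`type: "message"`, `output_text` content parts) are assumed to follow the OpenAI Responses format; consult the MLflow ResponsesAgent documentation for the authoritative schema.

```python
from typing import Any

def chat_to_responses_request(chat_payload: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical helper: map a chat-completions-style {"messages": [...]}
    payload to a Responses-style {"input": [...]} payload."""
    return {
        "input": [
            {"role": m["role"], "content": m["content"]}
            for m in chat_payload["messages"]
        ]
    }

def extract_output_text(response: dict[str, Any]) -> str:
    """Hypothetical helper: concatenate the text of all output_text parts
    found in message items of a Responses-style response."""
    parts: list[str] = []
    for item in response.get("output", []):
        if item.get("type") == "message":
            for block in item.get("content", []):
                if block.get("type") == "output_text":
                    parts.append(block["text"])
    return "".join(parts)

request = chat_to_responses_request(
    {"messages": [{"role": "user", "content": "Hi"}]}
)
# Assumed shape of a Responses-style reply from an agent.
response = {
    "output": [
        {
            "type": "message",
            "id": "msg_1",
            "role": "assistant",
            "content": [{"type": "output_text", "text": "Hello!"}],
        }
    ]
}
print(request)                         # {'input': [{'role': 'user', 'content': 'Hi'}]}
print(extract_output_text(response))   # Hello!
```

Skipping non-message items in `extract_output_text` reflects that a Responses-style `output` list can contain other item types (for example, tool calls) alongside the final message.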