Use benchmarks in a Genie space

This page explains how to use benchmarks to evaluate the accuracy of your Genie space.

Overview

Benchmarks allow you to create a set of test questions that you can run to assess Genie's overall response accuracy. A well-designed set of benchmarks covering the most frequently asked user questions helps evaluate the accuracy of your Genie space as you refine it. Each Genie space can contain up to 500 benchmark questions.

Each benchmark question runs as a new conversation, so it does not carry over context the way a threaded Genie conversation does. Genie processes each question as a new query, using the instructions defined in the space, including any example SQL and SQL functions you have provided.

Add benchmark questions

Benchmark questions should reflect different ways of phrasing the common questions your users ask. You can use them to check Genie's response to variations in question phrasing or different question formats.

When creating a benchmark question, you can optionally include a SQL query whose result set is the correct answer. During benchmark runs, accuracy is assessed by comparing the result set from your SQL query to the one generated by Genie. You can also use Unity Catalog SQL functions as gold standard answers for benchmarks.

To add a benchmark question:

- Near the top of the Genie space, click Benchmarks.

- Click Add benchmark.

- In the Question field, enter a benchmark question to test.

- (Optional) Provide a SQL query that answers the question. You can type your own query, including Unity Catalog SQL functions, in the SQL Answer text field, or click Generate SQL to have Genie write the query for you. Use a SQL statement that accurately answers the question you entered.

  Note: This step is recommended. Only questions that include a SQL Answer can be assessed for accuracy automatically. Questions without a SQL Answer must be reviewed manually to be scored. If you use Generate SQL, review the generated statement to make sure it accurately answers the question.

- (Optional) Click Run to run your query and view the results.

- When you're finished editing, click Add benchmark.

To update a question after saving, click the pencil icon to open the Update question dialog.

Use benchmarks to test alternate question phrasings

When evaluating the accuracy of your Genie space, structure tests to reflect realistic scenarios: users often ask the same question in different ways. Databricks recommends adding multiple phrasings of the same question, each with the same SQL Answer, to fully assess accuracy. Most Genie spaces should include two to four phrasings of each question.
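If you draft benchmarks outside the UI before entering them, one way to keep phrasings tied to a shared SQL Answer is to group them by that answer. The Python sketch below is a hypothetical planning helper, not a Databricks API: `expand_phrasings` and `Benchmark` are invented names, and the `sales` table and its columns are made up for illustration. It warns when a group falls outside the recommended two to four phrasings and rejects a set larger than the 500-question limit.

```python
import warnings
from dataclasses import dataclass

# Per-space limit stated on this page.
MAX_BENCHMARK_QUESTIONS = 500


@dataclass(frozen=True)
class Benchmark:
    question: str
    sql_answer: str


def expand_phrasings(groups: dict[str, list[str]]) -> list[Benchmark]:
    """Turn {sql_answer: [phrasing, ...]} into one benchmark per phrasing."""
    benchmarks: list[Benchmark] = []
    for sql_answer, phrasings in groups.items():
        # Two to four phrasings is a recommendation, so warn instead of failing.
        if not 2 <= len(phrasings) <= 4:
            warnings.warn(f"{len(phrasings)} phrasing(s) for: {sql_answer}")
        # Every phrasing in a group shares the same SQL Answer.
        benchmarks.extend(Benchmark(q, sql_answer) for q in phrasings)
    if len(benchmarks) > MAX_BENCHMARK_QUESTIONS:
        raise ValueError(
            f"{len(benchmarks)} questions exceeds the limit of {MAX_BENCHMARK_QUESTIONS}"
        )
    return benchmarks


# Hypothetical example: three phrasings of one question about a made-up table.
groups = {
    "SELECT region, SUM(revenue) AS total_revenue FROM sales GROUP BY region": [
        "What is total revenue by region?",
        "Show me revenue broken down by region",
        "How much revenue did each region bring in?",
    ],
}
for b in expand_phrasings(groups):
    print(b.question)
```

Keying groups by the SQL Answer makes it hard to accidentally give two phrasings of the same question different answers.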

Run benchmark questions

Users with at least CAN EDIT permissions in a Genie space can run a benchmark evaluation at any time. You can run all benchmark questions or select a subset of questions to test.

For each question, Genie interprets the input, generates SQL, and returns results. The generated SQL and results are then compared against the SQL Answer defined in the benchmark question.
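The run described above can be sketched in Python. This is an illustration only, not how Genie is implemented: `run_benchmarks`, `ask_genie`, and `execute_sql` are hypothetical names, and the comparison is deliberately simplified (an error or empty result counts as Bad, identical rows in any order count as Good, and everything else goes to manual review). The full rules are listed under Interpret ratings. Passing only the question text to `ask_genie` mirrors how each benchmark question runs as a new conversation, and the optional `selected` argument mirrors running a subset.

```python
from collections import Counter
from typing import Callable, Iterable, Optional

Rows = list[tuple]


def run_benchmarks(
    benchmarks: list[tuple[str, Optional[str]]],
    ask_genie: Callable[[str], str],
    execute_sql: Callable[[str], Rows],
    selected: Optional[Iterable[int]] = None,
) -> dict[int, str]:
    """Rate every benchmark, or only those whose index is in `selected`."""
    chosen = range(len(benchmarks)) if selected is None else set(selected)
    ratings: dict[int, str] = {}
    for index, (question, sql_answer) in enumerate(benchmarks):
        if index not in chosen:
            continue
        # Only the question text is passed: no earlier question or answer.
        generated_sql = ask_genie(question)
        if sql_answer is None:
            ratings[index] = "Manual review needed"  # nothing to compare against
            continue
        try:
            generated_rows = execute_sql(generated_sql)
        except Exception:
            generated_rows = []  # treat a query error like an empty result
        if not generated_rows:
            ratings[index] = "Bad"
        elif Counter(generated_rows) == Counter(execute_sql(sql_answer)):
            ratings[index] = "Good"  # same rows, in any order
        else:
            ratings[index] = "Manual review needed"
    return ratings


# Hypothetical stand-ins for Genie and a SQL warehouse.
tables = {"SELECT 1": [(1,)], "SELECT 1 AS one": [(1,)], "SELECT 2": [(2,)]}


def fake_genie(question: str) -> str:
    return "SELECT 1 AS one" if "one" in question else "SELECT 2"


benchmarks = [
    ("What is one?", "SELECT 1"),
    ("What is two?", "SELECT 1"),
    ("Summarize the data", None),
]
print(run_benchmarks(benchmarks, fake_genie, tables.__getitem__))
```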

To run all benchmark questions:

- Near the top of the Genie space, click Benchmarks.

- Click Run benchmarks to start the test run.

To run a subset of benchmark questions:

- Near the top of the Genie space, click Benchmarks.

- Select the checkboxes next to the questions you want to test.

- Click Run selected to start the test run on the selected questions.

You can also select a subset of questions from a previous benchmark result and rerun those specific questions to test improvements.

Benchmarks continue to run if you navigate away from the page. When the run is complete, you can check the results on the Evaluations tab.

Interpret ratings

The following criteria determine how Genie responses are rated:

| Condition | Rating |
|---|---|
| Genie generates SQL that exactly matches the provided SQL Answer | Good |
| Genie generates a result set that exactly matches the result set produced by the SQL Answer | Good |
| Genie generates a result set with the same data as the SQL Answer but sorted differently | Good |
| Genie generates a result set with numeric values that round to the same 4 significant digits as the SQL Answer | Good |
| Genie generates SQL that produces an empty result set or returns an error | Bad |
| Genie generates a result set that includes extra columns compared to the result set produced by the SQL Answer | Bad |
| Genie generates a single-cell result that's different from the single-cell result produced by the SQL Answer | Bad |

Manual review needed: Responses are marked with this label when Genie cannot assess correctness or when Genie-generated query results do not contain an exact match with the results from the provided SQL Answer. Any benchmark questions that do not include a SQL Answer must be reviewed manually.
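The rules in the table can be approximated with the Python sketch below. It is not how Genie actually rates responses; it assumes that a result set is a list of column names plus a list of row tuples (with `None` for a query that errored), that "exactly matches" for SQL ignores whitespace, that columns are compared by position rather than by name, and that the 4-significant-digit rounding applies to every numeric value. The function names are hypothetical.

```python
from collections import Counter
from typing import Optional

# A result set is (column_names, rows). None means the query raised an error.
ResultSet = tuple[list[str], list[tuple]]


def _round_value(value):
    """Round numbers to 4 significant digits; leave other values unchanged."""
    if isinstance(value, (int, float)) and not isinstance(value, bool):
        return float(f"{value:.4g}")
    return value


def _row_multiset(rows: list[tuple]) -> Counter:
    """Rows with rounded numbers, compared without regard to order."""
    return Counter(tuple(_round_value(v) for v in row) for row in rows)


def _is_single_cell(result: ResultSet) -> bool:
    columns, rows = result
    return len(columns) == 1 and len(rows) == 1


def rate(
    genie_sql: str,
    genie_result: Optional[ResultSet],
    answer_sql: Optional[str],
    answer_result: Optional[ResultSet],
) -> str:
    if answer_sql is None:
        return "Manual review needed"  # no SQL Answer to compare against
    # SQL that exactly matches the SQL Answer (whitespace differences ignored here).
    if genie_sql.split() == answer_sql.split():
        return "Good"
    if answer_result is None:
        return "Manual review needed"  # the SQL Answer itself produced no result
    # An error or an empty result set.
    if genie_result is None or not genie_result[1]:
        return "Bad"
    # Extra columns compared to the SQL Answer's result set.
    if len(genie_result[0]) > len(answer_result[0]):
        return "Bad"
    # Exact match, different sort order, or same values at 4 significant digits.
    same_width = len(genie_result[0]) == len(answer_result[0])
    if same_width and _row_multiset(genie_result[1]) == _row_multiset(answer_result[1]):
        return "Good"
    # A single-cell result that differs from the SQL Answer's single cell.
    if _is_single_cell(genie_result) and _is_single_cell(answer_result):
        return "Bad"
    # Anything else cannot be decided automatically.
    return "Manual review needed"


print(rate("SELECT a FROM t", (["a"], [(2,), (1,)]),
           "SELECT a FROM t ORDER BY a", (["a"], [(1,), (2,)])))  # Good
```

Comparing rows as a multiset (`Counter`) rather than a sorted list avoids sorting values of mixed types while still ignoring row order and keeping duplicate rows significant.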

Access benchmark evaluations

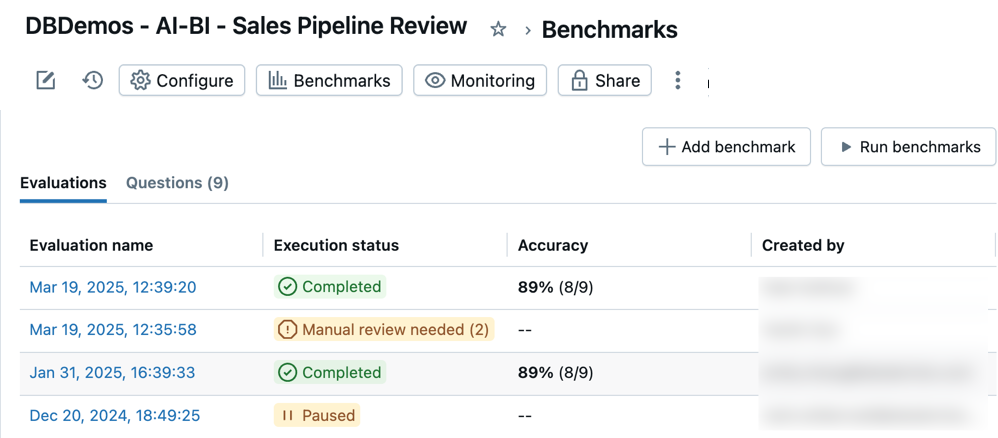

You can access all of your benchmark evaluations to track the accuracy of your Genie space over time. When you open the Benchmarks page of a space, a timestamped list of evaluation runs appears on the Evaluations tab. If no evaluation runs appear, see Add benchmark questions and Run benchmark questions.

The Evaluations tab shows an overview of evaluations and their performance in the following columns:

- Evaluation name: A timestamp that indicates when the evaluation run occurred. Click the timestamp to see details for that evaluation.

- Execution status: Indicates whether the evaluation completed, paused, or was unsuccessful. If an evaluation run includes benchmark questions without a SQL Answer, it is marked for review in this column.

- Accuracy: A numeric assessment of accuracy across all benchmark questions. For evaluation runs that require manual review, accuracy appears only after those questions have been reviewed.

- Created by: The name of the user who ran the evaluation.
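This page describes Accuracy only as a numeric assessment across all benchmark questions, so the exact formula is not stated here. The Python sketch below assumes it is the share of questions rated Good and withholds a value while any question still needs manual review, matching the behavior described for the Accuracy column. The `accuracy` function is a hypothetical helper.

```python
from typing import Optional


def accuracy(ratings: list[str]) -> Optional[float]:
    """Share of questions rated Good, or None while any question awaits manual review."""
    if not ratings or "Manual review needed" in ratings:
        return None
    return sum(rating == "Good" for rating in ratings) / len(ratings)


print(accuracy(["Good", "Good", "Bad", "Good"]))  # 0.75
print(accuracy(["Good", "Manual review needed"]))  # None
```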

Review individual evaluations

You can review individual evaluations to get a detailed look at each response. You can edit the assessment for any question and update any items that need manual review.

To review individual evaluations:

- Near the top of the Genie space, click Benchmarks.

- Click the timestamp for any evaluation in the Evaluation name column to open a detailed view of that test run.

- Use the question list on the left side of the screen to see a detailed view of each question.

- Review and compare the Model output response with the Ground truth response.

  For results rated Bad, an explanation describes why, highlighting the specific differences between the generated output and the expected ground truth.

  Note: Query results appear in the evaluation details for one week. After one week, the results are no longer visible, but the generated SQL statement and the example SQL statement remain.

- Click Update ground truth to save the response as the new Ground truth for this question. This is useful when no ground truth exists or when the response is more accurate than the existing ground truth.

- Click the icon on the label to edit the assessment. Mark each result as Good or Bad to get an accurate score for this evaluation.