Lakehouse reference architectures (download)

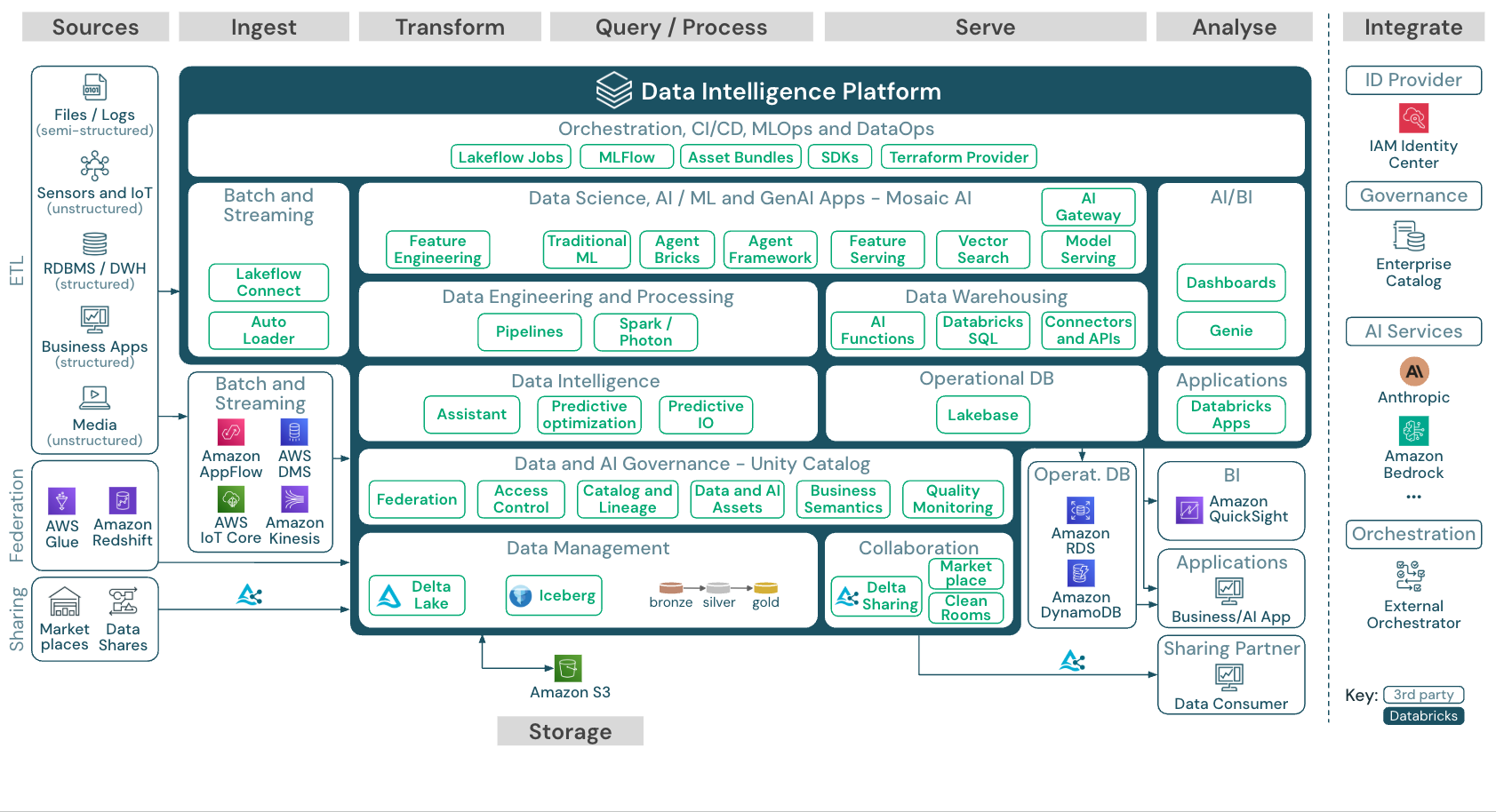

This article provides architectural guidance for the lakehouse, covering data sources, ingestion, transformation, querying and processing, serving, analysis, and storage.

Each reference architecture has a downloadable PDF in 11 x 17 (A3) format.

While the lakehouse on Databricks is an open platform that integrates with a large ecosystem of partner tools, the reference architectures focus only on AWS services and the Databricks lakehouse. The cloud provider services shown are selected to illustrate the concepts and are not exhaustive.

Download: Reference architecture for the Databricks lakehouse on AWS

The AWS reference architecture shows the following AWS-specific services for ingestion, storage, serving, and analysis:

- Amazon Redshift as a source for Lakehouse Federation

- Amazon AppFlow and AWS Glue for batch ingest

- AWS IoT Core, Amazon Kinesis, and AWS DMS for streaming ingest

- Amazon S3 as the object storage for data and AI assets

- Amazon RDS and Amazon DynamoDB as operational databases

- Amazon QuickSight as a BI tool

- Amazon Bedrock, used by Model Serving to call external LLMs from leading AI startups and Amazon

Organization of the reference architectures

The reference architecture is structured along the swim lanes Source, Ingest, Transform, Query/Process, Serve, Analysis, and Storage:

- Source

  There are three ways to integrate external data into the Data Intelligence Platform:

  - ETL: The platform enables integration with systems that provide semi-structured and unstructured data (such as sensors, IoT devices, media, files, and logs), as well as structured data from relational databases or business applications.

  - Lakehouse Federation: SQL sources, such as relational databases, can be integrated into the lakehouse and Unity Catalog without ETL. In this case, the source system data is governed by Unity Catalog, and queries are pushed down to the source system.

  - Catalog Federation: External Hive Metastore catalogs or AWS Glue can also be integrated into Unity Catalog through catalog federation, allowing Unity Catalog to control the tables stored in Hive Metastore or AWS Glue.

- Ingest

  Ingest data into the lakehouse via batch or streaming:

  - Databricks Lakeflow Connect offers built-in connectors for ingestion from enterprise applications and databases. The resulting ingestion pipeline is governed by Unity Catalog and is powered by serverless compute and Lakeflow Spark Declarative Pipelines.

  - Files delivered to cloud storage can be loaded directly using Databricks Auto Loader.

  - For batch ingestion of data from enterprise applications into Delta Lake, the Databricks lakehouse relies on partner ingest tools with specific adapters for these systems of record.

  - Streaming events can be ingested directly from event streaming systems such as Kafka using Databricks Structured Streaming. Streaming sources can be sensors, IoT devices, or change data capture processes.

- Storage

  - Data is typically stored in the cloud storage system where the ETL pipelines use the medallion architecture to store data in a curated way as Delta files/tables or Apache Iceberg tables.

- Transform and Query / process

  - The Databricks lakehouse uses its engines Apache Spark and Photon for all transformations and queries.

  - Lakeflow Spark Declarative Pipelines is a declarative framework for simplifying and optimizing reliable, maintainable, and testable data processing pipelines.

  - Powered by Apache Spark and Photon, the Databricks Data Intelligence Platform supports both types of workloads: SQL queries via SQL warehouses, and SQL, Python, and Scala workloads via workspace clusters.

  - For data science (ML modeling and Gen AI), the Databricks AI and Machine Learning platform provides specialized ML runtimes for AutoML and for coding ML jobs. All data science and MLOps workflows are best supported by MLflow.

- Serving

  - For data warehousing (DWH) and BI use cases, the Databricks lakehouse provides Databricks SQL, the data warehouse powered by SQL warehouses, and serverless SQL warehouses.

  - For machine learning, Mosaic AI Model Serving is a scalable, real-time, enterprise-grade model serving capability hosted in the Databricks control plane. Mosaic AI Gateway is Databricks' solution for governing and monitoring access to supported generative AI models and their associated model serving endpoints.

  - Operational databases:

    - Lakebase is an online transaction processing (OLTP) database based on Postgres and fully integrated with the Databricks Data Intelligence Platform. It allows you to create OLTP databases on Databricks and integrate OLTP workloads with your lakehouse.

    - External systems, such as operational databases, can be used to store and deliver final data products to user applications.

- Collaboration:

  - Business partners get secure access to the data they need through Delta Sharing.

  - Based on Delta Sharing, the Databricks Marketplace is an open forum for exchanging data products.

  - Clean Rooms are secure and privacy-protecting environments where multiple users can work together on sensitive enterprise data without direct access to each other's data.

- Analysis

  - The final business applications are in this swim lane. Examples include custom clients such as AI applications connected to Mosaic AI Model Serving for real-time inference or applications that access data pushed from the lakehouse to an operational database.

  - For BI use cases, analysts typically use BI tools to access the data warehouse. SQL developers can additionally use the Databricks SQL Editor (not shown in the diagram) for queries and dashboarding.

  - The Data Intelligence Platform also offers dashboards to build data visualizations and share insights.

- Integrate

  - The Databricks platform integrates with standard identity providers for user management and single sign-on (SSO).

  - External AI services like OpenAI, LangChain, or HuggingFace can be used directly from within the Databricks Data Intelligence Platform.

  - External orchestrators can either use the comprehensive REST API or dedicated connectors to external orchestration tools like Apache Airflow.

  - Unity Catalog is used for all data and AI governance in the Databricks Data Intelligence Platform and can integrate other databases into its governance through Lakehouse Federation.

    Additionally, Unity Catalog can be integrated into other enterprise catalogs. Contact the enterprise catalog vendor for details.

Common capabilities for all workloads

In addition, the Databricks lakehouse comes with management capabilities that support all workloads:

- Data and AI governance

  The central data and AI governance system in the Databricks Data Intelligence Platform is Unity Catalog. Unity Catalog provides a single place to manage data access policies that apply across all workspaces and supports all assets created or used in the lakehouse, such as tables, volumes, features (feature store), and models (model registry). Unity Catalog can also be used to capture runtime data lineage across queries run on Databricks.

  Databricks Data Quality Monitoring allows you to monitor the data quality of all tables in your account. It detects anomalies across all your tables and provides a full data profile for each table.

  For observability, system tables provide a Databricks-hosted analytical store of your account's operational data. System tables can be used for historical observability across your account.

- Data intelligence engine

  The Databricks Data Intelligence Platform allows your entire organization to use data and AI, combining generative AI with the unification benefits of a lakehouse to understand the unique semantics of your data. See Databricks AI assistive features.

  The Databricks Assistant is available in Databricks notebooks, SQL editor, file editor, and elsewhere as a context-aware AI assistant for users.

- Automation & Orchestration

  Lakeflow Jobs orchestrate data processing, machine learning, and analytics pipelines on the Databricks Data Intelligence Platform. Lakeflow Spark Declarative Pipelines allow you to build reliable and maintainable ETL pipelines with declarative syntax. The platform also supports CI/CD and MLOps.

High-level use cases for the Data Intelligence Platform on AWS

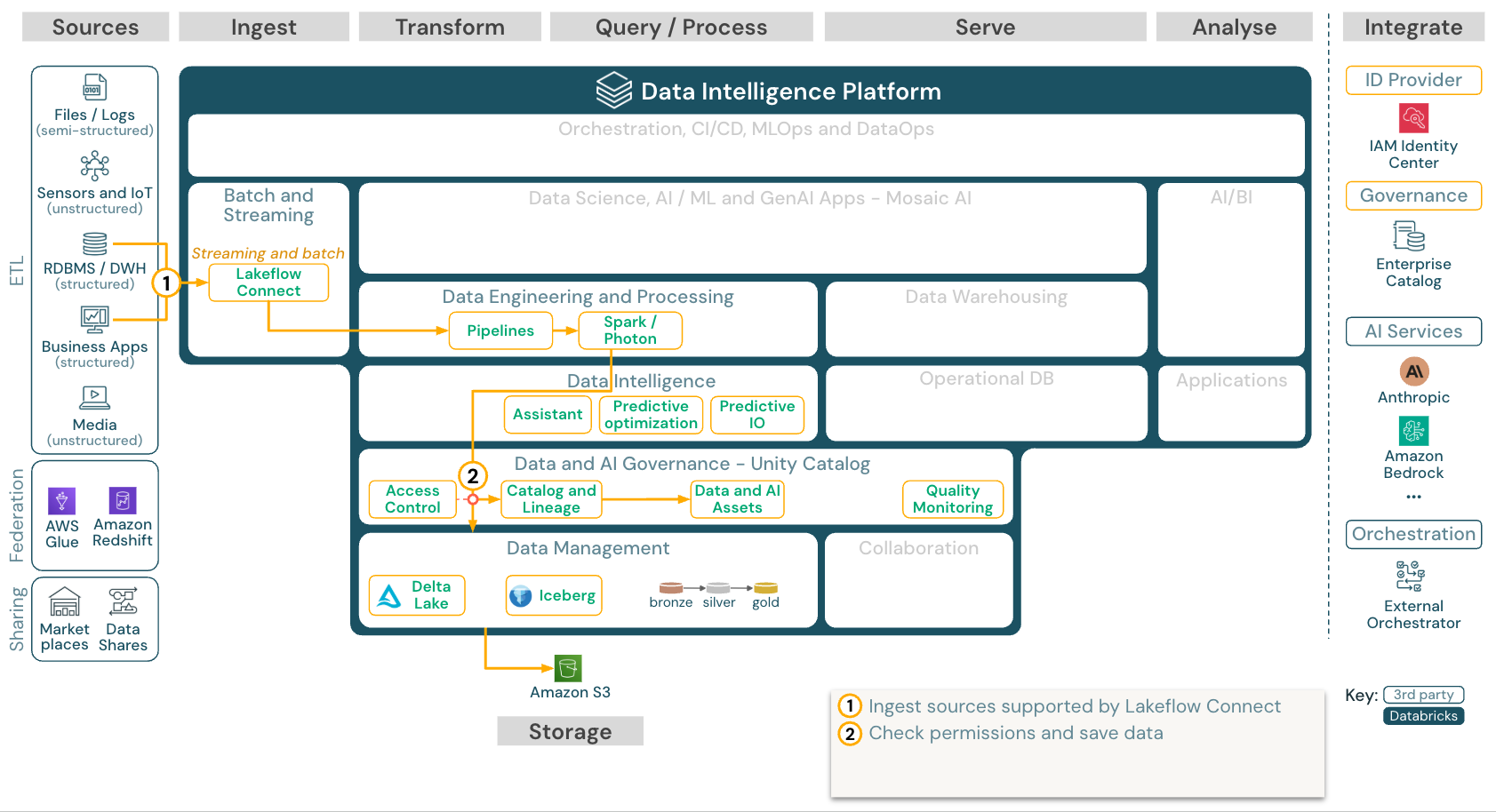

Built-in ingestion from SaaS apps and databases with Lakeflow Connect

Download: Lakeflow Connect reference architecture for Databricks on AWS

Databricks Lakeflow Connect offers built-in connectors for ingestion from enterprise applications and databases. The resulting ingestion pipeline is governed by Unity Catalog and is powered by serverless compute and Lakeflow Spark Declarative Pipelines.

Lakeflow Connect uses efficient incremental reads and writes to make data ingestion faster, more scalable, and more cost-efficient, while your data remains fresh for downstream consumption.
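The idea behind incremental reads can be illustrated with a small, self-contained Python sketch. This is not the Lakeflow Connect API: the `IncrementalReader` class, the `updated_at` column, and the sample rows are hypothetical and only show how a stored high-water mark lets each run pick up new rows instead of rescanning the whole source.

```python
from dataclasses import dataclass


@dataclass
class IncrementalReader:
    """Hypothetical helper that remembers how far a source has been read."""

    cursor: int = 0  # highest `updated_at` value already ingested

    def read_new(self, source_rows):
        # Select only the rows that changed since the previous run.
        new_rows = [r for r in source_rows if r["updated_at"] > self.cursor]
        if new_rows:
            # Advance the cursor so the next run skips these rows.
            self.cursor = max(r["updated_at"] for r in new_rows)
        return new_rows


# Illustrative source table (assumed data, not from a real system).
source = [
    {"id": 1, "updated_at": 10},
    {"id": 2, "updated_at": 20},
]

reader = IncrementalReader()
first_batch = reader.read_new(source)   # initial load: both rows
source.append({"id": 3, "updated_at": 30})
second_batch = reader.read_new(source)  # later run: only the new row
```

A managed connector would typically persist the cursor durably between runs. The sketch keeps it in memory for brevity.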

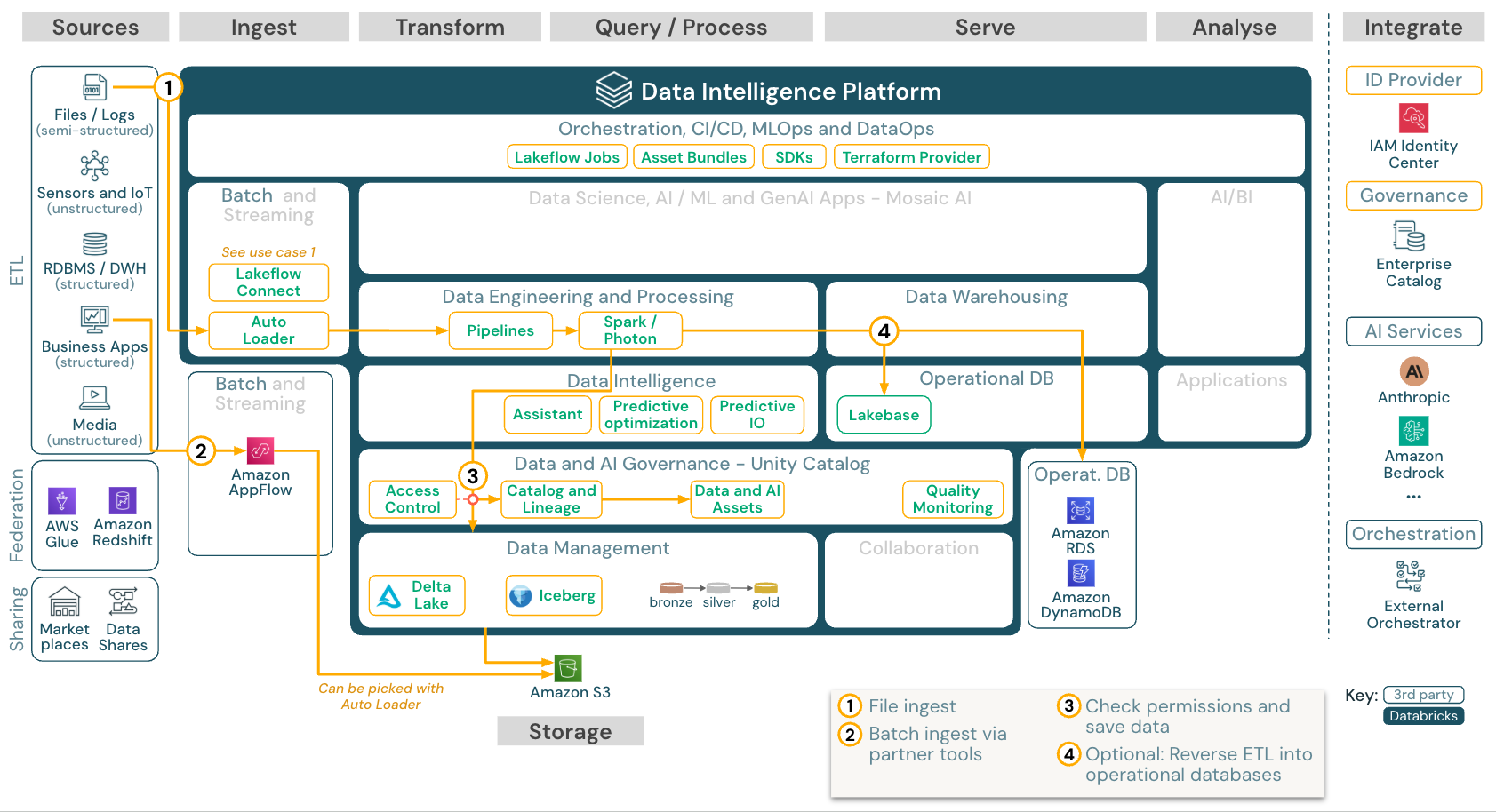

Batch ingestion and ETL

Download: Batch ETL reference architecture for Databricks on AWS

Ingestion tools use source-specific adapters to read data from the source and then either store it in cloud storage, from where Auto Loader can read it, or call Databricks directly (for example, with partner ingestion tools integrated into the Databricks lakehouse). To load the data, the Databricks ETL and processing engine runs the queries via Lakeflow Spark Declarative Pipelines. Orchestrate single-task or multitask jobs using Lakeflow Jobs and govern them using Unity Catalog (access control, audit, lineage, and so on). To give low-latency operational systems access to specific golden tables, export the tables to an operational database such as an RDBMS or key-value store at the end of the ETL pipeline.
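As a rough illustration of the batch flow, the following plain-Python sketch walks raw records through the bronze, silver, and gold layers of the medallion architecture. It is a conceptual stand-in, not Databricks code: the record layout and the `to_silver` and `to_gold` functions are assumptions made for this example, and a real pipeline would express the same steps as tables in a declarative pipeline.

```python
from collections import defaultdict

# Bronze: raw records as they arrived, including a malformed row and a duplicate.
bronze = [
    {"order_id": "1", "region": "eu", "amount": "10.5"},
    {"order_id": "2", "region": "us", "amount": "n/a"},
    {"order_id": "1", "region": "eu", "amount": "10.5"},
    {"order_id": "3", "region": "eu", "amount": "4.5"},
]


def to_silver(rows):
    """Validate types and drop duplicates by order_id."""
    seen, clean = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows that fail validation
        if row["order_id"] in seen:
            continue  # skip duplicate deliveries of the same order
        seen.add(row["order_id"])
        clean.append(
            {"order_id": int(row["order_id"]), "region": row["region"], "amount": amount}
        )
    return clean


def to_gold(rows):
    """Aggregate cleaned rows into a business-level table: revenue per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
```

The `gold` dictionary plays the role of a golden table that could be exported to an operational database at the end of the pipeline.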

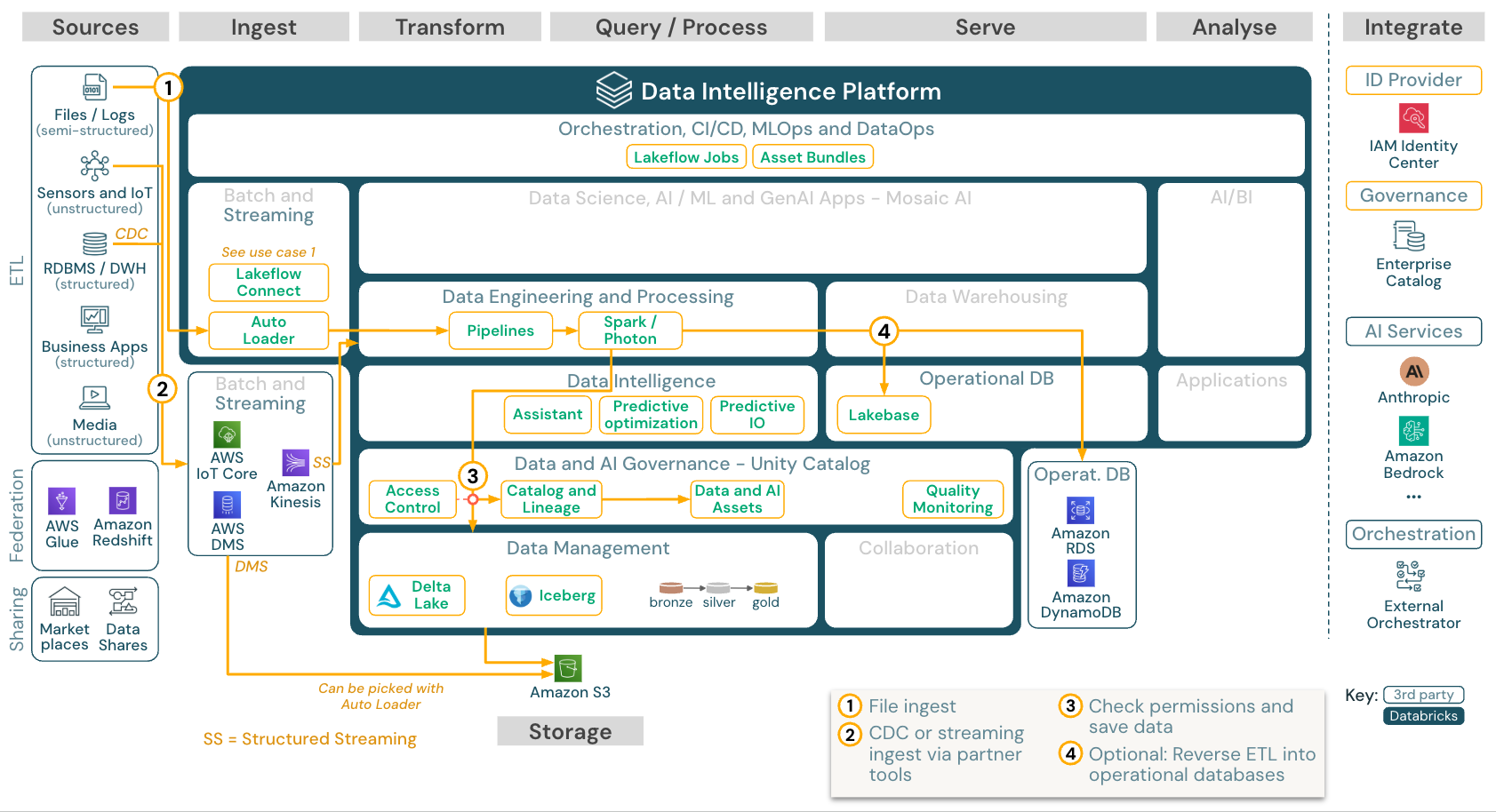

Streaming and change data capture (CDC)

Download: Spark structured streaming architecture for Databricks on AWS

The Databricks ETL engine uses Spark Structured Streaming to read from event queues such as Apache Kafka or Amazon Kinesis. The downstream steps follow the approach of the batch use case above.

Real-time change data capture (CDC) typically stores the extracted events in an event queue. From there, the use case follows the streaming use case.

If CDC is done in batch, with the extracted records stored in cloud storage first, Databricks Auto Loader can read them, and the use case follows Batch ETL.
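Whether change events arrive through an event queue or in batch files, the target table has to apply them in order. The sketch below shows one way to do that in plain Python. The event shape (`seq`, `op`, `key`, `row`) and the `apply_changes` function are hypothetical and are not taken from any Databricks API. The sketch assumes each event carries a sequence number that increases with every change at the source.

```python
def apply_changes(target, applied_seq, events):
    """Apply CDC events to `target` (a dict keyed by primary key).

    `applied_seq` records the last sequence number applied per key, so
    late or replayed events are ignored instead of overwriting newer data.
    """
    for ev in sorted(events, key=lambda e: e["seq"]):
        key = ev["key"]
        if ev["seq"] <= applied_seq.get(key, -1):
            continue  # stale or duplicate event
        if ev["op"] == "delete":
            target.pop(key, None)
        else:
            # "insert" and "update" are both treated as upserts.
            target[key] = ev["row"]
        applied_seq[key] = ev["seq"]
    return target


table, applied = {}, {}

# Events arrive out of order, as can happen with a distributed queue.
events = [
    {"seq": 2, "op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"seq": 1, "op": "insert", "key": 1, "row": {"name": "Ada"}},
    {"seq": 3, "op": "insert", "key": 2, "row": {"name": "Bob"}},
    {"seq": 4, "op": "delete", "key": 2},
]
apply_changes(table, applied, events)

# Replaying an old event leaves the newer value in place.
apply_changes(table, applied, [{"seq": 1, "op": "insert", "key": 1, "row": {"name": "Ada"}}])
```

Tracking the last applied sequence number per key is what makes the apply step safe to rerun, which matters when a streaming job restarts and reprocesses part of the queue.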

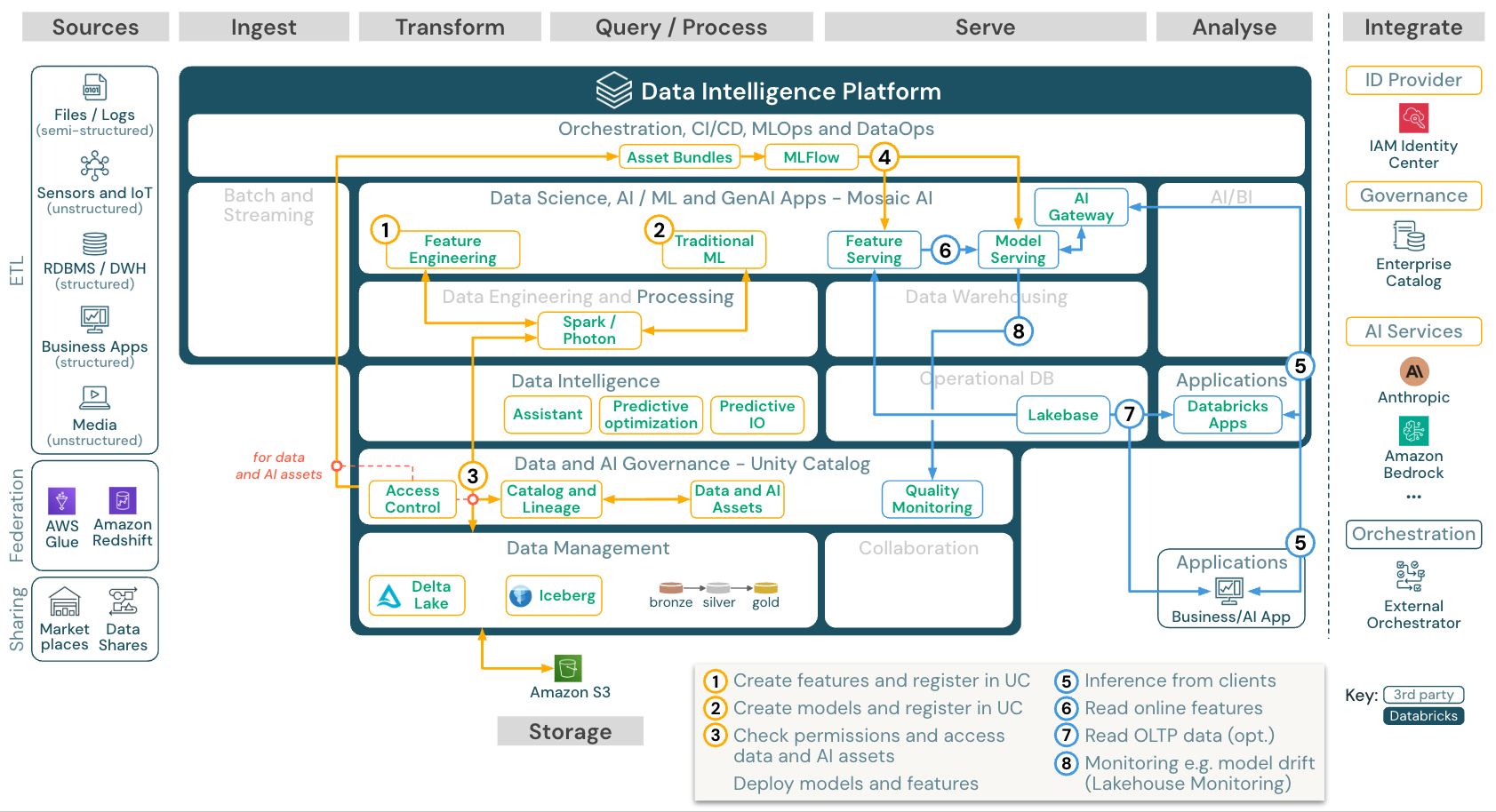

Machine learning and AI (traditional)

Download: Machine learning and AI reference architecture for Databricks on AWS

For machine learning, the Databricks Data Intelligence Platform provides Mosaic AI, which comes with state-of-the-art machine learning and deep learning libraries. It provides capabilities such as Feature Store and Model Registry (both integrated into Unity Catalog), low-code features with AutoML, and MLflow integration into the data science lifecycle.

Unity Catalog governs all data science-related assets (tables, features, and models), and data scientists can use Lakeflow Jobs to orchestrate their jobs.

For deploying models in a scalable and enterprise-grade way, use the MLOps capabilities to publish the models in model serving.

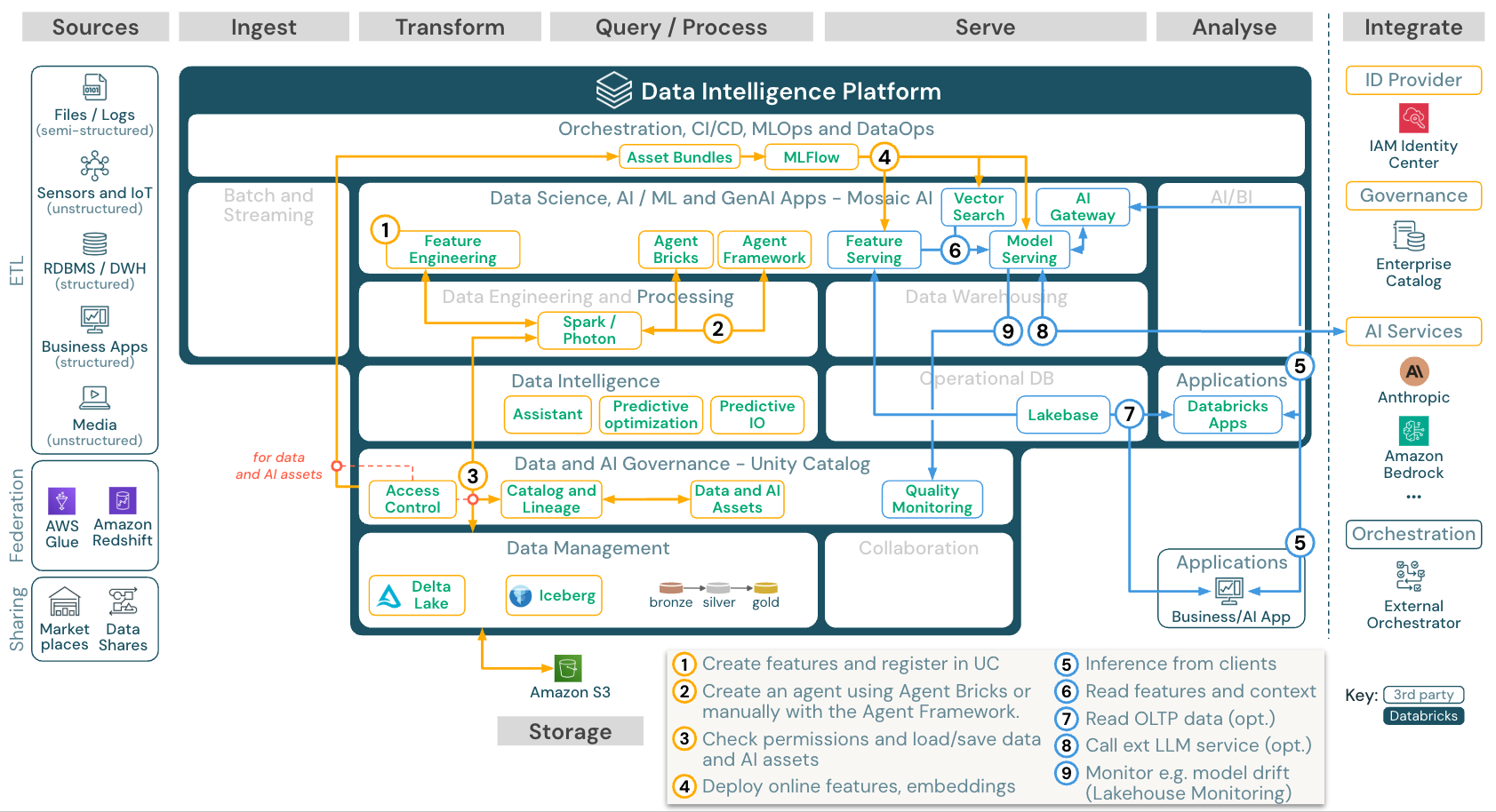

AI Agent applications (Gen AI)

Download: Gen AI application reference architecture for Databricks on AWS

For deploying models in a scalable and enterprise-grade way, use the MLOps capabilities to publish the models in model serving.

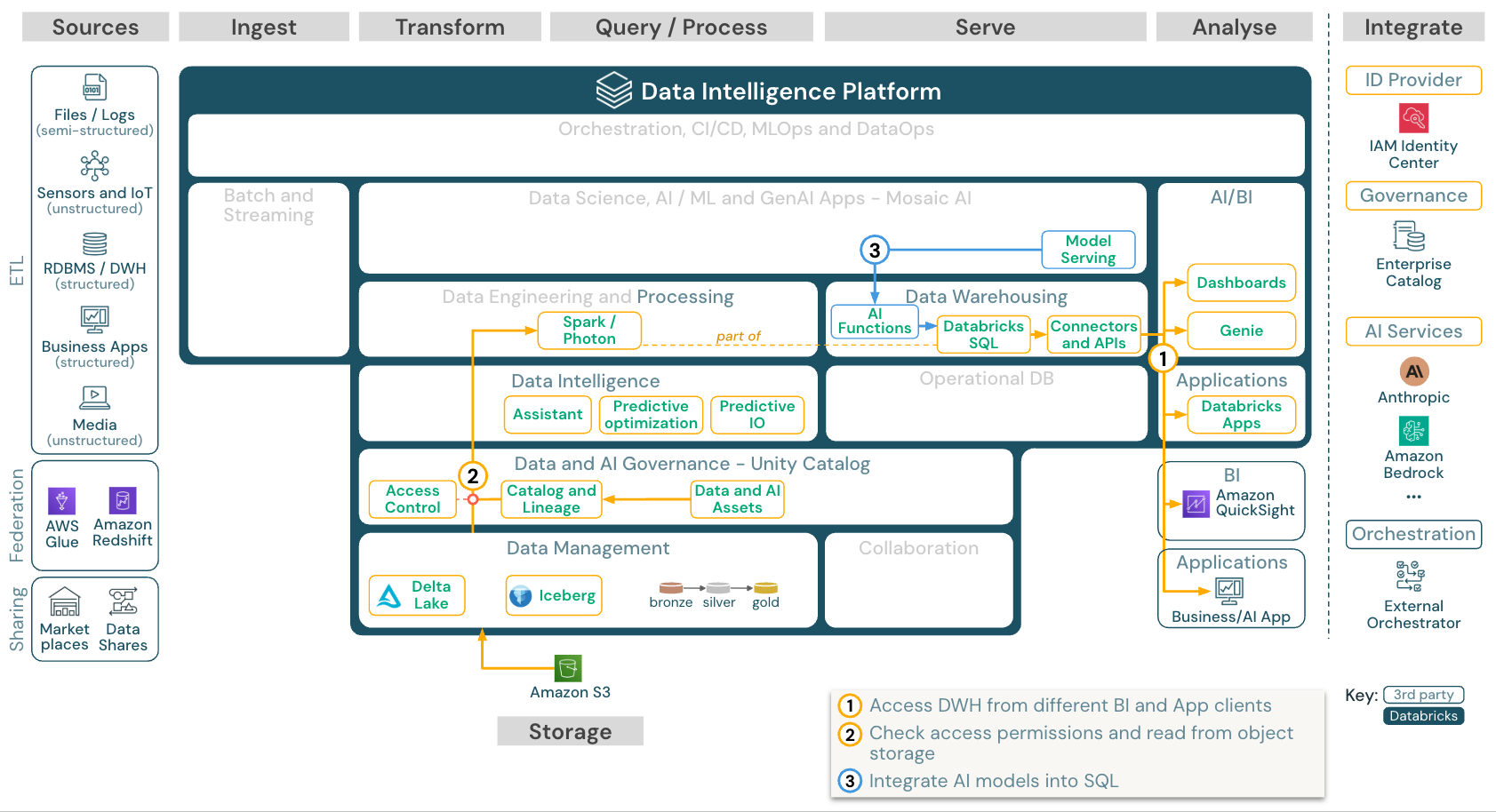

BI and SQL analytics

Download: BI and SQL analytics reference architecture for Databricks on AWS

For BI use cases, business analysts can use dashboards, the Databricks SQL editor or BI tools such as Tableau or Amazon QuickSight. In all cases, the engine is Databricks SQL (serverless or non-serverless), and Unity Catalog provides data discovery, exploration, lineage, and access control.

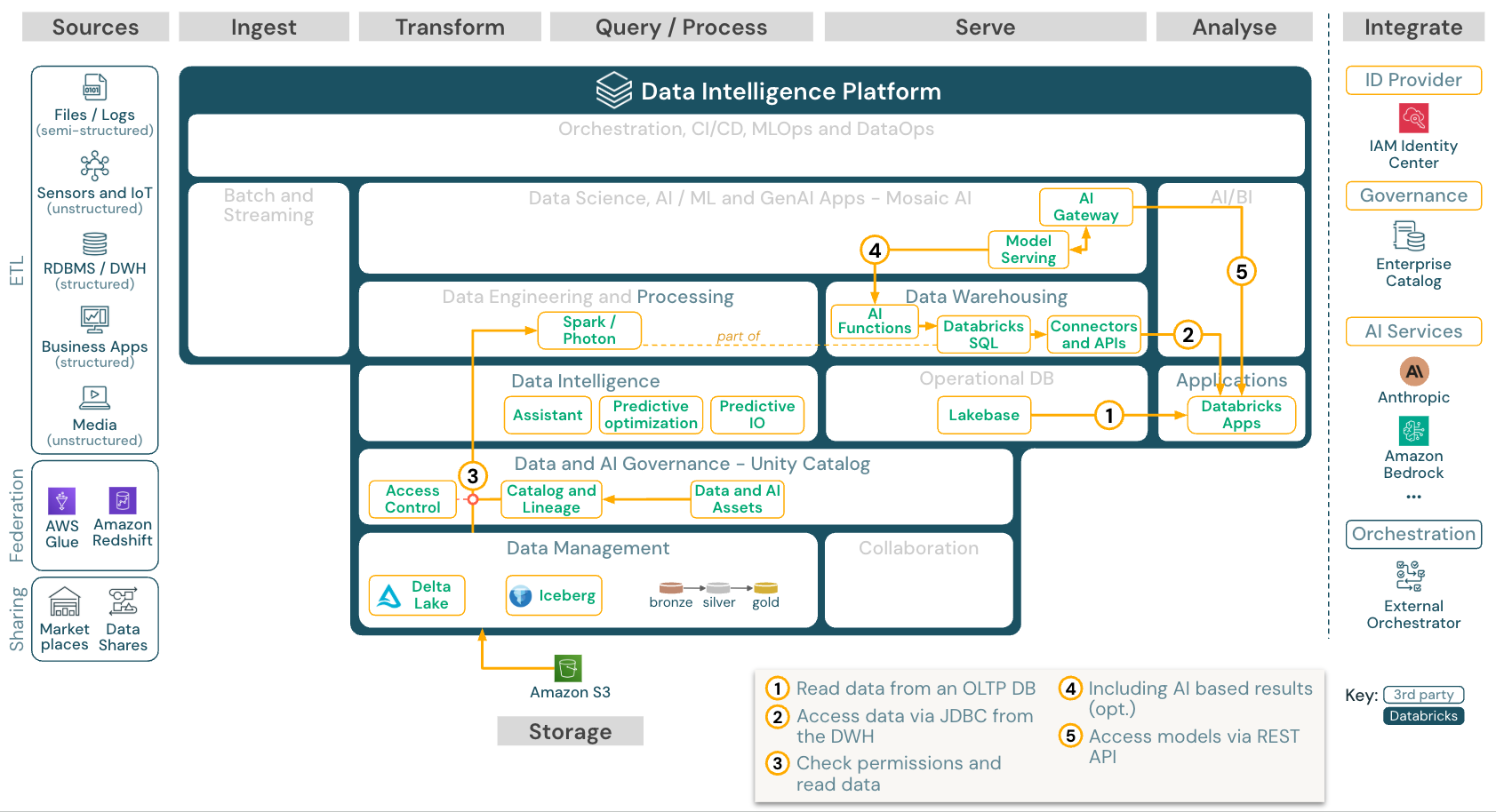

Business Apps

Download: Business Apps for Databricks on AWS

Databricks Apps enables developers to build and deploy secure data and AI applications directly on the Databricks platform, which eliminates the need for separate infrastructure. Apps are hosted on the Databricks serverless platform and integrate with key platform services. Use Lakebase if the app needs OLTP data that is synced from the lakehouse.

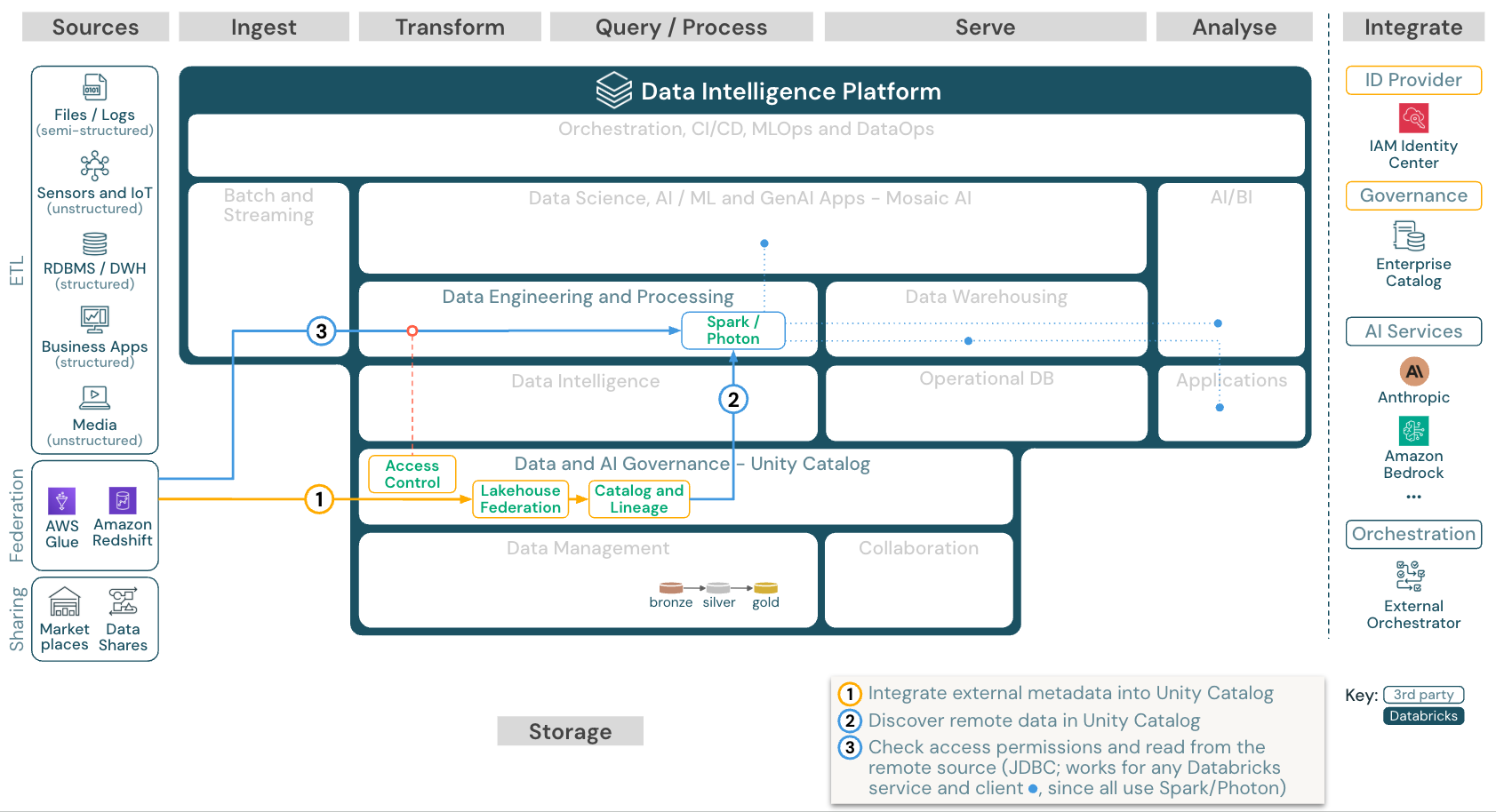

Lakehouse federation

Download: Lakehouse federation reference architecture for Databricks on AWS

Lakehouse Federation allows external SQL databases (such as MySQL, Postgres, or Redshift) to be integrated with Databricks.

All workloads (AI, DWH, and BI) can benefit from this without the need to ETL the data into object storage first. The external source catalog is mapped into Unity Catalog, and fine-grained access control can be applied to access via the Databricks platform.
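Query pushdown, which is how federated queries reach the source system, can be sketched with Python's built-in `sqlite3` module standing in for the external database. Everything here is an assumption made for illustration: the `orders` table, its columns, and the `federated_query` function are hypothetical, and a real setup would go through a Unity Catalog connection and foreign catalog instead of a direct database handle.

```python
import sqlite3

# Stand-in for an external SQL source such as MySQL, Postgres, or Redshift.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, region TEXT, amount REAL)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "eu", 10.0), (2, "us", 20.0), (3, "eu", 5.0)],
)


def federated_query(conn, region):
    """Send the filter and aggregation to the source system.

    Only the aggregated result travels back to the caller; the raw rows
    stay in the source and are never copied into object storage.
    """
    sql = "SELECT region, SUM(amount) FROM orders WHERE region = ? GROUP BY region"
    return conn.execute(sql, (region,)).fetchall()


result = federated_query(source, "eu")
```

The alternative, fetching every row and filtering locally, would move all three rows across the connection to produce the same single-row answer.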

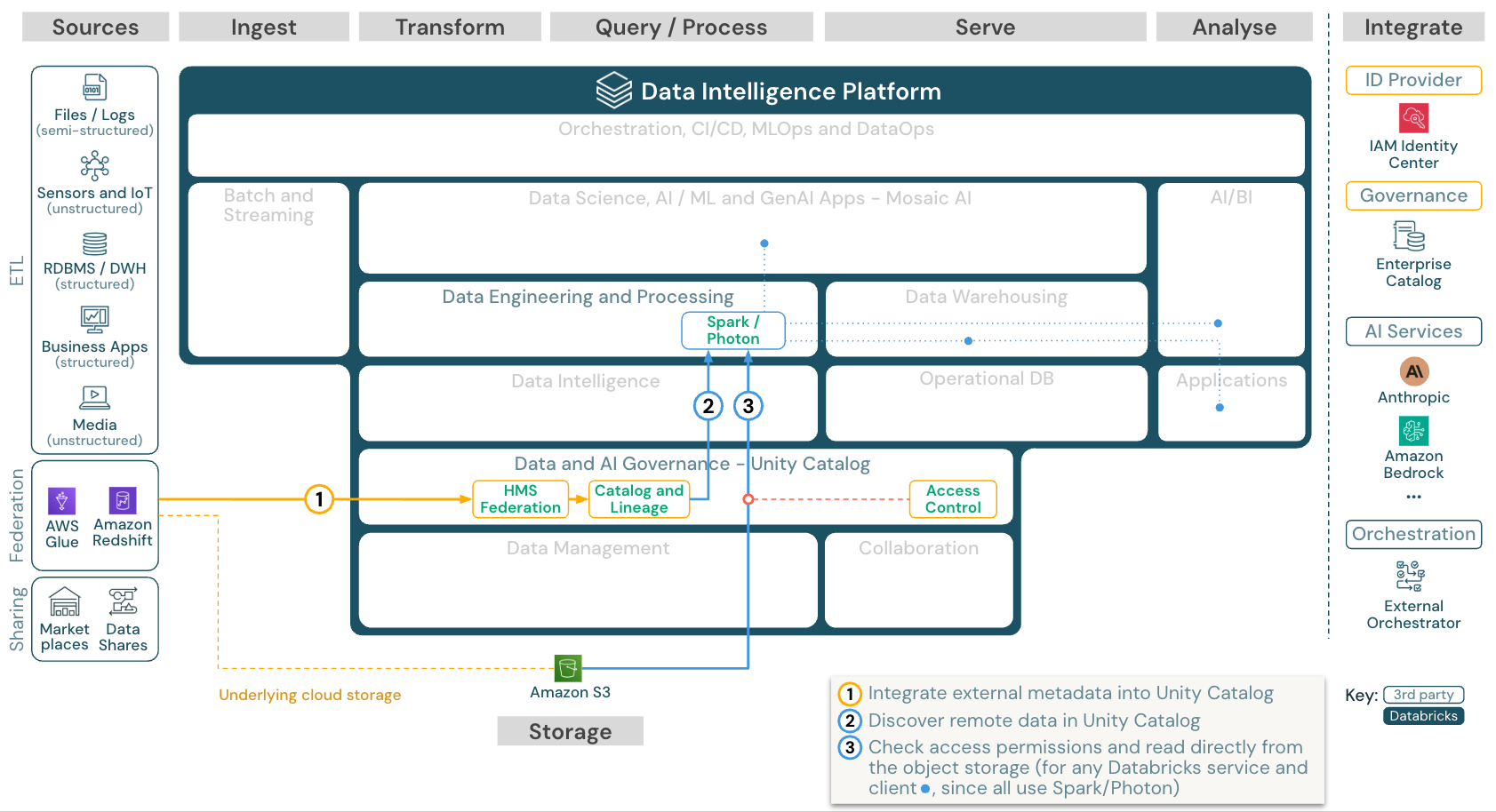

Catalog federation

Download: Catalog federation reference architecture for Databricks on AWS

Catalog federation allows external Hive Metastores or AWS Glue to be integrated with Databricks.

All workloads (AI, DWH, and BI) can benefit from this without the need to ETL the data into object storage first. The external source catalog is added to Unity Catalog where fine-grained access control is applied via the Databricks platform.

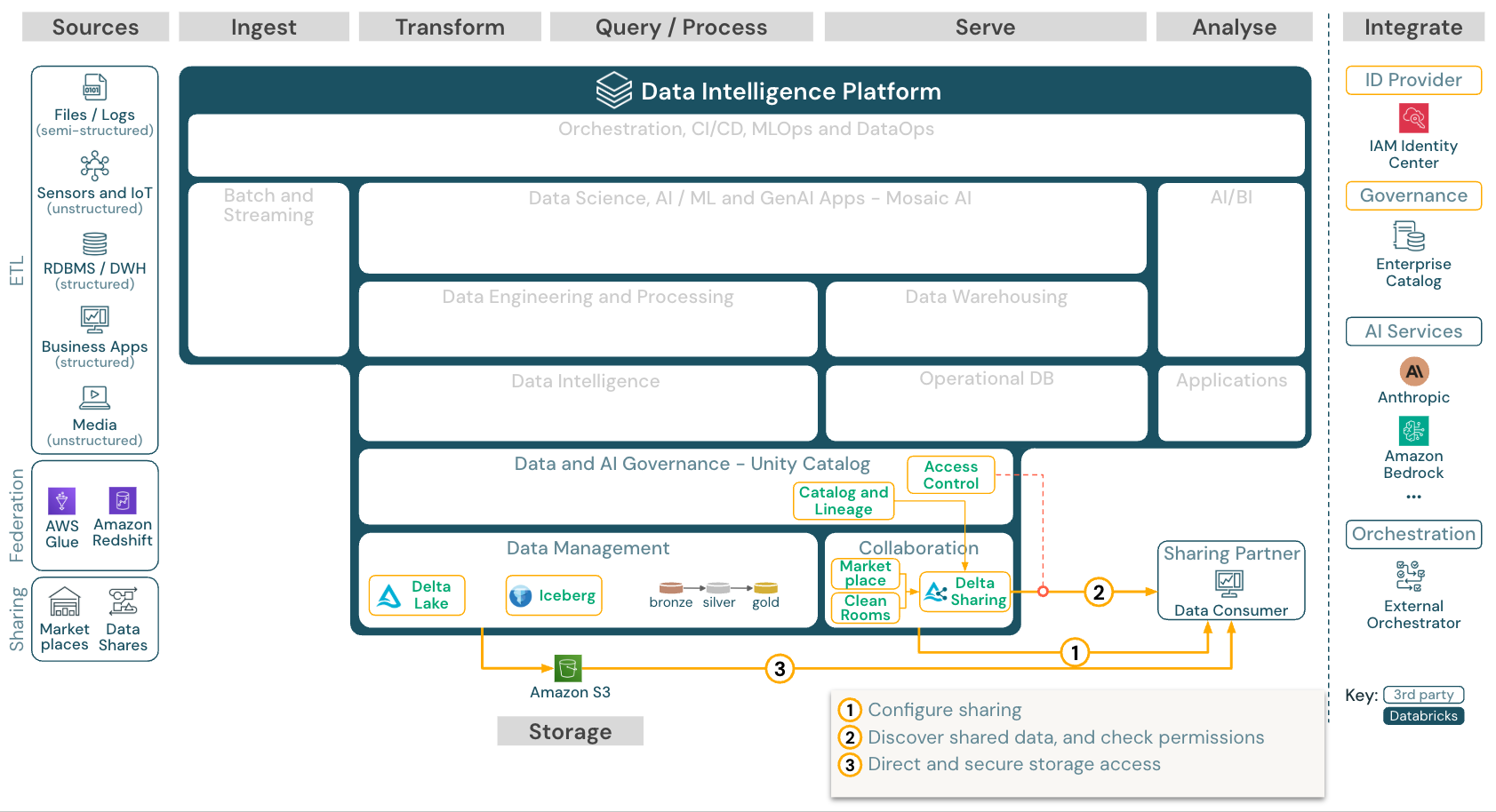

Share data with 3rd-party tools

Download: Share data with 3rd-party tools reference architecture for Databricks on AWS

Enterprise-grade data sharing with 3rd parties is provided by Delta Sharing. It enables direct access to data in the object store secured by Unity Catalog. This capability is also used in the Databricks Marketplace, an open forum for exchanging data products.

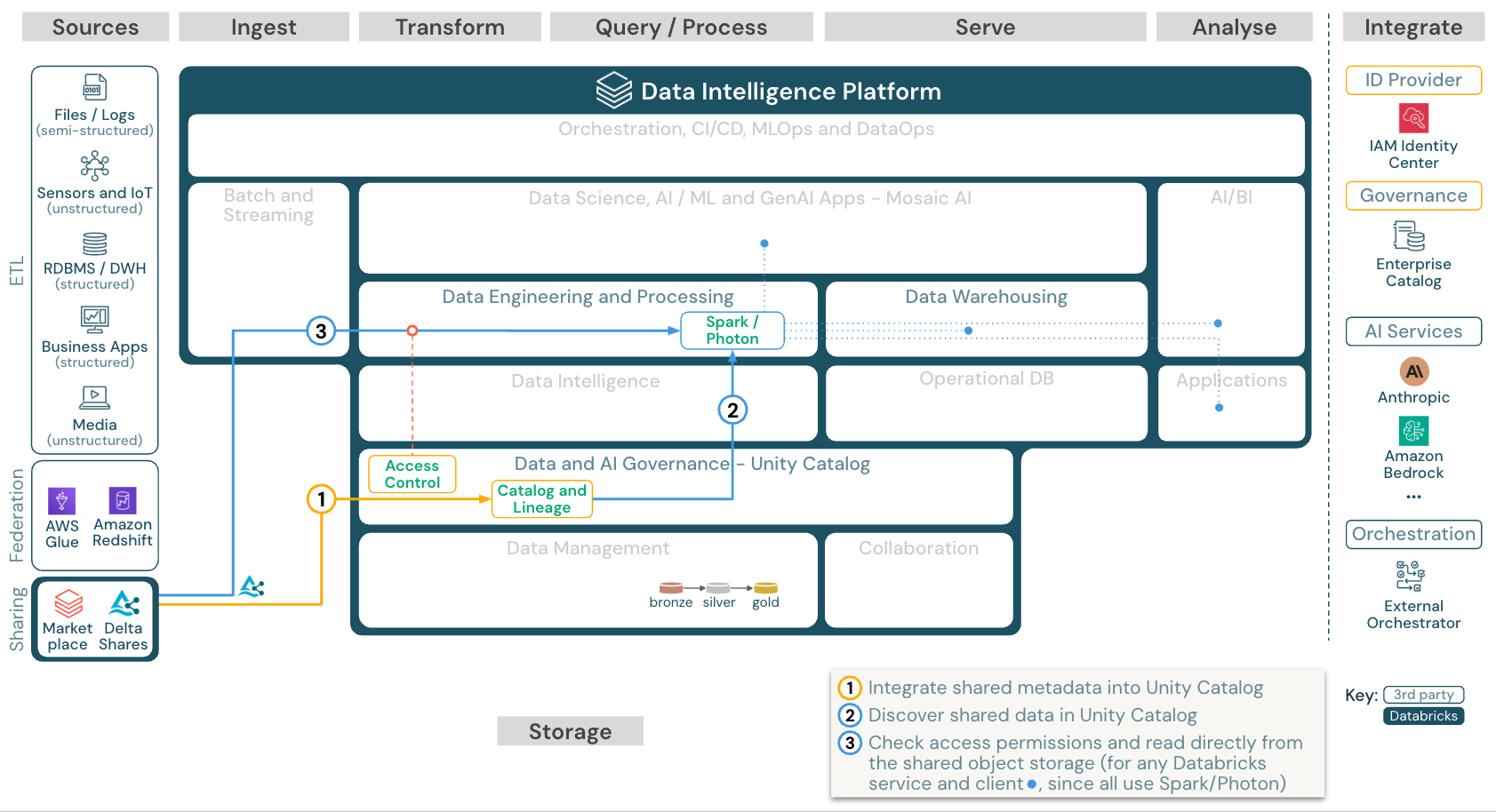

Consume shared data from Databricks

Download: Consume shared data from Databricks reference architecture for Databricks on AWS

The Delta Sharing Databricks-to-Databricks protocol allows users to share data securely with any Databricks user, regardless of account or cloud host, as long as that user has access to a workspace enabled for Unity Catalog.