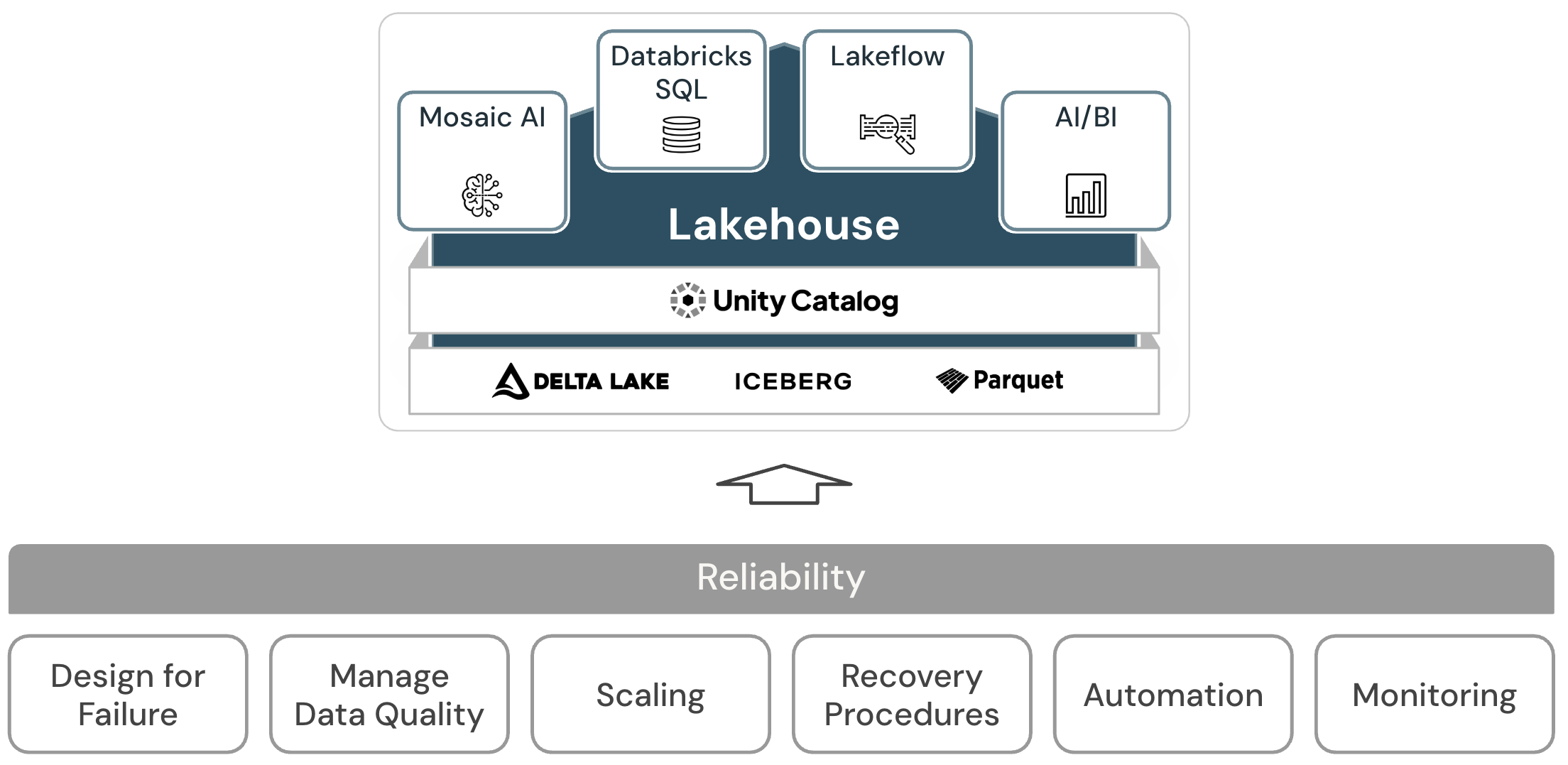

Reliability for the data lakehouse

The architectural principles of the reliability pillar address the ability of a system to recover from failures and continue to function.

Principles of reliability

-

Design for failure

In a highly distributed environment, outages can occur. For both the platform and the various workloads - such as streaming jobs, batch jobs, model training, and BI queries - failures must be anticipated and resilient solutions must be developed to increase reliability. The focus is on designing applications to recover quickly and, in the best case, automatically.

-

Manage data quality

Data quality is fundamental to deriving accurate and meaningful insights from data. Data quality has many dimensions, including completeness, accuracy, validity, and consistency. It must be actively managed to improve the quality of the final data sets so that the data serves as reliable and trustworthy information for business users.

-

Design for autoscaling

Standard ETL processes, business reports, and dashboards often have predictable resource requirements in terms of memory and compute. However, new projects, seasonal tasks, or advanced approaches such as model training (for churn, forecasting, and maintenance) create spikes in resource requirements. For an organization to handle all of these workloads, it needs a scalable storage and compute platform. Adding new resources as needed must be easy, and only actual consumption should be charged for. Once the peak is over, resources can be freed up and costs reduced accordingly. This is often referred to as horizontal scaling (number of nodes) and vertical scaling (size of nodes).

-

Test recovery procedures

An enterprise-wide disaster recovery strategy for most applications and systems requires an assessment of priorities, capabilities, limitations, and costs. A reliable disaster recovery approach regularly tests how workloads fail and validates recovery procedures. Automation can be used to simulate different failures or recreate scenarios that have caused failures in the past.

-

Automate deployments and workloads

Automating deployments and workloads for the lakehouse helps standardize these processes, eliminate human error, improve productivity, and provide greater repeatability. This includes using “configuration as code” to avoid configuration drift, and “infrastructure as code” to automate the provisioning of all required lakehouse and cloud services.

-

Monitor systems and workloads

Workloads in the lakehouse typically integrate Databricks platform services and external cloud services, for example as data sources or targets. Successful execution can only occur if each service in the execution chain is functioning properly. When this is not the case, monitoring, alerting, and logging are important to detect and track problems and understand system behavior.