Parallelize Hyperopt hyperparameter tuning

The open-source version of Hyperopt is no longer being maintained.

Hyperopt is not included in Databricks Runtime for Machine Learning after 16.4 LTS ML. Databricks recommends using either Optuna for single-node optimization or Ray Tune for an experience similar to the deprecated Hyperopt distributed hyperparameter tuning functionality. Learn more about using Ray Tune on Databricks.

This notebook shows how to use Hyperopt to parallelize hyperparameter tuning calculations. It uses the SparkTrials class to automatically distribute calculations across the cluster workers. It also illustrates automated MLflow tracking of Hyperopt runs so you can save the results for later.
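The pattern the notebook follows can be sketched roughly as below. This is a minimal illustration, not the notebook's actual code: the quadratic objective, the search range, and the `max_evals` and `parallelism` values are assumptions chosen for brevity, and `run_tuning` is a hypothetical helper name. It assumes a cluster whose runtime still ships Hyperopt (Databricks Runtime 16.4 LTS ML or earlier) with MLflow available.

```python
def objective(params):
    """Toy objective (assumed for illustration): a quadratic minimized at x = 3."""
    x = params["x"]
    # "ok" is the value of hyperopt.STATUS_OK; Hyperopt minimizes "loss".
    return {"loss": (x - 3.0) ** 2, "status": "ok"}


def run_tuning(max_evals=32, parallelism=4):
    """Hypothetical helper: run a distributed search and return the best point."""
    # Imported here so that objective() can be exercised without a cluster.
    import mlflow
    from hyperopt import SparkTrials, fmin, hp, tpe

    # Search space: a single continuous hyperparameter (illustrative range).
    space = {"x": hp.uniform("x", -10, 10)}

    # SparkTrials sends each trial to a Spark worker; parallelism caps how
    # many trials are evaluated at the same time.
    spark_trials = SparkTrials(parallelism=parallelism)

    # Running fmin inside an MLflow run groups the tuning results under
    # that run so they can be reviewed later in the MLflow UI.
    with mlflow.start_run():
        best = fmin(
            fn=objective,
            space=space,
            algo=tpe.suggest,
            max_evals=max_evals,
            trials=spark_trials,
        )
    return best
```

Calling `run_tuning()` from a notebook cell attached to such a cluster returns a dictionary holding the best value found for `x`. A higher `parallelism` finishes sooner, but each new trial is then proposed with fewer completed results to learn from, so the value is a trade-off rather than something to maximize.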

Parallelize hyperparameter tuning with automated MLflow tracking notebook

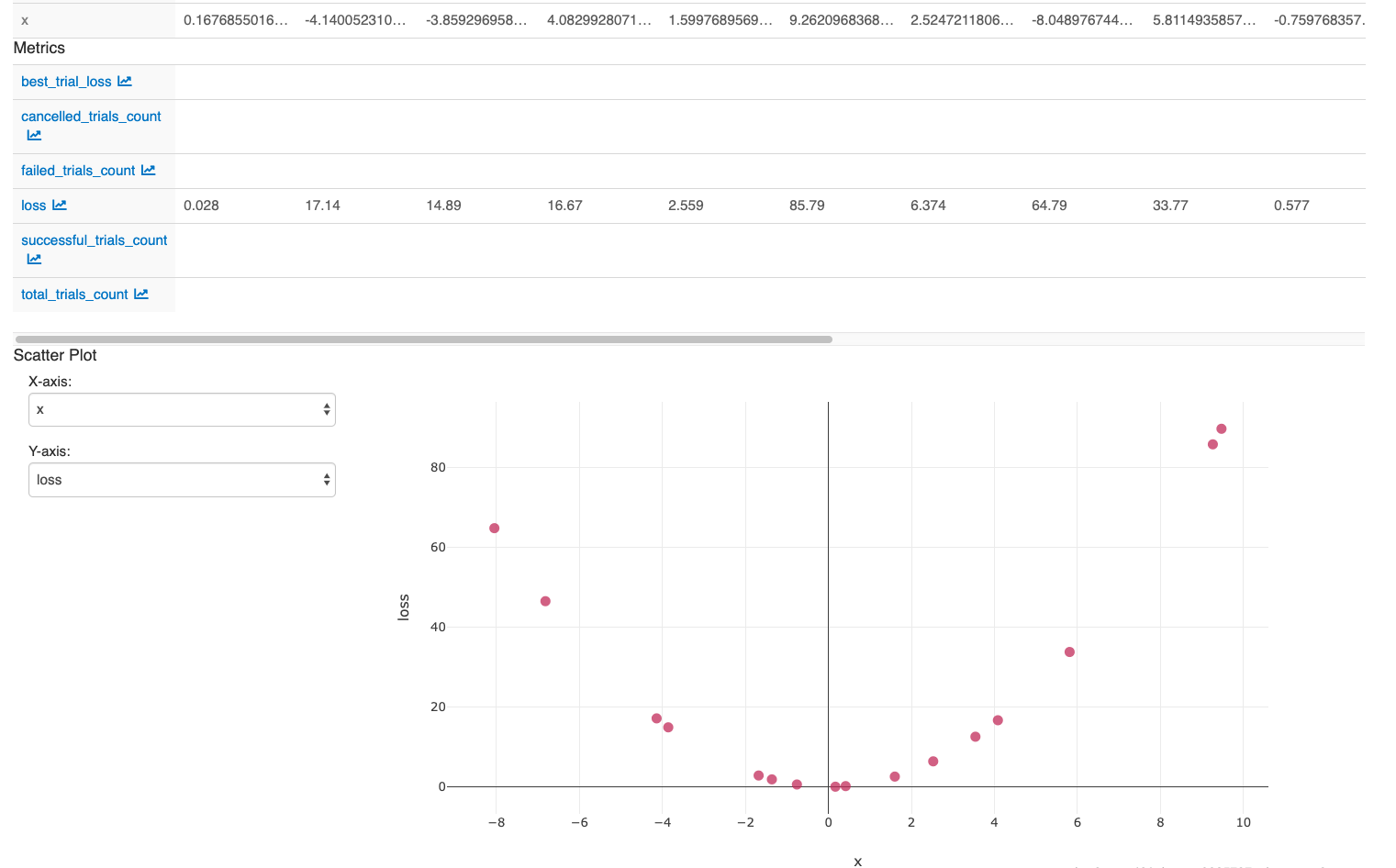

After you perform the actions in the last cell in the notebook, your MLflow UI should display: