Work with feature tables in Unity Catalog

This page describes how to create and work with feature tables in Unity Catalog.

This page applies only to workspaces that are enabled for Unity Catalog. If your workspace is not enabled for Unity Catalog, see Work with feature tables in Workspace Feature Store (legacy).

For details about the commands and parameters used in the examples on this page, see the Feature Engineering Python API reference.

Requirements

Feature Engineering in Unity Catalog requires Databricks Runtime 13.2 or above. In addition, the Unity Catalog metastore must have Privilege Model Version 1.0.

Install Feature Engineering in Unity Catalog Python client

Feature Engineering in Unity Catalog provides a Python client, FeatureEngineeringClient. The class is available on PyPI in the databricks-feature-engineering package and is pre-installed in Databricks Runtime 13.3 LTS ML and above. If you use a non-ML Databricks Runtime, you must install the client manually. Use the compatibility matrix to find the correct version for your Databricks Runtime version.

%pip install databricks-feature-engineering

dbutils.library.restartPython()
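To confirm that the client is available in the current environment before running the examples on this page, a check along the following lines may help. This is a minimal sketch that uses only the Python standard library. The helper name installed_client_version is hypothetical and is not part of the Feature Engineering API.

```python
from importlib import metadata
from typing import Optional


def installed_client_version(
    package: str = "databricks-feature-engineering",
) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_client_version()
if version is None:
    print("databricks-feature-engineering is not installed; install it with %pip.")
else:
    print(f"databricks-feature-engineering {version} is installed.")
```

You can compare the reported version against the compatibility matrix for your Databricks Runtime version.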

Create a catalog and a schema for feature tables in Unity Catalog

You must create a new catalog or use an existing catalog for feature tables.

To create a new catalog, you must have the CREATE CATALOG privilege on the metastore.

CREATE CATALOG IF NOT EXISTS <catalog-name>

To use an existing catalog, you must have the USE CATALOG privilege on the catalog.

USE CATALOG <catalog-name>

Feature tables in Unity Catalog must be stored in a schema. To create a new schema in the catalog, you must have the CREATE SCHEMA privilege on the catalog.

CREATE SCHEMA IF NOT EXISTS <schema-name>

Create a feature table in Unity Catalog

You can use an existing Delta table in Unity Catalog that includes a primary key constraint as a feature table. If the table does not have a primary key defined, you must update the table using ALTER TABLE DDL statements to add the constraint. See Use an existing Delta table in Unity Catalog as a feature table.

However, adding a primary key to a streaming table or materialized view that was published to Unity Catalog by Lakeflow Spark Declarative Pipelines requires modifying the schema of the streaming table or materialized view definition to include the primary key and then refreshing the streaming table or materialized view. See Use a streaming table or materialized view created by Lakeflow Spark Declarative Pipelines as a feature table.

Feature tables in Unity Catalog are Delta tables. Feature tables must have a primary key. Feature tables, like other data assets in Unity Catalog, are accessed using a three-level namespace: <catalog-name>.<schema-name>.<table-name>.
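As an illustration of the three-level namespace, the following sketch splits a full table name into its catalog, schema, and table parts and rejects names that do not have exactly three parts. The TableName type and parse_table_name helper are hypothetical names used only for this example, and the sketch assumes that no part contains a dot or a backtick-quoted identifier.

```python
from typing import NamedTuple


class TableName(NamedTuple):
    catalog: str
    schema: str
    table: str


def parse_table_name(full_name: str) -> TableName:
    """Split '<catalog-name>.<schema-name>.<table-name>' into its three parts.

    Simplification: assumes no part contains a dot or a quoted identifier.
    """
    parts = full_name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(
            f"Expected <catalog-name>.<schema-name>.<table-name>, got {full_name!r}"
        )
    return TableName(*parts)


name = parse_table_name("ml.recommender_system.customer_features")
print(name.catalog, name.schema, name.table)
```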

You can use Databricks SQL, the Python FeatureEngineeringClient, or Lakeflow Spark Declarative Pipelines to create feature tables in Unity Catalog.

- Databricks SQL

- Python

You can use any Delta table with a primary key constraint as a feature table. The following code shows how to create a table with a primary key:

CREATE TABLE ml.recommender_system.customer_features (

customer_id int NOT NULL,

feat1 long,

feat2 varchar(100),

CONSTRAINT customer_features_pk PRIMARY KEY (customer_id)

);

To create a time series feature table, add a time column as a primary key column and specify the TIMESERIES keyword. The TIMESERIES keyword requires Databricks Runtime 13.3 LTS or above.

CREATE TABLE ml.recommender_system.customer_features (

customer_id int NOT NULL,

ts timestamp NOT NULL,

feat1 long,

feat2 varchar(100),

CONSTRAINT customer_features_pk PRIMARY KEY (customer_id, ts TIMESERIES)

);

After the table is created, you can write data to it like other Delta tables, and it can be used as a feature table.

For details about the commands and parameters used in the following examples, see the Feature Engineering Python API reference.

- Write the Python functions to compute the features. The output of each function should be an Apache Spark DataFrame with a unique primary key. The primary key can consist of one or more columns.

- Create a feature table by instantiating a FeatureEngineeringClient and using create_table.

- Populate the feature table using write_table.

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

# Prepare feature DataFrame

def compute_customer_features(data):

    '''Feature computation code returns a DataFrame with 'customer_id' as primary key'''

    pass

customer_features_df = compute_customer_features(df)

# Create feature table with `customer_id` as the primary key.

# Take schema from DataFrame output by compute_customer_features

customer_feature_table = fe.create_table(

name='ml.recommender_system.customer_features',

primary_keys='customer_id',

schema=customer_features_df.schema,

description='Customer features'

)

# An alternative is to use `create_table` and specify the `df` argument.

# This code automatically saves the features to the underlying Delta table.

# customer_feature_table = fe.create_table(

# ...

# df=customer_features_df,

# ...

# )

# To use a composite primary key, pass all primary key columns in the create_table call

# customer_feature_table = fe.create_table(

# ...

# primary_keys=['customer_id', 'date'],

# ...

# )

# To create a time series table, set the timeseries_columns argument

# customer_feature_table = fe.create_table(

# ...

# primary_keys=['customer_id', 'date'],

# timeseries_columns='date',

# ...

# )
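The requirement that each feature computation function returns rows with a unique primary key can be checked before you create or write the table. The following is a minimal sketch of that check in plain Python, using a list of dictionaries as a stand-in for DataFrame rows. The helper duplicate_primary_keys is a hypothetical name and is not part of the Feature Engineering API. With a Spark DataFrame you would express the same idea as a group-and-count on the key columns.

```python
from collections import Counter
from typing import Any, Dict, Iterable, List, Sequence, Tuple


def duplicate_primary_keys(
    rows: Iterable[Dict[str, Any]], primary_keys: Sequence[str]
) -> List[Tuple[Any, ...]]:
    """Return the primary key values that occur in more than one row."""
    counts = Counter(tuple(row[k] for k in primary_keys) for row in rows)
    return [key for key, n in counts.items() if n > 1]


# Illustrative rows only; customer 2 appears twice for the same date.
rows = [
    {"customer_id": 1, "date": "2024-01-01", "feat1": 10},
    {"customer_id": 2, "date": "2024-01-01", "feat1": 20},
    {"customer_id": 2, "date": "2024-01-01", "feat1": 21},
]
print(duplicate_primary_keys(rows, ["customer_id", "date"]))
```

An empty result means that the chosen key columns uniquely identify each row.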

Create a feature table in Unity Catalog with Lakeflow Spark Declarative Pipelines

Lakeflow Spark Declarative Pipelines support for table constraints is in Public Preview. The following code examples must be run using the Lakeflow Spark Declarative Pipelines preview channel.

Any table published from Lakeflow Spark Declarative Pipelines that includes a primary key constraint can be used as a feature table. To create a table in a pipeline with a primary key, you can use either Databricks SQL or the Lakeflow Spark Declarative Pipelines Python programming interface.

To create a table in a pipeline with a primary key, use the following syntax:

- Databricks SQL

- Python

CREATE MATERIALIZED VIEW customer_features (

customer_id int NOT NULL,

feat1 long,

feat2 varchar(100),

CONSTRAINT customer_features_pk PRIMARY KEY (customer_id)

) AS SELECT * FROM ...;

from pyspark import pipelines as dp

@dp.materialized_view(

schema="""

customer_id int NOT NULL,

feat1 long,

feat2 varchar(100),

CONSTRAINT customer_features_pk PRIMARY KEY (customer_id)

""")

def customer_features():

    return ...

To create a time series feature table, add a time column as a primary key column and specify the TIMESERIES keyword.

- Databricks SQL

- Python

CREATE MATERIALIZED VIEW customer_features (

customer_id int NOT NULL,

ts timestamp NOT NULL,

feat1 long,

feat2 varchar(100),

CONSTRAINT customer_features_pk PRIMARY KEY (customer_id, ts TIMESERIES)

) AS SELECT * FROM ...;

from pyspark import pipelines as dp

@dp.materialized_view(

schema="""

customer_id int NOT NULL,

ts timestamp NOT NULL,

feat1 long,

feat2 varchar(100),

CONSTRAINT customer_features_pk PRIMARY KEY (customer_id, ts TIMESERIES)

""")

def customer_features():

    return ...

After the table is created, you can write data to it like other Lakeflow Spark Declarative Pipelines datasets, and it can be used as a feature table.

Use an existing Delta table in Unity Catalog as a feature table

Any Delta table in Unity Catalog with a primary key can be a feature table in Unity Catalog, and you can use the Features UI and API with the table.

- Only the table owner can declare primary key constraints. The owner's name is displayed on the table detail page of Catalog Explorer.

- Verify the data type in the Delta table is supported by Feature Engineering in Unity Catalog. See Supported data types.

- The TIMESERIES keyword requires Databricks Runtime 13.3 LTS or above.

If an existing Delta table does not have a primary key constraint, you can create one as follows:

- Set primary key columns to NOT NULL. For each primary key column, run:

ALTER TABLE <full_table_name> ALTER COLUMN <pk_col_name> SET NOT NULL

- Alter the table to add the primary key constraint:

ALTER TABLE <full_table_name> ADD CONSTRAINT <pk_name> PRIMARY KEY(pk_col1, pk_col2, ...)

pk_name is the name of the primary key constraint. By convention, you can use the table name (without schema and catalog) with a _pk suffix. For example, a table with the name "ml.recommender_system.customer_features" would have customer_features_pk as the name of its primary key constraint.

To make the table a time series feature table, specify the TIMESERIES keyword on one of the primary key columns, as follows:

ALTER TABLE <full_table_name> ADD CONSTRAINT <pk_name> PRIMARY KEY(pk_col1 TIMESERIES, pk_col2, ...)

After you add the primary key constraint on the table, the table appears in the Features UI and you can use it as a feature table.
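When several tables need the same treatment, the ALTER TABLE statements above can be generated from the table name and key columns. The following sketch only builds the SQL strings and applies the _pk naming convention described above. The helper primary_key_ddl is a hypothetical name, and the sketch assumes simple, unquoted identifiers. Running the statements (for example with spark.sql) is left to the caller.

```python
from typing import List, Optional, Sequence


def primary_key_ddl(
    full_table_name: str,
    pk_columns: Sequence[str],
    timeseries_column: Optional[str] = None,
) -> List[str]:
    """Build the NOT NULL and ADD CONSTRAINT statements for a feature table."""
    if not pk_columns:
        raise ValueError("At least one primary key column is required")
    if timeseries_column is not None and timeseries_column not in pk_columns:
        raise ValueError("The time series column must be a primary key column")

    # Convention from the text: table name without catalog and schema, plus _pk.
    pk_name = full_table_name.split(".")[-1] + "_pk"

    statements = [
        f"ALTER TABLE {full_table_name} ALTER COLUMN {col} SET NOT NULL"
        for col in pk_columns
    ]
    key_list = ", ".join(
        f"{col} TIMESERIES" if col == timeseries_column else col
        for col in pk_columns
    )
    statements.append(
        f"ALTER TABLE {full_table_name} ADD CONSTRAINT {pk_name} PRIMARY KEY({key_list})"
    )
    return statements


for statement in primary_key_ddl(
    "ml.recommender_system.customer_features", ["customer_id", "ts"], "ts"
):
    print(statement)
```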

Use a streaming table or materialized view created by Lakeflow Spark Declarative Pipelines as a feature table

Any streaming table or materialized view in Unity Catalog with a primary key can be a feature table in Unity Catalog, and you can use the Features UI and API with the table.

- Lakeflow Spark Declarative Pipelines support for table constraints is in Public Preview. The following code examples must be run using the Lakeflow Spark Declarative Pipelines preview channel.

- Only the table owner can declare primary key constraints. The owner's name is displayed on the table detail page of Catalog Explorer.

- Verify that Feature Engineering in Unity Catalog supports the data type in the Delta table. See Supported data types.

To set primary keys for an existing streaming table or materialized view, update the schema of the streaming table or materialized view in the notebook that manages the object. Then, refresh the table to update the Unity Catalog object.

The following is the syntax to add a primary key to a materialized view:

- Databricks SQL

- Python

CREATE OR REFRESH MATERIALIZED VIEW existing_live_table(

id int NOT NULL PRIMARY KEY,

...

) AS SELECT ...

from pyspark import pipelines as dp

@dp.materialized_view(

schema="""

id int NOT NULL PRIMARY KEY,

...

"""

)

def existing_live_table():

    return ...

Use an existing view in Unity Catalog as a feature table

To use a view as a feature table, you must use databricks-feature-engineering version 0.7.0 or above, which is built into Databricks Runtime 16.0 ML.

A simple SELECT view in Unity Catalog can be a feature table in Unity Catalog, and you can use the Features API with the table.

- A simple SELECT view is defined as a view created from a single Delta table in Unity Catalog that can be used as a feature table, and whose primary keys are selected without JOIN, GROUP BY, or DISTINCT clauses. Acceptable keywords in the SQL statement are SELECT, FROM, WHERE, ORDER BY, LIMIT, and OFFSET.

- See Supported data types for supported data types.

- Feature tables backed by views do not appear in the Features UI.

- If columns are renamed in the source Delta table, the columns in the SELECT statement for the view definition must be renamed to match.

Here is an example of a simple SELECT view that can be used as a feature table:

CREATE OR REPLACE VIEW ml.recommender_system.content_recommendation_subset AS

SELECT

user_id,

content_id,

user_age,

user_gender,

content_genre,

content_release_year,

user_content_watch_duration,

user_content_like_dislike_ratio

FROM

ml.recommender_system.content_recommendations_features

WHERE

user_age BETWEEN 18 AND 35

AND content_genre IN ('Drama', 'Comedy', 'Action')

AND content_release_year >= 2010

AND user_content_watch_duration > 60;

Feature tables based on views can be used for offline model training and evaluation. They cannot be published to online stores. Features from these tables and models based on these features cannot be served.
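Before registering a view as a feature table, it can be useful to screen its definition for the clauses that the simple SELECT rule excludes. The following is a rough heuristic sketch, not how Databricks validates views: it only looks for the JOIN, GROUP BY, and DISTINCT keywords with regular expressions, and it can report false positives if those words appear inside string literals or comments. The helper disallowed_clauses is a hypothetical name.

```python
import re
from typing import List

# Clauses that the text excludes from a simple SELECT view.
_DISALLOWED = {
    "JOIN": re.compile(r"\bJOIN\b", re.IGNORECASE),
    "GROUP BY": re.compile(r"\bGROUP\s+BY\b", re.IGNORECASE),
    "DISTINCT": re.compile(r"\bDISTINCT\b", re.IGNORECASE),
}


def disallowed_clauses(view_sql: str) -> List[str]:
    """Return the excluded clauses found in a view definition (keyword match only)."""
    return [name for name, pattern in _DISALLOWED.items() if pattern.search(view_sql)]


view_sql = """
SELECT user_id, content_id, user_age
FROM ml.recommender_system.content_recommendations_features
WHERE user_age BETWEEN 18 AND 35
"""
print(disallowed_clauses(view_sql))
```

An empty list means that none of the three excluded clauses were found by this keyword check. It does not prove that the view meets every requirement listed above.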

Update a feature table in Unity Catalog

You can update a feature table in Unity Catalog by adding new features or by modifying specific rows based on the primary key.

The following feature table metadata should not be updated:

- Primary key.

- Partition key.

- Name or data type of an existing feature.

Altering them will cause downstream pipelines that use features for training and serving models to break.
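One way to guard against these changes is to compare the current and proposed schemas before writing. The following sketch works on plain dictionaries that map column names to type names, which you could build from a DataFrame schema. It only covers the name and data type of existing features, and it does not inspect primary or partition keys. The helper breaking_schema_changes is a hypothetical name and is not part of the Feature Engineering API.

```python
from typing import Dict, List


def breaking_schema_changes(
    current: Dict[str, str], proposed: Dict[str, str]
) -> List[str]:
    """List existing columns that are missing or have a different type in `proposed`."""
    problems = []
    for column, dtype in current.items():
        if column not in proposed:
            problems.append(f"{column}: missing (renamed or dropped)")
        elif proposed[column] != dtype:
            problems.append(
                f"{column}: type changed from {dtype} to {proposed[column]}"
            )
    return problems


current = {"customer_id": "int", "feat1": "long", "feat2": "string"}
proposed = {"customer_id": "int", "feat1": "double", "feat3": "string"}
for problem in breaking_schema_changes(current, proposed):
    print(problem)
```

New columns, such as feat3 in this example, are not reported because adding features is a supported update.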

Add new features to an existing feature table in Unity Catalog

You can add new features to an existing feature table in one of two ways:

- Update the existing feature computation function and run write_table with the returned DataFrame. This updates the feature table schema and merges new feature values based on the primary key.

- Create a new feature computation function to calculate the new feature values. The DataFrame returned by this new computation function must contain the feature table's primary and partition keys (if defined). Run write_table with the DataFrame to write the new features to the existing feature table using the same primary key.
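For the second approach, the new DataFrame must carry the key columns of the existing table. The following sketch checks a list of column names (for a Spark DataFrame, df.columns) against the primary and partition keys. The helper missing_key_columns is a hypothetical name used only for illustration.

```python
from typing import List, Sequence


def missing_key_columns(
    df_columns: Sequence[str],
    primary_keys: Sequence[str],
    partition_columns: Sequence[str] = (),
) -> List[str]:
    """Return the primary and partition key columns that are absent from df_columns."""
    required = list(primary_keys) + [
        col for col in partition_columns if col not in primary_keys
    ]
    return [col for col in required if col not in df_columns]


# Column names of a hypothetical DataFrame that adds the feature feat3.
new_feature_columns = ["customer_id", "feat3"]
print(missing_key_columns(new_feature_columns, ["date", "customer_id"], ["date"]))
```

If the result is not empty, add the listed columns to the output of the feature computation function before calling write_table.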

Update only specific rows in a feature table

Use mode = "merge" in write_table. Rows whose primary key does not exist in the DataFrame sent in the write_table call remain unchanged.

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

fe.write_table(

name='ml.recommender_system.customer_features',

df = customer_features_df,

mode = 'merge'

)
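To make the merge behavior concrete, the following sketch models an upsert keyed on the primary key in plain Python, with lists of dictionaries standing in for the feature table and the DataFrame. It is a conceptual model and not the implementation of write_table. It assumes that columns missing from an updated row keep their existing values. The helper merge_rows is a hypothetical name.

```python
from typing import Any, Dict, List, Sequence


def merge_rows(
    table: List[Dict[str, Any]],
    updates: List[Dict[str, Any]],
    primary_keys: Sequence[str],
) -> List[Dict[str, Any]]:
    """Upsert `updates` into `table`, matching rows on the primary key columns."""
    merged = {tuple(row[k] for k in primary_keys): dict(row) for row in table}
    for row in updates:
        key = tuple(row[k] for k in primary_keys)
        # Matching keys are updated in place; new keys are inserted.
        merged.setdefault(key, {}).update(row)
    return list(merged.values())


table = [
    {"customer_id": 1, "feat1": 10},
    {"customer_id": 2, "feat1": 20},
]
updates = [
    {"customer_id": 2, "feat1": 25},
    {"customer_id": 3, "feat1": 30},
]
print(merge_rows(table, updates, ["customer_id"]))
```

In this model, customer 1 is unchanged because its key does not appear in the updates, customer 2 is updated, and customer 3 is inserted.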

Schedule a job to update a feature table

To ensure that features in feature tables always have the most recent values, Databricks recommends that you create a job that runs a notebook to update your feature table on a regular basis, such as every day. If you already have a non-scheduled job created, you can convert it to a scheduled job to ensure the feature values are always up-to-date. See Lakeflow Jobs.

Code to update a feature table uses mode='merge', as shown in the following example.

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

customer_features_df = compute_customer_features(data)

fe.write_table(

df=customer_features_df,

name='ml.recommender_system.customer_features',

mode='merge'

)

Store past values of daily features

Define a feature table with a composite primary key. Include the date in the primary key. For example, for a feature table customer_features, you might use a composite primary key (date, customer_id) and partition key date for efficient reads.

Databricks recommends that you enable liquid clustering on the table for efficient reads. If you do not use liquid clustering, set the date column as a partition key for better read performance.

- Databricks SQL

- Python

CREATE TABLE ml.recommender_system.customer_features (

customer_id int NOT NULL,

`date` date NOT NULL,

feat1 long,

feat2 varchar(100),

CONSTRAINT customer_features_pk PRIMARY KEY (`date`, customer_id)

)

-- If you are not using liquid clustering, uncomment the following line.

-- PARTITIONED BY (`date`)

COMMENT "Customer features";

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

fe.create_table(

name='ml.recommender_system.customer_features',

primary_keys=['date', 'customer_id'],

# If you are not using liquid clustering, uncomment the following line.

# partition_columns=['date'],

schema=customer_features_df.schema,

description='Customer features'

)

You can then write code that reads from the feature table and filters the date column to the time period of interest.

You can also create a time series feature table which enables point-in-time lookups when you use create_training_set or score_batch. See Create a feature table in Unity Catalog.
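The idea behind a point-in-time lookup can be sketched in plain Python: for a given key and lookup time, return the most recent feature values recorded at or before that time, so that later values never leak into training data. This is a conceptual model only and not how create_training_set or score_batch are implemented. The helper as_of_lookup is a hypothetical name, and treating an equal timestamp as a match is an assumption of this sketch.

```python
from bisect import bisect_right
from datetime import date
from typing import Any, Dict, List, Optional, Tuple


def as_of_lookup(
    history: List[Tuple[date, Dict[str, Any]]], as_of: date
) -> Optional[Dict[str, Any]]:
    """Return the latest feature values with a timestamp <= as_of, or None.

    `history` must be sorted in ascending order by timestamp.
    """
    timestamps = [ts for ts, _ in history]
    index = bisect_right(timestamps, as_of)
    return history[index - 1][1] if index > 0 else None


# Illustrative history for a single customer.
history = [
    (date(2024, 1, 1), {"feat1": 1}),
    (date(2024, 1, 5), {"feat1": 2}),
]
print(as_of_lookup(history, date(2024, 1, 3)))
```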

To keep the feature table up to date, set up a regularly scheduled job to write features or stream new feature values into the feature table.

Create a streaming feature computation pipeline to update features

To create a streaming feature computation pipeline, pass a streaming DataFrame as an argument to write_table. This method returns a StreamingQuery object.

def compute_additional_customer_features(data):

    '''Returns a streaming DataFrame'''

    pass

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

customer_transactions = spark.readStream.table("prod.events.customer_transactions")

stream_df = compute_additional_customer_features(customer_transactions)

fe.write_table(

df=stream_df,

name='ml.recommender_system.customer_features',

mode='merge'

)

Read from a feature table in Unity Catalog

Use read_table to read feature values.

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

customer_features_df = fe.read_table(

name='ml.recommender_system.customer_features',

)

Search and browse feature tables in Unity Catalog

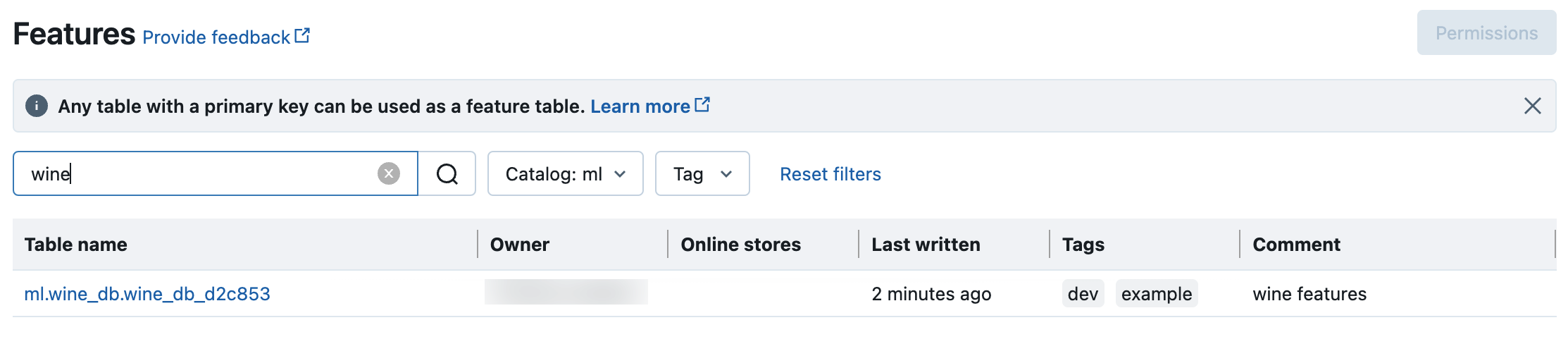

Use the Features UI to search for or browse feature tables in Unity Catalog.

- Click Features in the sidebar to display the Features UI.

- Select a catalog with the catalog selector to view all of the available feature tables in that catalog. In the search box, enter all or part of the name of a feature table, a feature, or a comment. You can also enter all or part of the key or value of a tag. Search text is case-insensitive.

Get metadata of feature tables in Unity Catalog

Use get_table to get feature table metadata.

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

ft = fe.get_table(name="ml.recommender_system.user_feature_table")

print(ft.features)

Use tags with feature tables and features in Unity Catalog

You can use tags, which are simple key-value pairs, to categorize and manage your feature tables and features.

For feature tables, you can create, edit, and delete tags using Catalog Explorer, SQL statements in a notebook or SQL query editor, or the Feature Engineering Python API.

For features, you can create, edit, and delete tags using Catalog Explorer or SQL statements in a notebook or SQL query editor.

See Apply tags to Unity Catalog securable objects and Python API.

The following example shows how to use the Feature Engineering Python API to create, update, and delete feature table tags.

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

# Create feature table with tags

customer_feature_table = fe.create_table(

# ...

tags={"tag_key_1": "tag_value_1", "tag_key_2": "tag_value_2", ...},

# ...

)

# Upsert a tag

fe.set_feature_table_tag(name="customer_feature_table", key="tag_key_1", value="new_key_value")

# Delete a tag

fe.delete_feature_table_tag(name="customer_feature_table", key="tag_key_2")

Delete a feature table in Unity Catalog

You can delete a feature table in Unity Catalog by directly deleting the Delta table in Unity Catalog using Catalog Explorer or using the Feature Engineering Python API.

- Deleting a feature table can lead to unexpected failures in upstream producers and downstream consumers (models, endpoints, and scheduled jobs). You must delete published online stores with your cloud provider.

- When you delete a feature table in Unity Catalog, the underlying Delta table is also dropped.

- drop_table is not supported in Databricks Runtime 13.1 ML or below. Use a SQL command to delete the table instead.

You can use Databricks SQL or FeatureEngineeringClient.drop_table to delete a feature table in Unity Catalog:

- Databricks SQL

- Python

DROP TABLE ml.recommender_system.customer_features;

from databricks.feature_engineering import FeatureEngineeringClient

fe = FeatureEngineeringClient()

fe.drop_table(

name='ml.recommender_system.customer_features'

)

Share a feature table in Unity Catalog across workspaces or accounts

A feature table in Unity Catalog is accessible to all workspaces assigned to the table's Unity Catalog metastore.

To share a feature table with workspaces that are not assigned to the same Unity Catalog metastore, use Delta Sharing.

Troubleshooting

Error message: Feature tables must have a primary key

The feature table must have a primary key constraint. See Use an existing Delta table in Unity Catalog as a feature table.

Example notebooks

The basic notebook shows how to create a feature table, use it to train a model, and run batch scoring using automatic feature lookup. It also shows the Feature Engineering UI, which you can use to search for features and understand how features are created and used.

Basic Feature Engineering in Unity Catalog example notebook

The taxi example notebook illustrates the process of creating features, updating them, and using them for model training and batch inference.