Configure access to resources from model serving endpoints

This article describes how to configure access to external and private resources from model serving endpoints. Model Serving supports plain text environment variables and secrets-based environment variables using Databricks secrets.

Requirements

For secrets-based environment variables:

- The endpoint creator must have READ access to the Databricks secrets referenced in the endpoint configuration.

- Credentials such as API keys or other tokens must be stored as Databricks secrets.

Add plain text environment variables

Use plain text environment variables for values that don't need to be hidden. You can set them when you create or update an endpoint by using any of the following:

- Serving UI

- REST API

- WorkspaceClient SDK

- MLflow Deployments SDK

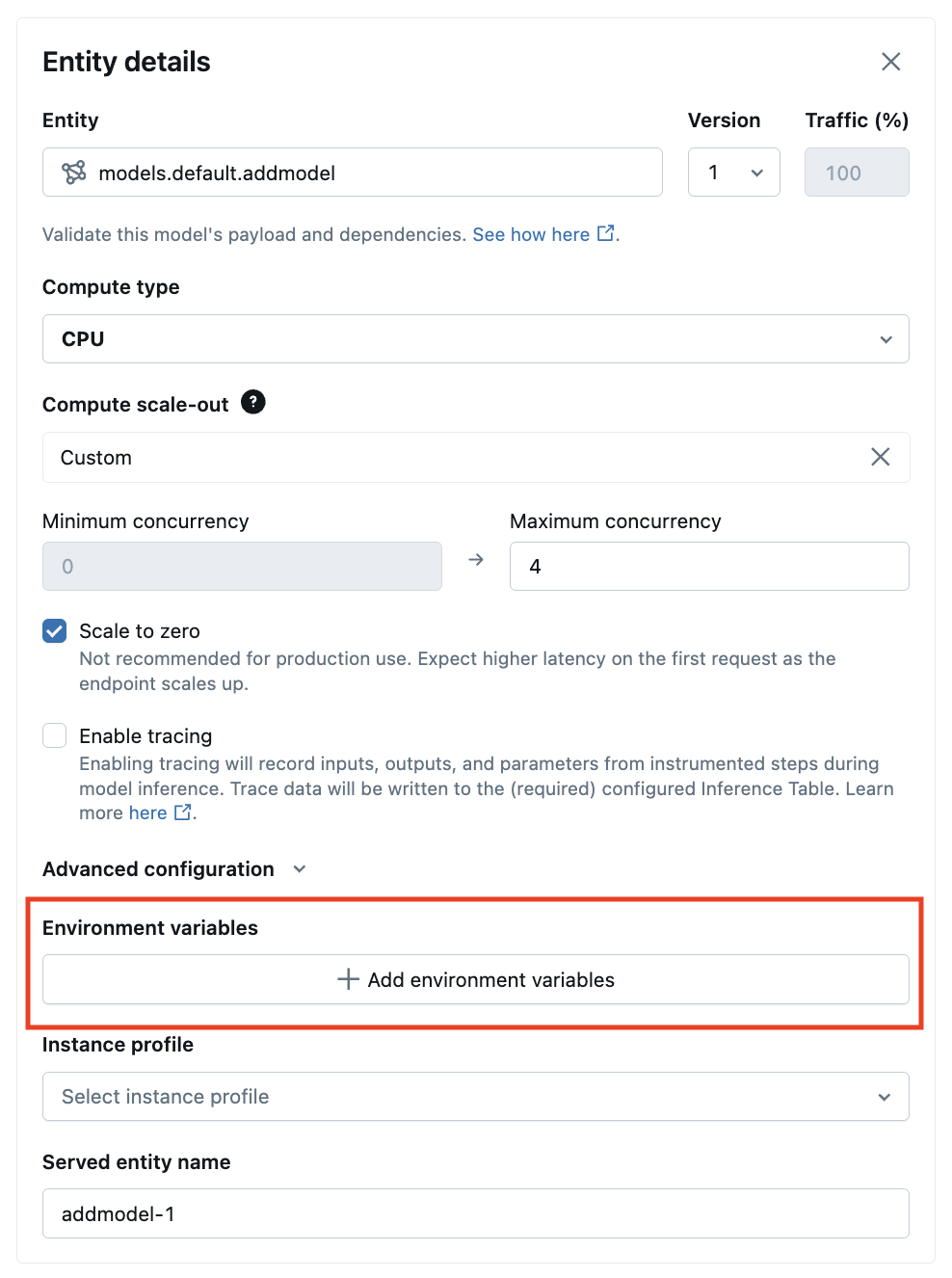

From the Serving UI, you can add an environment variable in Advanced configurations:

The following is an example for creating a serving endpoint using the POST /api/2.0/serving-endpoints REST API and the environment_vars field to configure your environment variable.

```json
{
  "name": "endpoint-name",
  "config": {
    "served_entities": [
      {
        "entity_name": "model-name",
        "entity_version": "1",
        "workload_size": "Small",
        "scale_to_zero_enabled": true,
        "environment_vars": {
          "TEXT_ENV_VAR_NAME": "plain-text-env-value"
        }
      }
    ]
  }
}
```
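You can send the request above from Python without extra dependencies. The following is a minimal sketch that uses only the standard library. It assumes your workspace URL and a personal access token come from the DATABRICKS_HOST and DATABRICKS_TOKEN environment variables, and that the token is sent as a bearer token. The build_create_request and send helpers are hypothetical, not part of any Databricks library. Endpoint settings other than the environment variables are copied from the example above.

```python
import json
import urllib.request


def build_create_request(host, token, endpoint_name, entity_name,
                         entity_version, environment_vars):
    """Build (but do not send) a POST /api/2.0/serving-endpoints request."""
    # Assumption: environment_vars is a string-to-string map, so reject
    # non-string values early instead of waiting for the API to reject them.
    for name, value in environment_vars.items():
        if not isinstance(value, str):
            raise TypeError(f"value for {name!r} must be a string")
    payload = {
        "name": endpoint_name,
        "config": {
            "served_entities": [
                {
                    "entity_name": entity_name,
                    "entity_version": entity_version,
                    "workload_size": "Small",
                    "scale_to_zero_enabled": True,
                    "environment_vars": environment_vars,
                }
            ]
        },
    }
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/serving-endpoints",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Personal access tokens are sent as bearer tokens.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send a prepared request and return the decoded JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

For example, call `send(build_create_request(os.environ["DATABRICKS_HOST"], os.environ["DATABRICKS_TOKEN"], "endpoint-name", "model-name", "1", {"TEXT_ENV_VAR_NAME": "plain-text-env-value"}))`. Building the request separately from sending it lets you inspect the payload before anything is created.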

The following is an example for creating a serving endpoint using the WorkspaceClient SDK and the environment_vars field to configure your environment variable.

```python
from databricks.sdk import WorkspaceClient
from databricks.sdk.service.serving import (
    EndpointCoreConfigInput,
    ServedEntityInput,
    ServingModelWorkloadType,
)

w = WorkspaceClient()

endpoint_name = "example-add-model"
model_name = "main.default.addmodel"

w.serving_endpoints.create_and_wait(
    name=endpoint_name,
    config=EndpointCoreConfigInput(
        served_entities=[
            ServedEntityInput(
                entity_name=model_name,
                entity_version="2",
                workload_type=ServingModelWorkloadType.CPU,
                workload_size="Small",
                scale_to_zero_enabled=False,
                environment_vars={
                    "MY_ENV_VAR": "value_to_be_injected",
                    # Plain-text values are for illustration only; store real
                    # tokens as secrets (see the secrets-based section below).
                    "ADS_TOKEN": "abcdefg-1234",
                },
            )
        ]
    ),
)
```

The following is an example for creating a serving endpoint using the MLflow Deployments SDK and the environment_vars field to configure your environment variable.

```python
from mlflow.deployments import get_deploy_client

client = get_deploy_client("databricks")

endpoint = client.create_endpoint(
    name="unity-catalog-model-endpoint",
    config={
        "served_entities": [
            {
                "name": "ads-entity",
                "entity_name": "catalog.schema.my-ads-model",
                "entity_version": "3",
                "workload_size": "Small",
                "scale_to_zero_enabled": True,
                "environment_vars": {
                    "MY_ENV_VAR": "value_to_be_injected",
                    "ADS_TOKEN": "abcdefg-1234",
                },
            }
        ]
    },
)
```

Log feature lookup DataFrames to inference tables

If inference tables are enabled on your endpoint, you can log the DataFrame produced by automatic feature lookup to that inference table by setting ENABLE_FEATURE_TRACING. This requires MLflow 2.14.0 or above.

Set ENABLE_FEATURE_TRACING to true as an environment variable in the Serving UI, the REST API, or an SDK when you create or update an endpoint.
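Feature tracing only takes effect with MLflow 2.14.0 or above, so you may want to guard it in a deployment script. The following is a minimal sketch under that assumption. parse_version and with_feature_tracing are hypothetical helpers. Which MLflow version to check is up to you, for example the version pinned in your model's environment. Pre-release suffixes such as "rc1" are ignored.

```python
import re

# Minimum MLflow version stated for ENABLE_FEATURE_TRACING.
MIN_MLFLOW = (2, 14, 0)


def parse_version(version):
    """Parse "major.minor[.patch]" into a tuple of ints, ignoring suffixes."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch = match.groups(default="0")
    return int(major), int(minor), int(patch)


def with_feature_tracing(environment_vars, mlflow_version):
    """Return a copy of environment_vars with feature tracing enabled."""
    if parse_version(mlflow_version) < MIN_MLFLOW:
        raise ValueError(
            f"ENABLE_FEATURE_TRACING requires MLflow 2.14.0+, got {mlflow_version}")
    updated = dict(environment_vars)  # leave the caller's dict untouched
    updated["ENABLE_FEATURE_TRACING"] = "true"  # values are strings
    return updated
```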

- Serving UI

- REST API

- WorkspaceClient SDK

- MLflow Deployments SDK

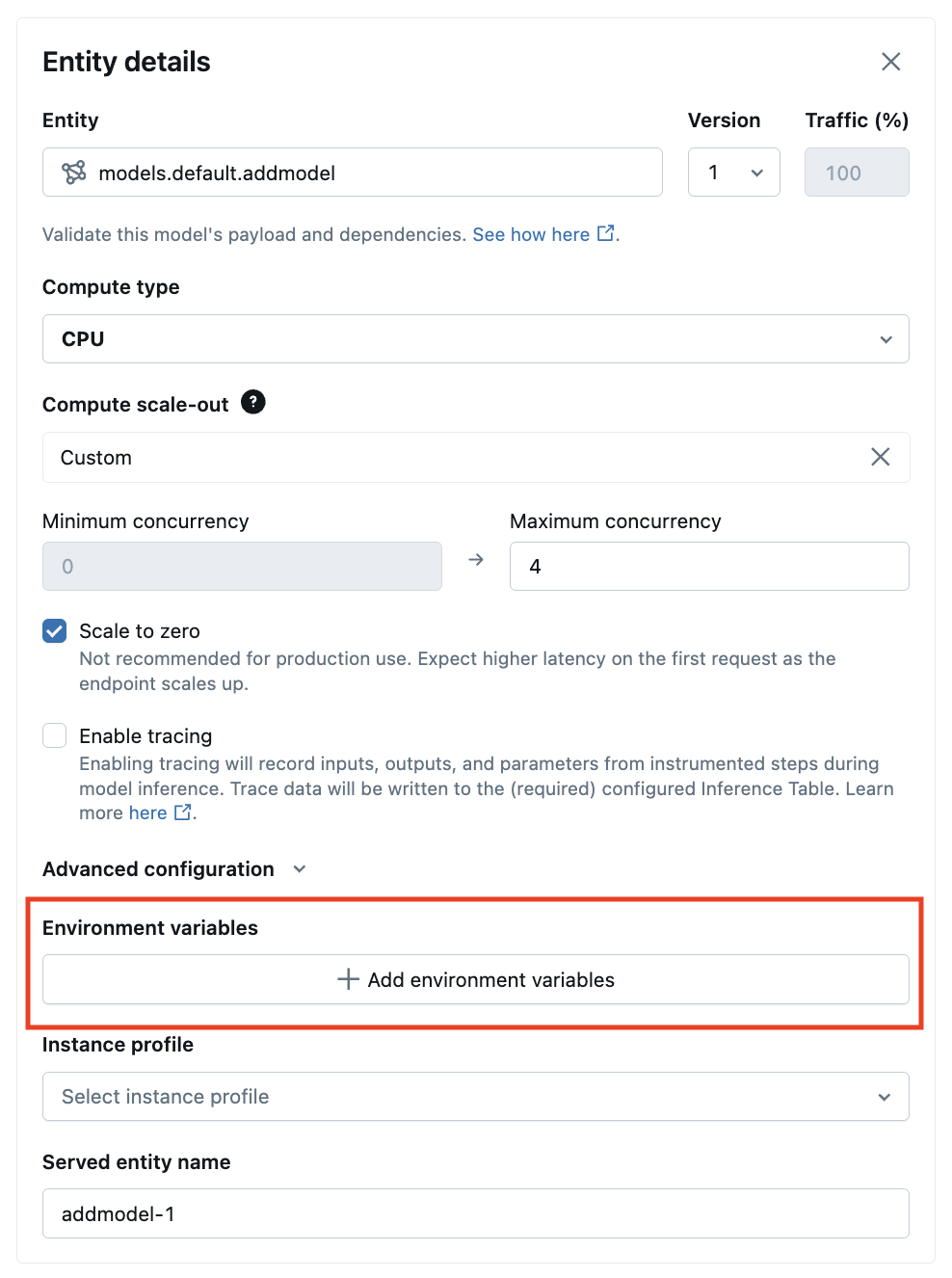

- In Advanced configurations, select ** + Add environment variables**.

- Type

ENABLE_FEATURE_TRACINGas the environment name. - In the field to the right type

true.

The following is an example for creating a serving endpoint using the POST /api/2.0/serving-endpoints REST API and the environment_vars field to configure the ENABLE_FEATURE_TRACING environment variable.

```json
{
  "name": "endpoint-name",
  "config": {
    "served_entities": [
      {
        "entity_name": "model-name",
        "entity_version": "1",
        "workload_size": "Small",
        "scale_to_zero_enabled": true,
        "environment_vars": {
          "ENABLE_FEATURE_TRACING": "true"
        }
      }
    ]
  }
}
```

The following is an example for creating a serving endpoint using the WorkspaceClient SDK and the environment_vars field to configure the ENABLE_FEATURE_TRACING environment variable.

```python
from databricks.sdk import WorkspaceClient
from databricks.sdk.service.serving import (
    EndpointCoreConfigInput,
    ServedEntityInput,
    ServingModelWorkloadType,
)

w = WorkspaceClient()

endpoint_name = "example-add-model"
model_name = "main.default.addmodel"

w.serving_endpoints.create_and_wait(
    name=endpoint_name,
    config=EndpointCoreConfigInput(
        served_entities=[
            ServedEntityInput(
                entity_name=model_name,
                entity_version="2",
                workload_type=ServingModelWorkloadType.CPU,
                workload_size="Small",
                scale_to_zero_enabled=False,
                environment_vars={
                    "ENABLE_FEATURE_TRACING": "true",
                },
            )
        ]
    ),
)
```

The following is an example for creating a serving endpoint using the MLflow Deployments SDK and the environment_vars field to configure the ENABLE_FEATURE_TRACING environment variable.

```python
from mlflow.deployments import get_deploy_client

client = get_deploy_client("databricks")

endpoint = client.create_endpoint(
    name="unity-catalog-model-endpoint",
    config={
        "served_entities": [
            {
                "name": "ads-entity",
                "entity_name": "catalog.schema.my-ads-model",
                "entity_version": "3",
                "workload_size": "Small",
                "scale_to_zero_enabled": True,
                "environment_vars": {
                    "ENABLE_FEATURE_TRACING": "true",
                },
            }
        ]
    },
)
```

Add secrets-based environment variables

You can store credentials securely using Databricks secrets and reference those secrets in model serving through secrets-based environment variables. Model serving endpoints then fetch the credentials at serving time.

For example, you can pass credentials to call OpenAI and other external model endpoints or access external data storage locations directly from model serving.

Databricks recommends this feature for deploying OpenAI and LangChain MLflow model flavors to serving. It also applies to other SaaS models that require credentials, provided that the model reads its API keys or tokens from environment variables.

Step 1: Create a secret scope

During model serving, secrets are retrieved from Databricks secrets by secret scope and key. Their values are then assigned to the environment variable names you configure, where your model can read them.

First, create a secret scope. See Manage secret scopes.

To create a scope with the Databricks CLI, run:

```bash
databricks secrets create-scope my_secret_scope
```

Then add your secret to the scope under a key:

```bash
databricks secrets put-secret my_secret_scope my_secret_key
```

You then pass the secret reference and the environment variable name in your endpoint configuration. You can do this either when you create the endpoint or as an update to the configuration of an existing endpoint.
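If you prefer Python to the CLI, you can sketch the same two steps with the Databricks SDK for Python. This assumes the WorkspaceClient secrets API (secrets.list_scopes, secrets.create_scope, and secrets.put_secret). store_secret is a hypothetical helper. It takes the client as a parameter so it can be exercised without a workspace, and it skips scope creation when the scope already exists.

```python
def store_secret(workspace_client, scope, key, value):
    """Create the secret scope if it is missing, then store value under key.

    workspace_client is expected to be a databricks.sdk.WorkspaceClient
    (or any object exposing the same secrets methods).
    """
    secrets = workspace_client.secrets
    # Creating a scope that already exists fails, so check first.
    existing = {s.name for s in secrets.list_scopes()}
    if scope not in existing:
        secrets.create_scope(scope=scope)
    secrets.put_secret(scope=scope, key=key, string_value=value)
```

For example, `store_secret(WorkspaceClient(), "my_secret_scope", "my_secret_key", getpass.getpass())` reads the value interactively instead of hard-coding it in a notebook or script.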

Step 2: Add secret scopes to endpoint configuration

Assign the secret to an environment variable and pass that variable to your endpoint during endpoint creation or a configuration update. See Create custom model serving endpoints.

- Serving UI

- REST API

- WorkspaceClient SDK

- MLflow Deployments SDK

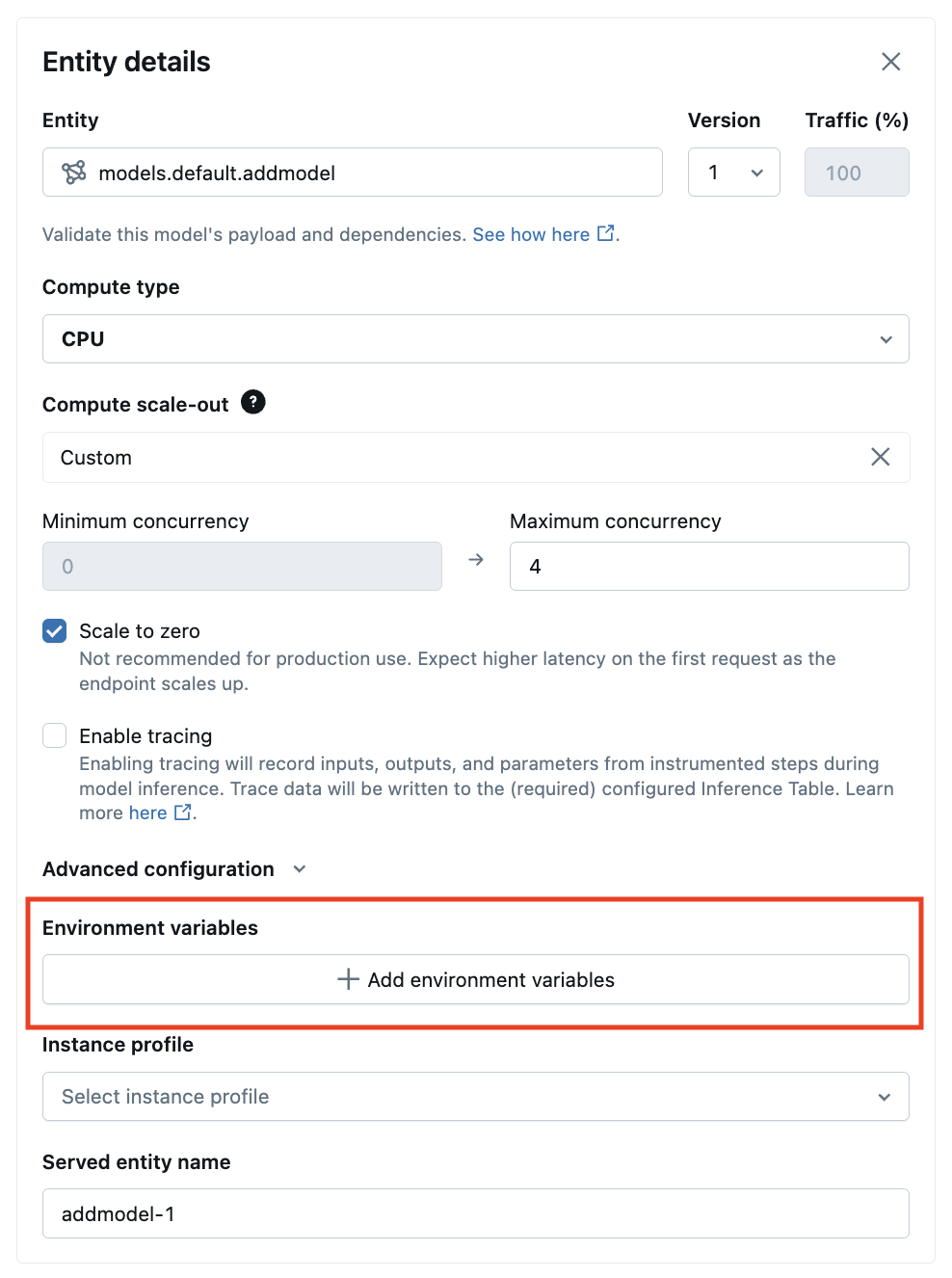

From the Serving UI, you can add an environment variable in Advanced configurations. The secrets based environment variable must be provided using the following syntax: {{secrets/scope/key}}. Otherwise, the environment variable is considered a plain text environment variable.
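Any value that does not match {{secrets/scope/key}} is treated as plain text, so a typo can silently turn a secret reference into a literal string. The following minimal sketch mirrors that rule so a deployment script can catch the mistake before submitting a config. secret_ref and parse_secret_ref are hypothetical helpers, and the pattern only approximates what the service accepts.

```python
import re

# Approximates the {{secrets/<scope>/<key>}} form; scope and key may not
# contain "/" or braces in this sketch.
_SECRET_REF = re.compile(r"\{\{secrets/([^/{}]+)/([^/{}]+)\}\}")


def secret_ref(scope, key):
    """Build the reference string for a secret, e.g. {{secrets/scope/key}}."""
    if "/" in scope or "/" in key:
        raise ValueError("scope and key must not contain '/'")
    return "{{secrets/" + scope + "/" + key + "}}"


def parse_secret_ref(value):
    """Return (scope, key) if value is a secret reference, else None."""
    match = _SECRET_REF.fullmatch(value)
    return match.groups() if match else None
```

For example, `{"OPENAI_API_KEY": secret_ref("my_secret_scope", "my_secret_key")}` produces the same environment_vars entry used in the examples that follow.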

The following is an example of creating a serving endpoint using the REST API. When you create an endpoint or update its configuration, you can specify secrets-based environment variables for each served entity in the environment_vars field of the request.

The following example assigns the value of the secret created in Step 1 to the environment variable OPENAI_API_KEY.

```json
{
  "name": "endpoint-name",
  "config": {
    "served_entities": [
      {
        "entity_name": "model-name",
        "entity_version": "1",
        "workload_size": "Small",
        "scale_to_zero_enabled": true,
        "environment_vars": {
          "OPENAI_API_KEY": "{{secrets/my_secret_scope/my_secret_key}}"
        }
      }
    ]
  }
}
```

You can also update a serving endpoint, as in the following PUT /api/2.0/serving-endpoints/{name}/config REST API example:

```json
{
  "served_entities": [
    {
      "entity_name": "model-name",
      "entity_version": "2",
      "workload_size": "Small",
      "scale_to_zero_enabled": true,
      "environment_vars": {
        "OPENAI_API_KEY": "{{secrets/my_secret_scope/my_secret_key}}"
      }
    }
  ]
}
```
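The following is a minimal standard-library sketch of sending this update from Python. It assumes bearer-token authentication with a personal access token. build_update_config_request is a hypothetical helper. The secret reference string is sent as-is, and model serving resolves it; the secret value never passes through your script.

```python
import json
import urllib.parse
import urllib.request


def build_update_config_request(host, token, endpoint_name, served_entities):
    """Build (but do not send) a PUT /api/2.0/serving-endpoints/{name}/config request."""
    # Quote the endpoint name so it is safe to embed in the URL path.
    name = urllib.parse.quote(endpoint_name, safe="")
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/serving-endpoints/{name}/config",
        data=json.dumps({"served_entities": served_entities}).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="PUT",
    )
```

Pass the result to `urllib.request.urlopen` to apply the change.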

The following is an example of creating a serving endpoint using the WorkspaceClient SDK. When you create an endpoint or update its configuration, you can specify secrets-based environment variables for each served entity in the environment_vars field.

The following example assigns the value of the secret created in Step 1 to the environment variable OPENAI_API_KEY.

```python
from databricks.sdk import WorkspaceClient
from databricks.sdk.service.serving import (
    EndpointCoreConfigInput,
    ServedEntityInput,
    ServingModelWorkloadType,
)

w = WorkspaceClient()

endpoint_name = "example-add-model"
model_name = "main.default.addmodel"

w.serving_endpoints.create_and_wait(
    name=endpoint_name,
    config=EndpointCoreConfigInput(
        served_entities=[
            ServedEntityInput(
                entity_name=model_name,
                entity_version="2",
                workload_type=ServingModelWorkloadType.CPU,
                workload_size="Small",
                scale_to_zero_enabled=False,
                environment_vars={
                    "OPENAI_API_KEY": "{{secrets/my_secret_scope/my_secret_key}}",
                },
            )
        ]
    ),
)
```

The following is an example of creating a serving endpoint using the MLflow Deployments SDK. When you create an endpoint or update its configuration, you can specify secrets-based environment variables for each served entity in the environment_vars field.

The following example assigns the value of the secret created in Step 1 to the environment variable OPENAI_API_KEY.

```python
from mlflow.deployments import get_deploy_client

client = get_deploy_client("databricks")

endpoint = client.create_endpoint(
    name="unity-catalog-model-endpoint",
    config={
        "served_entities": [
            {
                "name": "ads-entity",
                "entity_name": "catalog.schema.my-ads-model",
                "entity_version": "3",
                "workload_size": "Small",
                "scale_to_zero_enabled": True,
                "environment_vars": {
                    "OPENAI_API_KEY": "{{secrets/my_secret_scope/my_secret_key}}",
                },
            }
        ]
    },
)
```

After the endpoint is created or updated, model serving automatically fetches the secret value from the Databricks secret scope and sets the environment variable for your model inference code to use.
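Inside the model, the value arrives as an ordinary environment variable. The following minimal sketch reads it with os.environ and fails with a clear message when the endpoint was deployed without it. ExampleModel, require_env, and MissingEnvironmentVariable are hypothetical. In an MLflow pyfunc model, you would typically put the same lines in your PythonModel's load_context method; that placement is an assumption about how your model is structured.

```python
import os


class MissingEnvironmentVariable(RuntimeError):
    """Raised when a required variable was not configured on the endpoint."""


def require_env(name):
    """Return the value of an environment variable, failing if unset or empty."""
    value = os.environ.get(name)
    if not value:
        raise MissingEnvironmentVariable(
            f"{name} is not set; check environment_vars in the endpoint config")
    return value


class ExampleModel:
    """Hypothetical model wrapper that reads its configuration at load time."""

    def load_context(self, context=None):
        # Secrets-based variable: read once and never log or return it.
        self.api_key = require_env("OPENAI_API_KEY")
        # Plain text variable with a fallback default.
        self.my_env_var = os.environ.get("MY_ENV_VAR", "default-value")
```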

Notebook example

See the following notebook for an example of how to configure an OpenAI API key for a LangChain Retrieval QA Chain deployed behind a model serving endpoint with secrets-based environment variables.