External locations

Applies to: Databricks SQL

Databricks Runtime

Unity Catalog only

Unity Catalog and the built-in Databricks Hive metastore use default locations for managed tables. Unity Catalog introduces several new securable objects to grant privileges to data in cloud object storage.

-

A Unity Catalog object used to abstract long term credentials from cloud storage providers.

-

A Unity Catalog object used to associate a cloud object storage URI with a storage credential.

-

A Unity Catalog table created in a Unity Catalog-managed external location.

External location

An external location is a securable object that combines a storage path with a storage credential that authorizes access to that path.

An external location's creator is its initial owner. An external location's owner and users with the MANAGE privilege can modify the external location's name, URI, and storage credential.

After an external location is created, you can grant access to it to account-level principals (users and groups).

A user or group with permission to use an external location can access any storage path within the location's path without direct access to the storage credential.

To further refine access control you can use GRANT on external tables to encapsulate access to individual files within an external location.

External location names are unqualified and must be unique within the metastore.

The storage path of any external location cannot be contained within another external location's storage path, or within an external table's storage path using an explicit storage credential.

If a schema (database) is registered in your workspace-level Hive metastore, dropping that schema using the CASCADE option causes all files in that schema location to be deleted recursively, regardless of the table type (managed or external).

If the schema is registered to a Unity Catalog metastore, the files for Unity Catalog managed tables are deleted recursively. However, the files for external tables are not deleted. You must manage those files using the cloud storage provider directly.

Therefore, to avoid accidental data loss, you should never register a schema in a Hive metastore to a location with existing data. Nor should you create new external tables in a location managed by Hive metastore schemas or containing Unity Catalog managed tables.

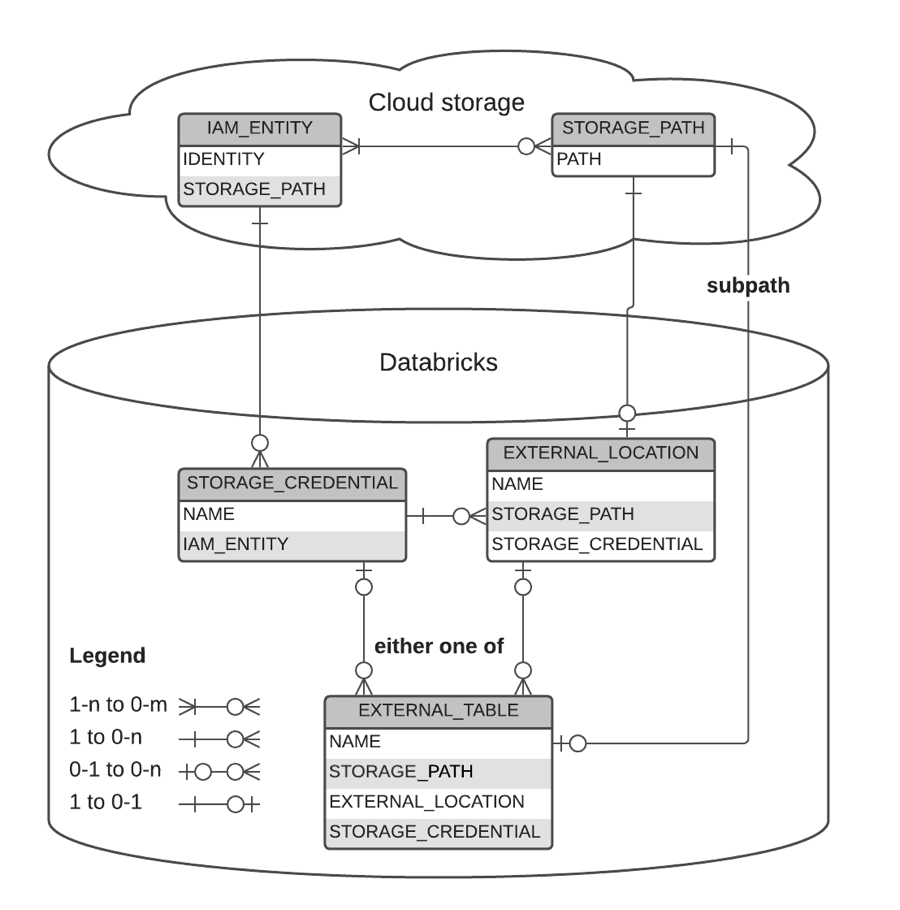

Graphical Representation of relationships

The following diagram describes the relationship between:

- storage credentials

- external locations

- external tables

- storage paths

- IAM entities

- Azure service accounts

Examples

-- Grant `finance` user permission to create external location on `my_aws_storage_cred` storage credential, and then create an external location on the specific path to which `my_aws_storage_cred` has access

> GRANT CREATE EXTERNAL LOCATION ON STORAGE CREDENTIAL `my_aws_storage_cred` TO `finance`

> CREATE EXTERNAL LOCATION finance_loc URL 's3://depts/finance'

WITH (CREDENTIAL my_aws_storage_cred)

COMMENT 'finance';

-- Grant read, write, and create table access to the finance location to `finance` user

> GRANT READ FILES, WRITE FILES, CREATE EXTERNAL TABLE ON EXTERNAL LOCATION `finance_loc` TO `finance`;

-- `finance` can read from any storage path under 's3://depts/finance' but nowhere else

> SELECT count(1) FROM `delta`.`s3://depts/finance/forecast_delta_table`;

100

-- 's3://depts/hr/' is not under external location `finance_loc` so `finance` cannot read it

> SELECT count(1) FROM `delta`.`s3://depts/hr/employees_delta_table`;

Error

-- `finance` can create an external table over specific object within the `finance_loc` location

> CREATE TABLE main.default.sec_filings LOCATION 's3://depts/finance/sec_filings';

-- Cannot list files under an external table with a user that doesn't have SELECT permission on it

> LIST 's3://depts/finance/sec_filings'

Error

> LIST 's3://depts/finance/sec_filings/_delta_log'

Error