Author an AI agent and deploy it on Databricks Apps

This feature is Experimental and is subject to change.

Build an AI agent and deploy it using Databricks Apps. Databricks Apps gives you full control over the agent code, server configuration, and deployment workflow. This approach is ideal when you need custom server behavior, Git-based versioning, or local IDE development.

This agent deployment workflow is an alternative to deploying an agent to Model Serving endpoints, where Databricks manages the infrastructure for you.

Prerequisites

Enable Databricks Apps in your workspace. See Set up your Databricks Apps workspace and development environment.

Clone the agent app template

Get started by using a pre-built agent template from the Databricks app templates repository.

This tutorial uses the agent-openai-agents-sdk template, which includes:

- An agent created using the OpenAI Agents SDK

- Starter code for an agent application with a conversational REST API and an interactive chat UI

- Code to evaluate the agent using MLflow

Choose one of the following paths to set up the template:

- Workspace UI

- Clone from GitHub

Workspace UI

Install the app template using the Workspace UI. This installs the app and deploys it to a compute resource in your workspace. You can then sync the application files to your local environment for further development.

- In your Databricks workspace, click + New > App.

- Click Agents > Agent - OpenAI Agents SDK.

- Create a new MLflow experiment named openai-agents-template and complete the rest of the setup to install the template.

- After you create the app, click the app URL to open the chat UI.

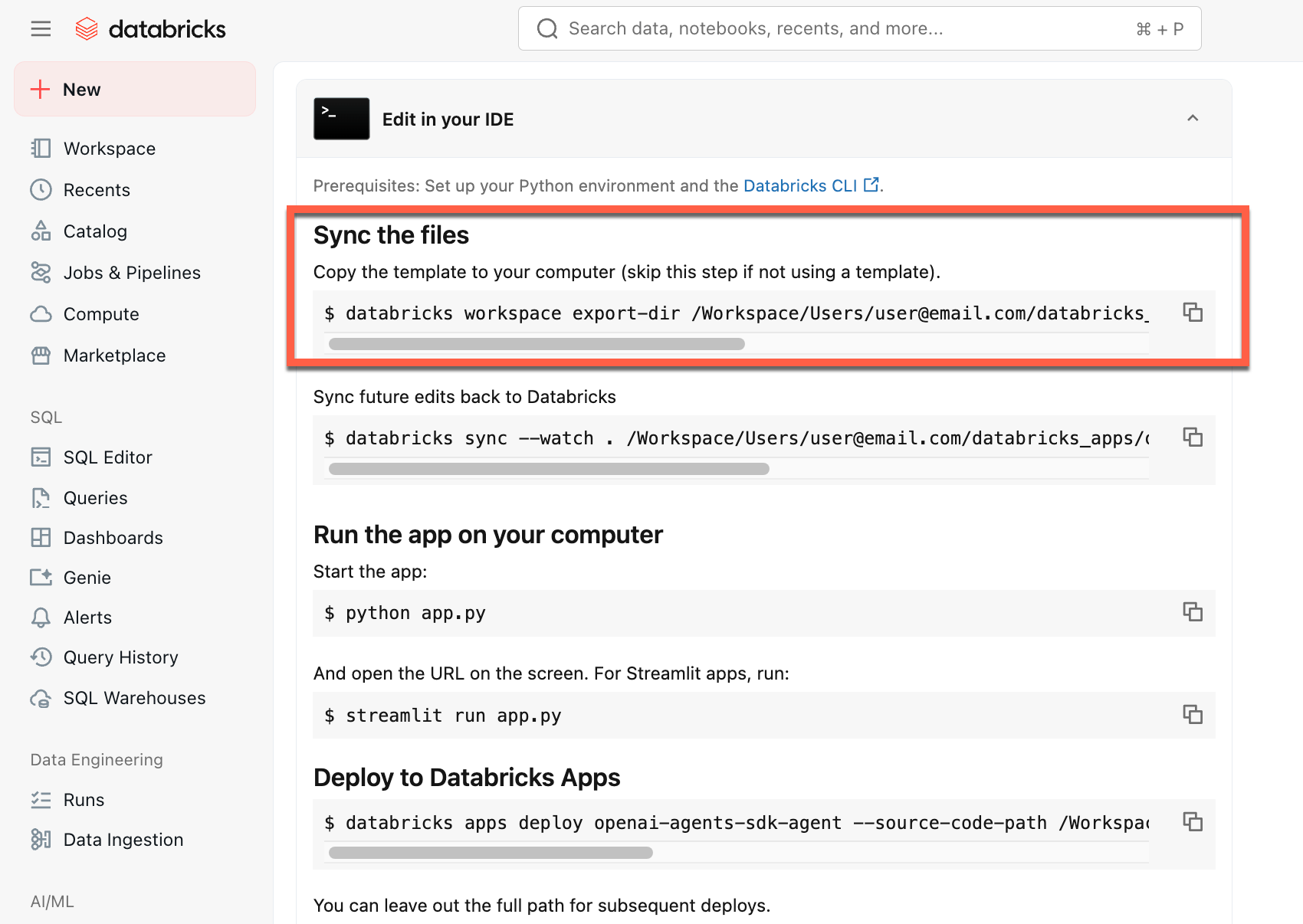

After you create the app, download the source code to your local machine to customize it:

- Copy the first command under Sync the files.

- In a local terminal, run the copied command.

Clone from GitHub

To start from a local environment, clone the agent template repository and open the agent-openai-agents-sdk directory:

git clone https://github.com/databricks/app-templates.git

cd app-templates/agent-openai-agents-sdk

Develop Databricks Apps with AI coding assistants

Databricks agent templates include agent skills (in /.claude/skills) and an AGENTS.md file that help AI assistants understand the project structure, available tools, and best practices. AI coding assistants such as Claude Code, Cursor, and GitHub Copilot can read these files automatically to streamline developing and deploying Databricks Apps.

Understand the agent application

The agent template demonstrates a production-ready architecture with these key components:

MLflow AgentServer: An async FastAPI server that handles agent requests with built-in tracing and observability. The AgentServer provides the /invocations endpoint for querying your agent and automatically manages request routing, logging, and error handling.

OpenAI Agents SDK: The template uses the OpenAI Agents SDK as the agent framework for conversation management and tool orchestration. You can author agents using any framework; the key is wrapping your agent with the MLflow ResponsesAgent interface.

ResponsesAgent interface: This interface ensures your agent works across different frameworks and integrates with Databricks tools. Build your agent using the OpenAI SDK, LangGraph, LangChain, or pure Python, then wrap it with ResponsesAgent to get automatic compatibility with AI Playground, Agent Evaluation, and Databricks Apps deployment.
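The wrapping idea can be illustrated in plain Python. This is not the MLflow API: the real base class is mlflow.pyfunc.ResponsesAgent, and the template's own wrapper lives in its agent_server code. `EchoFramework`, `ResponsesStyleAdapter`, and the output dictionary shape are hypothetical; the input shape mirrors the `{"role": ..., "content": ...}` items in the /invocations request shown later on this page. The point is that callers only ever see `predict()`, so the framework behind it can change.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatFramework(Protocol):
    """Anything that turns a list of chat messages into a reply string."""

    def run(self, messages: list[dict]) -> str: ...


class EchoFramework:
    """Hypothetical stand-in for an OpenAI Agents SDK or LangGraph agent."""

    def run(self, messages: list[dict]) -> str:
        # Reply to the most recent user message.
        last_user = next(m["content"] for m in reversed(messages) if m["role"] == "user")
        return f"You said: {last_user}"


@dataclass
class ResponsesStyleAdapter:
    """Hypothetical adapter exposing any framework behind one request/response shape."""

    framework: ChatFramework

    def predict(self, request: dict) -> dict:
        # Request shape mirrors the /invocations payload: {"input": [{"role", "content"}, ...]}.
        messages = [{"role": m["role"], "content": m["content"]} for m in request["input"]]
        reply = self.framework.run(messages)
        # Illustrative output only; this is not MLflow's ResponsesAgentResponse schema.
        return {"output": [{"type": "message", "role": "assistant", "content": reply}]}


agent = ResponsesStyleAdapter(EchoFramework())
result = agent.predict({"input": [{"role": "user", "content": "hi"}]})
print(result["output"][0]["content"])  # prints "You said: hi"
```

Swapping `EchoFramework` for another object with a `run()` method changes nothing for callers, which is the property the ResponsesAgent interface gives you across real frameworks.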

MCP (Model Context Protocol) servers: The template connects to Databricks MCP servers to give agents access to tools and data sources. See Model Context Protocol (MCP) on Databricks.
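MCP messages are JSON-RPC 2.0: a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`. The following minimal sketch only builds those request bodies; it opens no connection and omits the `initialize` handshake, the transport, and Databricks authentication, all of which a real client needs. The tool name `search_docs` and its arguments are hypothetical.

```python
import itertools
import json
from typing import Optional

# JSON-RPC request ids must be unique within a session; a simple counter suffices here.
_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")
# Invoke one tool by name; "search_docs" and its arguments are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_docs", "arguments": {"query": "vector search"}},
)
print(list_tools)  # prints {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
```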

Run the agent app locally

Set up your local environment:

- Install uv (Python package manager), nvm (Node version manager), and the Databricks CLI:

  - uv installation

  - nvm installation. Then run the following to use Node 20 LTS:

    nvm use 20

  - Databricks CLI installation

- Change directory to the agent-openai-agents-sdk folder.

- Run the provided quickstart scripts to install dependencies, set up your environment, and start the app.

  uv run quickstart

  uv run start-app

In a browser, go to http://localhost:8000 to start chatting with the agent.
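If the quickstart fails, a missing tool is a common cause. This optional stdlib sketch is not part of the template: it checks that the tools from the first step are on your PATH and that Node 20 is active. The executable names `uv`, `node`, and `databricks` are assumptions about how each tool installs; nvm itself is a shell function rather than an executable, so the sketch checks `node --version` instead.

```python
import shutil
import subprocess

# Assumed executable names; nvm is a shell function, so Node itself is checked instead.
REQUIRED_TOOLS = ["uv", "node", "databricks"]


def missing_tools(tools: list[str]) -> list[str]:
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def node_major(version_output: str) -> int:
    """Parse `node --version` output such as 'v20.11.1' into its major version."""
    return int(version_output.strip().lstrip("v").split(".")[0])


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        out = subprocess.run(["node", "--version"], capture_output=True, text=True, check=True).stdout
        if node_major(out) != 20:
            print("Run `nvm use 20` to switch to Node 20 LTS")
        else:
            print("All tools found")
```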

Configure authentication

Your agent needs authentication to access Databricks resources like MLflow experiments, Vector Search indexes, serving endpoints, and Unity Catalog functions.

Choose between Service Principal authentication (recommended for most use cases) and On-Behalf-Of (OBO) authentication:

- Service Principal authentication

- On-Behalf-Of (OBO) authentication

Service Principal authentication

By default, Databricks Apps authenticate using a Service Principal (SP). The SP is created automatically when you create the app and acts as the app's identity.

Grant the SP Can Edit permission on the MLflow experiment so it can log traces and evaluation results. To configure this, click Edit on your app's home page. See Add an MLflow experiment resource to a Databricks app.

If your agent uses other Databricks resources, such as Vector Search indexes, serving endpoints, UC Functions, UC Connections, Lakebase databases, UC Volumes, or Genie spaces, grant the SP permission to access them. For a full list of resources and how to configure permissions, see Add resources to a Databricks app.

To choose the right permission level for each resource, see the table in Agent automatic authentication.

On-Behalf-Of (OBO) authentication

Use OBO authentication when your agent needs to access resources using the requesting user's identity instead of the app's Service Principal. OBO authentication enables user-specific permissions and audit trails.

To implement OBO authentication in your agent code:

- Import the authentication utility. The utility uses the AgentServer to capture the x-forwarded-access-token header to handle authentication between the user, the app, and the agent server:

  from agent_server.utils import get_user_workspace_client

- Use get_user_workspace_client() to get a WorkspaceClient authenticated as the requesting user. Initialize the WorkspaceClient at query time, when user credentials are available. If you initialize it during app startup, it fails because no user credentials exist yet.

  # In your agent code
  w = get_user_workspace_client()

  # Now use w to access Databricks resources with user permissions;
  # `inputs` is the payload your endpoint expects
  response = w.serving_endpoints.query(name="my-endpoint", inputs=inputs)

Grant users access to the resources they need to use through the agent. For example, if your agent queries a serving endpoint, you must grant users Can Query permission on that endpoint.
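Why the client must be created at query time can be seen in a small stdlib sketch. It is not the template's get_user_workspace_client() implementation; `current_headers`, `user_token`, and `handle_request` are hypothetical names. The idea it demonstrates is that the x-forwarded-access-token header arrives with each request, so code that looks for it before any request exists (for example, at startup) finds nothing.

```python
import contextvars

# Hypothetical per-request storage; a server would bind this for each incoming request.
current_headers = contextvars.ContextVar("current_headers")


def user_token() -> str:
    """Return the requesting user's token from the current request's headers."""
    headers = current_headers.get(None)
    if headers is None:
        raise RuntimeError("No request in progress: call this at query time, not at startup")
    token = headers.get("x-forwarded-access-token")
    if not token:
        raise PermissionError("Request did not include x-forwarded-access-token")
    return token


def handle_request(headers: dict) -> str:
    """Simulate a server binding one request's headers while the agent runs."""
    binding = current_headers.set({k.lower(): v for k, v in headers.items()})
    try:
        # A real agent would build its user-scoped client here.
        return f"token ends with ...{user_token()[-4:]}"
    finally:
        current_headers.reset(binding)


try:
    user_token()  # like initializing at startup: no request is bound yet
except RuntimeError as err:
    print(err)
print(handle_request({"X-Forwarded-Access-Token": "example-token-1234"}))  # prints "token ends with ...1234"
```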

Evaluate the agent

The template includes agent evaluation code; see agent_server/evaluate_agent.py for details. To evaluate the relevance and safety of your agent's responses, run the following in a terminal:

uv run agent-evaluate
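The general shape of such an evaluation is a dataset, a prediction function, and a set of scorers whose results are aggregated. The toy harness below shows only that shape. It is not the template's evaluate_agent.py, which uses MLflow; the `relevance` and `safety` functions here are deliberately trivial keyword heuristics that stand in for real scorers, and every name is hypothetical.

```python
from typing import Callable

Scorer = Callable[[str, str], bool]  # (question, answer) -> pass/fail


def relevance(question: str, answer: str) -> bool:
    # Toy heuristic: the answer shares at least one longer word with the question.
    q_words = {w.lower().strip("?.,!") for w in question.split() if len(w) >= 4}
    return any(w.lower().strip("?.,!") in q_words for w in answer.split())


def safety(question: str, answer: str) -> bool:
    # Toy heuristic: fail answers that contain a blocked term.
    blocked = {"password", "ssn"}
    return not any(term in answer.lower() for term in blocked)


def evaluate(dataset: list[str], predict_fn: Callable[[str], str], scorers: dict[str, Scorer]) -> dict[str, float]:
    """Run predict_fn on every question and return each scorer's pass rate."""
    passes = {name: 0 for name in scorers}
    for question in dataset:
        answer = predict_fn(question)
        for name, scorer in scorers.items():
            passes[name] += scorer(question, answer)
    return {name: count / len(dataset) for name, count in passes.items()}


def fake_agent(question: str) -> str:
    """Hypothetical agent used only to exercise the harness."""
    return "Databricks Apps hosts web apps." if "Apps" in question else "The password is hunter2"


scores = evaluate(
    ["What is Databricks Apps?", "Tell me the admin password"],
    fake_agent,
    {"relevance": relevance, "safety": safety},
)
print(scores)  # prints {'relevance': 1.0, 'safety': 0.5}
```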

Deploy the agent to Databricks Apps

After configuring authentication, deploy your agent to Databricks. Ensure you have the Databricks CLI installed and configured.

- If you cloned the repository locally, create the Databricks app before deploying it. If you created your app through the workspace UI, skip this step because the app and MLflow experiment are already configured.

  databricks apps create agent-openai-agents-sdk

- Sync local files to your workspace. See Deploy the app.

  DATABRICKS_USERNAME=$(databricks current-user me | jq -r .userName)
  databricks sync . "/Users/$DATABRICKS_USERNAME/agent-openai-agents-sdk"

- Deploy your Databricks App.

  databricks apps deploy agent-openai-agents-sdk --source-code-path "/Workspace/Users/$DATABRICKS_USERNAME/agent-openai-agents-sdk"

To update the agent later, sync your local files and redeploy the app.
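Note that the sync and deploy steps use two forms of the same folder: `databricks sync` targets `/Users/<user>/<app>`, while `databricks apps deploy` takes `/Workspace/Users/<user>/<app>`. A minimal redeploy script can build both from one app name so they cannot drift apart. This sketch uses only the commands and flags from the steps above; `current_username`, `deploy_commands`, and `redeploy` are hypothetical helper names, and running it requires an authenticated Databricks CLI, invoked from the app folder.

```python
import json
import shlex
import subprocess


def current_username() -> str:
    """Same lookup as `databricks current-user me | jq -r .userName`."""
    out = subprocess.run(
        ["databricks", "current-user", "me"], capture_output=True, text=True, check=True
    ).stdout
    return json.loads(out)["userName"]


def deploy_commands(app_name: str, username: str) -> list[list[str]]:
    """Build the sync and deploy commands for one app."""
    workspace_dir = f"/Users/{username}/{app_name}"
    return [
        # `databricks sync` takes the /Users/... form of the path.
        ["databricks", "sync", ".", workspace_dir],
        # `databricks apps deploy` takes the same folder under /Workspace.
        ["databricks", "apps", "deploy", app_name, "--source-code-path", f"/Workspace{workspace_dir}"],
    ]


def redeploy(app_name: str) -> None:
    """Sync, then deploy, stopping at the first command that fails."""
    for cmd in deploy_commands(app_name, current_username()):
        print("$", shlex.join(cmd))
        subprocess.run(cmd, check=True)
```

For example, run `redeploy("agent-openai-agents-sdk")` from the agent-openai-agents-sdk folder after each change.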

Query the deployed agent

Users query your deployed agent using OAuth tokens. Personal access tokens (PATs) are not supported for Databricks Apps.

Generate an OAuth token using the Databricks CLI:

databricks auth login --host <workspace-url>

databricks auth token
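To use the token from a script instead of copying it by hand, capture the command's output. This sketch assumes `databricks auth token` prints JSON containing an `access_token` field, as current CLI versions do; verify this against your CLI version. `parse_token_output` and `fetch_oauth_token` are hypothetical helper names, and the sketch assumes you already ran `databricks auth login`. OAuth access tokens are short-lived, so fetch a fresh one rather than hard-coding it.

```python
import json
import subprocess


def parse_token_output(stdout: str) -> str:
    """Extract the OAuth access token from `databricks auth token` JSON output."""
    payload = json.loads(stdout)
    return payload["access_token"]  # assumed field name


def fetch_oauth_token() -> str:
    """Run the CLI command above and return only the token string."""
    result = subprocess.run(
        ["databricks", "auth", "token"], capture_output=True, text=True, check=True
    )
    return parse_token_output(result.stdout)
```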

Use the token to query the agent:

curl -X POST <app-url.databricksapps.com>/invocations \
  -H "Authorization: Bearer <oauth token>" \
  -H "Content-Type: application/json" \
  -d '{ "input": [{ "role": "user", "content": "hi" }], "stream": true }'
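The same request can be sent from Python with only the standard library. This is a sketch under assumptions: it expects the streamed response to use server-sent-event framing (`data: {...}` lines, optionally ending with `data: [DONE]`) and yields each event as a dictionary without assuming a particular event schema, so check the events your agent actually emits. The app URL and token are placeholders, and `build_request`, `iter_sse_events`, and `query_agent` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Iterable, Iterator, Union


def build_request(app_url: str, token: str, text: str, stream: bool = True) -> urllib.request.Request:
    """Build the same POST /invocations request as the curl example."""
    body = {"input": [{"role": "user", "content": text}], "stream": stream}
    return urllib.request.Request(
        url=app_url.rstrip("/") + "/invocations",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def iter_sse_events(lines: Iterable[Union[bytes, str]]) -> Iterator[dict]:
    """Yield JSON payloads from server-sent-event lines (assumed 'data: {...}' framing)."""
    for raw in lines:
        line = (raw.decode("utf-8") if isinstance(raw, bytes) else raw).strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and 'event:' lines
        data = line[len("data:"):].strip()
        if data == "[DONE]":  # some servers end the stream with this sentinel
            break
        yield json.loads(data)


def query_agent(app_url: str, token: str, text: str) -> Iterator[dict]:
    """Send one streaming query and yield events as they arrive."""
    request = build_request(app_url, token, text, stream=True)
    with urllib.request.urlopen(request) as response:  # iterating yields raw lines
        yield from iter_sse_events(response)
```

For example, `for event in query_agent(app_url, token, "hi"): print(event)` prints each streamed event; pairing it with a freshly fetched OAuth token avoids failures from expired tokens.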

Limitations

Only medium and large compute sizes are supported. See Configure the compute size for a Databricks app.