Agent system design patterns

GenAI agents combine the intelligence of GenAI models with tools for data retrieval, external actions, and other capabilities. This page walks through agent design:

- A concrete example of an agent system illustrates how model calls and tool calls flow together.

- Design patterns for agent systems form a continuum of complexity and autonomy, from deterministic chains, through single-agent systems that can make dynamic decisions, up to multi-agent architectures that coordinate multiple specialized agents.

- A practical advice section covers choosing the right design, developing agents, testing, and moving to production.

Agents rely heavily on tools for gathering information and taking external actions. For more background on tools, see Tools.

Example agent system

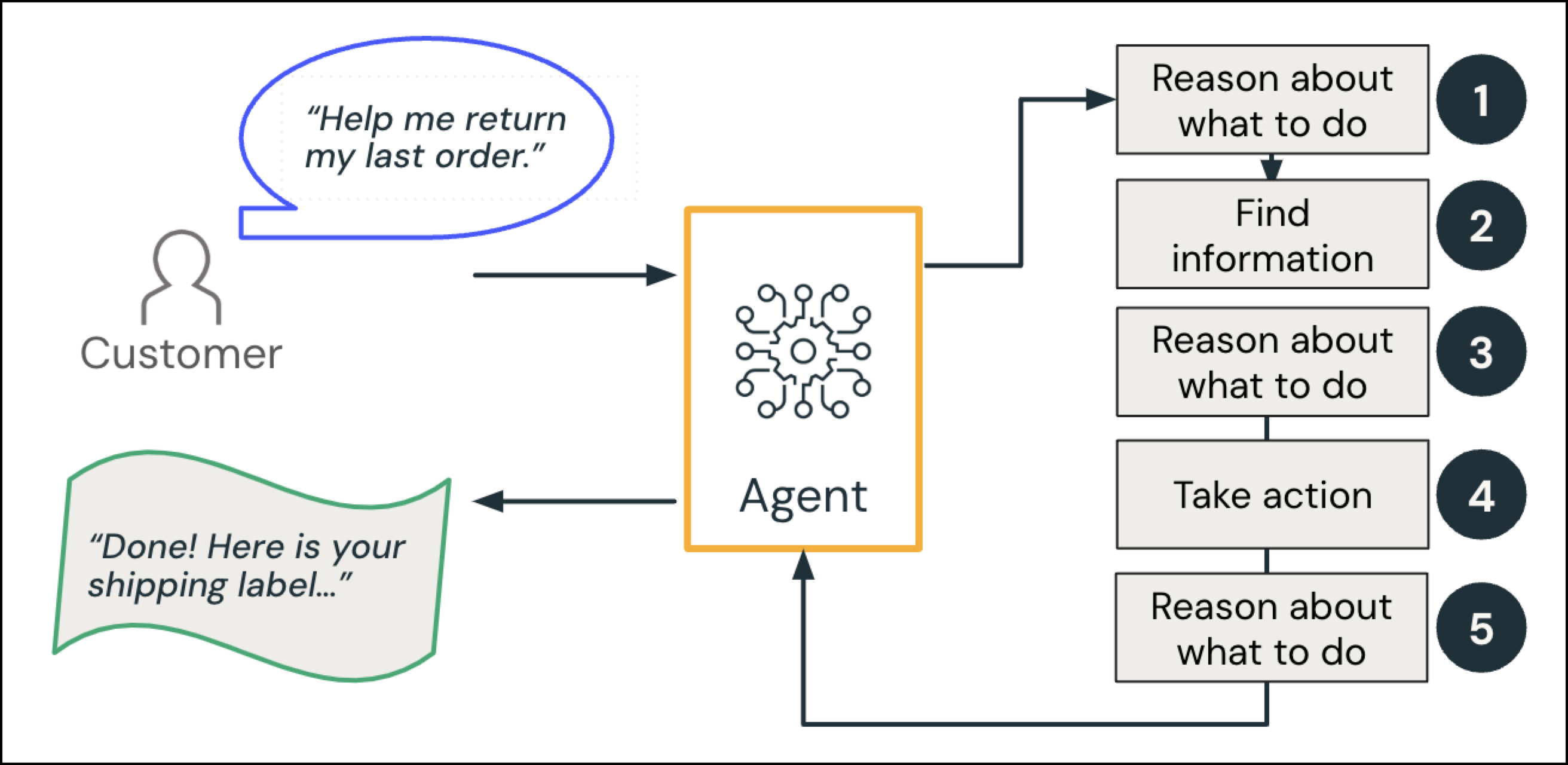

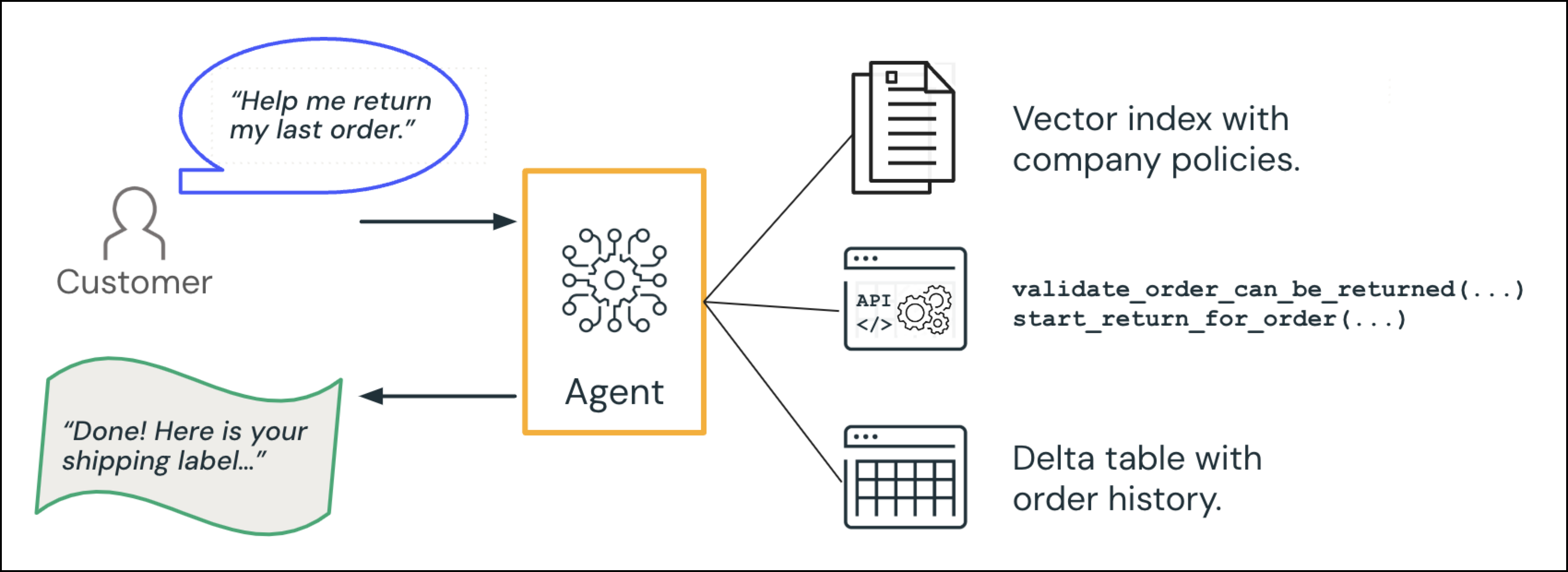

For a concrete example of an agent system, consider a call center GenAI agent interacting with a customer:

The customer makes a request: “Can you help me return my last order?”

- Reason and plan: Given the intent of the query, the agent “plans”: “Look up the user's recent order and check our return policy.”

- Find information (data intelligence): The agent queries the order database to retrieve the relevant order and references a policy document.

- Reason: The agent checks whether that order fits in the return window.

- Optional human-in-the-loop: The agent checks an additional rule and escalates to a human if the item falls into a certain category or is outside the normal return window.

- Action: The agent triggers the return process and generates a shipping label.

- Reason: The agent generates a response to the customer.

The AI agent responds to the customer: “Done! Here is your shipping label…”

These steps are second nature in a human call center context. In an agent system context, the LLM "reasons" while the system calls on specialized tools or data sources to fill in the details.
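To make the flow concrete, the following is a minimal sketch of the return request above. It is an assumption-heavy simplification: plain Python rules stand in for the LLM's reasoning, and the order data, the 30-day window, the escalation category, and every function name (lookup_recent_order, handle_return_request) are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Order:
    # Hypothetical order record; field names are illustrative assumptions.
    order_id: str
    category: str
    delivered_on: date


# Stand-ins for the order database and the return policy document.
ORDERS = {"cust-1": [Order("A100", "apparel", date(2024, 5, 1))]}
RETURN_WINDOW_DAYS = 30
ESCALATION_CATEGORIES = {"electronics"}


def lookup_recent_order(customer_id: str) -> Order:
    # Find information: the customer's most recent order.
    return max(ORDERS[customer_id], key=lambda o: o.delivered_on)


def handle_return_request(customer_id: str, today: date) -> str:
    # Plan: look up the recent order, then check it against the return policy.
    order = lookup_recent_order(customer_id)
    # Reason: does the order fit in the return window?
    in_window = today - order.delivered_on <= timedelta(days=RETURN_WINDOW_DAYS)
    # Human-in-the-loop: escalate special categories or out-of-window returns.
    if order.category in ESCALATION_CATEGORIES or not in_window:
        return f"Escalated order {order.order_id} to a human agent."
    # Action: trigger the return and generate a shipping label (stubbed).
    label = f"LABEL-{order.order_id}"
    # Respond to the customer.
    return f"Done! Here is your shipping label: {label}"


print(handle_return_request("cust-1", date(2024, 5, 10)))
# prints "Done! Here is your shipping label: LABEL-A100"
```

In a real agent system, the LLM would decide to call lookup_recent_order and interpret the policy text; the hard-coded rules here only show where each step sits.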

Levels of complexity: From LLMs to agent systems

GenAI apps can be powered by a range of systems, from simple LLM calls to complex multi-agent systems. When building any AI-powered application, start simple. Introduce more complex agentic behaviors only when you truly need them for better flexibility or model-driven decisions. Deterministic chains offer predictable, rule-based flows for well-defined tasks. More agentic approaches offer greater flexibility, but they add complexity and can increase latency.

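| Design pattern | When to use | Pros | Cons |
|---|---|---|---|
| LLM and prompt | Simple or generic queries | Simplest design; behavior customizable with a system prompt | Often disconnected from your real-world business data |
| Deterministic chain | Well-defined tasks with predictable workflows; consistency and auditing are top priorities | Highest predictability and auditability; typically lower latency; easier to test | Limited flexibility; harder to maintain as logic branches grow |
| Single-agent system | Varied queries within a cohesive domain; some queries warrant tool usage | Adapts to new or unexpected queries; can loop to refine results | Must guard against repeated or invalid tool calls; can become unwieldy across very different sub-domains |
| Multi-agent system | Distinct domains or skill sets; tool sets too large for one agent; reflection or collaboration among agents | Modular; handles large, complex workflows; multi-perspective reasoning | Requires routing between agents; more tracing and debugging overhead; agents can bounce tasks without resolution |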

Agent Framework is agnostic to these patterns, making it easy to start simple and evolve toward higher levels of automation and autonomy as your application requirements grow.

To read more about the theory behind agent systems, see blog posts from the Databricks founders:

- AI agent systems: Modular Engineering for Reliable Enterprise AI Applications

- The Shift from Models to Compound AI Systems

LLM and prompt

The simplest design has a standalone LLM or other GenAI model that responds to prompts based on knowledge from a vast training dataset. This design is good for simple or generic queries but is often disconnected from your real-world business data. You can customize behavior by providing a system prompt with your custom instructions or embedded data.

Deterministic chain (hard-coded steps)

Deterministic chains augment GenAI models with tool calling, but the developer defines which tools or models are called, in what order, and with which parameters. The LLM does not make decisions about which tools to call or in what order. The system follows a predefined workflow or "chain" for all requests, making it highly predictable.

For example, a deterministic Retrieval Augmented Generation (RAG) chain might always:

- Retrieve top-k results from a vector index to find context relevant to a user request.

- Augment a prompt by combining the user request with the retrieved context.

- Generate a response by sending the augmented prompt to an LLM.
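A minimal sketch of such a chain follows, assuming a toy in-memory "vector index" built from word counts and a stub in place of the LLM call. The documents, the bag-of-words embed function, and the generate stub are hypothetical; a real chain would use an embedding model, a vector search index, and a model endpoint. The point is that retrieve, augment, and generate always run in the same order.

```python
import math
from collections import Counter

# Toy document store standing in for a vector index.
DOCS = [
    "Returns are accepted within 30 days of delivery.",
    "Shipping is free for orders over 50 dollars.",
    "Gift cards cannot be returned or exchanged.",
]


def embed(text: str) -> Counter:
    # Hypothetical embedding: lowercase word counts instead of a real embedding model.
    return Counter(w.strip(".,?!").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    # Step 1: top-k documents by cosine similarity to the query.
    q = embed(query)
    return sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def augment(query: str, context: list[str]) -> str:
    # Step 2: combine the retrieved context with the user request.
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    # Step 3: stub for the LLM call; swap in a real model endpoint.
    return f"[LLM answer based on]\n{prompt}"


def rag_chain(query: str, k: int = 1) -> str:
    # The same fixed steps run for every request; the LLM never chooses tools.
    return generate(augment(query, retrieve(query, k)))


print(rag_chain("How many days do I have for returns?"))
```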

When to use:

- For well-defined tasks with predictable workflows.

- When consistency and auditing are top priorities.

- When you want to minimize latency by avoiding multiple LLM calls for orchestration decisions.

Advantages:

- Highest predictability and auditability.

- Typically lower latency (fewer LLM calls for orchestration).

- Easier to test and validate.

Considerations:

- Limited flexibility for handling diverse or unexpected requests.

- Can become complex and difficult to maintain as logic branches grow.

- Can require significant refactoring to accommodate new capabilities.

Single-agent system

A single-agent system has an LLM that orchestrates one coordinated flow of logic. The LLM adaptively decides which tools to use, when to make more LLM calls, and when to stop. This approach supports dynamic, context-aware decisions.

A single-agent system can:

- Accept requests such as user queries and any relevant context such as conversation history.

- Reason about how best to respond, optionally deciding whether to call tools for external data or actions.

- Iterate if needed, calling an LLM or tools repeatedly until an objective is achieved or a certain condition is met, such as receiving valid data or resolving an error.

- Integrate tool outputs into the conversation.

- Return a cohesive response as output.

For example, a help desk assistant agent might adapt as follows:

- If the user asks a simple question ("What is our returns policy?"), the agent might respond directly from the LLM's knowledge.

- If the user wants their order status, the agent might call a function lookup_order(customer_id, order_id). If that tool responds with "invalid order number," the agent can retry or prompt the user for the correct ID, continuing until it can provide a final answer.
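The loop described above (decide, call a tool, feed the result back, stop on a final answer or an iteration limit) can be sketched as follows. This is a hypothetical illustration: scripted_llm is a keyword-based stand-in for a real LLM's tool-calling decisions, and the message format, ORDER_STATUS data, and run_agent helper are assumptions, not a specific framework's API.

```python
from typing import Callable

# Hypothetical order data; a real agent would query a database.
ORDER_STATUS = {("c1", "1001"): "shipped"}


def lookup_order(customer_id: str, order_id: str) -> str:
    return ORDER_STATUS.get((customer_id, order_id), "invalid order number")


TOOLS: dict[str, Callable[..., str]] = {"lookup_order": lookup_order}


def scripted_llm(messages: list[dict]) -> dict:
    """Stand-in for an LLM: returns either a tool call or a final answer."""
    last = messages[-1]
    if last["role"] == "tool":
        # Integrate the tool output into the response.
        if last["content"] == "invalid order number":
            return {"final": "I couldn't find that order. Could you double-check the order ID?"}
        return {"final": f"Your order status is: {last['content']}."}
    text = last["content"].lower()
    if "order" in text and "status" in text:
        order_id = text.split()[-1].strip("?#")
        return {"tool": "lookup_order", "args": {"customer_id": "c1", "order_id": order_id}}
    # Simple question: answer directly without tools.
    return {"final": "Answering directly from model knowledge."}


def run_agent(user_message: str, llm=scripted_llm, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):  # iteration limit guards against infinite loops
        decision = llm(messages)
        if "final" in decision:
            return decision["final"]
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "tool", "content": result})
    return "Stopped: reached the iteration limit without a final answer."


print(run_agent("What is the status of order 1001"))
```

The max_steps cap is the simplest form of the iteration limit recommended in the considerations for this pattern.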

When to use:

- You expect varied user queries but still within a cohesive domain or product area.

- Certain queries or conditions can warrant tool usage such as deciding when to fetch customer data.

- You want more flexibility than a deterministic chain but don't require separate specialized agents for different tasks.

Advantages:

- The agent can adapt to new or unexpected queries by choosing which (if any) tools to call.

- The agent can loop through repeated LLM calls or tool invocations to refine results without needing a fully multi-agent setup.

- This design pattern is often the sweet spot for enterprise use cases: it is simpler to debug than multi-agent setups while still allowing dynamic logic and limited autonomy.

Considerations:

- Compared to a hard-coded chain, you must guard against repeated or invalid tool calls. Infinite loops can occur in any tool-calling scenario, so set iteration limits or timeouts.

- If your application spans radically different sub-domains (finance, devops, marketing, etc.), a single agent can become unwieldy or overloaded with functionality requirements.

- You still need carefully designed prompts and constraints to keep the agent focused and relevant.

- Agency is a continuum; the more freedom you provide models to control the behavior of the system, the more agentic the application becomes. In practice, most production systems carefully constrain the agent's autonomy to ensure compliance and predictability, for example by requiring human approval for risky actions.
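One way to apply these constraints is to route every tool call through a guard. The sketch below is a hypothetical wrapper, not a library API: it blocks unknown tools, stops an agent that repeats an identical call too often, and requires approval (for example, from a human reviewer, modeled here as any callable) before running tools marked as risky.

```python
import json
from typing import Callable


class ToolGuard:
    """Hypothetical wrapper that enforces simple safety rules on tool calls."""

    def __init__(self, tools: dict[str, Callable[..., str]], risky: set[str],
                 approve: Callable[[str, dict], bool], max_repeats: int = 2):
        self.tools = tools
        self.risky = risky
        self.approve = approve  # e.g. a human approval step
        self.max_repeats = max_repeats
        self.seen: dict[str, int] = {}

    def call(self, name: str, args: dict) -> str:
        if name not in self.tools:
            return f"error: unknown tool {name!r}"
        # Count identical (tool, arguments) pairs to catch repeated calls.
        key = name + json.dumps(args, sort_keys=True)
        self.seen[key] = self.seen.get(key, 0) + 1
        if self.seen[key] > self.max_repeats:
            return "error: repeated identical tool call blocked"
        # Risky actions run only after explicit approval.
        if name in self.risky and not self.approve(name, args):
            return "error: action rejected by human reviewer"
        return self.tools[name](**args)
```

Returning error strings instead of raising lets the agent see why a call failed and choose a different action on its next step.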

Multi-agent system

A multi-agent system involves two or more specialized agents that exchange messages or collaborate on tasks. Each agent has its own domain or task expertise, context, and potentially distinct tool sets. A separate "coordinator" or "AI supervisor" directs requests to the appropriate agent, or decides when to hand off from one agent to another. The supervisor can be another LLM or a rule-based router.

For example, a customer assistant might have a supervisor that delegates to specialized agents:

- Shopping assistant: Helps customers search for products and provides advice on pros and cons from reviews

- Customer support agent: Handles feedback, returns, and shipping
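Because the supervisor can be a rule-based router, a minimal sketch of this example might look like the following. The keyword sets and the two placeholder agents are hypothetical; an LLM-based supervisor would replace the route function with a model call that picks the agent.

```python
from typing import Callable


def shopping_agent(request: str) -> str:
    # Placeholder for an agent with product-search and review tools.
    return f"[shopping] {request}"


def support_agent(request: str) -> str:
    # Placeholder for an agent with returns and shipping tools.
    return f"[support] {request}"


# Hypothetical keyword rules, checked in order.
ROUTES: list[tuple[set[str], Callable[[str], str]]] = [
    ({"return", "refund", "shipping", "feedback"}, support_agent),
    ({"find", "recommend", "compare", "review"}, shopping_agent),
]


def route(request: str) -> Callable[[str], str]:
    words = {w.strip(".,?!").lower() for w in request.split()}
    for keywords, agent in ROUTES:
        if words & keywords:
            return agent
    return support_agent  # default agent when no rule matches


def supervisor(request: str) -> str:
    return route(request)(request)


print(supervisor("Can you recommend a tent?"))  # prints "[shopping] Can you recommend a tent?"
```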

When to use:

- You have distinct problem areas or skill sets such as a coding agent or a finance agent.

- Each agent needs its own conversation history or domain-specific prompts.

- You have so many tools that fitting them all into one agent's schema is impractical; each agent can own a subset.

- You want to implement reflection, critique, or back-and-forth collaboration among specialized agents.

Advantages:

- This modular approach means each agent can be developed or maintained by separate teams, specializing in a narrow domain.

- Can handle large, complex enterprise workflows that a single agent might struggle to manage cohesively.

- Facilitates advanced multi-step or multi-perspective reasoning, for instance, one agent generating an answer and another verifying it.

Considerations:

- Requires a strategy for routing between agents, plus overhead for logging, tracing, and debugging across multiple endpoints.

- If you have many sub-agents and tools, it can get complicated to decide which agent has access to which data or APIs.

- Agents can bounce tasks indefinitely among themselves without resolution if not carefully constrained. Infinite loop risks also exist in single-agent tool-calling, but multi-agent setups add another layer of debugging complexity.
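A simple constraint against agents bouncing a task back and forth is a hard cap on handoffs. In this hypothetical sketch, each agent is a callable that returns an answer plus the name of the next agent (None when it is done); the orchestrator stops once the handoff limit is reached.

```python
from typing import Callable, Optional

# Hypothetical agent signature: task -> (answer, next_agent_name or None).
Agent = Callable[[str], tuple[Optional[str], Optional[str]]]


def run_with_handoffs(agents: dict[str, Agent], start: str, task: str,
                      max_handoffs: int = 3) -> str:
    current = start
    handoffs = 0
    while True:
        answer, next_agent = agents[current](task)
        if next_agent is None:
            return answer or ""
        if handoffs == max_handoffs:
            # Stop instead of letting agents pass the task around forever.
            return f"Stopped: handoff limit of {max_handoffs} reached without resolution."
        handoffs += 1
        current = next_agent
```

Logging each handoff alongside this cap makes the resulting traces much easier to debug.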

Practical advice

If you need to build a custom agent system, Databricks and the Mosaic AI Agent Framework are agnostic to the pattern you choose, making it easy to evolve your design as your application grows. Consider the following best practices for developing stable, maintainable agent systems:

- Start simple: If you only need a straightforward chain, a deterministic chain is fast to build.

- Gradually add complexity: As you need more dynamic queries or flexible data sources, move to a single-agent system with tool calling. If you have clearly distinct domains or tasks, multiple conversation contexts, or a large tool set, then consider a multi-agent system.

- Combine patterns: In practice, many real-world agent systems combine patterns. For instance, a mostly deterministic chain can have one step in which the LLM can dynamically call certain APIs if needed.
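The combined pattern can be sketched as a fixed chain with a single dynamic step. Here should_call_api is a hypothetical stand-in for the LLM's decision about whether an external API is needed, and retrieve_context, generate, and the API callable are stubs.

```python
from typing import Callable


def retrieve_context(query: str) -> str:
    # Fixed step: always runs (stands in for vector search).
    return f"[context for: {query}]"


def generate(prompt: str) -> str:
    # Fixed step: stub for the final LLM call.
    return f"LLM response to:\n{prompt}"


def hybrid_chain(query: str,
                 should_call_api: Callable[[str], bool],
                 api: Callable[[str], str]) -> str:
    parts = [retrieve_context(query)]
    # The single dynamic step: a model decides whether the external API is needed.
    if should_call_api(query):
        parts.append(api(query))
    parts.append(f"Question: {query}")
    return generate("\n".join(parts))
```

Everything except the one decision remains predictable and easy to test, which keeps most of the auditability of a deterministic chain.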

Development guidance

- Prompts and tools

  - Keep prompts clear and minimal to avoid contradictory instructions and distracting information, and to reduce hallucinations.

  - Provide only the tools and context your agent requires, rather than an unbounded set of APIs or large irrelevant context. Choose your tool approach during design.

- Logging and observability

  - Implement detailed logging for each user request, agent plan, and tool call using MLflow Tracing.

  - Store logs securely and be mindful of personally identifiable information (PII) in conversation data. Consider Data Classification for automation.
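As an illustration of masking PII before anything is written to logs, the sketch below uses Python's standard logging module rather than MLflow Tracing, so it shows only the redaction idea. The two regex patterns are hypothetical and deliberately simple: they will miss some PII and may flag some non-PII, so they are not a substitute for a proper classification tool.

```python
import logging
import re

# Simple illustrative patterns; real PII detection needs more than regexes.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

logger = logging.getLogger("agent")


def redact(text: str) -> str:
    # Replace emails first, then phone-number-like digit runs.
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def log_step(step: str, payload: str) -> str:
    """Log one agent step (request, plan, or tool call) with PII masked."""
    safe = redact(payload)
    logger.info("%s: %s", step, safe)
    return safe
```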

Testing and iteration guidance

- Evaluation

  - Use MLflow Evaluation and production monitoring to define evaluation metrics for development and production.

  - Gather human feedback from experts and users to ensure your automated evaluation metrics are well-calibrated.

- Error handling and fallback logic

  - Plan for tool or LLM failures. Timeouts, malformed responses, or empty results can break a workflow. Include retry strategies, fallback logic, or a simpler fallback chain when advanced features fail.

- Iterative improvements

  - Expect to refine prompts and agent logic over time. Version changes using the MLflow Prompt Registry for your prompts and MLflow app version tracking for your apps. Versioning will simplify operations and allow rollbacks and comparisons.

  - As you collect evaluation data and define metrics, consider more automated optimization methods such as MLflow Prompt Optimization.
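The error-handling advice above can be sketched as a small retry-then-fallback helper. The name call_with_fallback and its parameters are hypothetical: primary might be an agent or LLM call, fallback a simpler deterministic chain, and is_valid a check for empty or malformed output. Exceptions, including timeouts raised by a client library, are treated the same as invalid results.

```python
import time
from typing import Callable, Optional


def call_with_fallback(primary: Callable[[], Optional[str]],
                       fallback: Callable[[], str],
                       retries: int = 2,
                       backoff_s: float = 0.0,
                       is_valid: Callable[[Optional[str]], bool] = lambda r: bool(r and r.strip())) -> str:
    """Try `primary` up to `retries + 1` times, then use `fallback`."""
    for attempt in range(retries + 1):
        try:
            result = primary()
            if is_valid(result):
                return result
        except Exception:
            pass  # treat errors (e.g. timeouts) like empty or malformed results
        if attempt < retries:
            time.sleep(backoff_s * (2 ** attempt))  # exponential backoff between attempts
    return fallback()
```

In production you would typically catch narrower exception types and log each failed attempt so that fallbacks show up in your monitoring.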

Production guidance

- Model updates and version pinning

  - LLM behaviors can shift when providers update models behind the scenes. Use version pinning and frequent regression tests to ensure your agent logic remains robust and stable.

- Latency and cost optimization

  - Each additional LLM or tool call increases token usage and response time. Where possible, combine steps or cache repeated queries to keep performance and cost manageable.

- Security and sandboxing

  - If your agent can update records or run code, sandbox those actions or enforce human approval where necessary. This is critical in enterprise or regulated environments to avoid unintended harm. Unity Catalog functions provide sandboxed execution for production.

  - See AI agent tools for more guidance on tool options.
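For the caching advice, a minimal in-process sketch uses functools.lru_cache with light query normalization. The cached_answer stub and the CALLS counter are hypothetical stand-ins for an expensive LLM or tool call. This kind of cache is only appropriate for answers that do not depend on the user or on fast-changing data, and it is not shared across processes.

```python
from functools import lru_cache

# Counts real (uncached) calls so the effect of caching is visible.
CALLS = {"llm": 0}


@lru_cache(maxsize=1024)
def cached_answer(normalized_query: str) -> str:
    CALLS["llm"] += 1
    return f"answer to {normalized_query}"  # stub for an expensive LLM or tool call


def answer(query: str) -> str:
    # Normalize case and whitespace so trivially different queries share a cache entry.
    return cached_answer(" ".join(query.lower().split()))
```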

By following these guidelines, you can mitigate many of the most common failure modes such as tool mis-calls, drifting LLM performance, or unexpected cost spikes, and build more reliable, scalable agent systems.