Auto Loader with file events overview

Learn about the cloudFiles.useManagedFileEvents option with Auto Loader, which provides efficient file discovery.

How does Auto Loader with file events work?

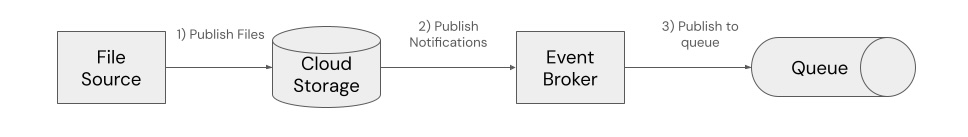

Auto Loader with file events uses the file event notification functionality provided by cloud vendors. You can configure cloud storage containers to publish notifications upon file events such as new file creation and modification. For example, with Amazon S3 event notifications, a new file arrival can trigger a notification to an Amazon SNS topic. An Amazon SQS queue can then be subscribed to the SNS topic for asynchronous processing of the event.

The following diagram depicts this pattern:

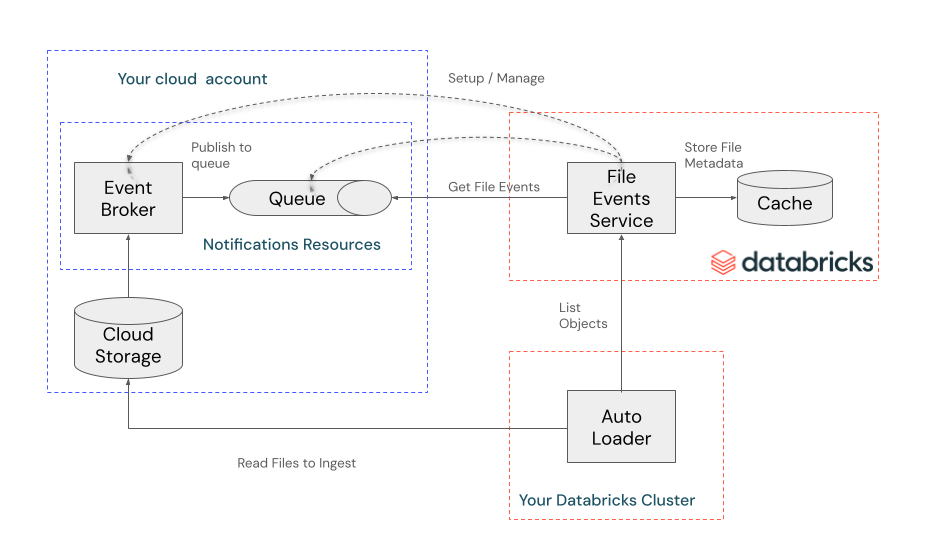

Databricks file events is a service that sets up cloud resources to listen for file events. Alternatively, you can set up the cloud resources yourself and provide your own storage queue.

After the cloud resources are configured, the service listens to file events and caches file metadata information. Auto Loader uses this cache to discover files when it is run with cloudFiles.useManagedFileEvents set to true.

The following diagram depicts these interactions:

When a stream runs for the first time with cloudFiles.useManagedFileEvents set to true, Auto Loader does a full directory listing of the load path to discover all files and get current with the file events cache (secure a valid read position in the cache and store it in the stream's checkpoint). Subsequent runs of Auto Loader discover new files by reading directly from the file events cache using the stored read position and do not require directory listing.
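As a minimal sketch of a stream that turns this option on, the following assumes a JSON source and an existing `spark` session on Databricks. The function names, paths, and table name are hypothetical placeholders, not part of the product.

```python
def file_events_options(file_format: str = "json") -> dict:
    """Build Auto Loader reader options that enable file events discovery."""
    return {
        "cloudFiles.format": file_format,
        # Discover new files from the file events cache on subsequent runs.
        "cloudFiles.useManagedFileEvents": "true",
    }


def start_file_events_stream(spark, load_path: str, checkpoint_path: str, target_table: str):
    """Start an Auto Loader stream using file events.

    `spark` is an existing SparkSession on Databricks. The paths and the
    table name are hypothetical values supplied by the caller.
    """
    reader = (
        spark.readStream.format("cloudFiles")
        .options(**file_events_options())
        # Assumption: the inferred schema is tracked next to the checkpoint.
        .option("cloudFiles.schemaLocation", checkpoint_path)
        .load(load_path)
    )
    return (
        reader.writeStream
        # The checkpoint is where the read position in the cache is stored.
        .option("checkpointLocation", checkpoint_path)
        .trigger(availableNow=True)
        .toTable(target_table)
    )
```

The first call to `start_file_events_stream` for a given checkpoint would trigger the full directory listing described above. Later calls with the same checkpoint read from the file events cache.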

We recommend running your Auto Loader streams at least once every 7 days to take advantage of incremental file discovery from the cache. If you don’t run Auto Loader at least this often, the stored read position becomes invalid and Auto Loader must perform a full directory listing to get current with the file events cache.

When does Auto Loader with file events use directory listing?

Auto Loader performs a full directory listing when:

- Starting a new stream

- Migrating a stream from directory listing or classic file notifications

- A stream's load path is changed

- Auto Loader with file events is not run for a duration of more than 7 days

- Updates are made to the external location that invalidate Auto Loader's read position. Examples include when file events are turned off and on again, when the external location's path is changed, or when a different queue is provided for the external location.

Auto Loader always performs a full listing on the first run, even when includeExistingFiles is set to false. Setting this flag to false restricts ingestion to files that were created after the stream's start time. Auto Loader still lists the entire directory to discover those files, secure a read position in the file events cache, and store it in the checkpoint. Subsequent runs read directly from the file events cache and do not require a directory listing.
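For reference, the option combination discussed here could be expressed as the following reader options. This is a sketch that assumes a JSON source, and the function name is hypothetical.

```python
def new_files_only_options(file_format: str = "json") -> dict:
    """Reader options for a file events stream that skips pre-existing files."""
    return {
        "cloudFiles.format": file_format,
        "cloudFiles.useManagedFileEvents": "true",
        # Ingest only files created after the stream's start time.
        # The first run still performs a full directory listing.
        "cloudFiles.includeExistingFiles": "false",
    }
```

The dictionary can be passed to a reader with `spark.readStream.format("cloudFiles").options(**new_files_only_options())`.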

The Databricks file events service also performs full directory listings periodically on the external location to confirm that it hasn't missed any files (for example, if the provided queue is misconfigured). The first full directory listing begins as soon as file events are enabled on the external location. Subsequent listings occur periodically as long as there is at least one Auto Loader stream using file events to ingest data.

Best practices for Auto Loader with file events

Follow these best practices to optimize performance and reliability when using Auto Loader with file events.

Use volumes for optimal file discovery

For enhanced performance, Databricks recommends creating an external volume for each path or subdirectory that Auto Loader loads data from and supplying volume paths (for example, /Volumes/someCatalog/someSchema/someVolume) to Auto Loader instead of cloud paths (for example, s3://bucket/path/to/volume). This optimizes file discovery because Auto Loader is able to list the volume using an optimized data access pattern.
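The following sketch shows one way to build a volume path of the form used above instead of hard-coding a cloud URI. The helper name and the `landing/2024` subdirectory are hypothetical; the catalog, schema, and volume names are the placeholders from the example path.

```python
from pathlib import PurePosixPath


def volume_path(catalog: str, schema: str, volume: str, subdir: str = "") -> str:
    """Return a /Volumes/<catalog>/<schema>/<volume>[/<subdir>] path."""
    base = PurePosixPath("/Volumes") / catalog / schema / volume
    # Strip slashes so an absolute-looking subdir cannot replace the base path.
    cleaned = subdir.strip("/")
    return str(base / cleaned) if cleaned else str(base)


load_path = volume_path("someCatalog", "someSchema", "someVolume", "landing/2024")
print(load_path)
```

The resulting string can be passed to `.load(...)` on an Auto Loader reader in place of a path such as `s3://bucket/path/to/volume`.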

Consider file arrival triggers for event-driven pipelines

For event-driven data processing, consider using a file arrival trigger instead of a continuous pipeline. File arrival triggers automatically start your pipeline when new files arrive, providing better resource utilization and cost efficiency because your cluster only runs when there are new files to process.

Configure appropriate intervals with continuous triggers

We recommend using file arrival triggers to process files as soon as they arrive. However, if your use case requires using continuous triggers like Trigger.ProcessingTime, we recommend configuring the trigger intervals to 1 minute or higher (set using pipelines.trigger.interval when using Lakeflow Spark Declarative Pipelines). This lowers the polling frequency to check if new files have arrived and allows a higher number of streams to run concurrently from your workspace.
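As a sketch of the interval recommendation, the helper below builds keyword arguments for PySpark's `DataStreamWriter.trigger` and rejects intervals under 1 minute. The helper name and the validation are illustrative assumptions, not a Databricks-enforced rule.

```python
# Recommended lower bound from the paragraph above: 1 minute.
MIN_INTERVAL_SECONDS = 60


def processing_time_trigger(interval_seconds: int) -> dict:
    """Return trigger kwargs for a processing-time trigger of at least 1 minute."""
    if interval_seconds < MIN_INTERVAL_SECONDS:
        raise ValueError(
            f"Trigger interval {interval_seconds}s is below the recommended "
            f"minimum of {MIN_INTERVAL_SECONDS}s"
        )
    return {"processingTime": f"{interval_seconds} seconds"}
```

For example, `df.writeStream.trigger(**processing_time_trigger(120))` configures a 2-minute interval on an existing streaming DataFrame `df`.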

Limitations of Auto Loader with file events

- Path rewrites are not supported. Path rewrites are used when multiple buckets or containers are mounted under DBFS, which is a deprecated usage pattern.

For a general list of file events limitations, see File events limitations.

FAQs

Find answers to frequently asked questions about Auto Loader with file events.

Why is file events mode better than classic file notifications mode?

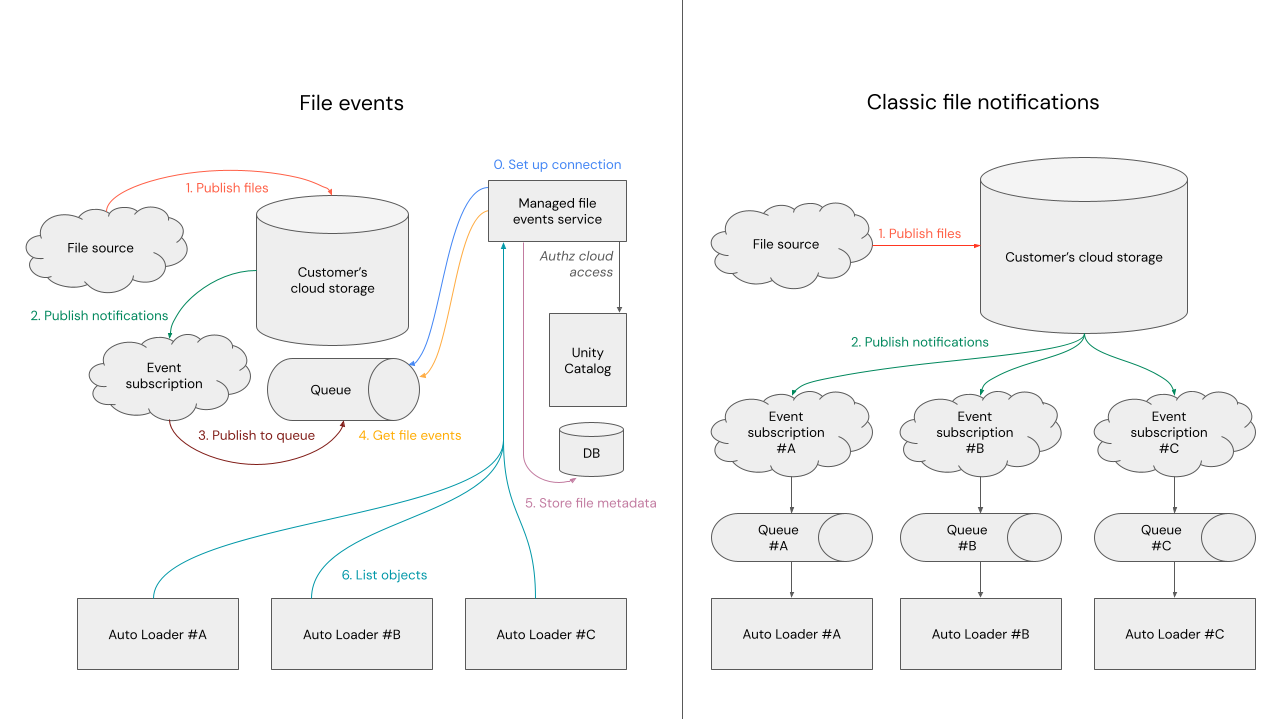

The following diagram compares file events mode and classic file notification mode.

File events mode has several advantages over classic file notification mode. Primarily, it requires only one queue for all Auto Loader streams on a bucket, helping you avoid the per-bucket notifications limit. For more information, see File notification mode with and without file events enabled on external locations.

How do I confirm that file events are set up correctly?

Click the Test Connection button on the external location page. If file events are set up correctly, you'll see a green check mark for the file events read item. If you just created the external location and enabled file events in Automatic mode, the test shows Skipped while Databricks sets up notifications for the external location. Wait a few minutes, then click Test Connection again. If Databricks doesn't have the required permissions to set up or read from file events, you’ll see an error for the file events read item.

Can I avoid a full directory listing during the initial run?

No. Even if includeExistingFiles is set to false, Auto Loader performs a directory listing to discover files created after the stream start and get current with the file events cache (secure a valid read position in the cache and store it in the stream's checkpoint).

Should I set cloudFiles.backfillInterval to avoid missing files?

No. This setting was recommended for the classic file notification mode because cloud storage notification systems could result in missed or late-arriving files. Now, Databricks performs full directory listings periodically on the external location. The first full directory listing begins as soon as file events are enabled on the external location. Subsequent listings occur periodically as long as there is at least one Auto Loader stream using file events to ingest data.

I set up file events with a provided storage queue, but the queue was misconfigured and I missed files. How do I ensure that Auto Loader ingests the files missed when my queue was misconfigured?

First, confirm that the provided queue misconfiguration is fixed. To check, click the Test Connection button on the external location page. If file events are set up correctly, you'll see a green check mark for the file events read item.

Databricks performs a full directory listing for external locations with file events enabled. This directory listing discovers any files that were missed during the misconfiguration period and stores them in the file events cache.

After the misconfiguration is fixed and Databricks completes the directory listing, Auto Loader will continue to read from the file events cache and automatically ingest any files missed during the misconfiguration period.

How does Databricks get the permissions to create cloud resources and read and delete messages from the queue?

Databricks uses the permissions granted in the storage credential associated with the external location on which file events are enabled.