Troubleshoot MySQL ingestion issues

The MySQL connector is in Public Preview. Contact your Databricks account team to request access.

This page contains references to the term slave, a term that Databricks doesn't use. When the term is removed from the third-party software, we'll remove it from this page.

General pipeline troubleshooting

The troubleshooting steps in this section apply to all managed ingestion pipelines in Lakeflow Connect.

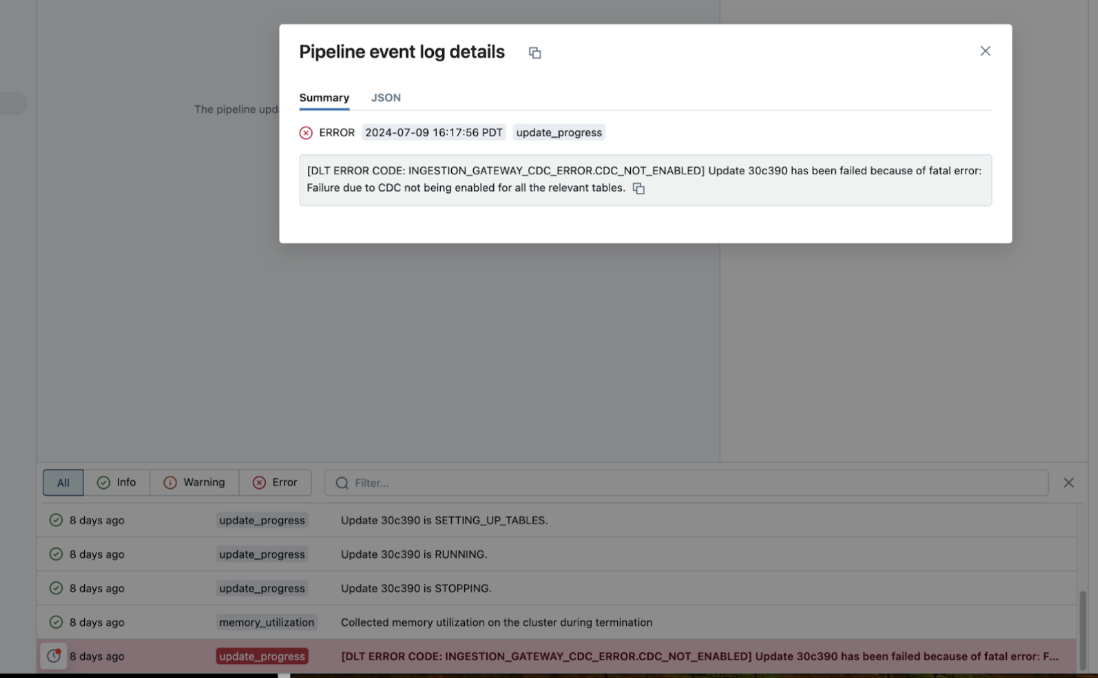

If a pipeline fails while running, click the failed step and check whether the error message explains the cause of the failure.

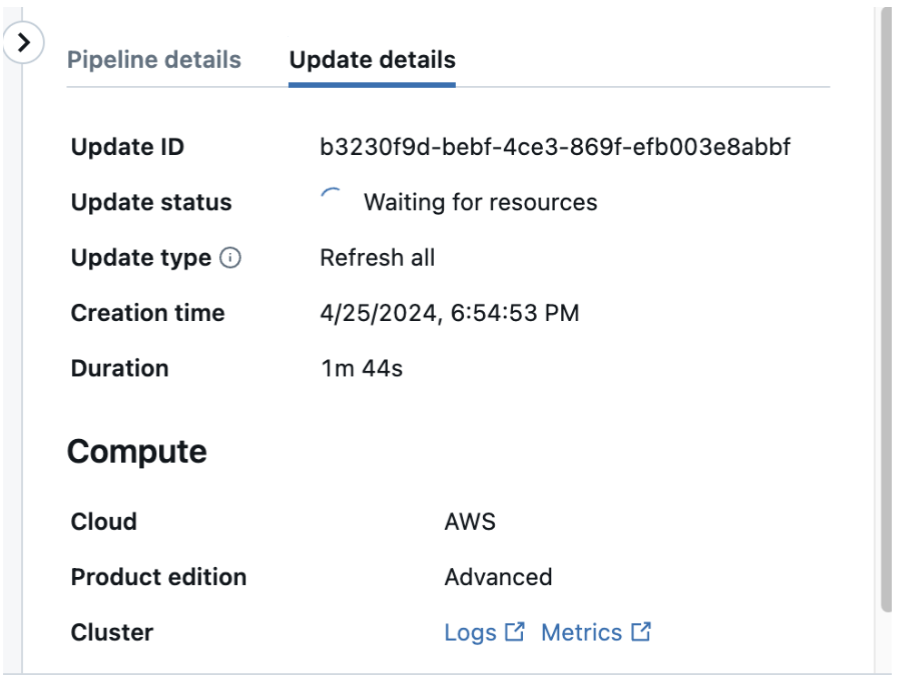

You can also check and download the cluster logs from the pipeline details page by clicking Update details in the right-hand panel, then Logs. Scan the logs for errors or exceptions.
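If you prefer to query pipeline events directly, Databricks SQL provides an `event_log` table-valued function. The following is a minimal sketch, assuming your managed ingestion pipeline's event log is reachable this way and that you run it on compute that supports the function. `<pipeline-id>` is a placeholder for the ID shown on the pipeline details page.

```sql
-- Sketch: list the most recent error and warning events for one pipeline.
-- <pipeline-id> is a placeholder; replace it with your pipeline's ID.
SELECT timestamp, level, event_type, message
FROM event_log('<pipeline-id>')
WHERE level IN ('ERROR', 'WARN')
ORDER BY timestamp DESC
LIMIT 50;
```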

Connector-specific troubleshooting

The troubleshooting steps in this section are specific to the MySQL connector.

Connection issues

Test Connection button fails

Problem: The Test Connection button fails in the UI, even though credentials are correct.

Cause: This is a known limitation for MySQL users with sha256_password or caching_sha2_password authentication plugins.

Solution: You can safely ignore this error and proceed with creating the connection. To confirm that your credentials work, connect with the MySQL command-line client (or another MySQL client tool):

mysql -h your-mysql-host -u lakeflow_connect_user -p

If you can connect with the MySQL client, the connection will work for ingestion despite the Test Connection failure.
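To confirm whether this limitation applies to your account, you can check which authentication plugin it uses. A minimal sketch, run as an administrative user, because reading `mysql.user` requires elevated privileges:

```sql
-- Show the authentication plugin for each host entry of the ingestion user.
-- sha256_password or caching_sha2_password matches the known
-- Test Connection limitation described above.
SELECT User, Host, plugin
FROM mysql.user
WHERE User = 'lakeflow_connect_user';
```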

Can't connect to MySQL server

Problem: Connection fails with errors like "Can't connect to MySQL server" or "Connection refused".

Possible causes and solutions:

- Network connectivity:

  - Verify that firewall rules allow traffic from Databricks IP ranges on the MySQL port (default 3306).

  - Check security groups (AWS), NSGs (Azure), or firewall rules (GCP).

  - Verify that VPC peering or other network connectivity is properly configured.

- MySQL not listening on the expected interface:

  - Check the MySQL bind-address setting.

  - Ensure that MySQL is listening on 0.0.0.0 or on the specific IP address you're connecting to.

- Wrong host or port:

  - Verify that the hostname or IP address is correct.

  - Confirm that the port (default 3306) is correct.
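To inspect the listener settings, run the following from a session on the MySQL host itself (or from any client that can already connect). This is a sketch for self-managed servers; on RDS, Azure, and Cloud SQL these values are usually controlled by the provider.

```sql
-- bind_address: interface MySQL listens on (0.0.0.0 = all IPv4, * = all interfaces)
-- port: TCP port MySQL listens on (default 3306)
-- skip_networking: if ON, MySQL accepts no TCP/IP connections at all
SHOW GLOBAL VARIABLES
WHERE Variable_name IN ('bind_address', 'port', 'skip_networking');
```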

Access denied for user

Problem: Connection fails with "Access denied for user 'lakeflow_connect_user'@'host'".

Possible causes and solutions:

- Wrong password: Verify that the password is correct.

- User not created or wrong host:

  -- Check whether the user exists
  SELECT User, Host FROM mysql.user WHERE User = 'lakeflow_connect_user';

  -- The user might need to be created for a specific host or for '%' (any host).
  -- Replace 'password' with a strong password.
  CREATE USER 'lakeflow_connect_user'@'%' IDENTIFIED BY 'password';

- Missing privileges:

  -- Grant the required privileges
  GRANT REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'lakeflow_connect_user'@'%';
  GRANT SELECT ON your_database.* TO 'lakeflow_connect_user'@'%';
  FLUSH PRIVILEGES;
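After creating the user or granting privileges, you can confirm which account MySQL matched and what that account is allowed to do. A minimal sketch, run while connected as `lakeflow_connect_user`:

```sql
-- USER() is the user name and client host you connected as.
-- CURRENT_USER() is the account entry (user@host pattern) MySQL authenticated you against.
-- An unexpected host pattern in CURRENT_USER() means a different account entry matched.
SELECT USER(), CURRENT_USER();

-- Grants held by the authenticated account. Expect entries similar to
-- REPLICATION SLAVE, REPLICATION CLIENT ON *.* and SELECT ON your_database.*.
SHOW GRANTS;
```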

Binary log issues

Binary logging not enabled

Problem: The pipeline fails with an error indicating that binary logging is not enabled.

Solution: Enable binary logging on your MySQL server:

- RDS/Aurora: Configure the parameter group and restart.

- Azure MySQL: Configure server parameters and restart.

- GCP Cloud SQL: Configure database flags and restart.

- EC2: Edit my.cnf and add log-bin=mysql-bin, then restart MySQL.

Verify with:

SHOW VARIABLES LIKE 'log_bin';

-- Should return ON

Wrong binlog format

Problem: The pipeline fails because the binlog format is not ROW.

Solution: Set binlog format to ROW:

- RDS/Aurora: Set binlog_format=ROW in the parameter group.

- Azure MySQL: Set binlog_format=ROW in server parameters.

- GCP Cloud SQL: Set binlog_format=ROW in database flags.

- EC2: Add binlog_format=ROW to my.cnf.

Restart MySQL and verify:

SHOW VARIABLES LIKE 'binlog_format';

-- Should return ROW

Binlog purged before processing

Problem: The pipeline fails with an error about missing binlog files.

Cause: Binary logs were purged before the ingestion gateway could process them.

Solution:

- Increase binlog retention:

  -- RDS/Aurora:
  -- Minimum: one day (24 hours), recommended: seven days (168 hours)
  CALL mysql.rds_set_configuration('binlog retention hours', 168);

  -- EC2 (MySQL 8.0):
  -- Minimum: one day (86400 seconds), recommended: seven days (604800 seconds)
  SET GLOBAL binlog_expire_logs_seconds = 604800;

  -- EC2 (MySQL 5.7):
  -- Minimum: one day, recommended: seven days
  SET GLOBAL expire_logs_days = 7;

- Perform a full refresh of the affected tables.

- Ensure that the ingestion gateway runs continuously.

Prevention: Configure adequate binlog retention (minimum one day, seven days recommended). Setting a lower value might cause binlogs to be cleaned up before the ingestion gateway processes them.
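To confirm that a new retention setting took effect, you can read it back. This is a sketch; run only the statements that match your deployment. `SET GLOBAL` changes are lost when MySQL restarts unless you also add the setting to my.cnf. On MySQL 8.0, `SET PERSIST` stores the value so that it survives a restart; on MySQL 5.7, edit my.cnf instead.

```sql
-- RDS/Aurora: output includes the 'binlog retention hours' setting
CALL mysql.rds_show_configuration;

-- EC2 (MySQL 8.0): expect 604800 for seven days (7 x 86400 seconds)
SELECT @@GLOBAL.binlog_expire_logs_seconds;

-- EC2 (MySQL 8.0): set the value and persist it across restarts
SET PERSIST binlog_expire_logs_seconds = 604800;

-- EC2 (MySQL 5.7): expect 7
SELECT @@GLOBAL.expire_logs_days;
```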

Ingestion gateway issues

Gateway not starting

Problem: The ingestion gateway fails to start or fails immediately after starting.

Possible causes and solutions:

- Connection issues: Verify that the connection works (see Connection issues).

- Insufficient compute resources:

  - Check cluster creation permissions and policies.

- Staging volume issues:

  - Verify that you have CREATE VOLUME privileges on the staging schema.

  - Check that the staging catalog isn't a foreign catalog.
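To check staging volume permissions, you can inspect grants on the staging schema in Databricks SQL. A sketch: `staging_catalog.staging_schema` and `user@example.com` are hypothetical names, and creating a volume typically also requires `USE CATALOG` and `USE SCHEMA` on the parent objects.

```sql
-- List the privileges the user holds on the staging schema (hypothetical names)
SHOW GRANTS `user@example.com` ON SCHEMA staging_catalog.staging_schema;

-- Grant the privileges needed to create the staging volume
-- (run as the schema owner or an admin)
GRANT USE CATALOG ON CATALOG staging_catalog TO `user@example.com`;
GRANT USE SCHEMA, CREATE VOLUME ON SCHEMA staging_catalog.staging_schema TO `user@example.com`;
```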

Gateway running but not capturing changes

Problem: The gateway is running, but new changes aren't being captured.

Possible causes and solutions:

- Binlog retention too short:

  - Increase binlog retention.

  - Monitor binlog files to ensure they're not purged too quickly.

- Network interruptions:

  - Check for network connectivity issues.

  - Review gateway logs for connection errors.
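To monitor binlog files on the source, you can list the files MySQL still retains and the file it is currently writing. A sketch; both statements need the REPLICATION CLIENT privilege granted earlier. Running them periodically shows how quickly old files disappear relative to your retention setting.

```sql
-- Binlog files MySQL still retains, oldest first, with their sizes
SHOW BINARY LOGS;

-- Current binlog file and write position
-- (MySQL 8.2 and later: use SHOW BINARY LOG STATUS instead)
SHOW MASTER STATUS;
```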

Ingestion pipeline issues

Tables not being ingested

Problem: Some tables are not being ingested even though they're selected.

Possible causes and solutions:

- Spatial data types:

  - Tables with spatial columns can't be ingested.

  - Exclude these tables from ingestion.

- Missing privileges:

  - Verify that the replication user has SELECT on all source tables:

    SHOW GRANTS FOR 'lakeflow_connect_user'@'%';
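To find the affected tables before you exclude them, you can query `information_schema.COLUMNS` for spatial data types. A sketch; `your_database` is the same placeholder used earlier on this page. Both `geometrycollection` and `geomcollection` are listed because the reported type name can differ between MySQL versions.

```sql
-- List columns with spatial data types in the source database
SELECT TABLE_NAME, COLUMN_NAME, DATA_TYPE
FROM information_schema.COLUMNS
WHERE TABLE_SCHEMA = 'your_database'
  AND DATA_TYPE IN ('geometry', 'point', 'linestring', 'polygon',
                    'multipoint', 'multilinestring', 'multipolygon',
                    'geometrycollection', 'geomcollection')
ORDER BY TABLE_NAME, COLUMN_NAME;
```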

Checking logs

To diagnose issues, check the following logs:

- Gateway logs:

  - Navigate to the gateway pipeline in the Databricks UI.

  - Click the latest run.

  - Review the cluster logs and DLT event logs.

- Pipeline logs:

  - Navigate to the ingestion pipeline.

  - Click the latest run.

  - Review the DLT event logs and any error messages.

- MySQL logs:

  - Check the MySQL error log for connection or replication issues.

  - Review the binlog files if position errors occur.
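On a self-managed server, the following sketch locates the error log and shows events in a binlog file. On RDS, Azure, and Cloud SQL, the error log is typically viewed through the provider's console instead. The file name `mysql-bin.000001` is hypothetical; use a real name from `SHOW BINARY LOGS`.

```sql
-- Path of the MySQL error log file
SHOW GLOBAL VARIABLES LIKE 'log_error';

-- First events in a specific binlog file (hypothetical file name).
-- Add FROM <position> before LIMIT to start at a given position.
SHOW BINLOG EVENTS IN 'mysql-bin.000001' LIMIT 20;
```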

Get help

If you continue to experience issues:

- Collect relevant information:

  - Error messages from pipeline logs.

  - Gateway cluster logs.

  - MySQL version and deployment type.

  - Source configuration (binlog settings, retention).

- Contact Databricks support with the collected information.

- During Public Preview, you can also contact your Databricks account team.
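When collecting source details for support, a sketch like the following captures the MySQL version and binlog settings in one pass. Variables that don't exist on your server version (for example, `binlog_expire_logs_seconds` on MySQL 5.7) are simply omitted from the output. On RDS/Aurora, `CALL mysql.rds_show_configuration;` additionally reports the binlog retention hours.

```sql
-- MySQL server version
SELECT VERSION();

-- Binlog settings relevant to ingestion
SHOW GLOBAL VARIABLES
WHERE Variable_name IN ('log_bin', 'binlog_format',
                        'binlog_expire_logs_seconds', 'expire_logs_days');
```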