Data Classification

This feature is in Public Preview.

This page describes how to use Databricks Data Classification in Unity Catalog to automatically classify and tag sensitive data in your catalog.

Data catalogs can hold vast amounts of data, often including sensitive data that teams may or may not know about. Data teams need to understand what kinds of sensitive data each table contains so that they can both govern and democratize access to it.

To address this problem, Databricks Data Classification uses an AI agent to automatically classify and tag tables in your catalog. This allows you to discover sensitive data and to apply governance controls over the results, using tools such as Unity Catalog attribute-based access control (ABAC). For a list of supported tags, see Supported classification tags.

Using this feature, you can:

- Classify data: The engine uses an agentic AI system to automatically classify and tag any tables in Unity Catalog.

- Optimize cost through intelligent scanning: The system uses Unity Catalog and the Data Intelligence Engine to determine when to scan your data. Scanning is incremental, so new data is classified without manual configuration.

- Review and protect sensitive data: The results page helps you view classification results and protect sensitive data by applying tags and creating access control policies for each class.

Databricks Data Classification uses default storage to store classification results. You are not billed for the storage.

Databricks Data Classification uses a large language model (LLM) to assist with classification.

Requirements

Data classification is a workspace-level preview feature that only a workspace admin or account admin can manage. For instructions, see Manage Databricks previews.

The model powering this feature is made available through Mosaic AI Model Serving Foundation Model APIs. Llama 3.1 is licensed under the Llama 3.1 Community License, Copyright © Meta Platforms, Inc. All Rights Reserved. See Applicable model developer licenses and terms for more information.

If models emerge in the future that perform better according to Databricks's internal benchmarks, Databricks may change the models and update the documentation.

- You must have serverless compute enabled. See Connect to serverless compute.

- To enable data classification, you must own the catalog or have the USE CATALOG and MANAGE privileges on it.

- To view the results table, you must have the following permissions: USE CATALOG and USE SCHEMA, plus SELECT on the table. See The results system table.

Use data classification

To use data classification on a catalog:

-

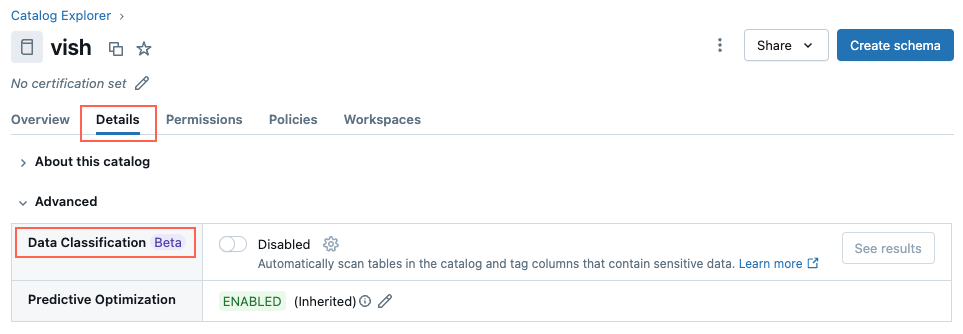

Navigate to the catalog and click the Details tab.

-

Click the Data Classification toggle to enable it.

-

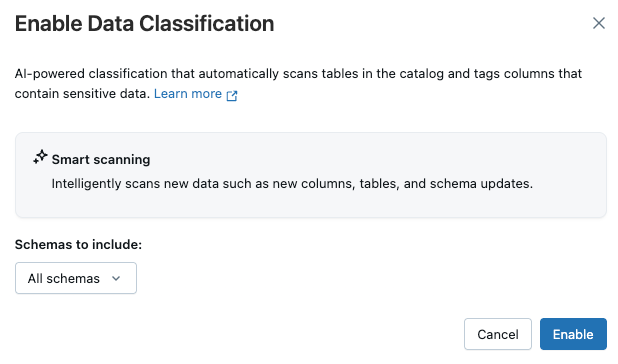

The Enable Data Classification dialog appears. By default, all schemas are included. To include only some schemas, select them in the Schemas to include dropdown menu.

-

Click Enable.

This creates a background job that incrementally scans all tables in the catalog or selected schemas.

The classification engine relies on intelligent scanning to determine when to scan a table. New tables and columns in a catalog are typically scanned within 24 hours of being created.

View classification results

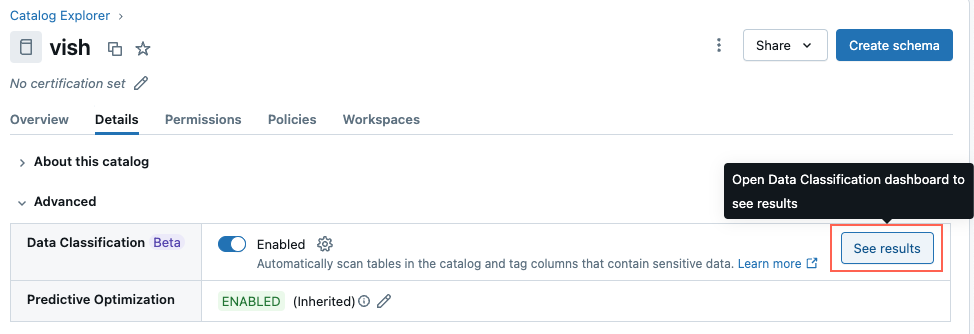

To view classification results, click See results next to the toggle.

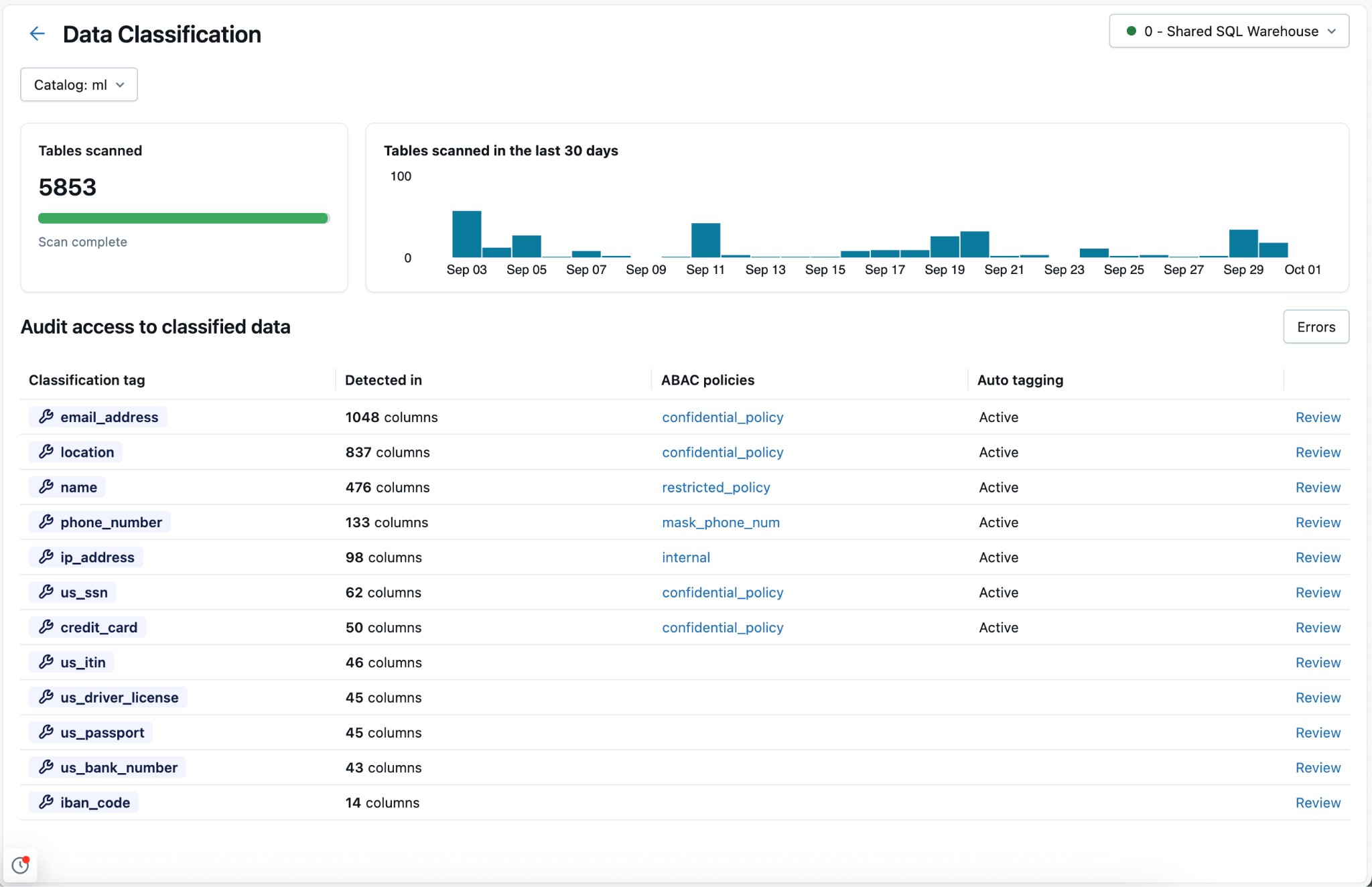

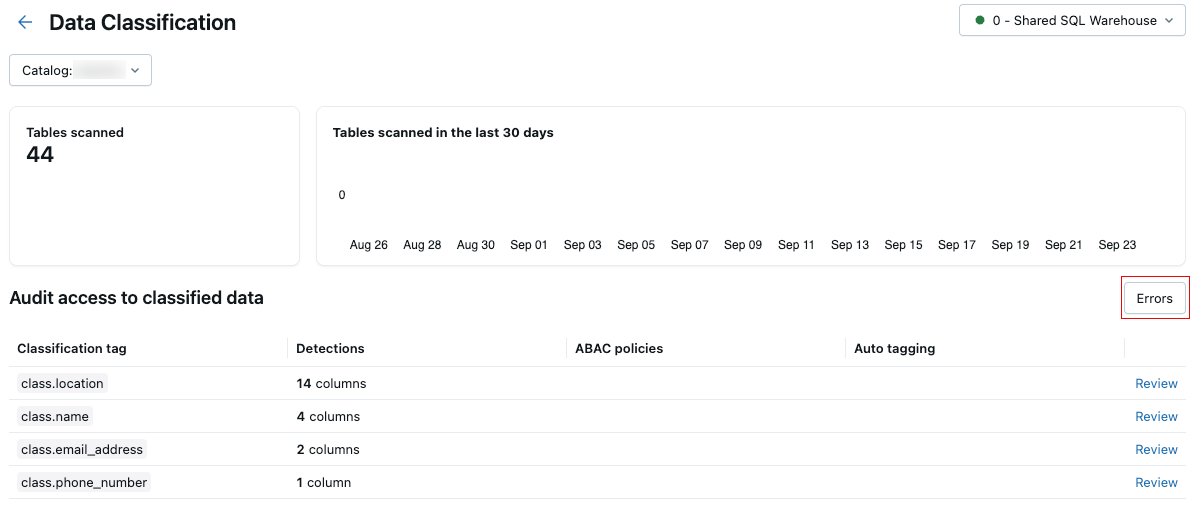

A results page opens, showing the classification results for all tables in the catalog. To select a different catalog, use the selector at the upper left of the page. A serverless SQL warehouse is required; the selected warehouse appears at the upper right of the page.

The results page lists any classification tags that were identified in the catalog. Any existing ABAC policies that reference data classification system tags (class.xx) appear in the table.

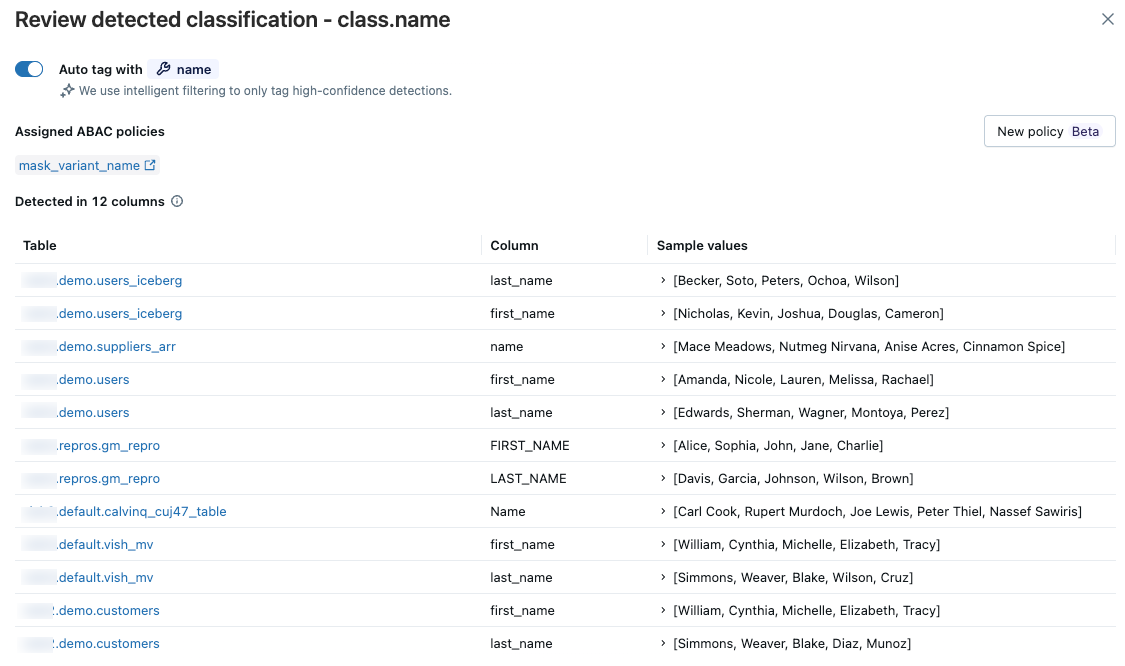

To review the results for a specific classification tag, click Review in the rightmost column for the corresponding row.

A panel appears, displaying the tables for which data classification has detected the classification tag with high confidence. Review the tables, columns, and sample values. Sample values only appear if you have access to the results table. See The results system table.

If the identified columns match your expectations, you can enable automatic tagging for the classification tag for this catalog. When automatic tagging is enabled, all existing and future detections of this classification are tagged.

To enable automatic tagging, turn on the Auto tag with ... toggle. You can later use the same toggle to disable automatic tagging. When you disable it, no future tags are applied, but existing tags are not removed.

When you enable automatic tagging, existing detections are not tagged immediately. They are tagged during the next scan, which typically runs within 24 hours. After that, new detections are tagged as soon as they are classified.

The results system table

Data classification stores its results in a system table named system.data_classification.results. By default, only account admins can access this table, and an account admin can grant access to other users. The table is only accessible from serverless compute. For details about this table, see Data classification system table reference.

The results table system.data_classification.results contains all classification results across the entire metastore and includes sample values from tables in each catalog. You should only share this table with users who are privileged to see metastore-wide classification results, including sample values.

The following permissions are required to view the results table: USE CATALOG and USE SCHEMA, plus SELECT on the table. Users with MANAGE or SELECT access to a catalog can see its results on the results page, but cannot see sample values.
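If you prefer to grant these privileges with SQL, they map to standard Unity Catalog GRANT statements. The following Python sketch only builds the statement text for one principal and does not execute anything; the function name results_table_grants and the group name data-governance-team are hypothetical. Review the generated statements and run them yourself, for example in the SQL editor, as an account admin.

```python
from typing import List


def results_table_grants(principal: str) -> List[str]:
    """Build the GRANT statements that give `principal` read access to
    system.data_classification.results (USE CATALOG, USE SCHEMA, SELECT)."""
    # Backtick-quote the principal and double any embedded backticks.
    quoted = "`" + principal.replace("`", "``") + "`"
    return [
        f"GRANT USE CATALOG ON CATALOG system TO {quoted}",
        f"GRANT USE SCHEMA ON SCHEMA system.data_classification TO {quoted}",
        f"GRANT SELECT ON TABLE system.data_classification.results TO {quoted}",
    ]


if __name__ == "__main__":
    # "data-governance-team" is a hypothetical group name.
    for statement in results_table_grants("data-governance-team"):
        print(statement + ";")
```

Because the table exposes metastore-wide results and sample values, grant it only to a narrowly scoped group rather than to individual users one by one.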

Set up governance controls based on data classification results

Mask sensitive data using an ABAC policy

Databricks recommends using Unity Catalog attribute-based access control (ABAC) to create governance controls based on data classification results.

To create a policy, click New policy. The policy form is pre-filled to mask columns with the classification tag being reviewed. To mask the data, specify any masking function registered in Unity Catalog and click Save.

You can also create a policy that covers multiple classification tags by changing When column to meets condition and providing multiple tags.

For example, to create a policy called "Confidential" which masks any name, email, or phone number, set the meets condition to hasTag("class.name") OR hasTag("class.email_address") OR hasTag("class.phone_number").
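The meets condition is a chain of hasTag checks joined with OR. As a minimal sketch, assuming you keep the list of classification tags to cover in code, the hypothetical helper has_any_tag_condition below builds that condition string; given the three tags from the Confidential example, it returns the same condition shown above.

```python
from typing import Sequence


def has_any_tag_condition(tags: Sequence[str]) -> str:
    """Return a condition that matches a column carrying any of `tags`."""
    # Drop duplicates while keeping the first-seen order.
    unique = list(dict.fromkeys(tags))
    if not unique:
        raise ValueError("at least one tag is required")
    for tag in unique:
        # The tag is embedded in a double-quoted string, so reject embedded quotes.
        if '"' in tag:
            raise ValueError(f"tag name must not contain a double quote: {tag!r}")
    return " OR ".join(f'hasTag("{tag}")' for tag in unique)


if __name__ == "__main__":
    print(has_any_tag_condition(
        ["class.name", "class.email_address", "class.phone_number"]))
```

Paste the printed string into the meets condition field of the policy form.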

GDPR discovery and deletion

This example notebook shows how you can use data classification to assist with data discovery and deletion for GDPR compliance.

GDPR discovery and deletion using data classification notebook

How to handle incorrect tags

If data is incorrectly tagged, you can manually remove the tag. The tag will not be reapplied in future scans.

To remove a tag using the UI, navigate to the table in Catalog Explorer and edit the column tags.

To remove a tag using SQL:

```sql
ALTER TABLE catalog.schema.table
ALTER COLUMN col
UNSET TAGS ('class.phone_number', 'class.us_ssn');
```
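If you need to remove tags from many columns, generating the statement can help avoid typos in quoted names. This is a minimal sketch: unset_tags_statement and quote_ident are hypothetical helpers, main, sales, customers, and phone are placeholder names, and the code only returns the SQL text without running it.

```python
from typing import Sequence


def quote_ident(name: str) -> str:
    """Backtick-quote an identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def unset_tags_statement(catalog: str, schema: str, table: str,
                         column: str, tags: Sequence[str]) -> str:
    """Build an ALTER TABLE ... ALTER COLUMN ... UNSET TAGS statement."""
    if not tags:
        raise ValueError("at least one tag is required")
    for tag in tags:
        # Tags are emitted as single-quoted string literals.
        if "'" in tag:
            raise ValueError(f"tag name must not contain a single quote: {tag!r}")
    full_name = ".".join(quote_ident(part) for part in (catalog, schema, table))
    tag_list = ", ".join(f"'{tag}'" for tag in tags)
    return (f"ALTER TABLE {full_name} ALTER COLUMN {quote_ident(column)} "
            f"UNSET TAGS ({tag_list})")


if __name__ == "__main__":
    # main.sales.customers and phone are placeholder names.
    print(unset_tags_statement("main", "sales", "customers", "phone",
                               ["class.phone_number", "class.us_ssn"]))
```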

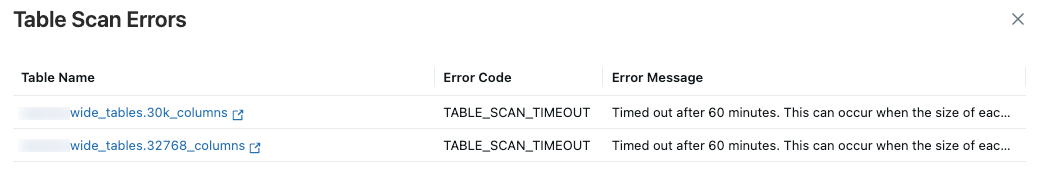

Scan errors

If any errors occur during the scan, an Errors button appears at the upper right of the results table.

Click the button to display the tables that failed the scan and associated error messages.

By default, tables that fail to scan are skipped and retried the following day.

View Data Classification expenses

To understand how Data Classification is billed, see the pricing page. You can view expenses related to Data Classification either by running a query or viewing the usage dashboard.

The initial scan of a catalog costs more than later scans of the same catalog, because later scans are incremental.

View usage from the system table system.billing.usage

You can query Data Classification expenses from system.billing.usage. The fields created_by and catalog_id can be used optionally to break down costs:

- created_by: Include to see costs by the user who triggered the usage.
- catalog_id: Include to see costs by catalog. The catalog ID is shown in the system.data_classification.results table.

Example query for the last 30 days:

```sql
SELECT
  usage_date,
  identity_metadata.created_by AS created_by,
  usage_metadata.catalog_id AS catalog_id,
  SUM(usage_quantity) AS dbus
FROM
  system.billing.usage
WHERE
  usage_date >= DATE_SUB(CURRENT_DATE(), 30)
  AND billing_origin_product = 'DATA_CLASSIFICATION'
GROUP BY
  usage_date,
  identity_metadata.created_by,
  usage_metadata.catalog_id
ORDER BY
  usage_date DESC,
  created_by;
```
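The query groups by date, user, and catalog, then sorts by date descending and user ascending. If you export ungrouped rows with flattened usage_date, created_by, catalog_id, and usage_quantity fields and want to reproduce the same breakdown client-side, the following sketch mirrors that grouping in plain Python. The row format (a list of dictionaries) and the function name summarize_usage are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List, Tuple


def summarize_usage(rows: Iterable[dict]) -> List[tuple]:
    """Sum usage_quantity per (usage_date, created_by, catalog_id), sorted like
    the SQL query: usage_date descending, then created_by ascending."""
    totals: Dict[Tuple, float] = defaultdict(float)
    for row in rows:
        key = (row["usage_date"], row.get("created_by"), row.get("catalog_id"))
        totals[key] += row["usage_quantity"]
    result = [(day, user, catalog, dbus)
              for (day, user, catalog), dbus in totals.items()]
    # Two stable sorts: secondary key first, then primary key.
    # None creators sort first, like NULLS FIRST for an ascending SQL sort.
    result.sort(key=lambda r: (r[1] is not None, r[1] or ""))
    result.sort(key=lambda r: r[0], reverse=True)
    return result


if __name__ == "__main__":
    # Hypothetical sample rows, not real billing data.
    sample = [
        {"usage_date": date(2024, 5, 1), "created_by": "a@example.com",
         "catalog_id": "c1", "usage_quantity": 1.5},
        {"usage_date": date(2024, 5, 1), "created_by": "a@example.com",
         "catalog_id": "c1", "usage_quantity": 2.0},
    ]
    for line in summarize_usage(sample):
        print(line)
```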

View usage from the usage dashboard

If you already have a usage dashboard configured in your workspace, you can filter it by selecting Data Classification as the Billing Origin Product. If you do not have a usage dashboard configured, you can import one and apply the same filter. For details, see Usage dashboards.

Supported classification tags

The following tables list the system governed tags that Data Classification supports.

Tags available to global customers

| Class | Description |
|---|---|
| class.credit_card | Credit card number |
| class.email_address | Email address |
| class.iban_code | International Bank Account Number (IBAN) |
| class.ip_address | Internet Protocol Address (IPv4 or IPv6) |
| class.location | Location |
| class.name | Name of a person |
| class.phone_number | Phone number |
| class.url | URL |
| class.us_bank_number | US bank number |
| class.us_driver_license | US driver license |
| class.us_itin | US Individual Taxpayer Identification Number |
| class.us_passport | US Passport |
| class.us_ssn | US Social Security Number |
| class.vin | Vehicle Identification Number (VIN) |

Tags available to European customers

These tags are available in workspaces in regions in Europe.

| Class | Description |
|---|---|
| class.de_id_card | German ID card number (Personalausweisnummer) |
| class.de_svnr | German social insurance number (Sozialversicherungsnummer) |
| class.de_tax_id | German tax ID (Steueridentifikationsnummer) |
| class.uk_nhs | UK National Health Service (NHS) number |
| class.uk_nino | UK National Insurance Number (NINO) |

Tags available to Australian customers

These tags are available in workspaces in regions in Australia.

| Class | Description |
|---|---|
| class.au_medicare | Australian Medicare card number |
| class.au_tfn | Australian Tax File Number (TFN) |
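To check tag names before using them in a policy condition, you can keep the tags from these tables in code. The following sketch hard-codes the three tables; the region keys "europe" and "australia" are hypothetical labels rather than Databricks region identifiers, and the sets must be updated by hand if the list of supported tags changes.

```python
from typing import FrozenSet, Iterable, List, Optional

# Tags available to all customers.
GLOBAL_TAGS: FrozenSet[str] = frozenset({
    "class.credit_card", "class.email_address", "class.iban_code",
    "class.ip_address", "class.location", "class.name", "class.phone_number",
    "class.url", "class.us_bank_number", "class.us_driver_license",
    "class.us_itin", "class.us_passport", "class.us_ssn", "class.vin",
})

# Hypothetical region labels; these are not Databricks region identifiers.
REGIONAL_TAGS = {
    "europe": frozenset({
        "class.de_id_card", "class.de_svnr", "class.de_tax_id",
        "class.uk_nhs", "class.uk_nino",
    }),
    "australia": frozenset({"class.au_medicare", "class.au_tfn"}),
}


def supported_tags(region: Optional[str] = None) -> FrozenSet[str]:
    """Return the global tags plus any tags specific to `region`."""
    if region is None:
        return GLOBAL_TAGS
    try:
        return GLOBAL_TAGS | REGIONAL_TAGS[region.lower()]
    except KeyError:
        raise ValueError(f"unknown region label: {region!r}") from None


def unsupported_tags(tags: Iterable[str],
                     region: Optional[str] = None) -> List[str]:
    """List the tags in `tags` that are not supported for `region`."""
    allowed = supported_tags(region)
    return [tag for tag in tags if tag not in allowed]
```

For example, unsupported_tags(["class.uk_nhs"]) flags class.uk_nhs because it is only available in European regions.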

Limitations

- Views and metric views are not supported. If the view is based on existing tables, Databricks recommends classifying the underlying tables to see if they contain sensitive data.