What's coming?

Learn about features and behavioral changes in upcoming Databricks releases.

Data Classification will soon be available by default for some workspaces with the compliance security profile enabled

In mid-March 2026, Data Classification will be available by default for workspaces with the compliance security profile enabled and HIPAA controls selected.

EventBridge support will soon be available for file events provided queues

In late February 2026, EventBridge support will be available for file events provided queues for S3 locations. Currently, file events can only be set up using SNS or by routing storage events directly to SQS.

Databricks Apps compute sizing will soon be available by default for workspaces with the compliance security profile enabled

Compute sizing for Databricks Apps will be available by default for workspaces with the compliance security profile enabled in early March 2026.

ADBC will become the default driver for new Power BI connections

Starting in February, new connections created in Power BI Desktop or Power BI Service will automatically use the Arrow Database Connectivity (ADBC) driver by default. Existing connections will continue to use ODBC unless you manually update them to ADBC.

If you prefer to continue using ODBC for new connections, you will have two options:

- When creating a connection, select default as the Implementation.

- In Power BI Desktop, disable the ADBC feature preview, which will restore ODBC as the default for all new connections.

To learn more about ADBC, see Configure ADBC or ODBC driver for Power BI and the Databricks Driver in the ADBC repository.

ODBC Driver name change

In an upcoming release, the Spark ODBC Driver (Simba) will be renamed to the Databricks ODBC Driver. Going forward, all new versions of the driver will use this updated name. As a result, when downloading the latest version of the driver, you will need to make minor updates in your application to use the rebranded driver. All existing functionality and performance will remain the same.

Previous versions of the driver will continue to be available on the All ODBC Driver Versions page and will remain supported for at least two years.

New slicing logic for jobs timeline tables

Starting January 19, 2026, the jobs timeline tables use a new clock-hour-aligned slicing logic. Time slices now align to standard clock hour boundaries (5:00-6:00 PM, 6:00-7:00 PM, and so on) instead of one-hour intervals based on the run's start time. New rows will use the new slicing logic, while existing rows remain unchanged.
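The difference between the two slicing schemes can be sketched in a few lines of Python. This is only an illustration, not the implementation behind the tables: the function names are hypothetical, and it assumes that the first and last slices are truncated to the run's actual start and end times.

```python
from datetime import datetime, timedelta


def clock_hour_slices(start: datetime, end: datetime) -> list:
    """Split [start, end) into slices that break on clock-hour boundaries.

    Assumption: partial first and last slices are kept as-is.
    """
    slices = []
    cursor = start
    while cursor < end:
        # Top of the next clock hour strictly after the cursor.
        next_hour = cursor.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
        slice_end = min(next_hour, end)
        slices.append((cursor, slice_end))
        cursor = slice_end
    return slices


def start_aligned_slices(start: datetime, end: datetime) -> list:
    """Previous behavior: one-hour intervals counted from the run's start time."""
    slices = []
    cursor = start
    while cursor < end:
        slice_end = min(cursor + timedelta(hours=1), end)
        slices.append((cursor, slice_end))
        cursor = slice_end
    return slices


# Hypothetical run from 5:20 PM to 7:05 PM.
run_start = datetime(2026, 1, 19, 17, 20)
run_end = datetime(2026, 1, 19, 19, 5)
new_style = clock_hour_slices(run_start, run_end)     # 5:20-6:00, 6:00-7:00, 7:00-7:05
old_style = start_aligned_slices(run_start, run_end)  # 5:20-6:20, 6:20-7:05
```

Under these assumptions, the same run produces three slices with clock-hour alignment and two with start-time alignment, so queries that count rows per run may need to account for the change.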

See Clock-hour-aligned slicing logic.

Public URLs for ODBC driver downloads will be disabled

In an upcoming release, public URLs for automated Apache Spark ODBC driver downloads will be disabled. After this change, all driver downloads, including automated processes, will require authentication. This will break automated processes that download Apache Spark ODBC drivers using direct public links without authentication.

Catalog Explorer navigation updates

Catalog Explorer will soon receive navigation improvements to streamline workflows and help you discover and manage data assets more efficiently.

Simplified navigation:

The duplicative Catalogs tab is removed to reduce redundancy and focus on a single catalog navigation surface. DBFS and Send feedback actions move into the kebab menu for a cleaner layout.

New Suggested section:

A new Suggested tab on the Catalog Explorer landing page highlights frequently used objects, example objects for first-time users, and user favorites. This helps you quickly re-engage with important assets or discover helpful starting points.

Consolidated entry points:

Related capabilities are grouped under clearer categories to reduce visual noise and improve findability:

- Govern – Entry point for governed tags, metastore administration, and data classification

- Connect – Entry points for external locations, external data, credentials, and connections

- Share – Entry points for Delta Sharing and Clean Rooms

These groupings replace scattered sub-tabs and create a more intuitive, scalable information architecture.

Changes to open Delta Sharing recipient tokens

Delta Sharing for open recipients will transition to a new recipient-specific URL format that is used to connect to the Delta Sharing server. This change improves network security and firewall configurations, aligning to best practices for sharing endpoints.

Recipient tokens created on or after March 9, 2026:

Starting March 9, 2026, new open recipient tokens will use a new, recipient-specific URL format to improve security and network filtering. This change applies to tokens issued on or after this date; earlier tokens can continue to use the older URL format until they expire. See New token expiration lifetime policy in the following section.

For OIDC, recipients must transition to the new URL format by March 9, 2027. Starting March 9, 2026, providers will be able to view the new URL format for existing recipients on the Delta Sharing page and share it with those recipients.

The new URLs will be in the following format:

https://2d4b0370-9d5c-4743-9297-72ba0f5caa8d.delta-sharing.us-central1.gcp.databricks.com
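If you maintain firewall allowlists, a small check like the following might help tell recipient-specific endpoints apart from older ones. It is a sketch under one assumption taken from the example above: that the first label of the hostname is a UUID-style recipient identifier. The function name is hypothetical.

```python
import uuid
from typing import Optional
from urllib.parse import urlparse


def recipient_specific_host(endpoint: str) -> Optional[str]:
    """Return the hostname if its first label parses as a UUID, else None.

    Assumption: recipient-specific URLs start with a UUID-style label,
    as in the example URL shown in this section.
    """
    host = urlparse(endpoint).hostname
    if not host:
        return None
    first_label = host.split(".", 1)[0]
    try:
        uuid.UUID(first_label)
    except ValueError:
        return None
    return host


example = "https://2d4b0370-9d5c-4743-9297-72ba0f5caa8d.delta-sharing.us-central1.gcp.databricks.com"
host_to_allowlist = recipient_specific_host(example)
```

The returned hostname is the value you would add to an allowlist; a result of None suggests the endpoint does not follow the pattern assumed here.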

New token expiration lifetime policy:

Starting December 8, 2025, all new open sharing recipient tokens will be issued with a maximum expiration of one year from the date of creation. Tokens with longer or unlimited validity can no longer be created.

For tokens created using the previous recipient URL format between December 8, 2025 and March 9, 2026, each token automatically expires one year after its creation. When rotating tokens, providers can configure a downtime window to allow recipients to migrate. During this window, both the old and new recipient URLs continue to work.

If you currently use recipient tokens with long or unlimited lifetimes, review your integrations and plan to rotate tokens at least annually, because those tokens will expire on December 8, 2026.
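To plan rotations, you could estimate when each token stops working. The sketch below is one reading of the policy described above, not an official calculation: it assumes "one year" means the same calendar date in the following year, and that tokens created before December 8, 2025 are capped at December 8, 2026. All names are hypothetical.

```python
from datetime import date
from typing import Optional

POLICY_START = date(2025, 12, 8)   # one-year maximum applies from this date
LEGACY_CUTOFF = date(2026, 12, 8)  # assumed cap for older long-lived tokens


def add_one_year(d: date) -> date:
    """Same calendar date next year; February 29 falls back to February 28."""
    try:
        return d.replace(year=d.year + 1)
    except ValueError:
        return d.replace(year=d.year + 1, day=28)


def effective_expiry(created: date, configured_expiry: Optional[date]) -> date:
    """Estimate when a token expires; None means no configured expiration."""
    cap = add_one_year(created) if created >= POLICY_START else LEGACY_CUTOFF
    if configured_expiry is None:
        return cap
    return min(configured_expiry, cap)


# A token created in January 2026 with no explicit expiration.
new_token_expiry = effective_expiry(date(2026, 1, 15), None)
# An older token that was created with unlimited validity.
legacy_token_expiry = effective_expiry(date(2024, 6, 1), None)
```

Comparing the result with today's date gives a simple way to list the tokens that need rotation first.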

Time travel and VACUUM behavioral changes for Unity Catalog managed tables

In January 2026, the time travel and VACUUM behavioral changes introduced in Databricks Runtime 18.0 will extend to serverless compute, Databricks SQL, and Databricks Runtime 12.2 and above for Unity Catalog managed tables.

These changes include:

- Time travel queries are blocked if they exceed delta.deletedFileRetentionDuration.

- delta.logRetentionDuration must be greater than or equal to delta.deletedFileRetentionDuration.
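The two rules can be checked before you change table properties. The following sketch is illustrative only: the helper names and the table name are hypothetical, retention is expressed in whole days for simplicity, and the generated statement uses the standard ALTER TABLE ... SET TBLPROPERTIES form.

```python
from datetime import timedelta


def time_travel_allowed(version_age: timedelta, deleted_file_retention: timedelta) -> bool:
    """A time travel query is blocked when it reaches back past the retention window."""
    return version_age <= deleted_file_retention


def retention_properties_sql(table: str, log_days: int, deleted_file_days: int) -> str:
    """Build an ALTER TABLE statement, enforcing log retention >= deleted file retention."""
    if log_days < deleted_file_days:
        raise ValueError(
            "delta.logRetentionDuration must be greater than or equal to "
            "delta.deletedFileRetentionDuration"
        )
    return (
        f"ALTER TABLE {table} SET TBLPROPERTIES ("
        f"'delta.logRetentionDuration' = 'interval {log_days} days', "
        f"'delta.deletedFileRetentionDuration' = 'interval {deleted_file_days} days')"
    )


# Hypothetical table name; run the resulting statement with spark.sql(...) or in a SQL editor.
statement = retention_properties_sql("main.default.events", log_days=30, deleted_file_days=7)
```

Validating the pair of values up front avoids issuing a property change that conflicts with the second rule.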

Email notifications for expiring personal access tokens

Databricks will soon send email notifications to workspace users approximately seven days before their personal access tokens expire. Notifications are sent only to workspace users (not service principals) with email-based usernames. All expiring tokens within the same workspace are grouped together in a single email.

See Monitor and revoke personal access tokens.

Updated end of support timeline for legacy dashboards

- Official support for the legacy version of dashboards has ended as of April 7, 2025. Only critical security issues and service outages will be addressed.

- November 3, 2025: Databricks began presenting users with a dismissible warning dialog when accessing any legacy dashboard. The dialog reminds users that access to legacy dashboards will end on January 12, 2026, and provides a one-click option to migrate to AI/BI.

- January 12, 2026: Legacy dashboards and APIs will no longer be directly accessible. However, they will still provide the ability to update in place to AI/BI. The migration page will be available until March 2, 2026.

To help transition to AI/BI dashboards, upgrade tools are available in both the user interface and the API. For instructions on how to use the built-in migration tool in the UI, see Clone a legacy dashboard to an AI/BI dashboard. For tutorials about creating and managing dashboards using the REST API, see Use Databricks APIs to manage dashboards.

Lakehouse Federation sharing and default storage

Delta Sharing on Lakehouse Federation is in Beta, allowing Delta Sharing data providers to share foreign catalogs and tables. By default, data must be temporarily materialized and stored on default storage (Private Preview). Currently, users must manually enable the Delta Sharing for Default Storage – Expanded Access feature in the account console to use Lakehouse Federation sharing.

After Delta Sharing for Default Storage – Expanded Access is enabled by default for all Databricks users, Delta Sharing on Lakehouse Federation will automatically be available in regions where default storage is supported.

See Default storage in Databricks and Add foreign schemas or tables to a share.

Reload notification in workspaces

In an upcoming release, a message to reload your workspace tab will display if your workspace tab has been open for a long time without refreshing. This will help ensure you are always using the latest version of Databricks with the newest features and fixes.

SAP Business Data Cloud (BDC) Connector for Databricks will soon be generally available

The SAP Business Data Cloud (BDC) Connector for Databricks is a new feature that allows you to share data from SAP BDC to Databricks and from Databricks to SAP BDC using Delta Sharing. This feature will be generally available at the end of September.

Delta Sharing for tables on default storage will soon be enabled by default (Beta)

This default storage update for Delta Sharing has expanded sharing capabilities, allowing providers to share tables backed by default storage to any Delta Sharing recipient (open or Databricks), including recipients using classic compute. This feature is currently in Beta and requires providers to manually enable Delta Sharing for Default Storage – Expanded Access in the account console. Soon, this will be enabled by default for all users.

See Limitations.

Behavior change for the Auto Loader incremental directory listing option

The Auto Loader cloudFiles.useIncrementalListing option is deprecated. Although this note discusses a change to the option's default value and how to continue using it after this change, Databricks recommends against using this option in favor of file notification mode with file events.

In an upcoming Databricks Runtime release, the value of the deprecated Auto Loader cloudFiles.useIncrementalListing option will, by default, be set to false. Setting this value to false causes Auto Loader to perform a full directory listing each time it's run. Currently, the default value of the cloudFiles.useIncrementalListing option is auto, instructing Auto Loader to make a best-effort attempt at detecting if an incremental listing can be used with a directory.

To continue using the incremental listing feature, set the cloudFiles.useIncrementalListing option to auto. When you set this value to auto, Auto Loader makes a best-effort attempt to do a full listing once every seven incremental listings, which matches the behavior of this option before this change.
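Setting the option explicitly keeps a stream independent of the default value. The sketch below builds the reader options as a plain dictionary so that it can be checked outside Spark; the helper name, the file format, and the source path are placeholders, and other options a real stream might need (such as a schema or schema location) are omitted.

```python
def auto_loader_options(file_format: str, incremental_listing: str = "auto") -> dict:
    """Return Auto Loader reader options with the listing mode set explicitly."""
    allowed = {"auto", "true", "false"}
    if incremental_listing not in allowed:
        raise ValueError(f"incremental_listing must be one of {sorted(allowed)}")
    return {
        "cloudFiles.format": file_format,
        # Deprecated option; set explicitly so a changed default does not alter behavior.
        "cloudFiles.useIncrementalListing": incremental_listing,
    }


options = auto_loader_options("json")

# In a Databricks notebook, where `spark` is predefined, the options could be applied like this:
# df = (
#     spark.readStream.format("cloudFiles")
#     .options(**options)
#     .load("<source-path>")
# )
```

Because the option is deprecated, treat this as a stopgap while you move to file notification mode with file events.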

To learn more about Auto Loader directory listing, see Auto Loader streams with directory listing mode.

Behavior change when dataset definitions are removed from Lakeflow Spark Declarative Pipelines

An upcoming release of Lakeflow Spark Declarative Pipelines will change the behavior when a materialized view or streaming table is removed from a pipeline. With this change, the removed materialized view or streaming table will not be deleted automatically when the next pipeline update runs. Instead, you will be able to use the DROP MATERIALIZED VIEW command to delete a materialized view or the DROP TABLE command to delete a streaming table. After dropping an object, running a pipeline update will not recover the object automatically. A new object is created if a materialized view or streaming table with the same definition is re-added to the pipeline. You can, however, recover an object using the UNDROP command.

The sourceIpAddress field in audit logs will no longer include a port number

Due to a bug, certain authorization and authentication audit logs include a port number in addition to the IP in the sourceIPAddress field (for example, "sourceIPAddress":"10.2.91.100:0"). The port number, which is logged as 0, does not provide any real value and is inconsistent with the rest of the Databricks audit logs. To enhance the consistency of audit logs, Databricks plans to change the format of the IP address for these audit log events. This change will gradually roll out starting in early August 2024.

If the audit log contains a sourceIpAddress of 0.0.0.0, Databricks might stop logging it.
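If downstream jobs parse this field, a tolerant normalizer can keep them working through the gradual rollout by accepting both the old and new formats. This is a minimal sketch with a hypothetical function name. It handles the IPv4-with-port form shown in the example and leaves any value it does not recognize unchanged.

```python
import ipaddress


def normalize_source_ip(value: str) -> str:
    """Strip a trailing ':port' from an address; return other values unchanged."""
    try:
        ipaddress.ip_address(value)
        return value  # already a bare IPv4 or IPv6 address
    except ValueError:
        pass
    host, sep, port = value.rpartition(":")
    if sep and port.isdigit():
        try:
            ipaddress.ip_address(host)
            return host
        except ValueError:
            pass
    return value


old_format = normalize_source_ip("10.2.91.100:0")
new_format = normalize_source_ip("10.2.91.100")
```

Both calls return the same bare address, so comparisons and joins on the field stay consistent before and after the change.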

External support ticket submission will soon be deprecated

Databricks is transitioning the support ticket submission experience from help.databricks.com to the help menu in the Databricks workspace. Support ticket submission via help.databricks.com will soon be deprecated. You'll continue to view and triage your tickets at help.databricks.com.

The in-product experience, which is available if your organization has a Databricks Support contract, integrates with Databricks Assistant to help address your issues quickly without having to submit a ticket.

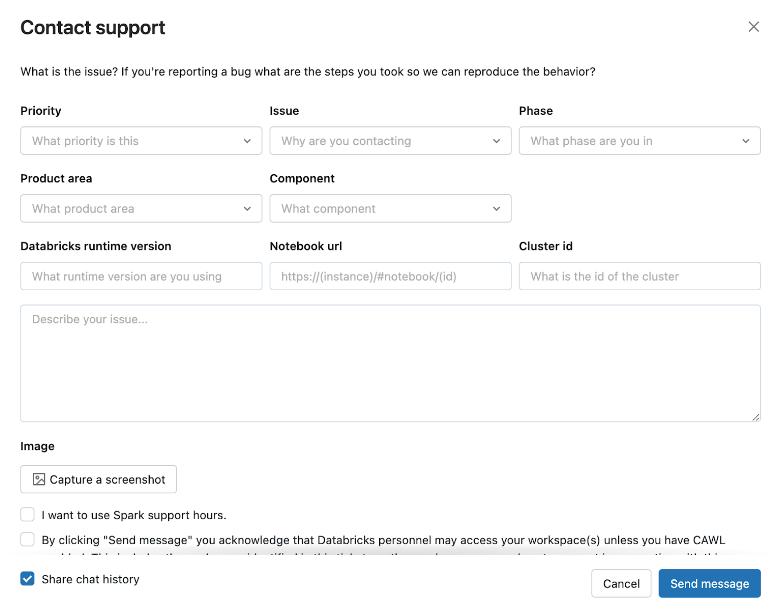

To access the in-product experience, click your user icon in the top bar of the workspace, and then click Contact Support or type “I need help” into the assistant.

The Contact Support modal opens.

If the in-product experience is down, send requests for support with detailed information about your issue to help@databricks.com. For more information, see Get help.