Access S3 with IAM credential passthrough with SAML 2.0 federation (legacy)

This documentation has been retired and might not be updated.

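Credential passthrough is deprecated starting with Databricks Runtime 15.0 and will be removed in a future Databricks Runtime version. Databricks recommends that you upgrade to Unity Catalog. Unity Catalog simplifies the security and governance of your data by providing a central place to administer and audit data access across multiple workspaces in your account. See What is Unity Catalog?.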

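AWS supports SAML 2.0 identity federation to allow single sign-on to the AWS Management Console and AWS APIs. Databricks workspaces that are configured with single sign-on can use AWS IAM federation to maintain the mapping of users to IAM roles within your identity provider (IdP) rather than within Databricks using SCIM. This lets you centralize data access within your IdP and have those entitlements passed directly to Databricks clusters.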

IAM credential passthrough with SAML 2.0 federation can be configured only when unified login is disabled. Databricks recommends that you upgrade to Unity Catalog (see What is Unity Catalog?). If your account was created after June 21, 2023, or you did not configure SSO before December 12, 2024, and you require IAM credential passthrough with SAML 2.0 federation, contact your Databricks account team.

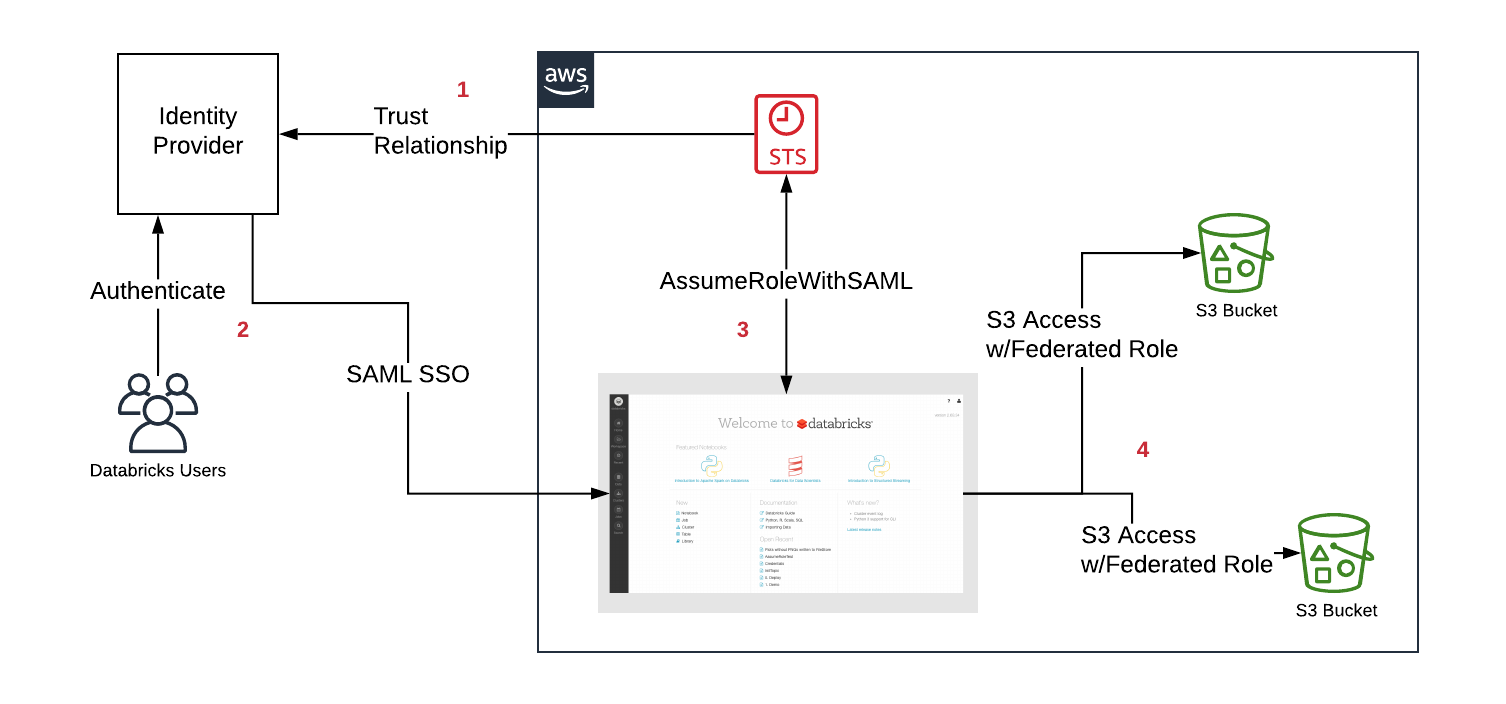

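The following diagram illustrates the federation workflow:

- You configure a trust relationship between your IdP and your AWS account so that the IdP controls which roles users can assume.

- Users log in to Databricks through SAML SSO, and the IdP passes their role entitlements.

- Databricks calls the AWS Security Token Service (STS) and assumes a role for the user by sending the SAML response and obtaining temporary tokens.

- When a user accesses S3 from a Databricks cluster, Databricks Runtime uses the user's temporary tokens to perform the access automatically and securely.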
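The STS step in this workflow is an AssumeRoleWithSAML call. The following Python sketch only builds the form-encoded request body for the STS Query API, to show which inputs the exchange needs: the role ARN, the SAML provider ARN (PrincipalArn), the base64-encoded SAML response, and an optional session duration. It is an illustration, not how Databricks implements the call; the function name, account ID, role name, and provider name are hypothetical, and nothing is sent over the network.

```python
import base64
from urllib.parse import parse_qs, urlencode


def build_assume_role_with_saml_body(role_arn: str, principal_arn: str,
                                     saml_response_xml: str,
                                     duration_seconds: int = 3600) -> str:
    """Form-encode an STS AssumeRoleWithSAML request (hypothetical helper).

    AssumeRoleWithSAML does not require AWS credentials: the signed SAML
    assertion from the IdP is what authenticates the caller.
    """
    # STS expects the SAML response base64-encoded.
    assertion_b64 = base64.b64encode(saml_response_xml.encode("utf-8")).decode("ascii")
    return urlencode({
        "Action": "AssumeRoleWithSAML",
        "Version": "2011-06-15",
        "RoleArn": role_arn,            # role the user is entitled to
        "PrincipalArn": principal_arn,  # SAML provider created in IAM
        "SAMLAssertion": assertion_b64,
        # STS accepts 900 seconds up to the role's maximum session duration.
        "DurationSeconds": str(duration_seconds),
    })


if __name__ == "__main__":
    body = build_assume_role_with_saml_body(
        "arn:aws:iam::123456789012:role/role-a",
        "arn:aws:iam::123456789012:saml-provider/okta-databricks",
        "<samlp:Response>...</samlp:Response>",
    )
    # Decode the body again to inspect what would be POSTed to
    # https://sts.amazonaws.com/.
    print(parse_qs(body)["Action"])
```

The temporary credentials that STS returns for such a request are what the cluster then uses for S3 access on the user's behalf.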

When Allow IAM role entitlement auto sync is enabled, federation for IAM credential passthrough always maps roles to users from SAML. It overwrites any roles previously set through the SCIM API.

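Requirements

- SAML single sign-on configured in your Databricks workspace.

- AWS administrator access to:

  - IAM roles and policies in the AWS account of the Databricks deployment.

  - The AWS account of the S3 bucket.

- An identity provider (IdP) administrator to configure your IdP to pass AWS roles to Databricks.

- A Databricks workspace admin to include AWS roles in the SAML assertion.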

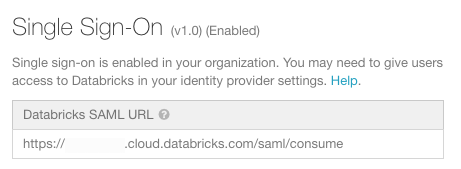

Step 1: Get the Databricks SAML URL

- Go to the settings page.

- Click the Authentication tab.

- Copy the Databricks SAML URL.

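Step 2: Download identity provider metadata

The steps in the identity provider console differ slightly for each identity provider. For examples with your identity provider, see Integrating third-party SAML solution providers with AWS.

- In your identity provider's admin console, find your Databricks application for single sign-on.

- Download the SAML metadata.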
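Before uploading the file in the next step, you can sanity-check that what you downloaded is SAML 2.0 IdP metadata. The following Python sketch uses only the standard library to parse the document, read its entityID, and confirm that it contains an IDPSSODescriptor with a signing key and at least one SingleSignOnService location. The function name and the trimmed sample document are hypothetical; real metadata from your IdP contains more elements, including the X.509 certificate inside KeyDescriptor.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the SAML 2.0 metadata specification.
MD = "{urn:oasis:names:tc:SAML:2.0:metadata}"

# Hypothetical, heavily trimmed sample of IdP metadata.
SAMPLE_METADATA = """\
<md:EntityDescriptor xmlns:md="urn:oasis:names:tc:SAML:2.0:metadata"
                     entityID="http://www.okta.com/exk123">
  <md:IDPSSODescriptor protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <md:KeyDescriptor use="signing"/>
    <md:SingleSignOnService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST"
                            Location="https://example.okta.com/app/sso/saml"/>
  </md:IDPSSODescriptor>
</md:EntityDescriptor>
"""


def describe_idp_metadata(xml_text) -> dict:
    """Return key facts from SAML IdP metadata (str or bytes), or raise ValueError."""
    root = ET.fromstring(xml_text)
    if root.tag != MD + "EntityDescriptor":
        raise ValueError("root element is not a SAML 2.0 EntityDescriptor")
    idp = root.find(MD + "IDPSSODescriptor")
    if idp is None:
        raise ValueError("no IDPSSODescriptor: this is not identity provider metadata")
    keys = idp.findall(MD + "KeyDescriptor")
    sso = [el.get("Location") for el in idp.findall(MD + "SingleSignOnService")]
    if not sso:
        raise ValueError("no SingleSignOnService endpoint found")
    return {
        "entity_id": root.get("entityID"),
        # A KeyDescriptor without a "use" attribute applies to signing too.
        "has_signing_key": any(k.get("use") in (None, "signing") for k in keys),
        "sso_urls": sso,
    }


if __name__ == "__main__":
    # For a real file: describe_idp_metadata(open("metadata.xml", "rb").read())
    print(describe_idp_metadata(SAMPLE_METADATA)["entity_id"])
```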

Step 3: Configure the identity provider

- In the AWS console, go to the IAM service.

- Click the Identity Providers tab in the sidebar.

- Click Create Provider.

- In Provider Type, select SAML.

- In Provider Name, enter a name.

- In Metadata Document, click Choose File and navigate to the file that contains the metadata document you downloaded above.

- Click Next Step, and then click Create.

Step 4: Configure the IAM role for federation

Only roles used for data access should be used for federation with Databricks. We do not recommend allowing roles that are normally used for AWS console access, because they may have more privileges than necessary.

- In the AWS console, go to the IAM service.

- Click the Roles tab in the sidebar.

- Click Create role.

- Under Select type of trusted entity, select SAML 2.0 federation.

- In SAML provider, select the name you created in Step 3.

- Select Allow programmatic access only.

- In Attribute, select SAML:aud.

- In Value, paste the Databricks SAML URL you copied in Step 1.

- Click Next: Permissions, Next: Tags, and Next: Review.

- In the Role Name field, enter a role name.

- Click Create role. The list of roles is displayed.

- Add an inline policy to the role. This policy grants access to the S3 bucket.

- On the Permissions tab, click [ ].

- Click the JSON tab. Copy this policy and replace <s3-bucket-name> with the name of your bucket.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::<s3-bucket-name>"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject", "s3:PutObjectAcl"],
      "Resource": ["arn:aws:s3:::<s3-bucket-name>/*"]
    }
  ]
}
```

- Click Review policy.

- In the Name field, enter a policy name.

- Click Create policy.
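If you manage several buckets, you might generate this policy instead of editing the placeholder by hand. A minimal Python sketch, assuming one general-purpose S3 bucket per policy; the helper name and the bucket name are hypothetical. It also makes the two-statement structure explicit: s3:ListBucket is a bucket-level action and targets the bucket ARN, while the object actions target arn:aws:s3:::<bucket>/*.

```python
import json
import re

# General-purpose S3 bucket names: 3-63 characters, lowercase letters, digits,
# dots and hyphens, starting and ending with a letter or digit.
BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def s3_inline_policy(bucket: str) -> str:
    """Render the inline policy above for one bucket as a JSON string."""
    if not BUCKET_NAME.fullmatch(bucket):
        raise ValueError(f"not a valid S3 bucket name: {bucket!r}")
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {   # Bucket-level action, so it targets the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {   # Object-level actions target the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject",
                           "s3:DeleteObject", "s3:PutObjectAcl"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    # "my-data-bucket" is a hypothetical bucket name.
    print(s3_inline_policy("my-data-bucket"))
```

The printed JSON can be pasted into the JSON tab in place of the template.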


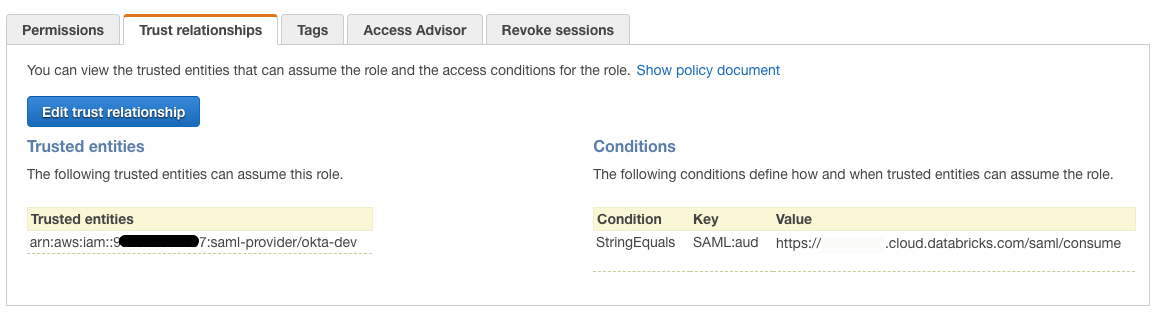

On the Trust relationships tab, you should see something like the following.

- Click the Edit trust relationship button. The resulting IAM trust policy document should be similar to the following:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::<accountID>:saml-provider/<IdP-name>"
      },
      "Action": "sts:AssumeRoleWithSAML",
      "Condition": {
        "StringEquals": {
          "SAML:aud": "https://xxxxxx.cloud.databricks.com/saml/consume"
        }
      }
    }
  ]
}
```
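To check trust policies programmatically (for example, across several AWS accounts), the following Python sketch builds the policy shown above and tests whether an existing policy lets a given SAML provider assume the role for the Databricks SAML URL. A missing provider in the trust relationship is the AccessDenied cause listed under Troubleshooting. The helper names, account ID, IdP name, and workspace URL are hypothetical, and only the StringEquals condition form used in this document is evaluated.

```python
import json


def saml_trust_policy(account_id: str, idp_name: str, saml_url: str) -> dict:
    """Trust policy letting users federated through idp_name assume the role,
    but only for SAML responses whose audience is the Databricks SAML URL."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {
                "Federated": f"arn:aws:iam::{account_id}:saml-provider/{idp_name}"
            },
            "Action": "sts:AssumeRoleWithSAML",
            "Condition": {"StringEquals": {"SAML:aud": saml_url}},
        }],
    }


def _as_list(value):
    """IAM policy fields may hold a single value or a list of values."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def trusts_saml_audience(policy: dict, provider_arn: str, saml_url: str) -> bool:
    """True if an Allow statement lets provider_arn call AssumeRoleWithSAML
    with SAML:aud equal to saml_url."""
    for stmt in _as_list(policy.get("Statement")):
        principal = stmt.get("Principal", {})
        federated = _as_list(principal.get("Federated")) if isinstance(principal, dict) else []
        string_equals = stmt.get("Condition", {}).get("StringEquals", {})
        auds = _as_list(string_equals.get("SAML:aud"))
        if (stmt.get("Effect") == "Allow"
                and "sts:AssumeRoleWithSAML" in _as_list(stmt.get("Action"))
                and provider_arn in federated
                and saml_url in auds):
            return True
    return False


if __name__ == "__main__":
    policy = saml_trust_policy("123456789012", "okta-databricks",
                               "https://example.cloud.databricks.com/saml/consume")
    print(json.dumps(policy, indent=2))
```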

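Step 5: Configure the identity provider to pass attributes to Databricks

For Databricks to pass roles to clusters, the SAML response sent to Databricks through SSO must include the following attributes:

- https://aws.amazon.com/SAML/Attributes/Role

- https://aws.amazon.com/SAML/Attributes/RoleSessionName

These attributes carry, respectively, the list of role ARNs and the user name that matches the single sign-on login. Role mappings are updated when the user logs in to the Databricks workspace.

If user entitlement to IAM roles is based on AD/LDAP group membership, you must configure that group-to-role mapping in each IdP.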
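The following Python sketch shows what the two attributes carry for one user and checks a single Role value. It assumes the value format used in the Okta example in this document (a role ARN followed by the SAML provider ARN, separated by a comma); the ordering check reflects the ValidationError cause described under Troubleshooting, and the RoleSessionName check reflects the AWS limit of 2 to 64 characters from a restricted character set. Function names, the login, and the ARNs are hypothetical.

```python
import re

ROLE_ATTR = "https://aws.amazon.com/SAML/Attributes/Role"
SESSION_ATTR = "https://aws.amazon.com/SAML/Attributes/RoleSessionName"

# RoleSessionName: 2-64 characters; letters, digits, and _+=,.@-
SESSION_NAME = re.compile(r"[\w+=,.@-]{2,64}", re.ASCII)


def role_value(role_arn: str, provider_arn: str) -> str:
    """One Role attribute value: role ARN first, then the SAML provider ARN."""
    return f"{role_arn},{provider_arn}"


def role_value_problems(value: str) -> list:
    """Return the problems found in a Role attribute value (empty if none)."""
    parts = [p.strip() for p in value.split(",")]
    if len(parts) != 2:
        return [f"expected 2 comma-separated ARNs, got {len(parts)}"]
    problems = []
    if ":role/" not in parts[0]:
        problems.append("first ARN is not an IAM role ARN")
    if ":saml-provider/" not in parts[1]:
        problems.append("second ARN is not a SAML provider ARN")
    return problems


def saml_attributes(login: str, role_pairs) -> dict:
    """Attribute statements the IdP would send for one user."""
    if not SESSION_NAME.fullmatch(login):
        raise ValueError(f"login cannot be used as RoleSessionName: {login!r}")
    return {
        SESSION_ATTR: [login],
        ROLE_ATTR: [role_value(role, provider) for role, provider in role_pairs],
    }
```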

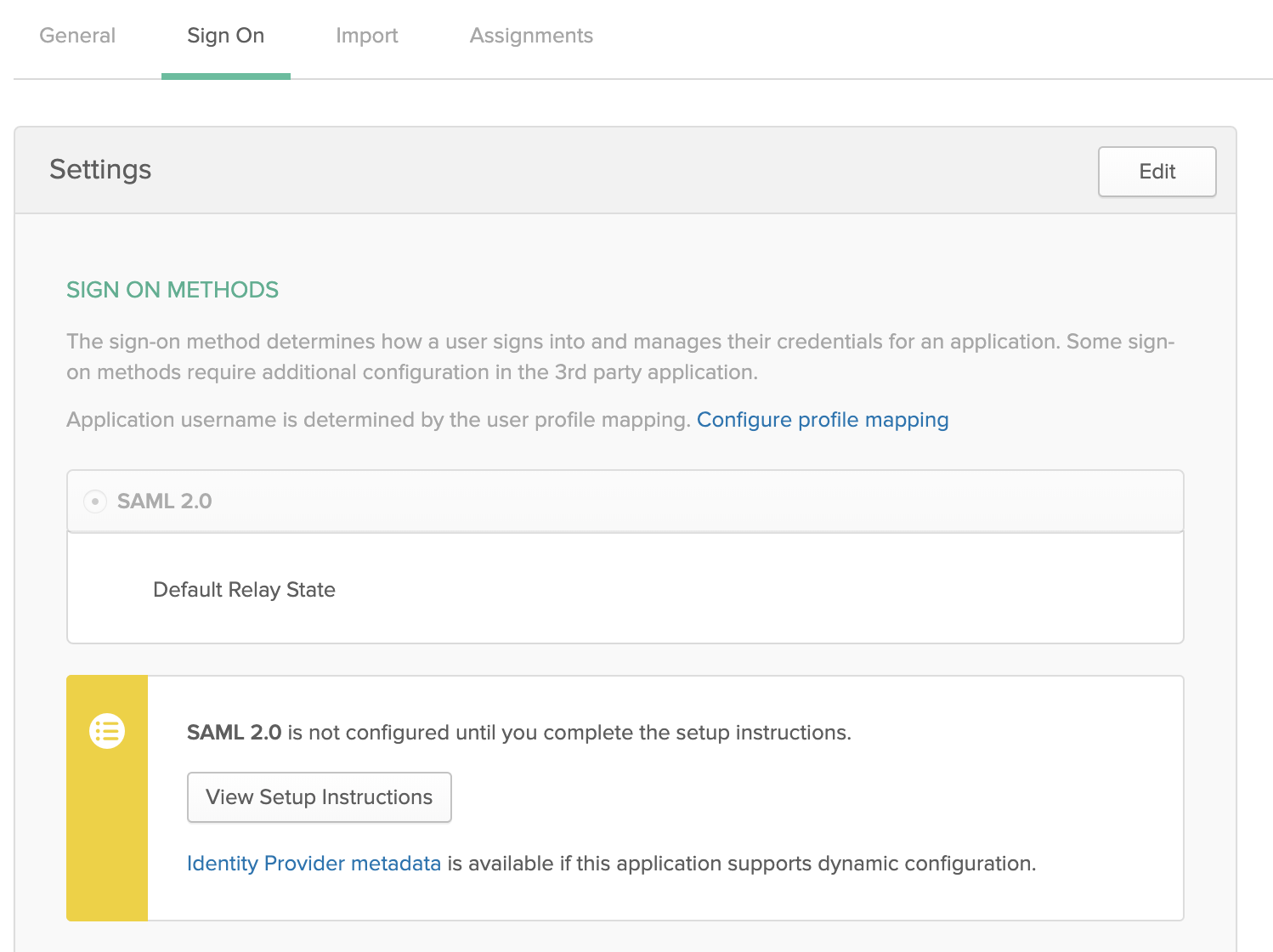

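Each identity provider has its own way of adding attributes to pass through SAML. The following section shows one example using Okta. For examples with your identity provider, see Integrating third-party SAML solution providers with AWS.

Okta example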

-

Okta管理コンソールの[アプリケーション]で、Databricksへのシングルサインオンアプリケーションを選択します。

-

「SAML 設定」の下の 編集 をクリックし、 次へ をクリックして SAML の設定 タブをクリックします。

-

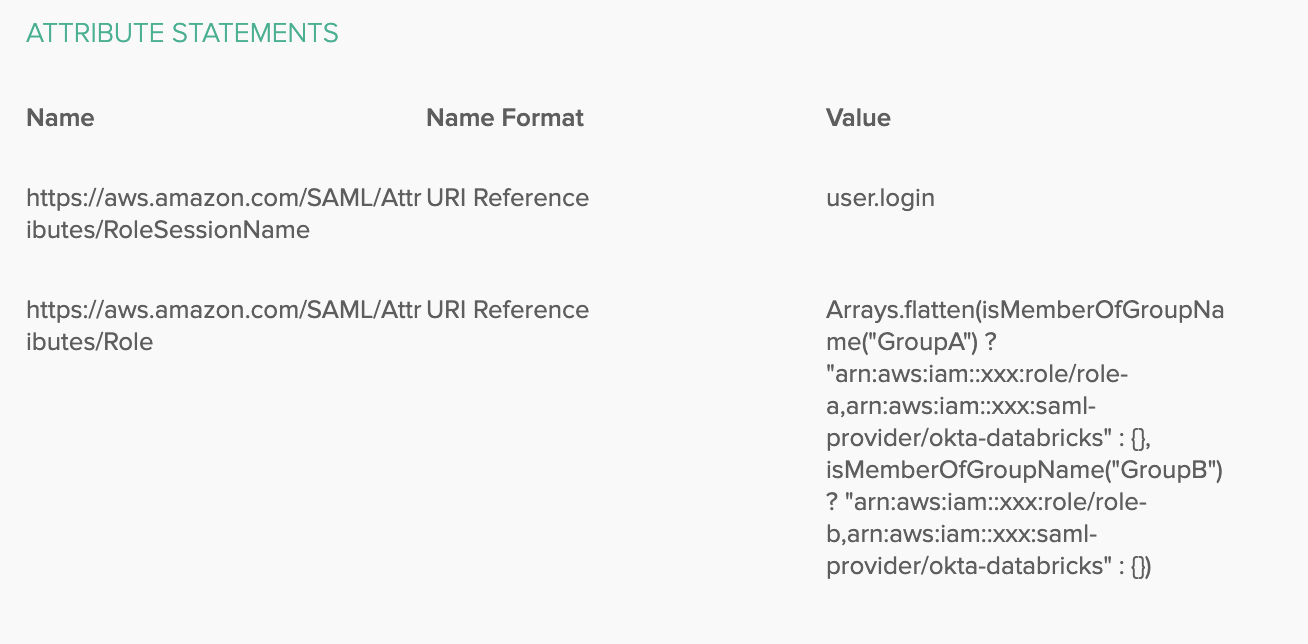

「属性ステートメント」で、次の属性を追加します。

- 名前:

https://aws.amazon.com/SAML/Attributes/RoleSessionName、名前の形式: URI 参照、値:user.login

- 名前:

-

グループを使用してロールを簡単に管理するには、 IAMロールに対応するグループ(

GroupAやGroupBなど)を作成し、そのグループにユーザーを追加します。 -

Okta Expressionsを使用して、次の方法でグループとロールを一致させることができます。

-

名前:

https://aws.amazon.com/SAML/Attributes/Role、名前の形式:URI Reference、値:Arrays.flatten(isMemberOfGroupName("GroupA") ? "arn:aws:iam::xxx:role/role-a,arn:aws:iam::xxx:saml-provider/okta-databricks" : {}, isMemberOfGroupName("GroupB") ? "arn:aws:iam::xxx:role/role-b,arn:aws:iam::xxx:saml-provider/okta-databricks" : {})次のようになります。

特定のグループ内のユーザーのみが、対応する IAMロールを使用する権限を持ちます。

-

-

ユーザーの管理 を使用して、ユーザーをグループに追加します。

-

[アプリの管理 ] を使用して、グループを SSO アプリケーションに割り当て、ユーザーが Databricksにログインできるようにします。
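To preview which Role values a user will receive before changing the Okta expression, you can emulate its logic. A minimal Python sketch, assuming one IAM role per group and a single SAML provider, as in the example expression; it is not Okta's expression engine, and the function name, account ID (shown as xxx in the expression), and group-to-role mapping are hypothetical.

```python
# Hypothetical provider ARN and group-to-role mapping (xxx replaced by an account ID).
EXAMPLE_PROVIDER = "arn:aws:iam::123456789012:saml-provider/okta-databricks"
EXAMPLE_GROUP_ROLES = {
    "GroupA": "arn:aws:iam::123456789012:role/role-a",
    "GroupB": "arn:aws:iam::123456789012:role/role-b",
}


def okta_role_values(user_groups, group_roles, provider_arn):
    """Emulate Arrays.flatten(isMemberOfGroupName(g) ? "<role>,<provider>" : {}, ...).

    A user gets one "<role ARN>,<provider ARN>" value for each mapped group they
    belong to, in mapping order; groups they are not in contribute nothing.
    """
    return [
        f"{role_arn},{provider_arn}"
        for group, role_arn in group_roles.items()
        if group in user_groups
    ]


if __name__ == "__main__":
    print(okta_role_values({"GroupB"}, EXAMPLE_GROUP_ROLES, EXAMPLE_PROVIDER))
```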

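To add more roles, follow the steps above to map additional Okta groups to federated roles. To use roles in different AWS accounts, add the SSO application as a new IAM identity provider in each additional AWS account that has federated roles for Databricks.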

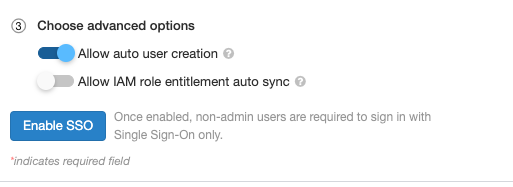

Step 6: Optionally configure Databricks to synchronize role mappings from SAML to SCIM

Perform this step if you want to use IAM credential passthrough for jobs or JDBC. Otherwise, you must set IAM role mappings using the SCIM API.

- Go to the settings page.

- Click the Authentication tab.

- Select Allow IAM role entitlement auto sync.

Recommendations

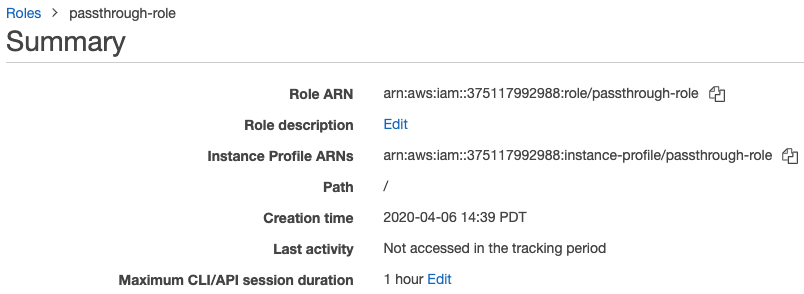

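For the best experience, we recommend setting the maximum session duration of the IAM role to between 4 and 8 hours. This spares users from repeatedly re-authenticating to obtain new tokens, and keeps long queries from failing because of expired tokens. To set the duration:

- In the AWS console, click the IAM role that you set up in Step 4: Configure the IAM role for federation.

- In the Maximum CLI/API session duration property, click Edit.

- Select the duration and click Save changes.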
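The console setting corresponds to the role's MaxSessionDuration, expressed in seconds; IAM accepts values from 1 hour (3600) to 12 hours (43200). The following Python sketch converts a duration in hours to seconds, warns when it falls outside the 4 to 8 hour recommendation, and prints the equivalent aws iam update-role command (its --max-session-duration option sets the same property) instead of running it. The function names and role name are hypothetical.

```python
HOUR = 3600
IAM_MIN_SESSION = 1 * HOUR    # smallest MaxSessionDuration IAM accepts
IAM_MAX_SESSION = 12 * HOUR   # largest MaxSessionDuration IAM accepts


def max_session_seconds(hours: int) -> int:
    """Convert a duration in hours to the seconds value IAM expects."""
    seconds = hours * HOUR
    if not IAM_MIN_SESSION <= seconds <= IAM_MAX_SESSION:
        raise ValueError("IAM accepts a maximum session duration of 1 to 12 hours")
    if not 4 <= hours <= 8:
        # Still valid for IAM, but outside the recommendation above.
        print(f"warning: {hours}h is outside the recommended 4-8 hours")
    return seconds


def update_role_command(role_name: str, hours: int) -> list:
    """AWS CLI equivalent of editing the property in the console."""
    return ["aws", "iam", "update-role",
            "--role-name", role_name,
            "--max-session-duration", str(max_session_seconds(hours))]


if __name__ == "__main__":
    # "databricks-federated-role" is a hypothetical role name.
    print(" ".join(update_role_command("databricks-federated-role", 8)))
```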

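Use IAM credential passthrough with federation

Follow the instructions in Launch an IAM credential passthrough cluster, and do not add an instance profile. To use IAM passthrough with federation for jobs or JDBC connections, follow the instructions in Set up a meta instance profile.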

Security

It is safe to share High Concurrency IAM credential passthrough clusters with other users. Users are isolated from each other and cannot read each other's credentials.

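Troubleshooting

Misconfiguration is a common source of errors when setting up credential passthrough. To help identify the source of these errors, the X-Databricks-PassThrough-Error header is returned with the sign-on response headers. The possible values are:

- ValidationError: The role configuration in the identity provider fails to satisfy the constraints specified by the AWS service. A common cause is that the role name and the identity provider name are in the wrong order.

- InvalidIdentityToken: The identity provider that was submitted is invalid. A common cause is that the identity provider's metadata is not set correctly in the AWS IAM service.

- AccessDenied: Validation of the role failed. A common cause is that the identity provider has not been added to the Trust relationships of the role in the AWS IAM service.

- Incorrectly formatted role name attribute: The role configuration in the identity provider is in the wrong format.

For instructions on accessing response headers, see your web browser's documentation.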
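If you copy the response headers out of your browser's developer tools, a small lookup can turn the header into the likely cause listed above. A Python sketch, assuming the headers are available as a name-to-value mapping; the function name is hypothetical, and the exact header value for the role-name-format error is not matched, so unrecognized values fall through to a generic hint.

```python
from typing import Mapping, Optional

HEADER = "X-Databricks-PassThrough-Error"

# Common causes, as listed above.
LIKELY_CAUSES = {
    "ValidationError": "Role configuration violates AWS constraints; "
                       "check the order of the role name and the IdP name.",
    "InvalidIdentityToken": "The IdP metadata is not set correctly in AWS IAM.",
    "AccessDenied": "The IdP is missing from the role's trust relationship.",
}


def diagnose(headers: Mapping[str, str]) -> Optional[str]:
    """Map the passthrough error header, if present, to a likely cause."""
    for name, value in headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == HEADER.lower():
            value = value.strip()
            return LIKELY_CAUSES.get(
                value,
                f"Unrecognized value {value!r}; check the format of the "
                "role attribute configured in the IdP.")
    return None
```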

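Known limitations

The following features are not supported with IAM federation:

- %fs (use the equivalent dbutils.fs command instead).

- The following methods on the SparkContext (sc) and SparkSession (spark) objects:

  - Deprecated methods.

  - Methods such as addFile() and addJar() that would allow non-admin users to call Scala code.

  - Any method that accesses a filesystem other than S3.

  - Old Hadoop APIs (hadoopFile() and hadoopRDD()).

  - Streaming APIs, because the passed-through credentials would expire while the stream is still running.

- DBFS mounts (/dbfs) are available only in Databricks Runtime 7.3 LTS and above. Mount points configured with credential passthrough are not supported through this path.

- Cluster-wide libraries that require the cluster instance profile's permissions to download. Only libraries with DBFS paths are supported.

- Databricks Connect on High Concurrency clusters is available only in Databricks Runtime 7.3 LTS and above.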