Monitoring and observability for Lakeflow Jobs

This article describes the features available in the Databricks UI to view jobs you have access to, view a history of runs for jobs, and view details of job runs. To configure notifications for jobs, see Add notifications on a job.

To learn about using the Databricks CLI to view jobs and run jobs, run the CLI commands databricks jobs list -h, databricks jobs get -h, and databricks jobs run-now -h. To learn about using the Jobs API, see the Jobs API.
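As a rough illustration of calling the Jobs API directly, the following sketch builds (but does not send) a request that lists recent runs for one job. The API version in the path, the workspace URL, the token, and the job ID are all placeholders or assumptions; check the Jobs API reference for the version and parameters your workspace supports.

```python
import urllib.parse
import urllib.request


def build_runs_list_request(
    host: str, token: str, job_id: int, limit: int = 5
) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists recent runs of one job."""
    query = urllib.parse.urlencode({"job_id": job_id, "limit": limit})
    # Assumption: the 2.1 version of the runs/list endpoint is available.
    url = f"{host.rstrip('/')}/api/2.1/jobs/runs/list?{query}"
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}, method="GET"
    )


# Placeholder workspace URL, token, and job ID; replace them before sending.
request = build_runs_list_request(
    "https://example.cloud.databricks.com", "<personal-access-token>", job_id=123
)
print(request.full_url)
```

Passing the request to urllib.request.urlopen would send it; the response body is JSON.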

If you have access to the system.lakeflow schema, you can also view and query records of job runs and tasks from across your account. See Jobs system table reference. You can also join the jobs system tables with billing tables to monitor the cost of jobs across your account. See Monitor job costs & performance with system tables.
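For example, a query for recent failed runs might look like the following sketch. The table name (job_run_timeline), the column names, and the FAILED state value are assumptions based on the jobs system tables; confirm them against the Jobs system table reference before relying on the query.

```python
def recent_failed_runs_query(days: int = 7, limit: int = 100) -> str:
    """Return SQL that lists recent failed job runs from the jobs system tables."""
    if days <= 0 or limit <= 0:
        raise ValueError("days and limit must be positive")
    # Assumed table and column names; verify them in the system table reference.
    return (
        "SELECT workspace_id, job_id, run_id, period_start_time, result_state\n"
        "FROM system.lakeflow.job_run_timeline\n"
        f"WHERE period_start_time >= current_timestamp() - INTERVAL {int(days)} DAYS\n"
        "  AND result_state = 'FAILED'\n"
        "ORDER BY period_start_time DESC\n"
        f"LIMIT {int(limit)}"
    )


print(recent_failed_runs_query(days=3, limit=10))
```

In a Databricks notebook, the resulting string could be passed to spark.sql to run the query.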

View jobs and pipelines

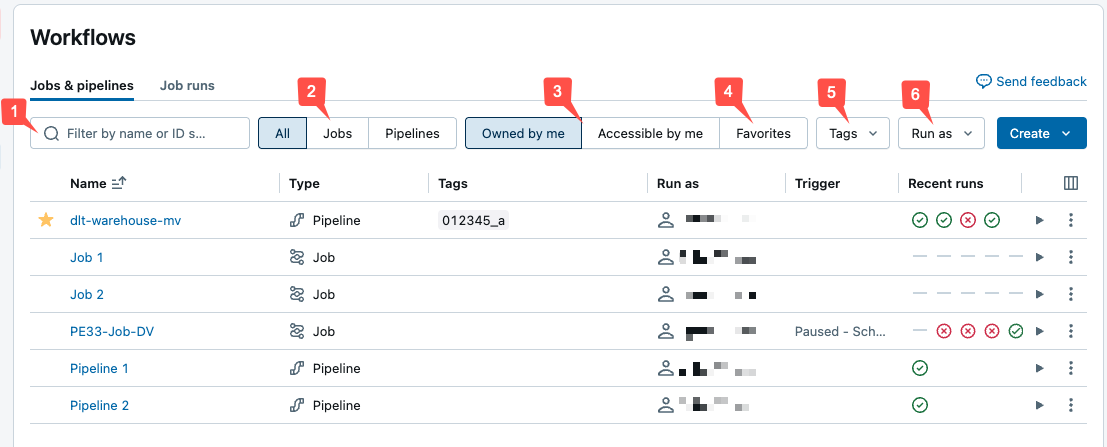

To view the list of jobs you have access to, click Jobs & Pipelines in the sidebar. The Jobs & pipelines tab in the Lakeflow Jobs UI lists information about all available jobs and pipelines, such as the creator, the trigger (if any), and the result of the last five runs.

To change the columns displayed in the list, click the column selector icon, then select or deselect columns.

The unified Jobs & pipelines list is in Public Preview. You can disable the feature and return to the default experience by disabling Jobs and pipelines: Unified management, search, & filtering. See Manage Databricks previews for more information.

You can filter jobs in the Jobs & pipelines list as shown in the following screenshot.

- Text search: Keyword search is supported for the Name and Job ID fields. To search for a tag created with a key and value, you can search by the key, the value, or both the key and value. For example, for a tag with the key department and the value finance, you can search for department or finance to find matching jobs. To search by the key and value, enter the key and value separated by a colon (for example, department:finance).

- Type: Select only jobs, pipelines, or all.

- Owner: select only the jobs or pipelines you own.

- Favorites: select all jobs or pipelines you have marked as favorites.

- Tags: Filter by tags. To search by tag, you can use the tags drop-down menu to filter for up to five tags at the same time, or use the keyword search directly.

- Run as: Filter by up to two run as values.
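The tag search rules in the text search filter can be modeled with a small function. This is only a sketch of the described behavior, not how the UI is implemented: it assumes exact, case-insensitive matching, and the function name is hypothetical.

```python
def matches_tag_search(tags: dict[str, str], query: str) -> bool:
    """Return True if a keyword query matches any tag by key, value, or key:value."""
    query = query.strip().lower()
    if not query:
        return False
    if ":" in query:
        # "key:value" form: both parts must match the same tag.
        key, _, value = query.partition(":")
        return any(k.lower() == key and v.lower() == value for k, v in tags.items())
    # Single keyword: match either the key or the value of any tag.
    return any(query in (k.lower(), v.lower()) for k, v in tags.items())


job_tags = {"department": "finance"}
print(matches_tag_search(job_tags, "department"))          # True
print(matches_tag_search(job_tags, "department:finance"))  # True
print(matches_tag_search(job_tags, "department:sales"))    # False
```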

To start a job or pipeline, click the play button. To stop a workflow, click the stop button. To access other actions, click the kebab menu. For example, you can delete the workflow or access settings for a pipeline from this menu.

View runs for a single job

You can view a list of currently running and recently completed runs for a job that you have access to, including runs started by external orchestration tools such as Apache Airflow or Azure Data Factory. To view the list of recent job runs:

- In your Databricks workspace's sidebar, click Jobs & Pipelines.

- Optionally, select the Jobs and Owned by me filters.

- Click your job's Name link.

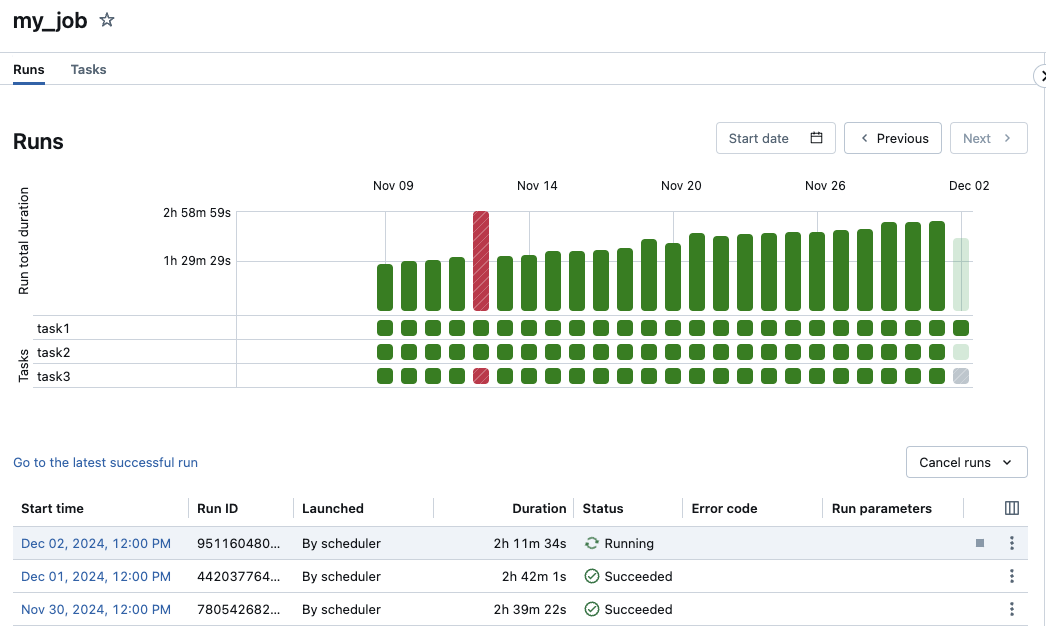

The Runs tab appears with matrix and list views of active and completed runs.

The matrix view shows a history of runs for the job, including each job task.

The Run total duration row of the matrix displays the run's total duration and the run's state. To view details of the run, including the start time, duration, and status, hover over the bar in the Run total duration row.

Each cell in the Tasks row represents a task and the corresponding status of the task. To view details of each task, including the start time, duration, cluster, and status, hover over the cell for that task.

The job run and task run bars are color-coded to indicate the status of the run. Successful runs are green, unsuccessful runs are red, skipped runs are pink, and runs waiting for retry are yellow. Pending, canceled, and timed-out runs are grey. The height of each job run and task run bar indicates the run duration.

If you have configured an expected completion time, the matrix view displays a warning when the duration of a run exceeds the configured time.

By default, the runs list view displays the following:

- The start time for the run.

- The run identifier.

- Whether the run was triggered by a job schedule or an API request, or was manually started.

- The time elapsed for a currently running job or the total running time for a completed run. A warning is displayed if the duration exceeds a configured expected completion time.

- The status of the run, either Queued, Pending, Running, Skipped, Succeeded, Failed, Timed Out, Canceling, or Canceled.

- The error code the run terminated with.

- The run parameters.

Currently active runs display a stop button. To stop all active and queued runs, select Cancel runs or Cancel all queued runs from the drop-down menu.

To access context-specific actions for a run, such as stopping an active run or deleting the entry for a completed run, click the kebab menu for the run.

To change the columns displayed in the runs list view, click the column selector icon, then select or deselect columns.

To view details for a job run, click the link for the run in the Start time column in the runs list view. To view details for this job's most recent successful run, click Go to the latest successful run.

Databricks maintains a history of your job runs for up to 60 days. If you need to preserve job runs, Databricks recommends exporting results before they expire. For more information, see Export job run results.
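To decide when to export, you can estimate how long a run remains in the history. The sketch below assumes the 60-day window is counted from the run's start time, which the text above does not specify; treat the result as an approximation.

```python
from datetime import datetime, timedelta, timezone

RETENTION_DAYS = 60  # upper bound on run history stated above


def days_until_expiry(run_start: datetime, now: datetime) -> int:
    """Whole days left before a run ages out of the history (0 if already past)."""
    # Assumption: retention is measured from the run's start time.
    expires_at = run_start + timedelta(days=RETENTION_DAYS)
    return max((expires_at - now).days, 0)


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
today = datetime(2024, 2, 15, tzinfo=timezone.utc)
print(days_until_expiry(start, today))  # 15
```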

View job run details

The job run details page contains job output and links to logs, including information about the success or failure of each task in the job run. You can access job run details from the Runs tab for the job.

To view job run details from the Runs tab, click the link for the run in the Start time column in the runs list view. To return to the Runs tab for the job, click the Job ID value.

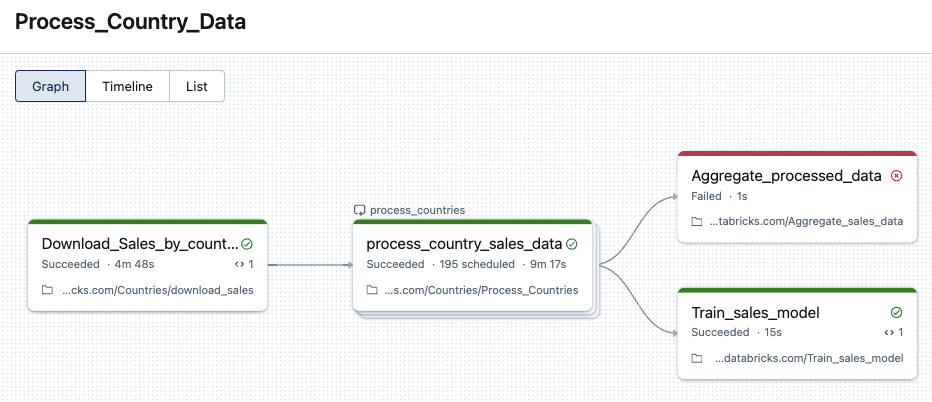

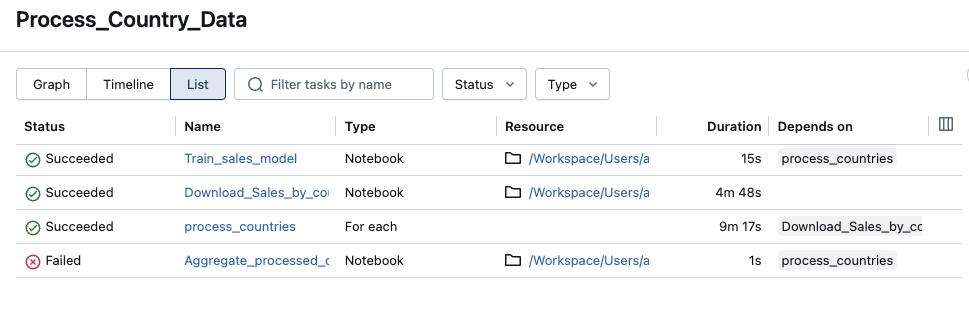

Jobs with multiple tasks additionally have a graph, timeline, and list view.

Graph view

Click a task node in the graph to view task run details, including:

- Task details including run as, how the job was launched, start time, end time, duration and status.

- The source code.

- The cluster that ran the task and links to its query history and logs.

- Metrics for the task.

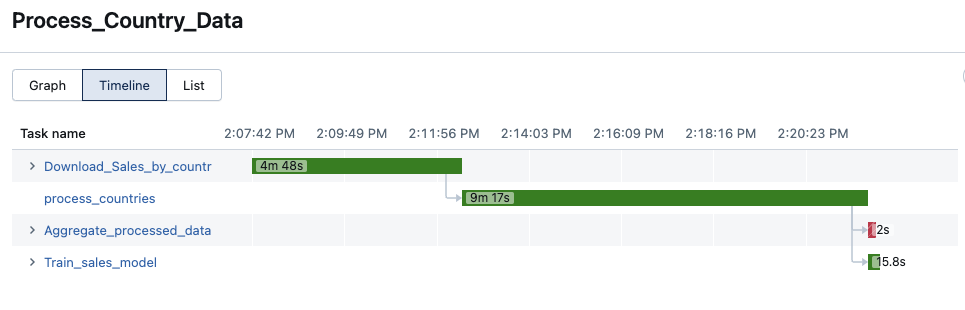

Timeline view

Jobs that contain multiple tasks have a timeline view. Use it to identify tasks that take a long time to complete and to understand task dependencies and overlap, which helps you debug and optimize these jobs.

List view

By default, the list view shows the status, name, type, resource, duration and dependencies. You can add and remove columns in this view.

You can search for a task by name, filter by task status or task type, and sort tasks by status, name or duration.

Click the Job ID value to return to the Runs tab for the job.

How does Databricks determine job run status?

Databricks determines whether a job run was successful based on the outcome of the job's leaf tasks. A leaf task is a task that has no downstream dependencies. A job run can have one of the following outcomes:

- Succeeded: All tasks were successful.

- Succeeded with failures: Some tasks failed, but all leaf tasks were successful.

- Failed: One or more leaf tasks failed.

- Skipped: The job run was skipped (for example, a run might be skipped because you exceeded the maximum concurrent runs for your job or your workspace).

- Timed Out: The job run took too long to complete and timed out.

- Canceled: The job run was canceled (for example, a user manually canceled the ongoing run).
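The leaf-task rule behind the first three outcomes can be sketched as follows. This is an illustrative model only: the function name and the input shapes are hypothetical, and the Skipped, Timed Out, and Canceled outcomes are not modeled.

```python
def job_run_outcome(depends_on: dict[str, list[str]], succeeded: dict[str, bool]) -> str:
    """Classify a finished run from its task results, following the leaf-task rule."""
    # A task has downstream dependencies if any other task lists it as a dependency.
    has_downstream = {dep for deps in depends_on.values() for dep in deps}
    leaf_tasks = [task for task in depends_on if task not in has_downstream]

    if not all(succeeded[task] for task in leaf_tasks):
        return "Failed"
    if all(succeeded.values()):
        return "Succeeded"
    return "Succeeded with failures"


# Hypothetical three-task job: load -> enrich -> publish.
graph = {"load": [], "enrich": ["load"], "publish": ["enrich"]}

# "publish" is the only leaf task. Here it ran and succeeded even though
# "enrich" failed (for example, because it was configured to run regardless).
print(job_run_outcome(graph, {"load": True, "enrich": False, "publish": True}))
# Succeeded with failures
```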

View metrics for streaming tasks

Streaming observability for Lakeflow Jobs is in Public Preview.

When you view job run details, you can get data on streaming workloads with streaming observability metrics in the Jobs UI. These metrics include backlog seconds, backlog bytes, backlog records, and backlog files for sources supported by Spark Structured Streaming including Apache Kafka, Amazon Kinesis, Auto Loader, Google Pub/Sub, and Delta tables. Metrics are displayed as charts in the right-hand pane when you view the run details for a task. The metrics shown in each chart are maximum values aggregated by minute and can include up to the previous 48 hours.
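To make "maximum values aggregated by minute" concrete, the following sketch reduces raw samples to one value per minute. It illustrates the aggregation only; the sample data is invented and this is not how the Jobs UI computes its charts internally.

```python
from collections import defaultdict
from datetime import datetime


def max_per_minute(samples: list[tuple[datetime, float]]) -> dict[datetime, float]:
    """Group metric samples by minute and keep the maximum value in each minute."""
    buckets: dict[datetime, float] = defaultdict(lambda: float("-inf"))
    for timestamp, value in samples:
        # Truncate the timestamp to the start of its minute.
        minute = timestamp.replace(second=0, microsecond=0)
        buckets[minute] = max(buckets[minute], value)
    return dict(sorted(buckets.items()))


# Invented backlog-records samples for a single stream.
samples = [
    (datetime(2024, 5, 1, 12, 0, 5), 10.0),
    (datetime(2024, 5, 1, 12, 0, 40), 25.0),
    (datetime(2024, 5, 1, 12, 1, 10), 7.0),
]
for minute, value in max_per_minute(samples).items():
    print(minute.isoformat(), value)
```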

Each streaming source supports only specific metrics. Metrics not supported by a streaming source are not available to view in the UI. The following table shows the metrics available for supported streaming sources:

| source | backlog bytes | backlog records | backlog seconds | backlog files |
|---|---|---|---|---|
| Kafka | ✓ | ✓ | | |
| Kinesis | ✓ | ✓ | | |
| Delta | ✓ | ✓ | | |
| Auto Loader | ✓ | ✓ | | |
| Google Pub/Sub | ✓ | ✓ | | |

You can also specify thresholds for each streaming metric and configure notifications if a stream exceeds a threshold during a task run. See Configure notifications for slow jobs.

To view streaming metrics for a task run that streams data from one of the supported Structured Streaming sources:

- On the Job run details page, click the task for which you want to view metrics.

- Click the Metrics tab in the Task run pane.

- To open the graph for a metric, click the icon next to the metric name.

- To view the metrics for a specific stream, enter the stream ID in the Filter by stream_id text box. You can find the stream ID in the output for the job run.

- To change the time period for the metric graphs, use the time drop-down menu.

- To scroll through the streams if the run contains more than ten streams, click Next or Previous.

Streaming observability limitations

- Metrics are updated every minute unless a run has more than four streams. If a run has more than four streams, the metrics are updated every five minutes.

- Metrics are only collected for the first fifty streams in each run.

- Metrics are collected at one-second intervals. The metrics might not be visible if your triggerInterval setting is less than one second.

- Most data sources collect streaming metrics by default. However, for others, you must enable this feature. If your data source isn't collecting streaming metrics, set the spark.sql.streaming.metricsEnabled flag to True.
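The stream-count limits above can be summarized in a small helper. This is a sketch of the stated rules only; the function name and the returned keys are hypothetical.

```python
def streaming_metrics_plan(stream_count: int) -> dict[str, int]:
    """Summarize how the documented limits apply to a run with stream_count streams."""
    if stream_count < 0:
        raise ValueError("stream_count must not be negative")
    return {
        # Updated every minute, or every five minutes with more than four streams.
        "refresh_minutes": 1 if stream_count <= 4 else 5,
        # Metrics are collected only for the first fifty streams in a run.
        "streams_with_metrics": min(stream_count, 50),
    }


print(streaming_metrics_plan(3))   # {'refresh_minutes': 1, 'streams_with_metrics': 3}
print(streaming_metrics_plan(80))  # {'refresh_minutes': 5, 'streams_with_metrics': 50}
```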

View task run history

To view the run history of a task, including successful and unsuccessful runs:

- Click on a task on the Job run details page. The Task run details page appears.

- Select the task run in the run history drop-down menu.

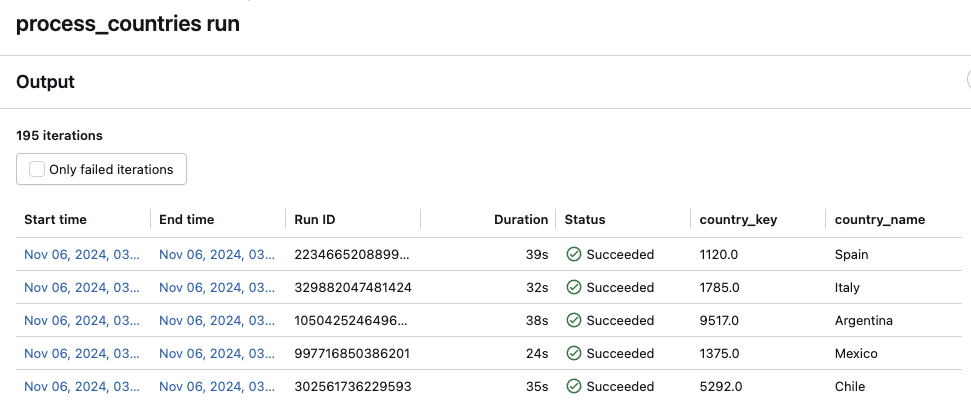

View task run history for a For each task

You access the run history of a For each task the same way as for a standard Lakeflow Jobs task. You can click the For each task node on the Job run details page or the corresponding cell in the matrix view. However, unlike a standard task, the run details for a For each task are presented as a table of the nested task's iterations.

To view only failed iterations, click Only failed iterations.

To view the output of an iteration, click the Start time or End time values of the iteration.

View recent job runs across all jobs

You can view a list of currently running and recently completed runs for all jobs in a workspace that you have access to, including runs started by external orchestration tools such as Apache Airflow or Azure Data Factory. To view the list of recent job runs:

- Click Jobs & Pipelines in the sidebar.

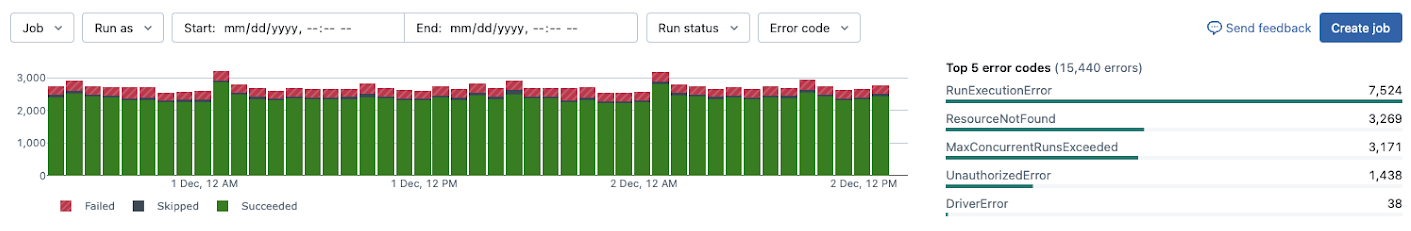

- Click the Job runs tab to display the Finished runs count graph and the Job runs list.

If you have the Unified Runs List preview enabled, then the tab is called Runs instead, and shows a list of both job and pipeline runs. You can filter to view only jobs by clicking Jobs.

For more information about the unified runs list and using it with pipelines, see Unified runs list.

Finished runs count graph

The Finished runs count graph displays the number of job runs completed in the last 48 hours. By default, the graph displays the failed, skipped, and successful job runs. You can also filter the graph to show specific run statuses or restrict the graph to a specific time range.

The Finished runs count graph is only displayed when you click Owned by me.

You can filter the Finished runs count by run status:

- To update the graph to show jobs currently running or waiting to run, click Active runs.

- To update the graph to show only completed runs, including failed, successful, and skipped runs, click Completed runs.

- To update the graph to show only runs that completed successfully over the last 48 hours, click Successful runs.

- To update the graph to show only skipped runs, click Skipped runs. Runs are skipped when you exceed the maximum number of concurrent runs in your workspace or when the job exceeds the maximum number of concurrent runs specified by the job configuration.

- To update the graph to show only runs that completed in an error state, click Failed runs.

When you click any of the filter buttons, the list of runs in the runs table also updates to show only job runs that match the selected status.

To limit the time range displayed in the Finished runs count graph, click and drag your cursor in the graph to select the time range. The graph and the runs table update to display runs from only the selected time range.

The Top 5 error types table displays a list of the most frequent error types from the selected time range, allowing you to quickly see the most common causes of job issues in your workspace.

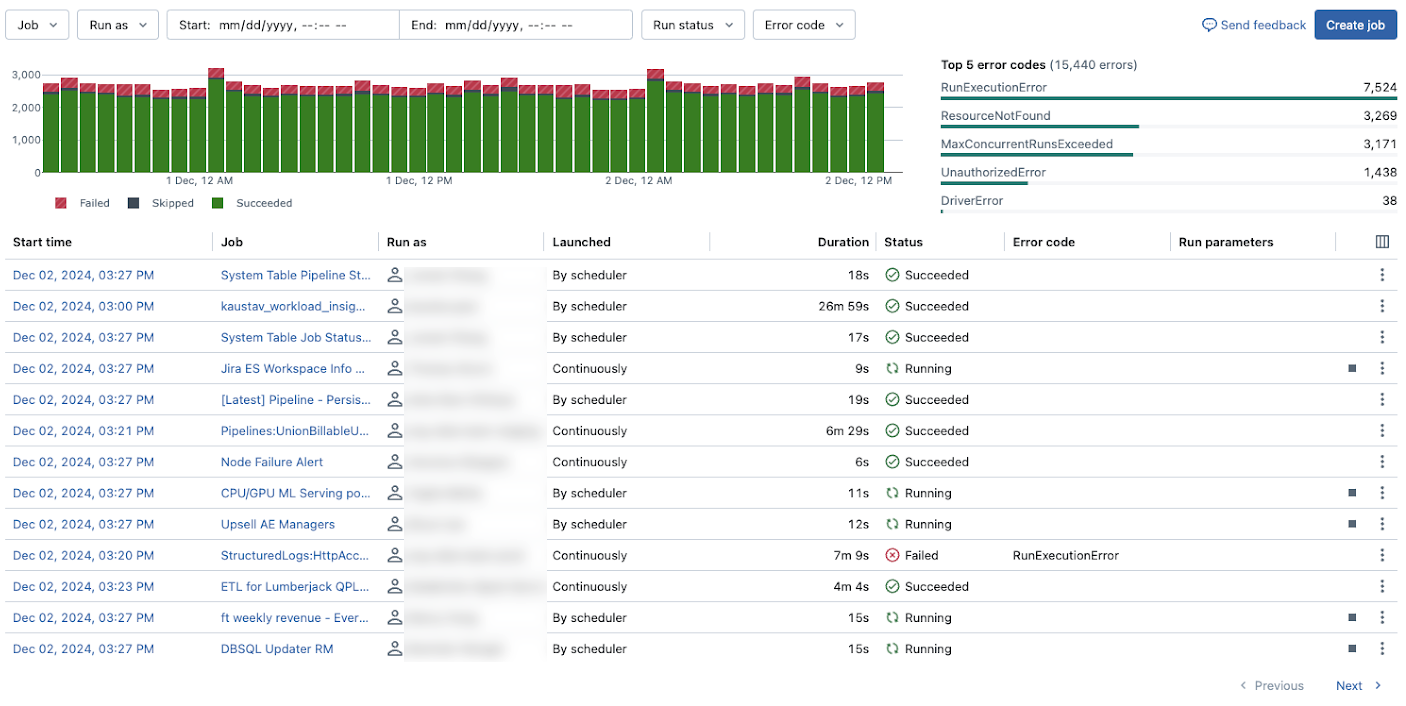
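If you retrieve run records yourself (for example, through the Jobs API or system tables), a similar "top error types" summary can be computed with a counter. The record shape and the error code strings below are illustrative assumptions, not the exact values the UI uses.

```python
from collections import Counter


def top_error_types(runs: list[dict], n: int = 5) -> list[tuple[str, int]]:
    """Count error codes across runs and return the n most frequent."""
    # Runs without an error code (for example, successful runs) are ignored.
    counts = Counter(run["error_code"] for run in runs if run.get("error_code"))
    return counts.most_common(n)


# Illustrative records; the error code strings are placeholders.
runs = [
    {"run_id": 1, "error_code": "RUN_EXECUTION_ERROR"},
    {"run_id": 2, "error_code": "CLUSTER_ERROR"},
    {"run_id": 3, "error_code": "RUN_EXECUTION_ERROR"},
    {"run_id": 4, "error_code": None},
    {"run_id": 5, "error_code": "CLUSTER_ERROR"},
    {"run_id": 6, "error_code": "RUN_EXECUTION_ERROR"},
]
print(top_error_types(runs))
# [('RUN_EXECUTION_ERROR', 3), ('CLUSTER_ERROR', 2)]
```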

Job runs list

The Job runs tab also includes a table of job runs from the last 60 days. By default, the table includes details on failed, skipped, and successful job runs.

You can filter the list of runs shown in the list based on the following:

- Job: Select up to 3 jobs and see only runs for these jobs.

- Run as: Select up to 3 run as identities and see only runs that were run as these users.

- Time window: Select a start and end date and time to narrow the list to job runs that happened in this interval.

- Run status: Filter to only see active (currently running), completed (finished running including successful and unsuccessful runs), succeeded, failed, and skipped runs.

- Error code: Filter the list based on error code to see all jobs that have failed with the same error code.

By default, the list of runs in the runs table displays the following:

- The start time for the run.

- The name of the job associated with the run.

- The user name that the job runs as.

- Whether the run was triggered by a job schedule or an API request, or was manually started.

- The time elapsed for a currently running job or the total running time for a completed run. A warning is displayed if the duration exceeds a configured expected completion time.

- The status of the run: Queued, Pending, Running, Skipped, Succeeded, Failed, Timed Out, Canceling, or Canceled.

- Any error code that the run terminated with.

- Any parameters for the run.

To stop a running job, click the stop button. To access actions for the run, click the kebab menu (for example, to stop an active run or delete a completed run).

To change the columns displayed in the runs list, click the column selector icon, then select or deselect columns.

To view job run details, click the link in the Start time column for the run. To view job details, click the job name in the Job column.

View lineage information for a job

If Unity Catalog is enabled in your workspace, you can view lineage information for any Unity Catalog tables in your workflow. If lineage information is available for your workflow, you will see a link with a count of upstream and downstream tables in the Job details panel for your job, the Job run details panel for a job run, or the Task run details panel for a task run. Click the link to show the list of tables. Click a table to see detailed information in Catalog Explorer.

View and run a job created with Databricks Asset Bundles

You can use the Lakeflow Jobs UI to view and run jobs deployed by Databricks Asset Bundles. By default, these jobs are read-only in the Jobs UI. To edit a job deployed by a bundle, change the bundle configuration file and redeploy the job. Applying changes only to the bundle configuration ensures that the bundle source files always capture the current job configuration.

However, if you must make immediate changes to a job, you can disconnect the job from the bundle configuration to enable editing the job settings in the UI. To disconnect the job, click Disconnect from source. In the Disconnect from source dialog, click Disconnect to confirm.

Any changes you make to the job in the UI are not applied to the bundle configuration. To apply changes you make in the UI to the bundle, you must manually update the bundle configuration. To reconnect the job to the bundle configuration, redeploy the job using the bundle.

Export job run results

You can export notebook run results and job run logs for all job types.

Export notebook run results

You can persist job runs by exporting their results. For notebook job runs, you can export a rendered notebook that can later be imported into your Databricks workspace.

To export notebook run results for a job with a single task:

- On the job detail page, click the View Details link for the run in the Run column of the Completed Runs (past 60 days) table.

- Click Export to HTML.

To export notebook run results for a job with multiple tasks:

- On the job detail page, click the View Details link for the run in the Run column of the Completed Runs (past 60 days) table.

- Click the notebook task to export.

- Click Export to HTML.

Export job run logs

You can export the logs for your job run. You can set up your job to automatically deliver logs to DBFS or S3 when you configure job compute (see Compute configuration reference) or through the Jobs API. See the new_cluster.cluster_log_conf object in the request body passed to the Create a new job operation (POST /jobs/create) in the Jobs API.
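As a minimal sketch, a request body for POST /jobs/create that enables log delivery to DBFS might be assembled as shown here. The job name, task key, notebook path, and compute settings are placeholders, and placing new_cluster under a task is an assumption about the request layout; confirm the full schema in the Jobs API reference.

```python
import json


def job_with_log_delivery(job_name: str, notebook_path: str, log_destination: str) -> dict:
    """Build a jobs/create request body whose cluster delivers logs to DBFS."""
    return {
        "name": job_name,
        "tasks": [
            {
                "task_key": "main",  # hypothetical task key
                "notebook_task": {"notebook_path": notebook_path},
                "new_cluster": {
                    # Placeholder compute settings; use values valid in your workspace.
                    "spark_version": "<spark-version>",
                    "node_type_id": "<node-type-id>",
                    "num_workers": 1,
                    # Log delivery destination for the cluster that runs this task.
                    "cluster_log_conf": {"dbfs": {"destination": log_destination}},
                },
            }
        ],
    }


body = job_with_log_delivery("nightly-etl", "/Workspace/etl/main", "dbfs:/cluster-logs")
print(json.dumps(body, indent=2))
```

To deliver logs to S3 instead, the cluster_log_conf object would use an s3 entry in place of dbfs; see the API reference for its required fields.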