Share feature tables across workspaces (legacy)

- This documentation has been retired and might not be updated.

- Databricks recommends using Feature Engineering in Unity Catalog to share feature tables across workspaces. The approach in this article is deprecated.

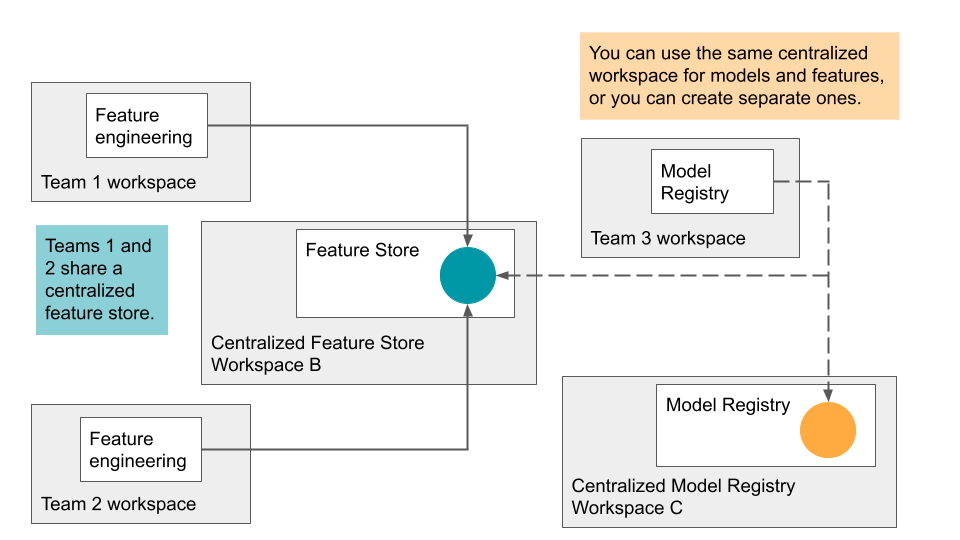

Databricks supports sharing feature tables across multiple workspaces. For example, from your own workspace, you can create, write to, or read from a feature table in a centralized feature store. This is useful when multiple teams share access to feature tables, or when your organization has multiple workspaces to handle different stages of development.

For a centralized feature store, Databricks recommends that you designate a single workspace to store all feature store metadata, and create an account for each user who needs access to the feature store.

If your teams are also sharing models across workspaces, you can choose to dedicate the same centralized workspace to both feature tables and models, or you can specify a different centralized workspace for each.

Access to the centralized feature store is controlled by tokens. Each user or script that needs access creates a personal access token in the centralized feature store and copies that token into the secret manager of their local workspace. Each API request sent to the centralized feature store workspace must include the access token. The Feature Store client provides a simple mechanism to specify the secrets to use when performing cross-workspace operations.

As a security best practice when you authenticate with automated tools, systems, scripts, and apps, Databricks recommends that you use OAuth tokens.

If you use personal access token authentication, Databricks recommends using personal access tokens that belong to service principals instead of workspace users. To create tokens for service principals, see Manage tokens for a service principal.
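The following sketch shows roughly what an authenticated request to the centralized workspace looks like. It assumes the standard Databricks REST convention of sending a personal access token as a bearer token. The host, token value, and API path are hypothetical placeholders. The request is only built, not sent. When you pass a `databricks://` URI, the Feature Store client attaches the token for you.

```python
from urllib.request import Request


def build_remote_request(host: str, token: str, path: str) -> Request:
    """Build (but do not send) a request addressed to a remote workspace.

    host, token, and path are hypothetical placeholders; in practice the
    token would be read from the local workspace's secret manager.
    """
    # Databricks REST APIs accept personal access tokens as bearer tokens.
    return Request(
        url=host.rstrip("/") + path,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


req = build_remote_request(
    "https://workspace-b.example.com/",  # hypothetical Workspace B host
    "dapi-EXAMPLE-TOKEN",                # hypothetical token value
    "/api/2.0/example",                  # hypothetical API path
)
print(req.full_url)
print(req.get_header("Authorization"))
```

Keeping the token out of notebook source and in the secret manager is the point of the secret-based setup. The header is the only place the token value needs to appear.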

Requirements

Using a feature store across workspaces requires:

- Feature Store client v0.3.6 and above.

- Both workspaces must have access to the raw feature data. They must share the same external Hive metastore and have access to the same DBFS storage.

- If IP access lists are enabled, workspace IP addresses must be on the access lists.
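If a job could run on clusters with different client versions, it could check the first requirement before attempting cross-workspace calls. The following is a minimal sketch. The helper names `parse_version` and `supports_cross_workspace` are hypothetical. The sketch compares only the leading numeric components of a dotted version string.

```python
def parse_version(version: str) -> tuple:
    """Turn a dotted version string such as '0.3.6' into a comparable tuple."""
    # Keep only the leading numeric components, e.g. '0.3.6.dev1' -> (0, 3, 6).
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def supports_cross_workspace(version: str, minimum: str = "0.3.6") -> bool:
    """Return True if the client version meets the documented minimum."""
    # Tuple comparison orders (0, 10, 0) after (0, 3, 6), unlike string comparison.
    return parse_version(version) >= parse_version(minimum)


for v in ("0.3.5", "0.3.6", "0.10.0"):
    print(v, supports_cross_workspace(v))
```

On a cluster, you might obtain the installed version with `importlib.metadata.version("databricks-feature-store")`. This assumes that the client is installed under that package name.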

Set up the API token for a remote registry

In this section, "Workspace B" refers to the centralized or remote feature store workspace.

- In Workspace B, create an access token.

- In your local workspace, create secrets to store the access token and information about Workspace B:

  - Create a secret scope: `databricks secrets create-scope --scope <scope>`.

  - Pick a unique identifier for Workspace B, shown here as `<prefix>`. Then create three secrets with the specified key names:

    - `databricks secrets put --scope <scope> --key <prefix>-host`: Enter the hostname of Workspace B. Use the following Python commands to get the hostname of a workspace:

      ```python
      import mlflow

      # Run this in a notebook in the workspace whose hostname you need.
      host_url = mlflow.utils.databricks_utils.get_webapp_url()
      host_url
      ```

    - `databricks secrets put --scope <scope> --key <prefix>-token`: Enter the access token from Workspace B.

    - `databricks secrets put --scope <scope> --key <prefix>-workspace-id`: Enter the workspace ID for Workspace B, which can be found in the URL of any page.

You may want to share the secret scope with other users, because there is a limit on the number of secret scopes per workspace.
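Before you use the remote workspace, you can confirm that all three secrets are readable from the local workspace. The following is a minimal sketch under stated assumptions. `load_workspace_b_secrets` is a hypothetical helper. The secret reader is passed in as a function so the example runs outside Databricks. The scope, prefix, and values in the stand-in store are invented.

```python
from typing import Callable, Dict


def load_workspace_b_secrets(
    get_secret: Callable[[str, str], str], scope: str, prefix: str
) -> Dict[str, str]:
    """Read the three '<prefix>-...' secrets created for a remote workspace.

    get_secret(scope, key) is injected so the sketch runs anywhere; on
    Databricks it could wrap dbutils.secrets.get(scope=..., key=...).
    """
    suffixes = ("host", "token", "workspace-id")
    return {s: get_secret(scope, f"{prefix}-{s}") for s in suffixes}


# Local stand-in for the secret manager, with hypothetical values.
fake_store = {
    ("my-scope", "wsb-host"): "https://workspace-b.example.com",
    ("my-scope", "wsb-token"): "dapi-EXAMPLE-TOKEN",
    ("my-scope", "wsb-workspace-id"): "1234567890",
}
secrets = load_workspace_b_secrets(
    lambda sc, k: fake_store[(sc, k)], "my-scope", "wsb"
)
print(sorted(secrets))
```

In a notebook, you might pass `lambda s, k: dbutils.secrets.get(scope=s, key=k)`. A missing or misnamed key then fails here, before any cross-workspace call is attempted.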

Specify a remote feature store

Based on the secret scope and name prefix that you created for the remote feature store workspace, you can construct a feature store URI of the form:

```python
feature_store_uri = f'databricks://<scope>:<prefix>'
```

Then, specify the URI explicitly when you instantiate a `FeatureStoreClient`:

```python
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(feature_store_uri=feature_store_uri)
```
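The URI encodes two things: the secret scope, and the prefix that names the three secrets created earlier. The following illustrative parser makes that mapping explicit. `parse_feature_store_uri` is hypothetical and is not part of the Feature Store client.

```python
def parse_feature_store_uri(uri: str) -> dict:
    """Split 'databricks://<scope>:<prefix>' into its parts.

    Hypothetical helper: it only illustrates how the URI refers to the
    secret scope and to the three '<prefix>-...' secret keys.
    """
    scheme = "databricks://"
    if not uri.startswith(scheme):
        raise ValueError(f"expected a URI starting with {scheme!r}: {uri!r}")
    scope, sep, prefix = uri[len(scheme):].partition(":")
    if not sep or not scope or not prefix:
        raise ValueError(f"expected databricks://<scope>:<prefix>, got {uri!r}")
    return {
        "scope": scope,
        "prefix": prefix,
        # The secret key names the client is expected to look up in that scope.
        "secret_keys": [f"{prefix}-host", f"{prefix}-token", f"{prefix}-workspace-id"],
    }


print(parse_feature_store_uri("databricks://my-scope:wsb"))
```

A URI that is missing the `:<prefix>` part is rejected. That omission is an easy mistake when you build the string by hand.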

Create a database for feature tables in the shared DBFS location

Before you create feature tables in the remote feature store, you must create a database to store them. The database must exist in the shared DBFS location.

For example, to create a database `recommender` in the shared location `/mnt/shared`, use the following command:

```sql
%sql CREATE DATABASE IF NOT EXISTS recommender LOCATION '/mnt/shared'
```

Create a feature table in the remote feature store

The API to create a feature table in a remote feature store depends on the Databricks Runtime version you are using, specifically on the version of the Feature Store client it includes.

- v0.3.6 and above: use the `FeatureStoreClient.create_table` API:

  ```python
  from databricks.feature_store import FeatureStoreClient

  fs = FeatureStoreClient(feature_store_uri=f'databricks://<scope>:<prefix>')

  # customer_features_df is an existing Spark DataFrame holding the features.
  fs.create_table(
      name='recommender.customer_features',
      primary_keys='customer_id',
      schema=customer_features_df.schema,
      description='Customer-keyed features'
  )
  ```

- v0.3.5 and below: use the `FeatureStoreClient.create_feature_table` API:

  ```python
  from databricks.feature_store import FeatureStoreClient

  fs = FeatureStoreClient(feature_store_uri=f'databricks://<scope>:<prefix>')

  # customer_features_df is an existing Spark DataFrame holding the features.
  fs.create_feature_table(
      name='recommender.customer_features',
      keys='customer_id',
      schema=customer_features_df.schema,
      description='Customer-keyed features'
  )
  ```
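The two APIs differ only in the method name and the key argument (`primary_keys` versus `keys`). Code that must run on both client versions could therefore dispatch on the version. The following is a sketch that assumes a plain numeric version string such as '0.3.6'. `create_remote_feature_table` and `RecordingClient` are hypothetical names. The recording stand-in replaces a real `FeatureStoreClient` only so that the example runs anywhere.

```python
def create_remote_feature_table(fs, client_version, name, key, schema, description):
    """Call the create API that matches the Feature Store client version.

    Hypothetical helper: fs is a FeatureStoreClient built with a remote
    feature_store_uri; client_version is a numeric string such as '0.3.6'.
    """
    version = tuple(int(p) for p in client_version.split(".")[:3])
    if version >= (0, 3, 6):
        # v0.3.6 and above: create_table takes primary_keys.
        return fs.create_table(
            name=name, primary_keys=key, schema=schema, description=description
        )
    # v0.3.5 and below: create_feature_table takes keys.
    return fs.create_feature_table(
        name=name, keys=key, schema=schema, description=description
    )


class RecordingClient:
    """Stand-in that records which create method was called (illustration only)."""

    def __init__(self):
        self.calls = []

    def create_table(self, **kwargs):
        self.calls.append(("create_table", kwargs))

    def create_feature_table(self, **kwargs):
        self.calls.append(("create_feature_table", kwargs))


new_fs, old_fs = RecordingClient(), RecordingClient()
for fs, version in ((new_fs, "0.3.6"), (old_fs, "0.3.5")):
    create_remote_feature_table(
        fs, version, "recommender.customer_features", "customer_id",
        None, "Customer-keyed features",  # schema omitted in this sketch
    )
print(new_fs.calls[0][0], old_fs.calls[0][0])
```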

For examples of other Feature Store methods, see Notebook example: Share feature tables across workspaces.

Use a feature table from the remote feature store

You can read a feature table in the remote feature store with the `FeatureStoreClient.read_table` method by first setting the `feature_store_uri`:

```python
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(feature_store_uri=f'databricks://<scope>:<prefix>')

customer_features_df = fs.read_table(
    name='recommender.customer_features',
)
```

Other helper methods for accessing the feature table are also supported:

```python
fs.read_table()
fs.get_feature_table()  # in v0.3.5 and below
fs.get_table()  # in v0.3.6 and above
fs.write_table()
fs.publish_table()
fs.create_training_set()
```
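The list above uses `get_feature_table` up to v0.3.5 and `get_table` from v0.3.6. Shared code that runs against either client could pick whichever getter the client exposes. The following is a sketch under that assumption. `get_remote_table_metadata` and the two stand-in classes are hypothetical.

```python
def get_remote_table_metadata(fs, name):
    """Fetch feature table metadata with whichever getter the client exposes.

    Hypothetical helper: prefers get_table (v0.3.6 and above) and falls back
    to get_feature_table (v0.3.5 and below).
    """
    getter = getattr(fs, "get_table", None) or getattr(fs, "get_feature_table")
    return getter(name)


class NewClient:
    """Stand-in for a v0.3.6+ client (illustration only)."""

    def get_table(self, name):
        return ("get_table", name)


class OldClient:
    """Stand-in for a v0.3.5 or older client (illustration only)."""

    def get_feature_table(self, name):
        return ("get_feature_table", name)


print(get_remote_table_metadata(NewClient(), "recommender.customer_features"))
print(get_remote_table_metadata(OldClient(), "recommender.customer_features"))
```

Feature detection with `getattr` avoids parsing version strings. However, it silently picks `get_table` on any client that exposes that method.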

Use a remote model registry

In addition to specifying a remote feature store URI, you can also specify a remote model registry URI to share models across workspaces.

To specify a remote model registry for model logging or scoring, you can use a model registry URI to instantiate a `FeatureStoreClient`.

```python
import mlflow
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(model_registry_uri=f'databricks://<scope>:<prefix>')

# model is a trained scikit-learn model and training_set was returned by
# fs.create_training_set(); registered_model_name registers it remotely.
fs.log_model(
    model,
    "recommendation_model",
    flavor=mlflow.sklearn,
    training_set=training_set,
    registered_model_name="recommendation_model"
)
```

Using `feature_store_uri` and `model_registry_uri`, you can train a model using any local or remote feature table, and then register the model in any local or remote model registry.

```python
from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient(
    feature_store_uri=f'databricks://<scope>:<prefix>',
    model_registry_uri=f'databricks://<scope>:<prefix>'
)
```
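The feature store and the model registry do not have to be in the same workspace, and either one can stay local. The following sketch builds the client's keyword arguments from optional (scope, prefix) pairs. It assumes that omitting an argument makes the client use the local workspace. `client_kwargs` and the scope and prefix names are hypothetical.

```python
from typing import Dict, Optional, Tuple


def client_kwargs(
    feature_store: Optional[Tuple[str, str]] = None,
    model_registry: Optional[Tuple[str, str]] = None,
) -> Dict[str, str]:
    """Build FeatureStoreClient keyword arguments from (scope, prefix) pairs.

    Hypothetical helper: None leaves the argument out, on the assumption
    that the client then falls back to the local workspace.
    """
    kwargs = {}
    if feature_store is not None:
        kwargs["feature_store_uri"] = "databricks://{}:{}".format(*feature_store)
    if model_registry is not None:
        kwargs["model_registry_uri"] = "databricks://{}:{}".format(*model_registry)
    return kwargs


# Features from a centralized workspace, models registered in another one
# (both scope/prefix pairs are hypothetical).
kwargs = client_kwargs(
    feature_store=("fs-scope", "fs-ws"), model_registry=("mr-scope", "mr-ws")
)
print(kwargs)
# On Databricks: fs = FeatureStoreClient(**kwargs)
```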

Notebook example: Share feature tables across workspaces

The following notebook shows how to work with a centralized feature store.