March 2019

These features and Databricks platform improvements were released in March 2019.

Releases are staged. Your Databricks account may not be updated until up to a week after the initial release date.

Purge deleted MLflow experiments and runs

March 26 - April 2, 2019: Version 2.94

You can now permanently purge deleted MLflow experiments and runs. See Purge workspace storage.

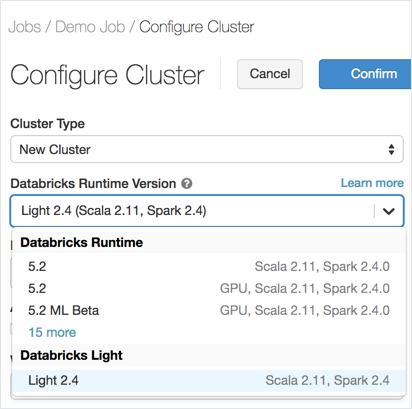

Databricks Light generally available

March 26 - April 2, 2019: Version 2.94

Databricks Light (also known as Data Engineering Light) is now generally available. Databricks Light is the Databricks packaging of the open source Apache Spark runtime. It provides a runtime option for jobs that don't need the advanced performance, reliability, or autoscaling benefits provided by Databricks Runtime. You can select Databricks Light only when you create a cluster to run a JAR, Python, or spark-submit job; you cannot select this runtime for clusters on which you run interactive or notebook job workloads. See Databricks Light.

Your account may require a Databricks account team to enable Databricks Light access for you. If it hasn't been enabled for you by April 2, contact your Databricks account team.

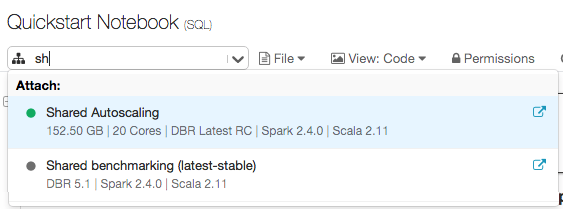

Searchable cluster selector

March 26 - April 2, 2019: Version 2.94

Now when select a cluster to attach to a notebook, you can search for the cluster as you type.

Databricks is rolling this feature out to customers gradually, starting with this release.

Upcoming usage display changes

April 2019

The View Usage tab in the account console will undergo changes during the month of April. The Cluster Usage dashboard will temporarily be unable to display March and April 2019 data, but you will be able to download an expanded set of itemized usage data for those months.

Starting April 5:

- When you click the Download itemized usage button, you will be able to download a monthly usage report with much more detailed information than before, including usage breakdown by cluster and pricing tier at hourly resolution. This more detailed report will be available for March 2019 data and after.

- The usage dashboard will be disabled temporarily for March 2019 data and after. Data for earlier months will still be available for display. All months will be re-enabled during the second quarter of 2019.

- Any Data Engineering Light (Databricks Light) usage will be categorized under Data Engineering (automated) usage until April 5. On that date, Data Engineering Light usage will be reported separately from Data Engineering usage, and the report will show separate usage details retroactive to March 2019.

Billing will not be impacted by these changes for any time period. You will always be charged correctly, according to your contract terms.

Manage groups from the Admin Console

March 12 - March 19, 2019: Version 2.93

Admins can now create groups using the new Groups tab in the Admin Console. Use the Groups tab to add users, add subgroups, grant members the ability to create clusters, add instance profiles to members, and manage parent-child group relationships.

See Groups.

Notebooks automatically have associated MLflow experiment

March 12 - March 19, 2019: Version 2.93

Every notebook in a Databricks Workspace now has an associated MLflow experiment. You record runs in the notebook's experiment using MLflow tracking APIs by referring to its experiment ID or experiment name. If you use the Python API in MLflow 0.9.0 or above, MLflow automatically detects the notebook experiment when you create a run.

To view the MLflow experiment associated with a notebook, click the Experiment icon in the Databricks notebook's right sidebar.

See Create notebook experiment.

Z1d series Amazon EC2 instance types (Beta)

March 12 - March 19, 2019: Version 2.93

Databricks now provides Beta support for the Amazon EC2 Z1d series for high frequency compute loads. Use Z1d instances to turn Delta Lake into a rocket ship!

Two private IP addresses per node

March 12 - March 19, 2019: Version 2.93

Until recently, each worker node in a Databricks cluster could have as many as five assigned private IP addresses. This caused IP address space conflicts for some customers. From now on, all new worker nodes will launch with two private IP addresses.

See Worker node type.

Databricks Delta public community

March 8, 2019

With Delta Lake now GA, we have created a public community for developers to get started, discuss articles, and get help.

These forums are public. Do not use them to share confidential information with Databricks representatives or with other users.