Manage network policies for serverless egress control

To avoid breaking the connection between BDC and SAP Databricks, refer to the SAP documentation before configuring serverless egress control.

This page explains how to configure and manage network policies to control outbound network connections from your serverless workloads in SAP Databricks.

Requirements

- Permissions for managing network policies are restricted to account admins.

Accessing network policies

To create, view, and update network policies in your account:

- From the account console, click Security.

- Click the Networking tab.

- Under Policies, click Context-based ingress & egress control.

Create a network policy

-

Click Create new network policy.

-

Enter a Policy name.

-

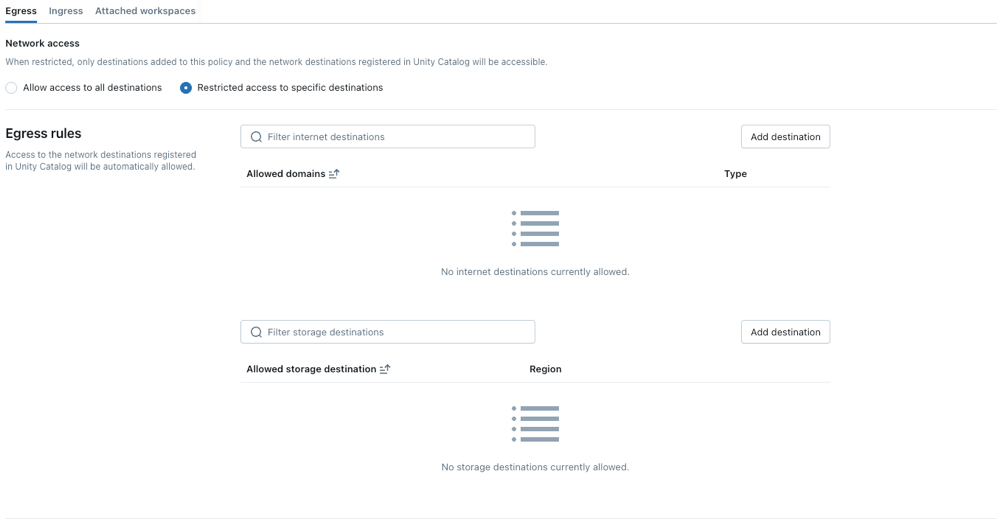

Click the Egress tab.

-

Choose a network access mode:

- Allow access to all destinations: Unrestricted outbound internet access. If you choose Full access, outbound internet access remains unrestricted.

- Restricted access to specific destinations: Outbound access is limited to specified destinations.

Configure network policies

The following steps outline optional settings for restricted access mode.

Set egress rules

Before setting egress rules, note:

- When your metastore and the cloud storage bucket of your Unity Catalog external location are located in different regions, you must explicitly add the bucket to your egress allowlist for access to succeed.

- The maximum number of supported destinations is 2500.

- The number of FQDNs that can be added as allowed domains is limited to 100 per policy.

- Domains added as Private Link entries for a network load balancer are implicitly allowlisted in network policies. When a domain is removed or the private endpoint is deleted, it might take up to 24 hours for network policy controls to fully enforce the change.

- Delta Sharing buckets are implicitly allowlisted in network policies.
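The two numeric limits above can be checked locally before you enter destinations in the account console. The following is a minimal sketch, not an SAP Databricks API: `EgressPolicyDraft` and `check_limits` are hypothetical names, and the sketch assumes that the 2500 limit counts allowed domains and storage destinations together.

```python
from dataclasses import dataclass, field

MAX_DESTINATIONS = 2500  # maximum supported destinations (from the notes above)
MAX_FQDNS = 100          # maximum FQDNs as allowed domains per policy


@dataclass
class EgressPolicyDraft:
    """Hypothetical local model of a restricted-access policy."""
    allowed_domains: list[str] = field(default_factory=list)
    allowed_storage: list[str] = field(default_factory=list)


def check_limits(draft: EgressPolicyDraft) -> list[str]:
    """Return human-readable limit violations (empty list if none)."""
    problems = []
    if len(draft.allowed_domains) > MAX_FQDNS:
        problems.append(
            f"{len(draft.allowed_domains)} FQDNs exceed the limit of {MAX_FQDNS}"
        )
    # Assumption: domains and storage destinations share the overall limit.
    total = len(draft.allowed_domains) + len(draft.allowed_storage)
    if total > MAX_DESTINATIONS:
        problems.append(
            f"{total} destinations exceed the limit of {MAX_DESTINATIONS}"
        )
    return problems
```

An empty result means the draft stays within both limits under these assumptions.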

-

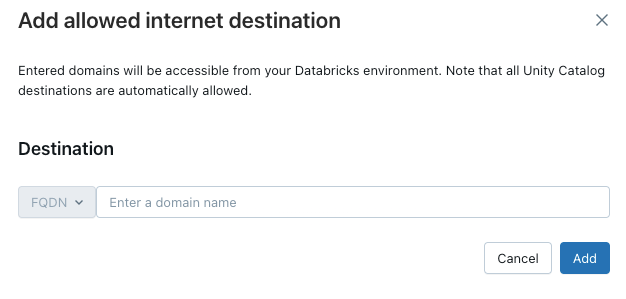

To grant your serverless compute access to additional domains, click Add destination above the Allowed domains list.

The FQDN filter allows access to all domains that share the same IP address. Model serving provisioned throughout endpoints prevents internet access when network access is set to restricted. However, granular control with FQDN filtering is not supported.

-

To allow your workspace to access additional cloud storage containers, click the Add destination button above the Allowed storage destinations list.

Direct access to cloud storage services from user code containers, such as REPLs or UDFs, is not permitted by default. To enable this access, add the storage resource's FQDN under Allowed Domains in your policy. Adding only the storage resource's base domain could inadvertently grant access to all storage resources in the region.
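The note that the FQDN filter allows access to all domains sharing an IP address can be illustrated with a small simulation. This sketch assumes, for illustration only, that an allowed FQDN effectively allows the IP address it resolves to. The DNS table, domain names, and `reachable_domains` function are all made up and do not describe the actual enforcement mechanism.

```python
# Hypothetical DNS table; names and addresses are illustrative only.
DNS = {
    "api.example.com": "203.0.113.10",
    "other-tenant.example.net": "203.0.113.10",  # same IP as api.example.com
    "unrelated.example.org": "198.51.100.7",
}


def reachable_domains(allowed_fqdns, dns=DNS):
    """Return every domain whose IP matches the IP of an allowed FQDN."""
    allowed_ips = {dns[name] for name in allowed_fqdns if name in dns}
    return sorted(name for name, ip in dns.items() if ip in allowed_ips)


print(reachable_domains(["api.example.com"]))
```

In this simulation, allowing only `api.example.com` also makes `other-tenant.example.net` reachable because both resolve to the same address.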

Policy enforcement

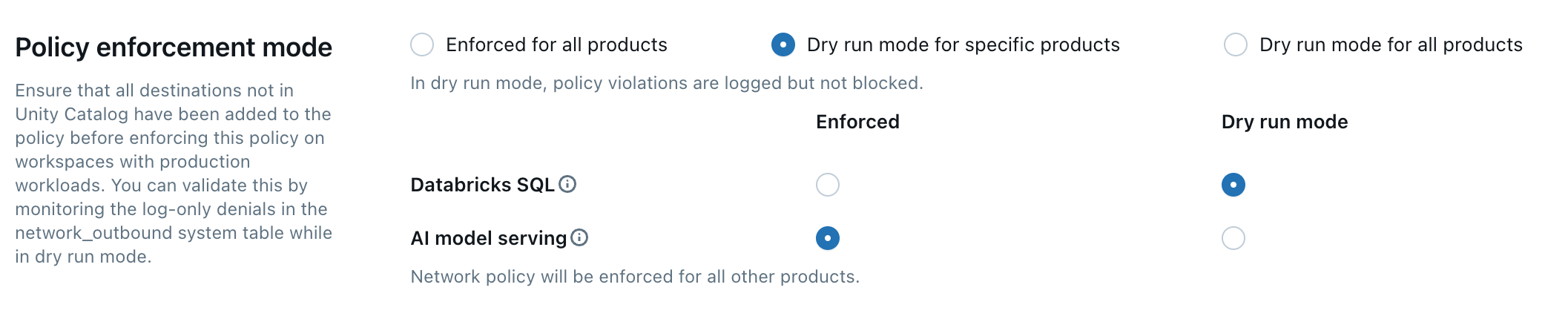

Dry-run mode allows you to test your policy configuration and monitor outbound connections without disrupting access to resources. When dry-run mode is enabled, requests that violate the policy are logged but not blocked. You can select from the following options:

- Databricks SQL: Databricks SQL warehouses operate in dry-run mode.

- AI model serving: Model serving endpoints operate in dry-run mode.

- All products: All SAP Databricks services operate in dry-run mode, overriding all other selections.

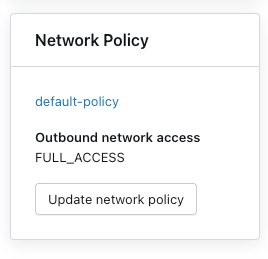
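The dry-run behavior described above can be summarized as a small decision function. This is a sketch of the documented behavior, not the service's implementation. The `Product` enum, the `decide` function, and the returned labels are hypothetical names chosen for illustration.

```python
from enum import Enum


class Product(Enum):
    DBSQL = "Databricks SQL"
    MODEL_SERVING = "AI model serving"
    OTHER = "Other serverless product"


def decide(product, destination_allowed, dry_run_products, dry_run_all=False):
    """Return 'allow', 'log_only', or 'block' for one outbound request."""
    if destination_allowed:
        return "allow"
    # "All products" overrides the per-product selections.
    if dry_run_all or product in dry_run_products:
        return "log_only"  # the violation is logged but not blocked
    return "block"
```

For example, with dry-run enabled only for Databricks SQL, a violating request from a model serving endpoint is still blocked.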

Update the default policy

Each SAP Databricks account includes a default policy. The default policy is associated with all workspaces with no explicit network policy assignment, including newly created workspaces. You can modify this policy, but it cannot be deleted.

Associate a network policy to workspaces

If you have updated your default policy with additional configurations, they are automatically applied to workspaces that do not have an existing network policy.

To associate your workspace with a different policy, do the following:

- Select a workspace.

- In Network Policy, click Update network policy.

- Select the desired network policy from the list.

- Click Apply policy.
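The relationship between explicit assignments and the default policy can be expressed as a simple lookup. This is an illustrative sketch only: `DEFAULT_POLICY`, `effective_policy`, and the workspace and policy names are hypothetical.

```python
DEFAULT_POLICY = "default-policy"  # hypothetical name for the account default


def effective_policy(workspace_id, assignments):
    """Return the policy that applies to a workspace.

    `assignments` maps workspace IDs to explicitly assigned policy names.
    Workspaces without an entry fall back to the default policy.
    """
    return assignments.get(workspace_id, DEFAULT_POLICY)


assignments = {"ws-prod": "restricted-prod"}
print(effective_policy("ws-prod", assignments))
print(effective_policy("ws-new", assignments))
```

Because unassigned workspaces always resolve to the default policy, any edit to that policy reaches them without a per-workspace change.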

Apply network policy changes

Most network configuration updates automatically propagate to your serverless compute within ten minutes. This includes:

- Adding a new Unity Catalog external location or connection.

- Attaching your workspace to a different metastore.

- Changing the allowed storage or internet destinations.

You must restart your compute if you modify the internet access or dry-run mode setting.
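The rule above can be sketched as a comparison of two policy states. The `PolicySnapshot` class, its field names, and the access mode labels are assumptions made for this example and do not correspond to a documented API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicySnapshot:
    """Hypothetical summary of the settings discussed above."""
    access_mode: str                 # for example "full" or "restricted"
    dry_run_products: frozenset      # products running in dry-run mode
    allowed_destinations: frozenset  # domains and storage destinations


def requires_restart(old: PolicySnapshot, new: PolicySnapshot) -> bool:
    """True if the change touches the access mode or the dry-run setting."""
    return (
        old.access_mode != new.access_mode
        or old.dry_run_products != new.dry_run_products
    )
```

Changes that only add or remove allowed destinations return `False`, which matches the statement that they propagate automatically.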

Restart or redeploy serverless workloads

You only need to restart or redeploy when switching the internet access mode or updating dry-run mode.

To determine the appropriate restart procedure, refer to the following list by product:

- Databricks ML Serving: Redeploy your ML serving endpoint.

- Serverless SQL warehouse: Stop and restart the SQL warehouse.

- Jobs: Network policy changes are automatically applied when a new job run is triggered or an existing job run is restarted.

- Notebooks:

  - If your notebook does not interact with Spark, you can terminate then reattach serverless compute to refresh the network policy.

  - If your notebook interacts with Spark, your serverless resource refreshes and automatically detects the change. Most changes will be refreshed in ten minutes, but switching internet access modes, updating dry-run mode, or changing between attached policies that have different enforcement types can take up to 24 hours. To expedite a refresh on these specific types of changes, turn off all associated notebooks and jobs.

Databricks Asset Bundles UI dependencies

When you use restricted access mode with serverless egress control, Databricks Asset Bundles UI features require access to specific external domains. If outbound access is completely restricted, users might see errors in the workspace interface when working with Databricks Asset Bundles.

To keep Databricks Asset Bundles UI features working with restricted network policies, add these domains to the Allowed domains in your policy:

- github.com

- objects.githubusercontent.com

- release-assets.githubusercontent.com

- checkpoint-api.hashicorp.com

- releases.hashicorp.com

- registry.terraform.io
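To confirm that a policy's allowed domains cover the list above, you could compare them locally. This is a minimal sketch: `missing_dab_domains` is a hypothetical helper, and it assumes you have copied the policy's allowed domains into a Python list by hand.

```python
REQUIRED_DAB_DOMAINS = (
    "github.com",
    "objects.githubusercontent.com",
    "release-assets.githubusercontent.com",
    "checkpoint-api.hashicorp.com",
    "releases.hashicorp.com",
    "registry.terraform.io",
)


def missing_dab_domains(allowed_domains):
    """Return required domains absent from the policy, in the order listed above."""
    allowed = {d.strip().lower() for d in allowed_domains}
    return [d for d in REQUIRED_DAB_DOMAINS if d not in allowed]


print(missing_dab_domains(["github.com", "registry.terraform.io"]))
```

An empty list means every domain required by the Databricks Asset Bundles UI is present.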

Verify network policy enforcement

You can validate that your network policy is correctly enforced by attempting to access restricted resources from different serverless workloads.

- Run a test query in the SQL editor or a notebook that attempts to access a resource controlled by your network policy.

- Verify the results:

  - Trusted destination: The query succeeds.

  - Untrusted destination: The query fails with a network access error.
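For a Python notebook, the test described above might look like the following sketch. It uses only the standard library. The `probe` function and the example URL are hypothetical, and the exact error you see when a destination is blocked depends on your environment.

```python
import urllib.error
import urllib.request


def probe(url, timeout=10.0, opener=urllib.request.urlopen):
    """Try to reach `url` and describe the outcome as a short string."""
    try:
        with opener(url, timeout=timeout) as resp:
            return f"reachable (HTTP {resp.status})"
    except urllib.error.HTTPError as err:
        # The server answered, so the network path itself is open.
        return f"reachable (HTTP {err.code})"
    except OSError as err:
        # Covers URLError, timeouts, and name-resolution failures.
        return f"blocked or unreachable: {err}"


# Example call from a notebook cell (replace with a destination you want to test):
# print(probe("https://example.com"))
```

Run it once against a destination on your allowlist and once against one that is not, then compare the two results.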

Validate with model serving

The following sections describe how to validate your network policy using model serving.

Before you begin

When a model serving endpoint is created, a container image is built to serve your model. Network policies are enforced during this build stage. When using model serving with network policies, consider the following:

- Dependency access: Any external build dependencies required by your model's environment, such as Python packages from PyPI and conda-forge, base container images, or files from external URLs specified in your model's environment or Docker context, must be permitted by your network policy.

  - For example, if your model requires a specific version of scikit-learn that needs to be downloaded during the build, the network policy must allow access to the repository hosting the package.

- Build failures: If your network policy blocks access to necessary dependencies, the model serving container build fails. This prevents the serving endpoint from deploying successfully and can cause it to fail to store or function correctly.

- Troubleshooting denials: Network access denials during the build phase are logged. These logs feature a `network_source_type` field with the value `ML Build`. This information is crucial for identifying the specific blocked resources that must be added to your network policy to allow the build to complete successfully.
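Once you have exported denial log entries, filtering for build-time denials could look like the sketch below. The `network_source_type` field and its `ML Build` value come from the description above. The record shape as plain dictionaries, the `destination` key, and the `build_denials` function are assumptions for illustration.

```python
def build_denials(records):
    """Return the distinct destinations denied during model serving builds."""
    seen = []
    for rec in records:
        if rec.get("network_source_type") == "ML Build":
            dest = rec.get("destination")  # hypothetical field name
            if dest and dest not in seen:
                seen.append(dest)
    return seen


# Hypothetical sample records, not real log output.
sample = [
    {"network_source_type": "ML Build", "destination": "pypi.org"},
    {"network_source_type": "Notebook", "destination": "example.com"},
    {"network_source_type": "ML Build", "destination": "pypi.org"},
]
print(build_denials(sample))
```

The resulting list is a starting point for deciding which destinations to add to the policy.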

Validate runtime network access

The following steps demonstrate how to validate network policy for a deployed model at runtime, specifically for attempts to access external resources during inference. This assumes the model serving container has been built successfully, meaning any build-time dependencies were allowed in the network policy.

- Create a test model

  - In a Python notebook, create a model that attempts to access a public internet resource at inference time, like downloading a file or making an API request.

  - Run this notebook to generate a model in the test workspace. For example:

```python
import mlflow
import mlflow.pyfunc
import requests


class DummyModel(mlflow.pyfunc.PythonModel):
    def load_context(self, context):
        # This method is called when the model is loaded by the serving environment.
        # No network access here in this example, but could be a place for it.
        pass

    def predict(self, context, model_input):
        # This method is called at inference time.
        first_row = model_input.iloc[0]
        try:
            # Attempt network access during prediction. The timeout makes a
            # blocked connection surface as an error instead of hanging.
            response = requests.get(first_row['host'], timeout=10)
        except requests.exceptions.RequestException as e:
            # Return the error details as text
            return f"Error: An error occurred - {e}"
        return [response.status_code]


with mlflow.start_run(run_name='internet-access-model'):
    wrappedModel = DummyModel()

    # When this model is deployed to a serving endpoint,
    # the environment will be built. If this environment
    # itself (e.g., specified conda_env or python_env)
    # requires packages from the internet, the build-time SEG policy applies.
    mlflow.pyfunc.log_model(
        artifact_path="internet_access_ml_model",
        python_model=wrappedModel,
        registered_model_name="internet-http-access"
    )
```

- Create a serving endpoint

  - In the workspace navigation, select AI/ML.

  - Click the Serving tab.

  - Click Create Serving Endpoint.

  - Configure the endpoint with the following settings:

    - Serving Endpoint Name: Provide a descriptive name.

    - Entity Details: Select Model registry model.

    - Model: Choose the model you created in the previous step (`internet-http-access`).

  - Click Confirm. At this stage, the model serving container build process begins. Network policies for `ML Build` will be enforced. If the build fails due to blocked network access for dependencies, the endpoint will not become ready.

  - Wait for the serving endpoint to reach the Ready state. If it fails to become ready, check the denial logs for `network_source_type: ML Build` entries.

- Query the endpoint.

  - Use the Query Endpoint option in the serving endpoint page to send a test request.

```json
{ "dataframe_records": [{ "host": "https://www.google.com" }] }
```

- Verify the result for run-time access:

  - Internet access enabled at runtime: The query succeeds and returns a status code like `200`.

  - Internet access restricted at runtime: The query fails with a network access error, such as the error message from the `try-except` block in the model code, indicating a connection timeout or host resolution failure.
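If you script this check instead of using the Query Endpoint option, building the request body and interpreting the model's output could be sketched as follows. Both helper names are hypothetical. The sketch assumes you have already extracted the value returned by `DummyModel.predict` from the endpoint response, and it treats any HTTP status code as proof that the destination was reachable.

```python
import json


def build_payload(host):
    """Build the request body with a single record, matching the example request."""
    return json.dumps({"dataframe_records": [{"host": host}]})


def interpret_prediction(prediction):
    """Classify the value returned by DummyModel.predict."""
    if isinstance(prediction, str) and prediction.startswith("Error:"):
        # The model caught a requests exception, for example a timeout.
        return "restricted"
    if isinstance(prediction, list) and prediction and isinstance(prediction[0], int):
        return f"reachable (HTTP {prediction[0]})"
    return "unknown"


print(build_payload("https://www.google.com"))
print(interpret_prediction([200]))
```

A single record is enough because the example model reads only the first row of its input.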

Update a network policy

You can update a network policy any time after it is created. To update a network policy:

- On the details page of the network policy in your account console, modify the policy:

- Change the network access mode.

- Enable or disable dry-run mode for specific services.

- Add or remove FQDN or storage destinations.

- Click Update.

- Refer to Apply network policy changes to verify that the updates are applied to existing workloads.

Limitations

- Artifact upload size: When using MLflow's internal Databricks File System with the `dbfs:/databricks/mlflow-tracking/<experiment_id>/<run_id>/artifacts/<artifactPath>` format, artifact uploads are limited to 5GB for `log_artifact`, `log_artifacts`, and `log_model` APIs.

- Model serving: Egress control does not apply when building images for model serving.

- Denial log delivery for short-lived garbage collection (GC) workloads: Denial logs from short-lived GC workloads lasting less than 120 seconds might not be delivered before the node terminates due to logging delays. Although access is still enforced, the corresponding log entry might be missing.

- Network connectivity for Databricks SQL user-defined functions (UDFs): To enable network access for UDFs in Databricks SQL, contact your Databricks account team.
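For the artifact upload size limitation, a local pre-check before logging artifacts might look like this sketch. The `oversized_artifacts` helper is hypothetical. It assumes that 5GB means 5 GiB and that the limit applies to each file individually, neither of which is stated above, so adjust the constant and logic if your environment behaves differently.

```python
import os

# Assumption: "5GB" is read as 5 GiB; use 5 * 10**9 for decimal gigabytes.
MAX_ARTIFACT_BYTES = 5 * 1024**3


def oversized_artifacts(paths, limit=MAX_ARTIFACT_BYTES, getsize=os.path.getsize):
    """Return the paths whose file size exceeds the limit."""
    return [p for p in paths if getsize(p) > limit]
```

The `getsize` parameter exists so the check can be exercised without creating large files.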