Shiny on Databricks

Shiny is an R package, available on CRAN, used to build interactive R applications and dashboards. You can use Shiny inside RStudio Server hosted on Databricks clusters. You can also develop, host, and share Shiny applications directly from a Databricks notebook.

To get started with Shiny, see the Shiny tutorials. You can run these tutorials on Databricks notebooks.

This article describes how to run Shiny applications on Databricks and use Apache Spark inside Shiny applications.

Shiny inside R notebooks

Get started with Shiny inside R notebooks

The Shiny package is included with Databricks Runtime. You can interactively develop and test Shiny applications inside Databricks R notebooks similarly to hosted RStudio.

Follow these steps to get started:

-

Create an R notebook.

-

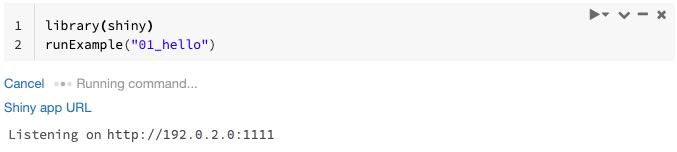

Import the Shiny package and run the example app

01_helloas follows:Rlibrary(shiny)

runExample("01_hello") -

When the app is ready, the output includes the Shiny app URL as a clickable link which opens a new tab. To share this app with other users, see Share Shiny app URL.

- Log messages appear in the command result, similar to the default log message (

Listening on http://0.0.0.0:5150) shown in the example. - To stop the Shiny application, click Cancel.

- The Shiny application uses the notebook R process. If you detach the notebook from the cluster, or if you cancel the cell running the application, the Shiny application terminates. You cannot run other cells while the Shiny application is running.

Run Shiny apps from Databricks Git folders

You can run Shiny apps that are checked into Databricks Git folders.

-

Run the application.

Rlibrary(shiny)

runApp("006-tabsets")

Run Shiny apps from files

If your Shiny application code is part of a project managed by version control, you can run it inside the notebook.

You must use the absolute path or set the working directory with setwd().

-

Check out the code from a repository using code similar to:

%sh git clone https://github.com/rstudio/shiny-examples.git

cloning into 'shiny-examples'... -

To run the application, enter code similar to the following in another cell:

Rlibrary(shiny)

runApp("/databricks/driver/shiny-examples/007-widgets/")

Share Shiny app URL

The Shiny app URL generated when you start an app is shareable with other users. Any Databricks user with CAN ATTACH TO permission on the cluster can view and interact with the app as long as both the app and the cluster are running.

If the cluster that the app is running on terminates, the app is no longer accessible. You can disable automatic termination in the cluster settings.

If you attach and run the notebook hosting the Shiny app on a different cluster, the Shiny URL changes. Also, if you restart the app on the same cluster, Shiny might pick a different random port. To ensure a stable URL, you can set the shiny.port option, or, when restarting the app on the same cluster, you can specify the port argument.

Shiny on hosted RStudio Server

Requirements

With RStudio Server Pro, you must disable proxied authentication.

Make sure auth-proxy=1 is not present inside /etc/rstudio/rserver.conf.

Get started with Shiny on hosted RStudio Server

-

Open RStudio on Databricks.

-

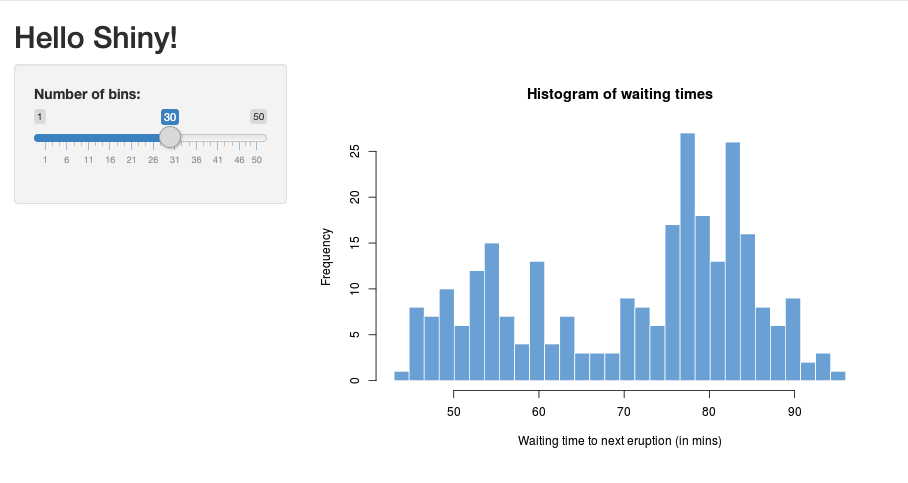

In RStudio, import the Shiny package and run the example app

01_helloas follows:R> library(shiny)

> runExample("01_hello")

Listening on http://127.0.0.1:3203A new window appears, displaying the Shiny application.

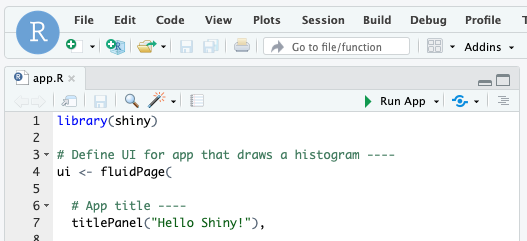

Run a Shiny app from an R script

To run a Shiny app from an R script, open the R script in the RStudio editor and click the Run App button on the top right.

Use Apache Spark inside Shiny apps

You can use Apache Spark inside Shiny applications with either SparkR or sparklyr.

Use SparkR with Shiny in a notebook

library(shiny)

library(SparkR)

sparkR.session()

ui <- fluidPage(

mainPanel(

textOutput("value")

)

)

server <- function(input, output) {

output$value <- renderText({ nrow(createDataFrame(iris)) })

}

shinyApp(ui = ui, server = server)

Use sparklyr with Shiny in a notebook

library(shiny)

library(sparklyr)

sc <- spark_connect(method = "databricks")

ui <- fluidPage(

mainPanel(

textOutput("value")

)

)

server <- function(input, output) {

output$value <- renderText({

df <- sdf_len(sc, 5, repartition = 1) %>%

spark_apply(function(e) sum(e)) %>%

collect()

df$result

})

}

shinyApp(ui = ui, server = server)

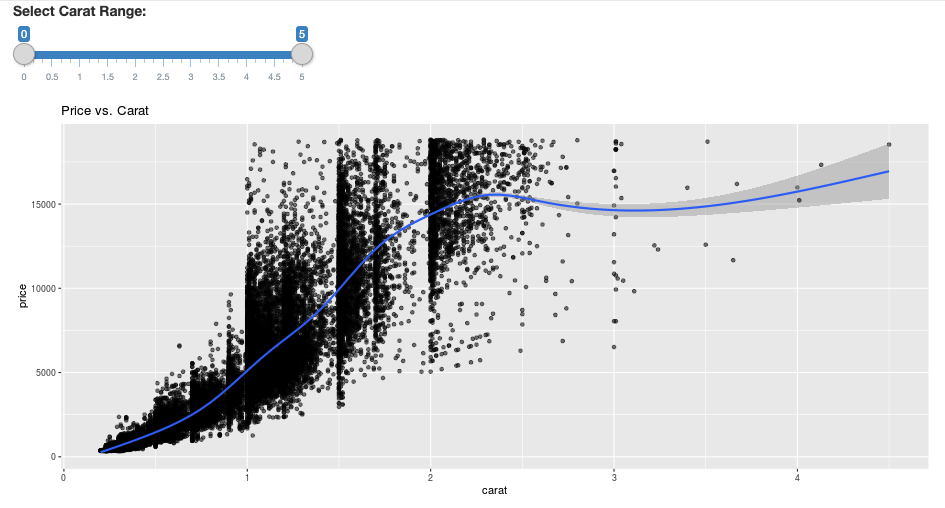

library(dplyr)

library(ggplot2)

library(shiny)

library(sparklyr)

sc <- spark_connect(method = "databricks")

diamonds_tbl <- spark_read_csv(sc, path = "/databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv")

# Define the UI

ui <- fluidPage(

sliderInput("carat", "Select Carat Range:",

min = 0, max = 5, value = c(0, 5), step = 0.01),

plotOutput('plot')

)

# Define the server code

server <- function(input, output) {

output$plot <- renderPlot({

# Select diamonds in carat range

df <- diamonds_tbl %>%

dplyr::select("carat", "price") %>%

dplyr::filter(carat >= !!input$carat[[1]], carat <= !!input$carat[[2]])

# Scatter plot with smoothed means

ggplot(df, aes(carat, price)) +

geom_point(alpha = 1/2) +

geom_smooth() +

scale_size_area(max_size = 2) +

ggtitle("Price vs. Carat")

})

}

# Return a Shiny app object

shinyApp(ui = ui, server = server)

Frequently asked questions (FAQ)

- Why is my Shiny app grayed out after some time?

- Why does my Shiny viewer window disappear after a while?

- Why do long Spark jobs never return?

- How can I avoid the timeout?

- My app crashes immediately after launching, but the code appears to be correct. What's going on?

- How many connections can be accepted for one Shiny app link during development?

- Can I use a different version of the Shiny package than the one installed in Databricks Runtime?

- How can I develop a Shiny application that can be published to a Shiny server and access data on Databricks?

- Can I develop a Shiny application inside a Databricks notebook?

- How can I save the Shiny applications that I developed on hosted RStudio Server?

Why is my Shiny app grayed out after some time?

If there is no interaction with the Shiny app, the connection to the app closes after about 10 minutes.

To reconnect, refresh the Shiny app page. The dashboard state resets.

Why does my Shiny viewer window disappear after a while?

If the Shiny viewer window disappears after idling for several minutes, it is due to the same timeout as the “gray out” scenario.

Why do long Spark jobs never return?

This is also because of the idle timeout. Any Spark job running for longer than the previously mentioned timeouts is not able to render its result because the connection closes before the job returns.

How can I avoid the timeout?

-

There is a workaround suggested in Feature request: Have client send keep alive message to prevent TCP timeout on some load balancers on Github. The workaround sends heartbeats to keep the WebSocket connection alive when the app is idle. However, if the app is blocked by a long running computation, this workaround does not work.

-

Shiny does not support long running tasks. A Shiny blog post recommends using promises and futures to run long tasks asynchronously and keep the app unblocked. Here is an example that uses heartbeats to keep the Shiny app alive, and runs a long running Spark job in a

futureconstruct.R# Write an app that uses spark to access data on Databricks

# First, install the following packages:

install.packages('future')

install.packages('promises')

library(shiny)

library(promises)

library(future)

plan(multisession)

HEARTBEAT_INTERVAL_MILLIS = 1000 # 1 second

# Define the long Spark job here

run_spark <- function(x) {

# Environment setting

library("SparkR", lib.loc = "/databricks/spark/R/lib")

sparkR.session()

irisDF <- createDataFrame(iris)

collect(irisDF)

Sys.sleep(3)

x + 1

}

run_spark_sparklyr <- function(x) {

# Environment setting

library(sparklyr)

library(dplyr)

library("SparkR", lib.loc = "/databricks/spark/R/lib")

sparkR.session()

sc <- spark_connect(method = "databricks")

iris_tbl <- copy_to(sc, iris, overwrite = TRUE)

collect(iris_tbl)

x + 1

}

ui <- fluidPage(

sidebarLayout(

# Display heartbeat

sidebarPanel(textOutput("keep_alive")),

# Display the Input and Output of the Spark job

mainPanel(

numericInput('num', label = 'Input', value = 1),

actionButton('submit', 'Submit'),

textOutput('value')

)

)

)

server <- function(input, output) {

#### Heartbeat ####

# Define reactive variable

cnt <- reactiveVal(0)

# Define time dependent trigger

autoInvalidate <- reactiveTimer(HEARTBEAT_INTERVAL_MILLIS)

# Time dependent change of variable

observeEvent(autoInvalidate(), { cnt(cnt() + 1) })

# Render print

output$keep_alive <- renderPrint(cnt())

#### Spark job ####

result <- reactiveVal() # the result of the spark job

busy <- reactiveVal(0) # whether the spark job is running

# Launch a spark job in a future when actionButton is clicked

observeEvent(input$submit, {

if (busy() != 0) {

showNotification("Already running Spark job...")

return(NULL)

}

showNotification("Launching a new Spark job...")

# input$num must be read outside the future

input_x <- input$num

fut <- future({ run_spark(input_x) }) %...>% result()

# Or: fut <- future({ run_spark_sparklyr(input_x) }) %...>% result()

busy(1)

# Catch exceptions and notify the user

fut <- catch(fut, function(e) {

result(NULL)

cat(e$message)

showNotification(e$message)

})

fut <- finally(fut, function() { busy(0) })

# Return something other than the promise so shiny remains responsive

NULL

})

# When the spark job returns, render the value

output$value <- renderPrint(result())

}

shinyApp(ui = ui, server = server) -

There is a hard limit of 12 hours since the initial page load after which any connection, even if active, will be terminated. You must refresh the Shiny app to reconnect in these cases. However, the underlying WebSocket connection can close at any time by a variety of factors including network instability or computer sleep mode. Databricks recommends rewriting Shiny apps such that they do not require a long-lived connection and do not over-rely on session state.

My app crashes immediately after launching, but the code appears to be correct. What's going on?

There is a 50 MB limit on the total amount of data that can be displayed in a Shiny app on Databricks. If the application's total data size exceeds this limit, it will crash immediately after launching. To avoid this, Databricks recommends reducing the data size, for example by downsampling the displayed data or reducing the resolution of images.

How many connections can be accepted for one Shiny app link during development?

Databricks recommends up to 20.

Can I use a different version of the Shiny package than the one installed in Databricks Runtime?

Yes. See Fix the Version of R Packages.

How can I develop a Shiny application that can be published to a Shiny server and access data on Databricks?

While you can access data naturally using SparkR or sparklyr during development and testing on Databricks, after a Shiny application is published to a stand-alone hosting service, it cannot directly access the data and tables on Databricks.

To enable your application to function outside Databricks, you must rewrite how you access data. There are a few options:

- Use JDBC/ODBC to submit queries to a Databricks cluster.

- Use Databricks Connect.

- Directly access data on object storage.

Databricks recommends that you work with your Databricks solutions team to find the best approach for your existing data and analytics architecture.

Can I develop a Shiny application inside a Databricks notebook?

Yes, you can develop a Shiny application inside a Databricks notebook.

How can I save the Shiny applications that I developed on hosted RStudio Server?

You can either save your application code on DBFS or check your code into version control.