Data access configurations

These instructions apply to legacy data access patterns. Databricks recommends using Unity Catalog external locations for data access. See Connect to cloud object storage using Unity Catalog.

This article describes how to manage instance profiles and data access properties in a workspace. These settings apply to all SQL warehouses in a workspace. Additionally, the configured instance profile can be used by serverless compute resources in the workspace.

Changing these settings restarts all running SQL warehouses.

Configure SQL warehouses and serverless compute to use an instance profile

This setting allows all SQL warehouses and serverless compute in a workspace to access AWS storage through the instance profile.

For information on instance profiles, see Tutorial: Configure S3 access with an instance profile.

To set an instance profile to be used by all SQL warehouses and serverless compute in your workspace:

- Click your username in the top bar of the workspace and select Settings from the drop-down.

- Click the Compute tab.

- Click Manage next to SQL warehouses and serverless compute.

- In the Instance Profile drop-down, select an instance profile.

- Click Save changes.

After you update this setting, all warehouses restart. If a job is using serverless compute, it will run for up to 5 minutes before terminating and then retrying. If you are using serverless compute in a notebook, you will be able to continue running queries for 5 minutes. After 5 minutes, any in-flight queries will appear to continue running briefly without incurring billing, then fail with an error message telling you to start a new session and try again.

- If a user does not have permission to use the instance profile, all SQL warehouses the user creates will fail to start.

- If the instance profile is not valid, all SQL warehouses will become unhealthy.

You can also configure an instance profile using the Databricks Terraform provider and databricks_sql_global_config.

Configure data access properties for SQL warehouses

This setting is only supported on SQL warehouses and does not apply to the workspace's serverless notebooks, jobs, or pipelines.

Data access properties help configure how the warehouse interacts with data sources and authentication layers. This is particularly useful for workloads that use an external metastore, instead of the legacy Hive metastore, allowing you to align data access configuration across systems.

To configure all SQL warehouses with data access properties:

- Click your username in the top bar of the workspace and select Settings from the drop-down.

- Click the Compute tab.

- Click Manage next to SQL warehouses.

- In the Data Access Configuration textbox, specify key-value pairs containing metastore properties.

  Important: To set a Spark configuration property to the value of a secret without exposing the secret value to Spark, set the value to {{secrets/<secret-scope>/<secret-name>}}. Replace <secret-scope> with the secret scope and <secret-name> with the secret name. The value must start with {{secrets/ and end with }}. For more information about this syntax, see Manage secrets.

- Click Save.

You can also configure data access properties using the Databricks Terraform provider and databricks_sql_global_config.
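The secret reference syntax described above can be checked before you paste a value into the Data Access Configuration textbox. The following is a minimal sketch, not part of any Databricks library: `parse_secret_reference` is a hypothetical helper, and it assumes that scope and secret names contain no slashes or braces.

```python
import re

# The value must start with "{{secrets/" and end with "}}".
# Assumption: scope and secret names contain no "/", "{", or "}" characters.
_SECRET_REF = re.compile(r"^\{\{secrets/([^/{}]+)/([^/{}]+)\}\}$")


def parse_secret_reference(value):
    """Return (scope, name) if value is a secret reference, else None.

    Hypothetical helper for illustration only.
    """
    match = _SECRET_REF.match(value.strip())
    if match is None:
        return None
    return match.group(1), match.group(2)


# A well-formed reference yields its scope and name.
print(parse_secret_reference("{{secrets/my-scope/metastore-password}}"))
# A value without the surrounding braces is not treated as a reference.
print(parse_secret_reference("secrets/my-scope/metastore-password"))
```

A check like this only validates the shape of the reference. It does not confirm that the scope or secret exists in your workspace.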

Supported properties

- For an entry that ends with *, all properties within that prefix are supported. For example, spark.sql.hive.metastore.* indicates that both spark.sql.hive.metastore.jars and spark.sql.hive.metastore.version are supported, and any other properties that start with spark.sql.hive.metastore.

- For properties whose values contain sensitive information, you can store the sensitive information in a secret and set the property's value to the secret name using the following syntax: secrets/<secret-scope>/<secret-name>.

The following properties are supported for SQL warehouses:

- spark.databricks.hive.metastore.glueCatalog.enabled

- spark.databricks.delta.catalog.update.enabled false

- spark.sql.hive.metastore.* (spark.sql.hive.metastore.jars and spark.sql.hive.metastore.jars.path are unsupported for serverless SQL warehouses.)

- spark.sql.warehouse.dir

- spark.hadoop.aws.region

- spark.hadoop.datanucleus.*

- spark.hadoop.fs.*

- spark.hadoop.hive.*

- spark.hadoop.javax.jdo.option.*

- spark.hive.*

- spark.hadoop.aws.glue.*

For more information about how to set these properties, see External Hive metastore and AWS Glue data catalog.
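To illustrate the prefix rule for entries that end with *, the sketch below checks property keys against the supported list before they are entered in the Data Access Configuration textbox. The helper names are hypothetical, and the parser assumes one whitespace-separated key-value pair per line, which may not match every format the textbox accepts. It checks keys only and ignores values.

```python
# Supported entries as listed above; a trailing "*" means "any key with this prefix".
SUPPORTED = [
    "spark.databricks.hive.metastore.glueCatalog.enabled",
    "spark.databricks.delta.catalog.update.enabled",
    "spark.sql.hive.metastore.*",
    "spark.sql.warehouse.dir",
    "spark.hadoop.aws.region",
    "spark.hadoop.datanucleus.*",
    "spark.hadoop.fs.*",
    "spark.hadoop.hive.*",
    "spark.hadoop.javax.jdo.option.*",
    "spark.hive.*",
    "spark.hadoop.aws.glue.*",
]


def is_supported(key):
    """Return True if key matches an exact entry or a wildcard prefix."""
    for entry in SUPPORTED:
        if entry.endswith("*"):
            if key.startswith(entry[:-1]):
                return True
        elif key == entry:
            return True
    return False


def unsupported_keys(config_text):
    """Return the keys in config_text that are not in the supported list.

    Assumption: each non-empty line is "<key> <value>" separated by whitespace.
    """
    bad = []
    for line in config_text.splitlines():
        line = line.strip()
        if not line:
            continue
        key = line.split(None, 1)[0]
        if not is_supported(key):
            bad.append(key)
    return bad


example = """
spark.sql.hive.metastore.version 2.3.9
spark.hadoop.aws.region us-west-2
spark.executor.memory 4g
"""
print(unsupported_keys(example))
```

The sample values in `example` are placeholders chosen for illustration. This sketch also does not apply the serverless restriction on spark.sql.hive.metastore.jars and spark.sql.hive.metastore.jars.path.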

Confirm or set up an AWS instance profile to use with your serverless SQL warehouses

If you already use an instance profile with Databricks SQL, the role associated with the instance profile needs a Databricks Serverless compute trust relationship statement so that serverless SQL warehouses can use it.

Depending on how and when your instance profile was created, you might not need to modify the role because it might already have the trust relationship. If the instance profile was created in one of the following ways, it likely has the trust relationship statement:

- After June 24, 2022, your instance profile was created as part of creating a Databricks workspace by using AWS Quickstart.

- After June 24, 2022, someone in your organization followed the steps in the Databricks article to create the instance profile manually.

This section describes how to confirm that the role associated with the instance profile has the trust relationship statement, and how to add the statement if it is missing. The statement enables your serverless SQL warehouses to use the role to access your S3 buckets.

To perform these steps, you must be a Databricks workspace admin to confirm which instance profile your workspace uses for Databricks SQL. You must also be an AWS account administrator to check the role's trust relationship policy or make necessary changes. If you are not both of these admin types, contact the appropriate admins in your organization to complete these steps.

- In the admin settings page, click the Compute tab, then click Manage next to SQL warehouses.

- Look in the Data Security section for the Instance Profile field. Confirm whether your workspace is configured to use an AWS instance profile for Databricks SQL to connect to AWS S3 buckets other than your root bucket.

  - If you use an instance profile, its name is visible in the Instance Profile field. Make a note of it for the next step.

  - If the field value is None, you are not using an instance profile to access S3 buckets other than your workspace's root bucket. Setup is complete.

-

Confirm whether your instance profile name matches the associated role name.

-

In the AWS console, go to the IAM service's Roles tab. It lists all the IAM roles in your account.

-

Click the role with the name that matches the instance profile name in the Databricks SQL admin settings in the Data Security section for the Instance Profile field you found earlier in this section.

-

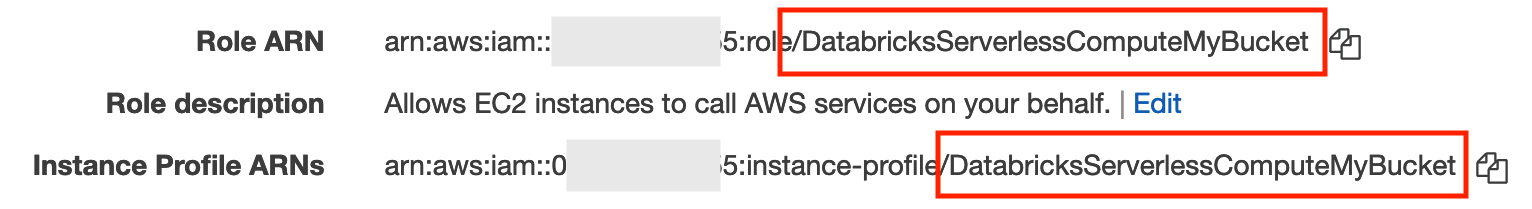

In the summary area, find the Role ARN and Instance Profile ARNs fields.

-

Check if the last part of those two fields have matching names after the final slash. For example:

- If you determined in the previous step that the role name (the text after the last slash in the role ARN) and the instance profile name (the text after the last slash in the instance profile ARN) do not match, edit your instance profile registration to specify your IAM role ARN.

  - To edit your instance profiles, look below the Instance Profile field and click the Configure button.

  - Click your instance profile's name.

  - Click Edit.

  - In the optional Role ARN field, paste the role ARN for the role associated with your instance profile. This is the key step that allows your instance profile to work with Databricks SQL Serverless even if the role name does not match the instance profile name.

  - Click Save.

- In the AWS console, confirm or edit the trust relationship.

  - In the AWS console IAM service's Roles tab, click the instance profile role you want to modify.

  - Click the Trust relationships tab.

  - View the existing trust policy. If the policy already includes the JSON block below, then this step was completed earlier, and you can ignore the following instructions.

  - Click Edit trust policy.

  - In the existing Statement array, append the following JSON block to the end of the existing trust policy. Confirm that you don't overwrite the existing policy.

    ```json
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": ["arn:aws:iam::790110701330:role/serverless-customer-resource-role"]
      },
      "Action": "sts:AssumeRole",
      "Condition": {
        "StringEquals": {
          "sts:ExternalId": [
            "databricks-serverless-<YOUR-WORKSPACE-ID1>",
            "databricks-serverless-<YOUR-WORKSPACE-ID2>"
          ]
        }
      }
    }
    ```

    The only thing you must change in the statement is the workspace ID. Replace <YOUR-WORKSPACE-ID1> and <YOUR-WORKSPACE-ID2> with one or more Databricks workspace IDs for the workspaces that will use this role. To get your workspace ID while you are using your workspace, check the URL. For example, in https://<databricks-instance>/?o=6280049833385130, the number after o= is the workspace ID.

    The Principal.AWS field must have one of the following values based on your AWS region type. These reference a serverless compute role managed by Databricks:

    - AWS commercial regions: arn:aws:iam::790110701330:role/serverless-customer-resource-role

    - AWS GovCloud (US): arn:aws-us-gov:iam::170655000336:role/serverless-customer-resource-role

    - AWS GovCloud (US-DoD): arn:aws-us-gov:iam::170644377855:role/serverless-customer-resource-role

  - Click Review policy.

  - Click Save changes.

- If your instance profile changes later, repeat these steps to verify that the trust relationship for the instance profile's role contains the required extra statement.
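The name comparison in the steps above (the text after the final slash of the role ARN versus the instance profile ARN) can be sketched as follows. The function names and the example ARNs, including the account ID 123456789012 and the role and profile names, are hypothetical placeholders.

```python
def arn_resource_name(arn):
    """Return the text after the final slash of an ARN."""
    return arn.rsplit("/", 1)[-1]


def names_match(role_arn, instance_profile_arn):
    """Return True if the role name and instance profile name are identical."""
    return arn_resource_name(role_arn) == arn_resource_name(instance_profile_arn)


# Hypothetical ARNs for illustration only.
role_arn = "arn:aws:iam::123456789012:role/my-data-role"
profile_arn = "arn:aws:iam::123456789012:instance-profile/my-data-profile"

if not names_match(role_arn, profile_arn):
    # The names differ, so the role ARN needs to be specified
    # in the instance profile registration.
    print("Names differ: set the Role ARN field to", role_arn)
```

When the names differ, as in this example, the role ARN is the value to paste into the optional Role ARN field.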

Troubleshooting

If your trust relationship is misconfigured, clusters fail with a message: “Request to create a cluster failed with an exception INVALID_PARAMETER_VALUE: IAM role <role-id> does not have the required trust relationship.”

If you get this error, the workspace IDs in the trust policy might be incorrect, or the trust policy might have been updated incorrectly or on the wrong role.

Carefully perform the steps in Confirm or set up an AWS instance profile to use with your serverless SQL warehouses to update the trust relationship.
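When troubleshooting, it can help to check a copy of the role's trust policy offline. The sketch below, under stated assumptions, extracts the workspace ID from a workspace URL, tests whether a policy document already trusts that workspace, and appends the required statement without overwriting existing ones. All function names are hypothetical, the example URL host is a placeholder, and the default principal is the value for AWS commercial regions. The code works on a policy loaded as a Python dictionary and does not call AWS.

```python
import copy
from urllib.parse import urlparse, parse_qs

# Principal for AWS commercial regions, as listed in the steps above.
COMMERCIAL_PRINCIPAL = "arn:aws:iam::790110701330:role/serverless-customer-resource-role"


def workspace_id_from_url(url):
    """Return the number after o= in a workspace URL, or None if absent."""
    values = parse_qs(urlparse(url).query).get("o")
    return values[0] if values else None


def serverless_statement(workspace_ids, principal=COMMERCIAL_PRINCIPAL):
    """Build the trust statement for the given workspace IDs."""
    external_ids = ["databricks-serverless-" + str(w) for w in workspace_ids]
    return {
        "Effect": "Allow",
        "Principal": {"AWS": [principal]},
        "Action": "sts:AssumeRole",
        "Condition": {"StringEquals": {"sts:ExternalId": external_ids}},
    }


def _as_list(value):
    # IAM policy fields may hold a single string or a list of strings.
    return value if isinstance(value, list) else [value]


def trusts_workspace(policy, workspace_id, principal=COMMERCIAL_PRINCIPAL):
    """Return True if some Allow statement covers this principal and workspace."""
    wanted = "databricks-serverless-" + str(workspace_id)
    for stmt in policy.get("Statement", []):
        principal_field = stmt.get("Principal")
        if stmt.get("Effect") != "Allow" or not isinstance(principal_field, dict):
            continue
        if "sts:AssumeRole" not in _as_list(stmt.get("Action", [])):
            continue
        condition = stmt.get("Condition", {}).get("StringEquals", {})
        external_ids = _as_list(condition.get("sts:ExternalId", []))
        if principal in _as_list(principal_field.get("AWS", [])) and wanted in external_ids:
            return True
    return False


def with_serverless_statement(policy, workspace_ids, principal=COMMERCIAL_PRINCIPAL):
    """Return a copy of policy with the statement appended; the input is unchanged."""
    updated = copy.deepcopy(policy)
    updated.setdefault("Statement", []).append(serverless_statement(workspace_ids, principal))
    return updated


# Hypothetical existing trust policy that only trusts EC2.
existing = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Principal": {"Service": "ec2.amazonaws.com"}, "Action": "sts:AssumeRole"}
    ],
}
ws_id = workspace_id_from_url("https://example.cloud.databricks.com/?o=6280049833385130")
if not trusts_workspace(existing, ws_id):
    existing_plus = with_serverless_statement(existing, [ws_id])
    print(len(existing_plus["Statement"]), "statements after appending")
```

For GovCloud workspaces, pass the matching principal ARN from the list above as the `principal` argument.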

Configuring Glue metastore for serverless SQL warehouses

If you must specify an AWS Glue metastore or add additional data source configurations, update the Data Access Configuration field in the admin settings page.

Serverless SQL warehouses support the default Databricks metastore and AWS Glue as a metastore, but do not support external Hive metastores.