Clusters UI changes and cluster access modes

This article has been archived and may no longer reflect the current state of the product. For information about cluster configuration, see Compute configuration reference.

A new clusters user interface is available with the following changes:

- Access mode replaces cluster security mode. Learn about the differences between cluster security mode and access mode.

- Radio buttons replace the cluster mode dropdown.

- Credential passthrough has new configuration settings.

- The functionality of legacy High Concurrency clusters with table access control (Table ACLs) or credential passthrough enabled is now available in Shared access mode clusters.

For more details on configuring compute for Unity Catalog, see Access modes.

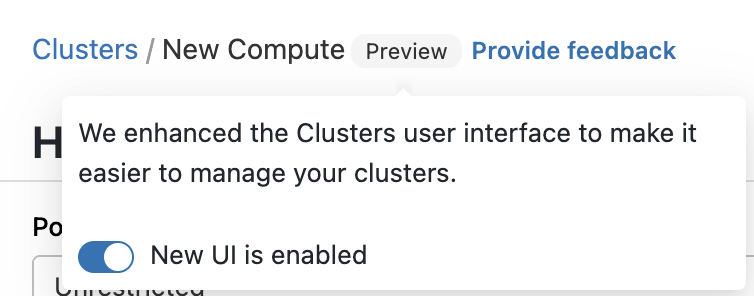

By default, the clusters editor uses the New UI. To toggle between the New UI and the Legacy UI, click the Preview button at the top of the page and click the setting that appears.

What is cluster access mode?

A new Access mode dropdown replaces the Security mode dropdown. Access modes are standardized as follows:

| Access mode | Visible to user | Unity Catalog support | Supported languages |
|---|---|---|---|
| Single user | Always | Yes | Python, SQL, Scala, R |
| Shared | Always (Premium plan or above required) | Yes | Python (on Databricks Runtime 11.3 LTS and above), SQL, Scala (on Databricks Runtime 13.3 LTS and above) |
| No isolation shared | Workspace admins can hide this cluster type by enforcing user isolation in the admin settings page. Also see a related account-level setting for No isolation shared clusters. | No | Python, SQL, Scala, R |
| Custom | Shown only for existing clusters without access modes. If a cluster was created with a legacy cluster mode, for example Standard or High Concurrency, Databricks shows this value for access mode in the new UI. This value is not an option for creating new clusters. | No | Python, SQL, Scala, R |
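The language and Unity Catalog columns of the access mode table can be encoded as a small lookup, for example to sanity-check a planned cluster before creating it. This is a minimal sketch: the `AccessMode` enum, the `supported_languages` and `supports_unity_catalog` functions, and the `(major, minor)` runtime-version tuple are hypothetical names chosen for illustration, not part of any Databricks API.

```python
from enum import Enum


class AccessMode(Enum):
    """Hypothetical enum mirroring the rows of the access mode table."""

    SINGLE_USER = "Single user"
    SHARED = "Shared"
    NO_ISOLATION_SHARED = "No isolation shared"
    CUSTOM = "Custom"


ALL_LANGUAGES = frozenset({"Python", "SQL", "Scala", "R"})


def supported_languages(mode: AccessMode, runtime: tuple[int, int]) -> frozenset[str]:
    """Return the languages listed for an access mode on a Databricks Runtime
    version given as (major, minor), for example (13, 3) for 13.3 LTS."""
    if mode is not AccessMode.SHARED:
        # Single user, No isolation shared, and Custom list all four languages.
        return ALL_LANGUAGES
    # Shared: SQL always, Python from 11.3 LTS, Scala from 13.3 LTS, R not listed.
    languages = {"SQL"}
    if runtime >= (11, 3):
        languages.add("Python")
    if runtime >= (13, 3):
        languages.add("Scala")
    return frozenset(languages)


def supports_unity_catalog(mode: AccessMode) -> bool:
    """Only the Single user and Shared access modes support Unity Catalog."""
    return mode in (AccessMode.SINGLE_USER, AccessMode.SHARED)


print(sorted(supported_languages(AccessMode.SHARED, (12, 2))))
```

Tuple comparison keeps the version check simple: `(12, 2) >= (11, 3)` is true while `(12, 2) >= (13, 3)` is false, so a Shared cluster on 12.2 gets SQL and Python but not Scala.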

Access mode is not yet supported in the Clusters API, and the existing Clusters API is unchanged.

How do you configure cluster mode in the new Databricks clusters UI?

The Cluster mode dropdown is replaced by a radio button group with two options: Multi node and Single node. Switching between the two options resets the form.

How do you configure credential passthrough in the new clusters UI?

Credential passthrough does not work with Unity Catalog.

In Advanced options, the Enable credential passthrough checkbox is available when the access mode is Single user or Shared. Selecting the checkbox enables credential passthrough and disables Unity Catalog on the cluster.
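The two credential passthrough rules (the checkbox exists only for Single user and Shared, and checking it turns off Unity Catalog) can be captured in a small validation model. This is a minimal sketch under stated assumptions: `ClusterSettings`, `SINGLE_USER_OR_SHARED`, and `unity_catalog_usable` are hypothetical names, not Databricks types, and the model ignores other prerequisites such as metastore assignment.

```python
from dataclasses import dataclass

# Hypothetical constant: the access modes that both offer the Enable credential
# passthrough checkbox and support Unity Catalog.
SINGLE_USER_OR_SHARED = frozenset({"Single user", "Shared"})


@dataclass(frozen=True)
class ClusterSettings:
    """Hypothetical model of two new-UI settings; not a Databricks API type."""

    access_mode: str
    credential_passthrough: bool = False

    def __post_init__(self) -> None:
        # The checkbox is only shown for Single user and Shared access modes.
        if self.credential_passthrough and self.access_mode not in SINGLE_USER_OR_SHARED:
            raise ValueError(
                f"credential passthrough is not available in {self.access_mode!r} access mode"
            )

    @property
    def unity_catalog_usable(self) -> bool:
        # Unity Catalog needs Single user or Shared access mode, and enabling
        # credential passthrough disables it. Metastore assignment is not modeled.
        return self.access_mode in SINGLE_USER_OR_SHARED and not self.credential_passthrough


print(ClusterSettings("Shared", credential_passthrough=True).unity_catalog_usable)
```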

How does backward compatibility work with these changes?

All existing interactive clusters and job cluster definitions continue to work after the change in the clusters UI. Behavior is unchanged when you start or restart an existing cluster or when a run of an existing job is triggered.

Legacy cluster definitions appear in the clusters UI as the Custom access mode. This value is only for backward compatibility and cannot be selected from the dropdown list.

For workspaces without Unity Catalog (that is, no Unity Catalog metastore is assigned), use the following table to migrate from your original cluster settings to the new access mode when creating new clusters.

| Original cluster settings | New access mode |
|---|---|
| Standard | No isolation shared |
| Standard + credential passthrough checkbox checked | Single user + credential passthrough checkbox checked |
| High concurrency (HC)* | No isolation shared |
| HC + table access control checkbox checked | Shared |
| HC + credential passthrough checkbox checked | Shared + credential passthrough checkbox checked |

\* Databricks no longer distinguishes High Concurrency clusters without table access control (Table ACLs) or credential passthrough from Standard clusters, because they have the same feature support; both map to the “No isolation shared” access mode.
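The migration table for workspaces without Unity Catalog can be read as a lookup keyed on the legacy settings. This is a minimal sketch: `MIGRATION` and `migrate_cluster_settings` are hypothetical names, not a Databricks API, and combinations the table does not list (for example, High Concurrency with both checkboxes checked) raise an error instead of guessing a mapping.

```python
# Keys: (legacy cluster mode, Table ACLs checkbox checked, passthrough checkbox checked).
# Values: (new access mode, passthrough checkbox checked).
MIGRATION: dict[tuple[str, bool, bool], tuple[str, bool]] = {
    ("Standard", False, False): ("No isolation shared", False),
    ("Standard", False, True): ("Single user", True),
    ("High Concurrency", False, False): ("No isolation shared", False),
    ("High Concurrency", True, False): ("Shared", False),
    ("High Concurrency", False, True): ("Shared", True),
}


def migrate_cluster_settings(
    legacy_mode: str, table_acls: bool = False, passthrough: bool = False
) -> tuple[str, bool]:
    """Return (access mode, passthrough checkbox) for a legacy configuration."""
    key = (legacy_mode, table_acls, passthrough)
    try:
        return MIGRATION[key]
    except KeyError:
        raise ValueError(f"no row in the migration table for {key}") from None


print(migrate_cluster_settings("High Concurrency", table_acls=True))
```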

If you have backward compatibility concerns, you can disable the new clusters UI by turning off the New UI is enabled switch on the Preview label.

Limitations

- The following security-related Spark configurations are not available in the new UI when creating a new cluster. If you need to fine-tune a cluster configuration (for example, for integrations such as Privacera or Immuta), you can define a custom cluster policy to set these configurations. Contact your Databricks account team for assistance.

  - `spark.databricks.repl.allowedLanguages`
  - `spark.databricks.acl.dfAclsEnabled`
  - `spark.databricks.passthrough.enabled`
  - `spark.databricks.pyspark.enableProcessIsolation`

- Python support in the Shared access mode requires Databricks Runtime 11.3 LTS and above.
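A cluster policy definition is a JSON document that maps attribute paths, such as `spark_conf.<key>`, to rules; a `"fixed"` rule pins the value and `"hidden"` keeps it off the create form. The sketch below builds such a definition for the Spark configurations listed under Limitations. It is a hedged illustration: `fixed_spark_conf_policy` is a hypothetical helper, and the example values (roughly a table-ACL-style setup) are assumptions, not recommendations. Confirm the values your integration needs with your Databricks account team.

```python
import json

# The security-related Spark configurations the new UI does not expose.
SECURITY_SPARK_CONFS = frozenset({
    "spark.databricks.repl.allowedLanguages",
    "spark.databricks.acl.dfAclsEnabled",
    "spark.databricks.passthrough.enabled",
    "spark.databricks.pyspark.enableProcessIsolation",
})


def fixed_spark_conf_policy(conf: dict[str, str]) -> dict[str, dict[str, object]]:
    """Hypothetical helper: build a policy definition that pins each given
    Spark configuration to a fixed, hidden value."""
    unknown = set(conf) - SECURITY_SPARK_CONFS
    if unknown:
        raise ValueError(f"not one of the listed security configurations: {sorted(unknown)}")
    return {
        f"spark_conf.{key}": {"type": "fixed", "value": value, "hidden": True}
        for key, value in conf.items()
    }


# Illustrative values only; they are assumptions, not vendor guidance.
policy = fixed_spark_conf_policy({
    "spark.databricks.repl.allowedLanguages": "python,sql",
    "spark.databricks.acl.dfAclsEnabled": "true",
})
print(json.dumps(policy, indent=2))
```

Restricting the helper to the four listed keys keeps the sketch focused on the settings the new UI hides; a real policy usually also constrains other attributes, which this example does not attempt.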