Materialized views

Like standard views, materialized views are the results of a query and you access them the same way you would a table. Unlike standard views, which recompute results on every query, materialized views cache the results and refreshes them on a specified interval. Because a materialized view is precomputed, queries against it can run much faster than against regular views.

A materialized view is a declarative pipeline object. It includes a query that defines it, a flow to update it, and the cached results for fast access. A materialized view:

- Tracks changes in upstream data.

- On trigger, incrementally processes the changed data and applies the necessary transformations.

- Maintains the output table, in sync with the source data, based on a specified refresh interval.

Materialized views are a good choice for many transformations:

- You apply reasoning over cached results instead of rows. In fact, you simply write a query.

- They are always correct. All required data is processed, even if it arrives late or out of order.

- They are often incremental. Databricks will try to choose the appropriate strategy that minimizes the cost of updating a materialized view.

How materialized views work

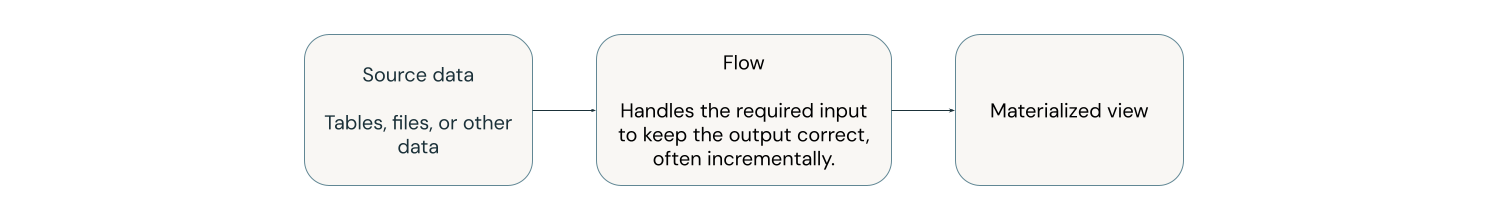

The following diagram illustrates how materialized views work.

Materialized views are defined and updated by a single pipeline. You can explicitly define materialized views in the source code of the pipeline. Tables defined by a pipeline can't be changed or updated by any other pipeline.

When you create a materialized view outside of a pipeline, using Databricks SQL, Databricks creates a pipeline which is used to update the view. You can see the pipeline by selecting Jobs & Pipelines from the left navigation in your workspace. You can add the Pipeline type column to your view. Materialized views defined in a pipeline have a type of ETL. Materialized views created in Databricks SQL have a type of MV/ST.

Databricks uses Unity Catalog to store metadata about the view, including the query and additional system views that are used for incremental updates. The cached data is materialized in cloud storage.

The following example joins two tables together and keeps the result up to date using a materialized view.

- Python

- SQL

from pyspark import pipelines as dp

@dp.materialized_view

def regional_sales():

partners_df = spark.read.table("partners")

sales_df = spark.read.table("sales")

return (

partners_df.join(sales_df, on="partner_id", how="inner")

)

CREATE OR REPLACE MATERIALIZED VIEW regional_sales

AS SELECT *

FROM partners

INNER JOIN sales ON

partners.partner_id = sales.partner_id;

Automatic incremental updates

When the pipeline defining a materialized view is triggered, the view is automatically kept up to date, often incrementally. Databricks attempts to process only the data that must be processed to keep the materialized view up to date. A materialized view always shows the correct result, even if it requires fully recomputing the query result from scratch, but often Databricks makes only incremental updates to a materialized view, which can be far less costly than a full recomputation.

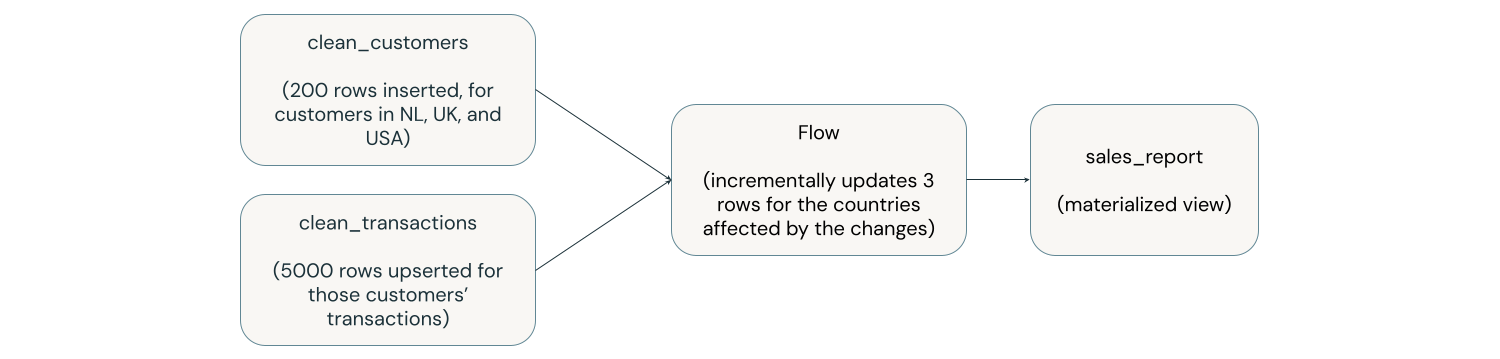

The diagram below shows a materialized view called sales_report, which is the result of joining two upstream tables called clean_customers and clean_transactions, and grouping by country. An upstream process inserts 200 rows into clean_customers in three countries (USA, Netherlands, UK) and updates 5,000 rows in clean_transactions corresponding to these new customers. The sales_report materialized view is incrementally updated for only the countries that have new customers or corresponding transactions. In this example, we see three rows updated instead of the entire sales report.

For more details about how incremental refresh works in materialized views, see Incremental refresh for materialized views.

Materialized views limitations

Materialized views have the following limitations:

- Since updates create correct queries, some changes to inputs will require a full recomputation of a materialized view, which can be expensive.

- They are not designed for low-latency use cases. The latency of updating a materialized view is in the seconds or minutes, not milliseconds.

- Not all computations can be incrementally computed.