Use Agent Bricks: Custom LLM to create a gen AI agent for text

This feature is in Beta. Workspace admins can control access to this feature from the Previews page. See Manage Databricks previews.

This article describes how to create a generative AI agent for custom text-based tasks using Agent Bricks: Custom LLM.

Agent Bricks provides a simple approach to build and optimize domain-specific, high-quality AI agent systems for common AI use cases.

What can you do with Custom LLM?

Use Agent Bricks: Custom LLM to generate high-quality results for any domain-specific task, such as summarization, classification, text transformation, and content generation.

Agent Bricks: Custom LLM is ideal for the following use cases:

- Summarizing the issue and resolution of customer calls.

- Analyzing the sentiment of customer reviews.

- Classifying research papers by topic.

- Generating press releases for new features.

Given high-level instructions and examples, Agent Bricks: Custom LLM optimizes prompts on your behalf, automatically infers evaluation criteria, evaluates the system on the data you provide, and deploys the model as a production-ready endpoint.

Agent Bricks: Custom LLM uses automated evaluation capabilities, including MLflow and Agent Evaluation, so you can quickly assess the cost-quality tradeoff for your specific task. This assessment helps you make informed decisions about the balance between accuracy and resource investment.

Agent Bricks uses default storage to store temporary data transformations, model checkpoints, and internal metadata that power each agent. On agent deletion, all data associated with the agent is removed from default storage.

Requirements

- A workspace that includes the following:

  - Mosaic AI Agent Bricks Preview (Beta) enabled. See Manage Databricks previews.

  - Serverless compute enabled. See Serverless compute requirements.

  - Unity Catalog enabled. See Enable a workspace for Unity Catalog.

- Access to Mosaic AI Model Serving.

- Access to foundation models in Unity Catalog through the `system.ai` schema.

- Access to a serverless budget policy with a nonzero budget.

- A workspace in one of the supported regions: `us-east-1` or `us-west-2`.

- Ability to use the `ai_query` SQL function.

- You must have input data ready to use. You can choose to provide either:

  - A Unity Catalog table. The table name cannot contain any special characters (such as `-`).

    - If you want to use PDFs, convert them to a Unity Catalog table. See Use PDFs in Agent Bricks.

  - At least 3 example inputs and outputs. If you choose this option, you'll need to specify a Unity Catalog schema destination path for the agent, and you must have CREATE REGISTERED MODEL and CREATE TABLE permissions to this schema.

- If you want to optimize your agent, you need at least 100 inputs (either 100 rows in a Unity Catalog table or 100 manually provided examples).
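Before you start, you can check candidate input data against these requirements. The following Python sketch is a hypothetical helper, not part of Agent Bricks. It assumes that "no special characters" means letters, digits, and underscores only in each part of a three-level `catalog.schema.table` name, and that you already know the column types and the number of examples (for example, from Catalog Explorer).

```python
import re

# Assumption: "no special characters" is read as letters, digits, and
# underscores only, in each part of a three-level catalog.schema.table name.
NAME_PART = re.compile(r"[A-Za-z0-9_]+")
SUPPORTED_TYPES = {"string", "int", "double"}
MIN_EXAMPLES = 3            # minimum for the "examples" option
MIN_FOR_OPTIMIZATION = 100  # rows or examples needed to optimize


def check_table(table_name: str, column_types: dict[str, str]) -> list[str]:
    """Return problems found with a candidate input table (empty list = none)."""
    problems = []
    parts = table_name.split(".")
    if len(parts) != 3 or not all(NAME_PART.fullmatch(p) for p in parts):
        problems.append(f"invalid table name: {table_name!r}")
    for column, dtype in column_types.items():
        if dtype.lower() not in SUPPORTED_TYPES:
            problems.append(f"unsupported type for column {column!r}: {dtype}")
    return problems


def check_examples(pairs: list[tuple[str, str]]) -> list[str]:
    """Return problems found with manually provided (input, output) examples."""
    if len(pairs) < MIN_EXAMPLES:
        return [f"need at least {MIN_EXAMPLES} examples, got {len(pairs)}"]
    return []


def can_optimize(num_inputs: int) -> bool:
    """True if there are enough rows or examples to run optimization."""
    return num_inputs >= MIN_FOR_OPTIMIZATION


if __name__ == "__main__":
    print(check_table("main.model_specialization.customer_call_transcripts",
                      {"transcript": "string", "summary": "string"}))
    print(check_table("main.call-data.calls", {"duration": "timestamp"}))
    print(can_optimize(42))
```

The first call reports no problems. The second reports two: the hyphen in `call-data` and the unsupported `timestamp` column. The column names here are placeholders.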

Create a custom LLM agent

Go to Agents in the left navigation pane of your workspace. From the Custom LLM tile, click Build.

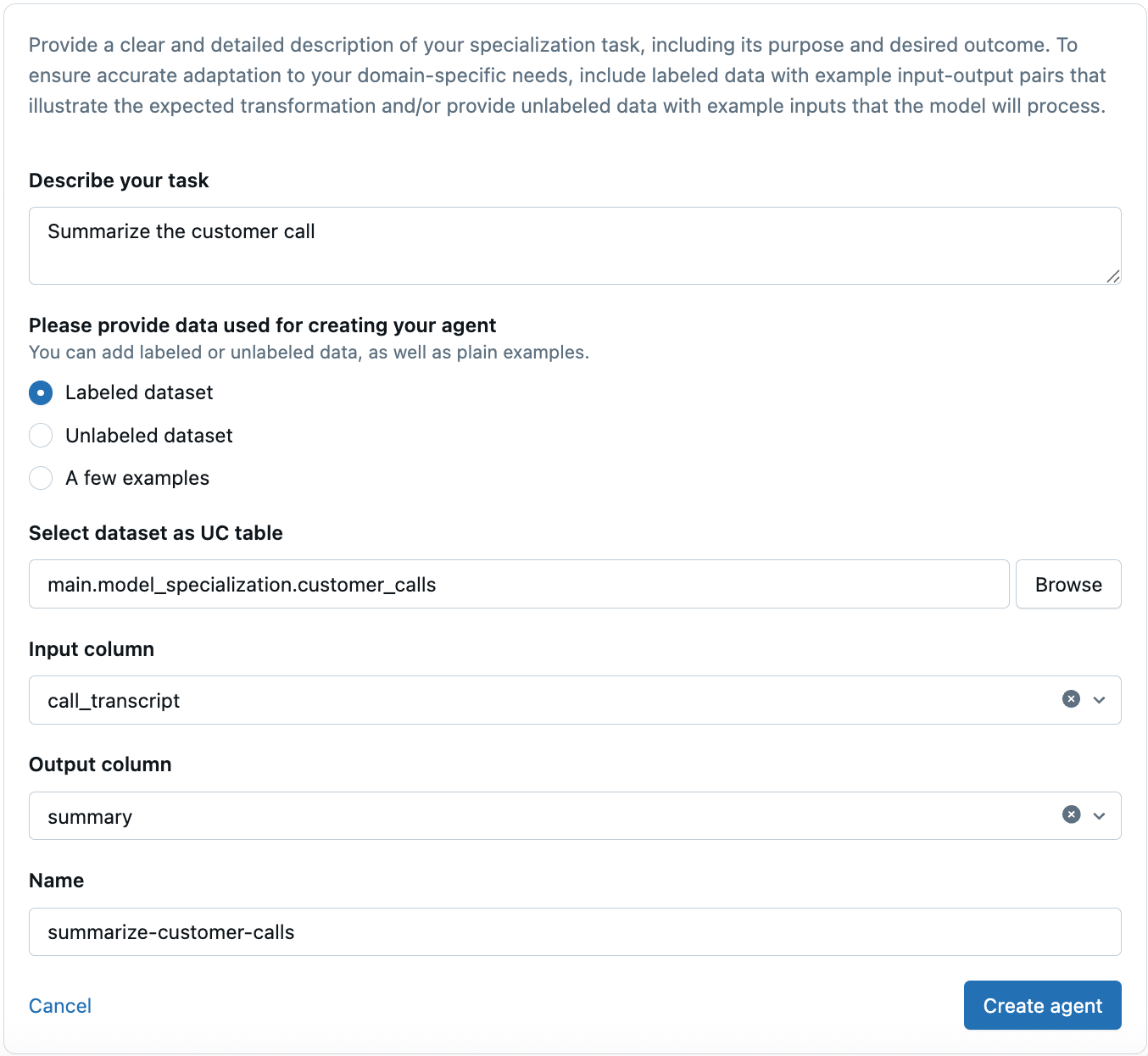

Step 1: Configure your agent

On the Build tab, click Show an example > to expand an example input and model response for a Custom LLM agent.

In the pane below, configure your agent:

- Under Describe your task, enter a clear and detailed description of your specialization task, including its purpose and desired outcome.

- Provide a labeled dataset, an unlabeled dataset, or a few examples to use to create your agent.

  If you want to use PDFs, convert them to a Unity Catalog table first. See Use PDFs in Agent Bricks.

  The following data types are supported: `string`, `int`, and `double`.

  - Labeled dataset

  - Unlabeled dataset

  - A few examples

  If you select Labeled dataset:

  - Under Select dataset as UC table, click Browse to select the table in Unity Catalog you want to use. The table name cannot contain any special characters (such as `-`). The following is an example:

    `main.model_specialization.customer_call_transcripts`

  - In the Input column field, select the column you want to use as your input text. The dropdown menu is automatically populated with columns from your selected table.

  - In the Output column, select the column you want to provide as an example output for the expected transformation. Providing this data helps configure your agent to more accurately adapt to your domain-specific needs.

  If you select Unlabeled dataset:

  - Under Select dataset as UC table, click Browse to select the table in Unity Catalog you want to use. The table name cannot contain any special characters (such as `-`).

  - In the Input column field, select the column you want to use as your input text. The dropdown menu is automatically populated with columns from your selected table.

  If you select A few examples:

  - Provide at least 3 examples of inputs and expected outputs for your specialization task. Providing high-quality examples helps configure your specialization agent to better understand your requirements.

  - To add more examples, click + Add.

  - Under Agent destination, select the Unity Catalog schema where you'd like Agent Bricks to help you create a table with evaluation data. You must have CREATE REGISTERED MODEL and CREATE TABLE permissions to this schema.

- Name your agent.

- Click Create agent.

Step 2: Build and improve your agent

In the Build tab, review recommendations to improve your agent, review sample model outputs, and adjust your task instructions and evaluation criteria.

- In the Recommendation pane, Databricks provides recommendations for optimizing your agent and asks you to rate sample responses as good or bad.

  - Review the Databricks recommendations for optimizing agent performance.

  - Provide feedback to improve responses. For each response, answer Is this a good response? with Yes or No. If No, optionally provide feedback on the response, and then click Save to move on to the next one.

  - You can also choose to Dismiss the recommendation.

- On the right, under Guidelines, set clear guidelines to help your agent produce the right output. These guidelines are also used to automatically evaluate quality.

  - Review suggested guidelines. The guideline suggestions are automatically inferred to help you optimize your agent. You can refine or delete them.

  - Agent Bricks may propose additional guidelines. Select Accept to add the new guideline or Reject to reject it, or click into the text to edit the guideline first.

  - To add your own guidelines, click Add.

  - Click Save and update to update the agent.

- (Optional) On the right side, under Instructions, describe your task. Add any additional instructions for the agent to follow when generating its responses. Click Save and update to apply the instructions.

- After you update the agent, new sample responses are generated. Review and provide feedback on these responses.

Step 3: Evaluate your agent

A quality report containing a small set of evaluation results is automatically generated from your guidelines. Review this report in the Quality tab.

Each accepted guideline is used as an evaluation metric. For each generated request, the response is evaluated using the guidelines and given a pass/fail assessment. These assessments are used to generate the evaluation scores shown at the top. Click an evaluation result to see the full details.

Use the quality report to help you decide if the agent needs further optimization.
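To make the scoring concrete, here is a Python sketch that turns per-guideline pass/fail assessments into scores. It assumes a score is simply the fraction of responses that passed; the quality report may compute or display scores differently, and the guideline names below are made up for illustration.

```python
from collections import defaultdict


def guideline_scores(assessments: list[dict[str, bool]]) -> dict[str, float]:
    """Fraction of evaluated responses that passed each guideline.

    Each dict in `assessments` maps a guideline to True (pass) or False (fail)
    for one evaluated response.
    """
    passed: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for result in assessments:
        for guideline, ok in result.items():
            total[guideline] += 1
            passed[guideline] += int(ok)
    return {g: passed[g] / total[g] for g in total}


def overall_pass_rate(assessments: list[dict[str, bool]]) -> float:
    """Fraction of responses that passed every guideline they were checked against."""
    if not assessments:
        return 0.0
    return sum(all(result.values()) for result in assessments) / len(assessments)


if __name__ == "__main__":
    # Hypothetical assessments for two responses and two guidelines.
    results = [
        {"Mentions the resolution": True, "Under 100 words": True},
        {"Mentions the resolution": False, "Under 100 words": True},
    ]
    print(guideline_scores(results))  # {'Mentions the resolution': 0.5, 'Under 100 words': 1.0}
    print(overall_pass_rate(results))  # 0.5
```

Reporting both views is a design choice: per-guideline rates show which guideline is failing, while the overall rate shows how often a response satisfies all of them at once.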

(Optional) Optimize your agent

Agent Bricks can help optimize your agent for cost. Databricks recommends at least 100 inputs (either 100 rows in your Unity Catalog table or 100 manually-provided examples) to optimize your agent. When you add more inputs, the knowledge base that the agent can learn from increases, which improves agent quality and its response accuracy.

When you optimize your agent, Databricks compares multiple optimization strategies to build and deploy an optimized agent. These strategies include Foundation Model Fine-tuning, which uses Databricks Geos.

To optimize your agent:

- Click Optimize.

- Click Start optimization.

  Optimization can take a few hours. You can't make changes to your currently active agent while optimization is in progress.

- When optimization completes, review a comparison of your currently active agent and the agent optimized for cost.

- After you review these results, select the best model under Deploy best model to an endpoint and click Deploy.
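If you want to make the choice repeatable, one option is a simple rule such as "pick the cheapest candidate whose quality is within a small tolerance of the best." The Python sketch below is a hypothetical decision helper, not how Agent Bricks ranks models. It assumes you can read a quality score and a relative cost for each candidate from the comparison; the numbers are illustrative placeholders.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    quality: float  # e.g. an overall pass rate between 0 and 1
    cost: float     # relative serving cost; only the ordering matters here


def pick_candidate(candidates: list[Candidate], max_quality_drop: float = 0.02) -> Candidate:
    """Cheapest candidate whose quality is within max_quality_drop of the best."""
    if not candidates:
        raise ValueError("no candidates to compare")
    best_quality = max(c.quality for c in candidates)
    eligible = [c for c in candidates if c.quality >= best_quality - max_quality_drop]
    return min(eligible, key=lambda c: c.cost)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    options = [
        Candidate("currently active agent", quality=0.90, cost=10.0),
        Candidate("cost-optimized agent", quality=0.89, cost=3.0),
    ]
    print(pick_candidate(options).name)  # cost-optimized agent
```

The tolerance makes the tradeoff explicit: a larger `max_quality_drop` accepts more quality loss in exchange for lower cost.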

Step 4: Use your agent

Try out your agent in workflows across Databricks.

To start using your agent, click Use. You have the following options:

- Click Try in SQL to open the SQL editor and use `ai_query` to send requests to your new Custom LLM agent.

- Click Create pipeline to deploy a pipeline that runs at scheduled intervals to use your agent on new data. See Lakeflow Spark Declarative Pipelines for more information about pipelines.

- Click Open in Playground to test out your agent in a chat environment with AI Playground.
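Try in SQL calls your agent through `ai_query`. If you want to generate such a query from code, for example to apply the agent to a table column from a notebook, the Python sketch below builds one. The endpoint, table, and column names are placeholders, and the identifier check is an assumption meant to avoid building malformed SQL. Compare the result with the query that Try in SQL or Apply on data generates for you.

```python
import re

IDENTIFIER = re.compile(r"[A-Za-z0-9_]+")


def build_ai_query_sql(endpoint: str, table: str, input_column: str,
                       output_alias: str = "response") -> str:
    """Compose a SELECT that sends each row's input column to the agent endpoint."""
    # Reject identifiers that would need quoting rather than guessing at escaping.
    for ident in [*table.split("."), input_column, output_alias]:
        if not IDENTIFIER.fullmatch(ident):
            raise ValueError(f"unexpected identifier: {ident!r}")
    if "'" in endpoint:
        raise ValueError("endpoint name must not contain single quotes")
    return (
        f"SELECT {input_column}, ai_query('{endpoint}', {input_column}) AS {output_alias}\n"
        f"FROM {table}"
    )


if __name__ == "__main__":
    # Placeholder names; use your own endpoint, table, and input column.
    print(build_ai_query_sql(
        "my-custom-llm-endpoint",
        "main.model_specialization.customer_call_transcripts",
        "transcript",
    ))
```

In a Databricks notebook, you could pass the resulting string to `spark.sql(...)`; in the SQL editor, you would run the statement directly.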

Manage permissions

By default, only Agent Bricks authors and workspace admins have permissions to the agent. To allow other users to edit or query your agent, you need to explicitly grant them permission.

To manage permissions on your agent:

- Open your agent in Agent Bricks.

- At the top, click the kebab menu.

- Click Manage permissions.

- In the Permission Settings window, select the user, group, or service principal.

- Select the permission to grant:

- Can Manage: Allows managing the agent, including setting permissions, editing the agent configuration, and improving its quality.

- Can Query: Allows querying the agent endpoint in AI Playground and through the API. Users with only this permission cannot view or edit the agent in Agent Bricks.

- Click Add.

- Click Save.

For agent endpoints created before September 16, 2025, you can grant Can Query permissions to the endpoint from the Serving endpoints page.

Query the agent endpoint

On the agent page, click See Agent status in the upper-right to get your deployed agent endpoint and see endpoint details.

There are multiple ways to query the created agent endpoint. Use the code examples provided in AI Playground as a starting point:

- On the agent page, click Use.

- Click Open in playground.

- From Playground, click Get code.

- Choose how you want to use the endpoint:

- Select Apply on data to create a SQL query that applies the agent to a specific table column.

- Select Curl API for a code example to query the endpoint using curl.

- Select Python API for a code example to interact with the endpoint using Python.

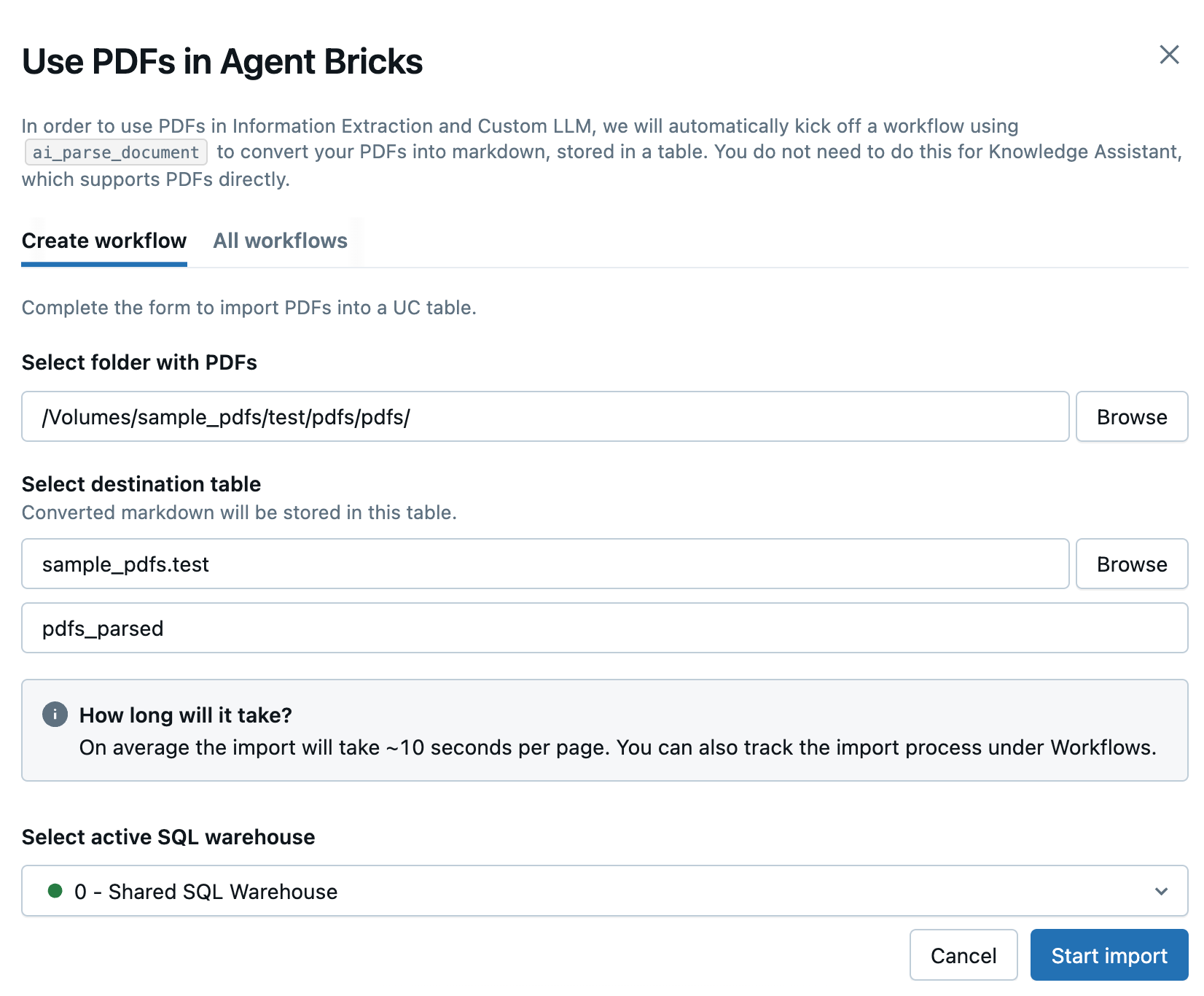
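As an alternative to the generated snippets, the following Python sketch calls the endpoint over REST using only the standard library. It assumes that the `DATABRICKS_HOST` environment variable holds your workspace URL (including `https://`), that `DATABRICKS_TOKEN` holds a personal access token, and that the agent endpoint accepts a chat-style `messages` payload. Check the Python API or Curl API code from Get code for the exact request body your endpoint expects.

```python
import json
import os
import urllib.request


def build_request(host: str, token: str, endpoint: str, prompt: str) -> urllib.request.Request:
    """Build a POST request to a Databricks model serving endpoint.

    Assumption: the endpoint accepts {"messages": [{"role": ..., "content": ...}]}.
    """
    url = f"{host.rstrip('/')}/serving-endpoints/{endpoint}/invocations"
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def query_agent(endpoint: str, prompt: str, timeout_s: float = 60.0) -> dict:
    """Send one prompt to the endpoint and return the parsed JSON response."""
    req = build_request(os.environ["DATABRICKS_HOST"], os.environ["DATABRICKS_TOKEN"],
                        endpoint, prompt)
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return json.load(resp)
```

For example, `query_agent("my-custom-llm-endpoint", "Summarize this call: ...")` returns the endpoint's JSON response as a dictionary. The endpoint name is hypothetical; copy yours from See Agent status.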

Use PDFs in Agent Bricks

PDFs are not yet supported natively in Agent Bricks: Information Extraction and Custom LLM. However, you can use an Agent Bricks UI workflow to convert a folder of PDF files into markdown, and then use the resulting Unity Catalog table as input when building your agent. This workflow uses `ai_parse_document` for the conversion. Follow these steps:

-

Click Agents in the left navigation pane to open Agent Bricks in Databricks.

-

In the Information Extraction or Custom LLM use cases, click Use PDFs.

-

In the side panel that opens, enter the following fields to create a new workflow to convert your PDFs:

- Select folder with PDFs or images: Select the Unity Catalog folder containing the PDFs you want to use.

- Select destination table: Select the destination schema for the converted markdown table and, optionally, adjust the table name in the field below.

- Select active SQL warehouse: Select the SQL warehouse to run the workflow.

-

Click Start import.

-

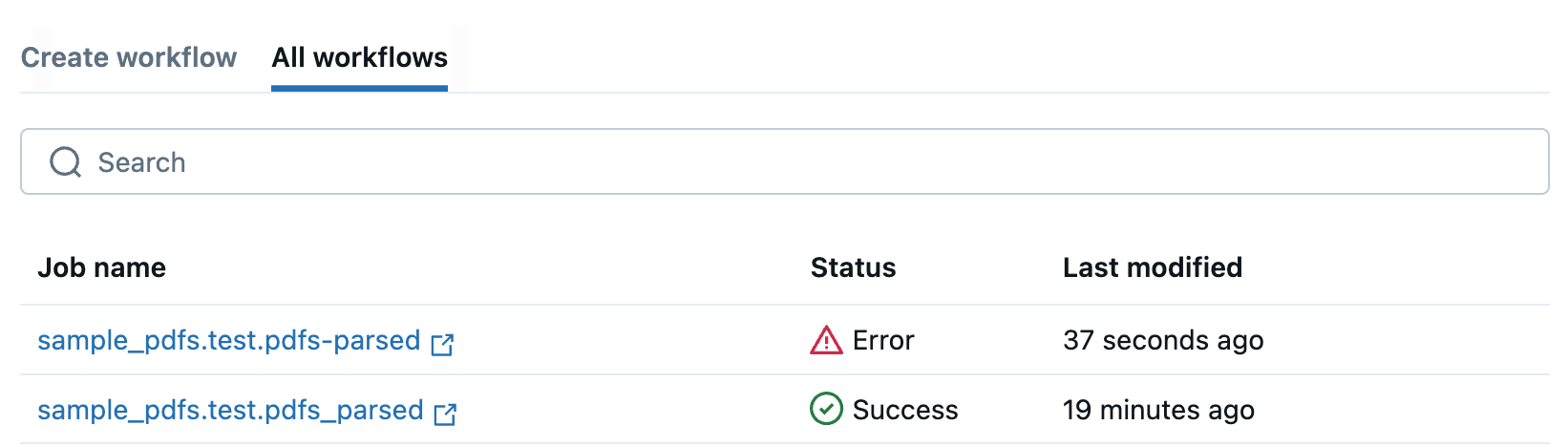

You will be redirected to the All workflows tab, which lists all of your PDF workflows. Use this tab to monitor the status of your jobs.

If your workflow fails, click on the job name to open it and view error messages to help you debug.

-

When your workflow has completed successfully, click on the job name to open the table in Catalog Explorer to explore and understand the columns.

-

Use the Unity Catalog table as input data in Agent Bricks when configuring your agent.
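The UI workflow runs `ai_parse_document` for you. If you would rather script a similar first step, the Python sketch below composes a SQL statement that reads files from a Unity Catalog volume with `read_files` and parses them with `ai_parse_document`. This is a sketch under stated assumptions, not the query the workflow runs: it assumes your PDFs are in a volume path of the form `/Volumes/<catalog>/<schema>/<volume>/...`, and it stores the structured parse output as-is instead of converting it to the markdown table that the workflow produces. Note that `CREATE OR REPLACE TABLE` overwrites any existing table with the same name.

```python
import re

# Assumption: the PDFs live in a Unity Catalog volume; quotes are not allowed in the path.
VOLUME_PATH = re.compile(r"/Volumes/[A-Za-z0-9_]+/[A-Za-z0-9_]+/[A-Za-z0-9_]+(/[^']*)?")
NAME_PART = re.compile(r"[A-Za-z0-9_]+")


def build_parse_sql(pdf_folder: str, destination_table: str) -> str:
    """Compose SQL that parses every file in pdf_folder into destination_table."""
    if not VOLUME_PATH.fullmatch(pdf_folder):
        raise ValueError(f"expected a /Volumes/<catalog>/<schema>/<volume> path: {pdf_folder!r}")
    parts = destination_table.split(".")
    if len(parts) != 3 or not all(NAME_PART.fullmatch(p) for p in parts):
        raise ValueError(f"invalid destination table: {destination_table!r}")
    return (
        f"CREATE OR REPLACE TABLE {destination_table} AS\n"
        f"SELECT path, ai_parse_document(content) AS parsed\n"
        f"FROM read_files('{pdf_folder}', format => 'binaryFile')"
    )


if __name__ == "__main__":
    # Placeholder volume path and table name.
    print(build_parse_sql("/Volumes/main/docs/pdfs/", "main.docs.parsed_pdfs"))
```

You would run the printed statement on a SQL warehouse or with `spark.sql(...)` in a notebook.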

Limitations

- Databricks recommends at least 100 inputs (either 100 rows in your Unity Catalog table or 100 manually-provided samples) to optimize your agent. When you add more inputs, the knowledge base that the agent can learn from increases, which improves agent quality and its response accuracy.

- If you provide a Unity Catalog table, the table name cannot contain any special characters (such as `-`).

- Only the following data types are supported as inputs: `string`, `int`, and `double`.

- Usage capacity is currently limited to 100k input and output tokens per minute.

- Workspaces that have Enhanced Security and Compliance enabled are not supported.

- Optimization may fail in workspaces that have serverless egress control network policies with restricted access mode.
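To stay within the token limit when you call the endpoint in bulk from your own code, you can throttle requests on the client side. The following Python sketch is a sliding-window limiter. It assumes you can estimate each request's input plus output tokens before sending it (for example, prompt length plus the maximum output length you request), and it does not reflect how Databricks enforces the limit on the server.

```python
import time
from collections import deque
from typing import Callable


class TokenBudget:
    """Client-side sliding-window limiter for tokens per minute."""

    def __init__(self, tokens_per_minute: int = 100_000, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.limit = tokens_per_minute
        self.window = window_s
        self.clock = clock
        self.sleep = sleep
        self.events: deque = deque()  # (timestamp, tokens) for each reservation
        self.used = 0

    def _expire(self, now: float) -> None:
        # Drop reservations that are older than the window.
        while self.events and now - self.events[0][0] >= self.window:
            _, tokens = self.events.popleft()
            self.used -= tokens

    def try_acquire(self, tokens: int) -> bool:
        """Reserve `tokens` if they fit in the current window; return whether they did."""
        if tokens > self.limit:
            raise ValueError("a single request exceeds the per-minute budget")
        now = self.clock()
        self._expire(now)
        if self.used + tokens > self.limit:
            return False
        self.events.append((now, tokens))
        self.used += tokens
        return True

    def acquire(self, tokens: int) -> None:
        """Block until `tokens` fit, then reserve them."""
        while not self.try_acquire(tokens):
            # Wait until the oldest reservation leaves the window.
            oldest = self.events[0][0]
            self.sleep(max(0.0, self.window - (self.clock() - oldest)))
```

In a batch loop, you would create one `TokenBudget()` and call `budget.acquire(estimated_tokens)` before each request. The `clock` and `sleep` parameters exist so the limiter can be tested without waiting in real time.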