Manage model serving endpoints

This article describes how to manage model serving endpoints using the Serving UI and REST API. See Serving endpoints in the REST API reference.

To create model serving endpoints use one of the following:

Get the status of the model endpoint

You can check the status of an endpoint using the Serving UI or programmatically using the REST API, Databricks Workspace Client, or MLflow Deployments SDK.

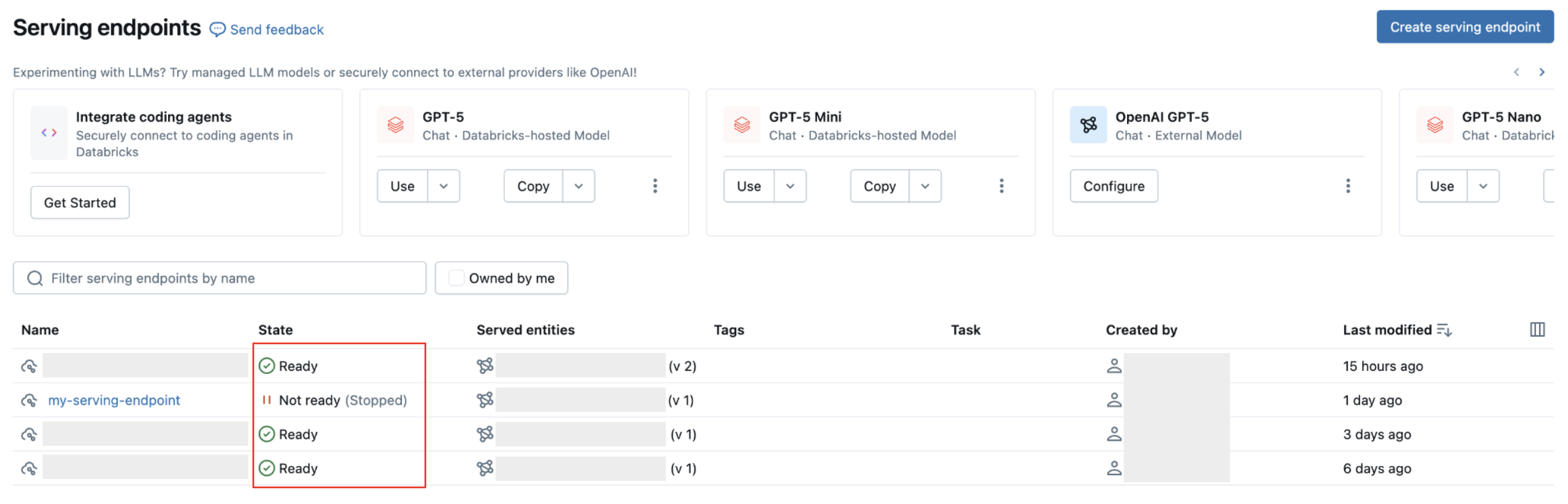

Endpoint statuses can be Ready, Ready (Update failed), Not ready (Updating), Not ready (Update failed), or Not ready (Stopped). Readiness refers to whether or not an endpoint can be queried. Update failed indicates that the latest change to the endpoint was unsuccessful. Stopped means the endpoint was stopped.

- UI

- REST API

- Databricks Workspace Client

- MLflow Deployments SDK

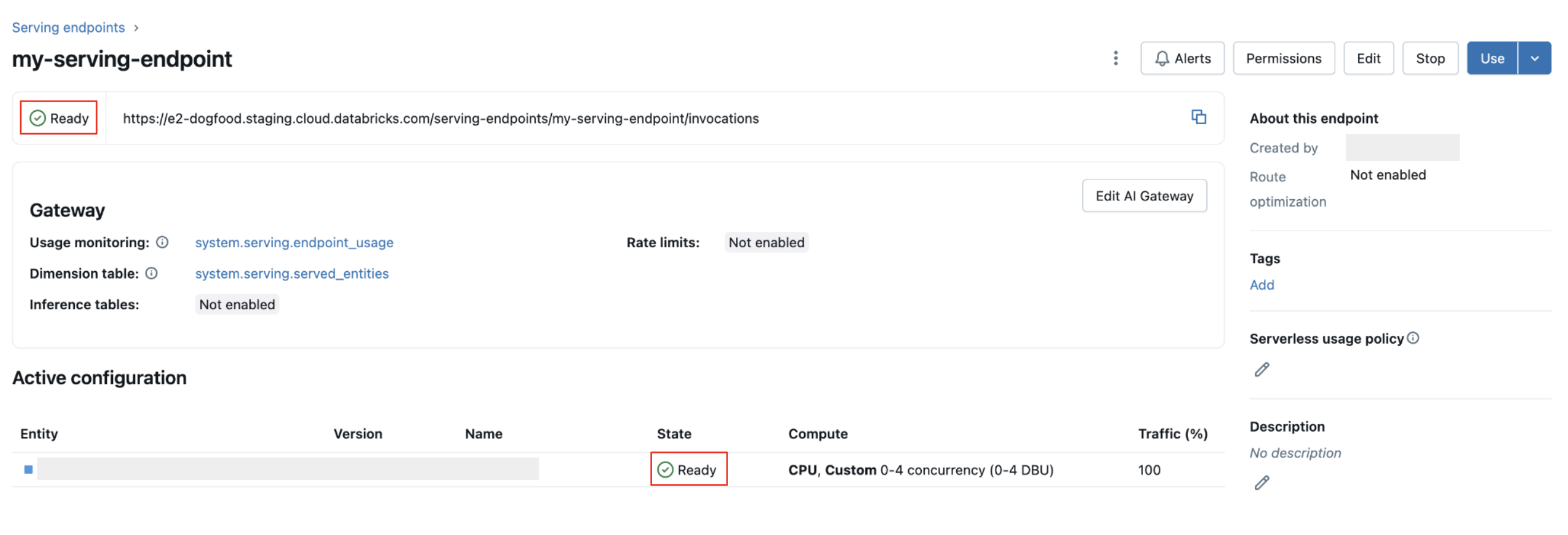

The Serving endpoint state indicator at the top of an endpoint's details page:

GET /api/2.0/serving-endpoints/{name}

In the following example response, the state.ready field is "READY", which means the endpoint is ready to receive traffic. The state.update_state field is NOT_UPDATING and pending_config is no longer returned because the update was finished successfully.

{
  "name": "unity-model-endpoint",
  "creator": "customer@example.com",
  "creation_timestamp": 1666829055000,
  "last_updated_timestamp": 1666829055000,
  "state": {
    "ready": "READY",
    "update_state": "NOT_UPDATING"
  },
  "config": {
    "served_entities": [
      {
        "name": "my-ads-model",
        "entity_name": "myCatalog.mySchema.my-ads-model",
        "entity_version": "1",
        "workload_size": "Small",
        "scale_to_zero_enabled": false,
        "state": {
          "deployment": "DEPLOYMENT_READY",
          "deployment_state_message": ""
        },
        "creator": "customer@example.com",
        "creation_timestamp": 1666829055000
      }
    ],
    "traffic_config": {
      "routes": [
        {
          "served_model_name": "my-ads-model",
          "traffic_percentage": 100
        }
      ]
    },
    "config_version": 1
  },
  "id": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
  "permission_level": "CAN_MANAGE"
}

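- Databricks Workspace Client:

from databricks.sdk import WorkspaceClient

# Create a client that uses the authentication configured for your environment
w = WorkspaceClient()

# Fetch the endpoint by name
endpoint = w.serving_endpoints.get(name="my-endpoint")

# Print whether the endpoint can be queried and the state of its latest config update
print(f"Endpoint state: {endpoint.state.ready}")

print(f"Update state: {endpoint.state.config_update}")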

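- MLflow Deployments SDK:

from mlflow.deployments import get_deploy_client

# Create a deployment client that targets Databricks
client = get_deploy_client("databricks")

# Fetch the endpoint by name
endpoint = client.get_endpoint(endpoint="my-endpoint")

# Print the endpoint's state and current configuration
print(f"Endpoint state: {endpoint['state']}")

print(f"Endpoint config: {endpoint['config']}")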
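When a script needs to wait until an endpoint can be queried, the status check can be wrapped in a polling loop. The following is a minimal sketch, not an official client. The names `wait_until_ready` and `fetch_endpoint` and the timeout values are hypothetical, and the loop assumes the response carries the `state.ready` and `state.update_state` fields shown in the example REST response above.

```python
import time
from typing import Callable


def wait_until_ready(
    fetch_endpoint: Callable[[], dict],
    timeout_s: float = 600.0,
    poll_interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll until the endpoint reports READY with no update in flight.

    fetch_endpoint is a caller-supplied (hypothetical) function that returns
    the parsed JSON body of GET /api/2.0/serving-endpoints/{name}.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        endpoint = fetch_endpoint()
        state = endpoint.get("state", {})
        # Field names follow the example response in this article.
        if state.get("ready") == "READY" and state.get("update_state") == "NOT_UPDATING":
            return endpoint
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Endpoint not ready after {timeout_s} seconds: {state}")
        sleep(poll_interval_s)
```

Passing the fetch function as an argument keeps the loop independent of how the request is made, so the same helper could sit on top of any HTTP client.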

Stop a model serving endpoint

You can temporarily stop a model serving endpoint and start it later. When an endpoint is stopped:

- The resources provisioned for it are shut down.

- The endpoint is not able to serve queries until it is started again.

- Only endpoints that serve custom models and have no in-progress updates can be stopped.

- Stopped endpoints do not count against the resource quota.

- Queries sent to a stopped endpoint return a 400 error.

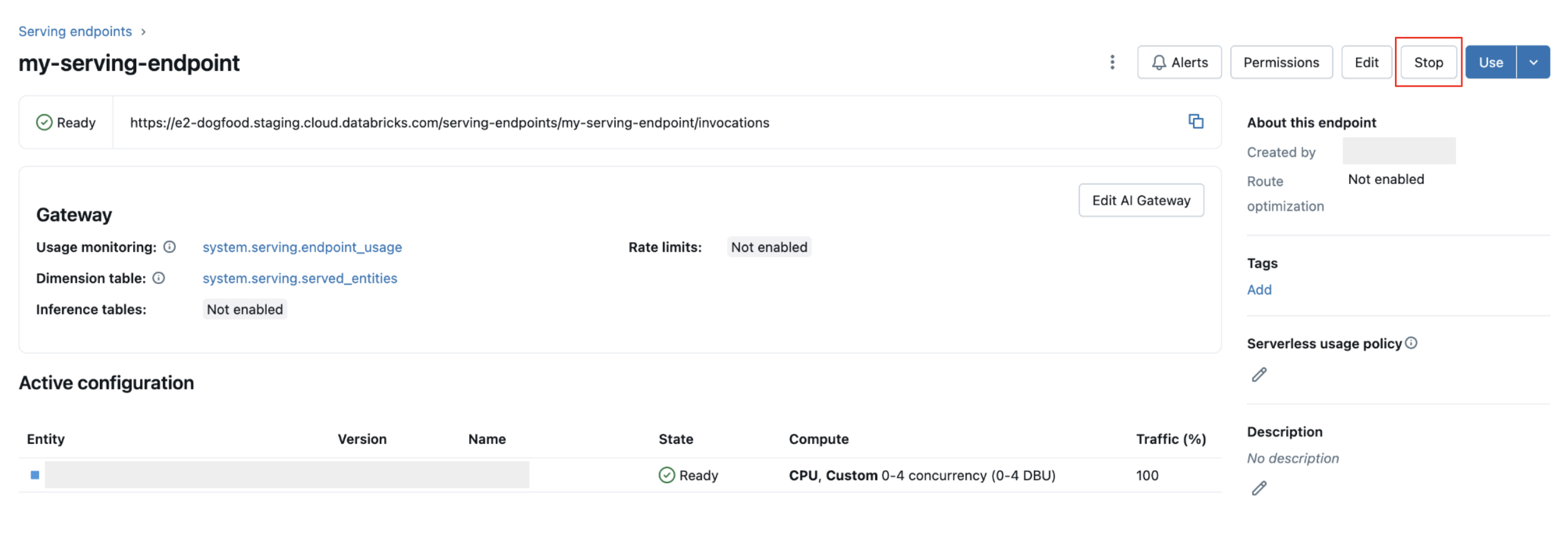

Stop an endpoint

- UI: Click Stop in the upper-right corner.

- REST API: Send the following request.

POST /api/2.0/serving-endpoints/{name}/config:stop

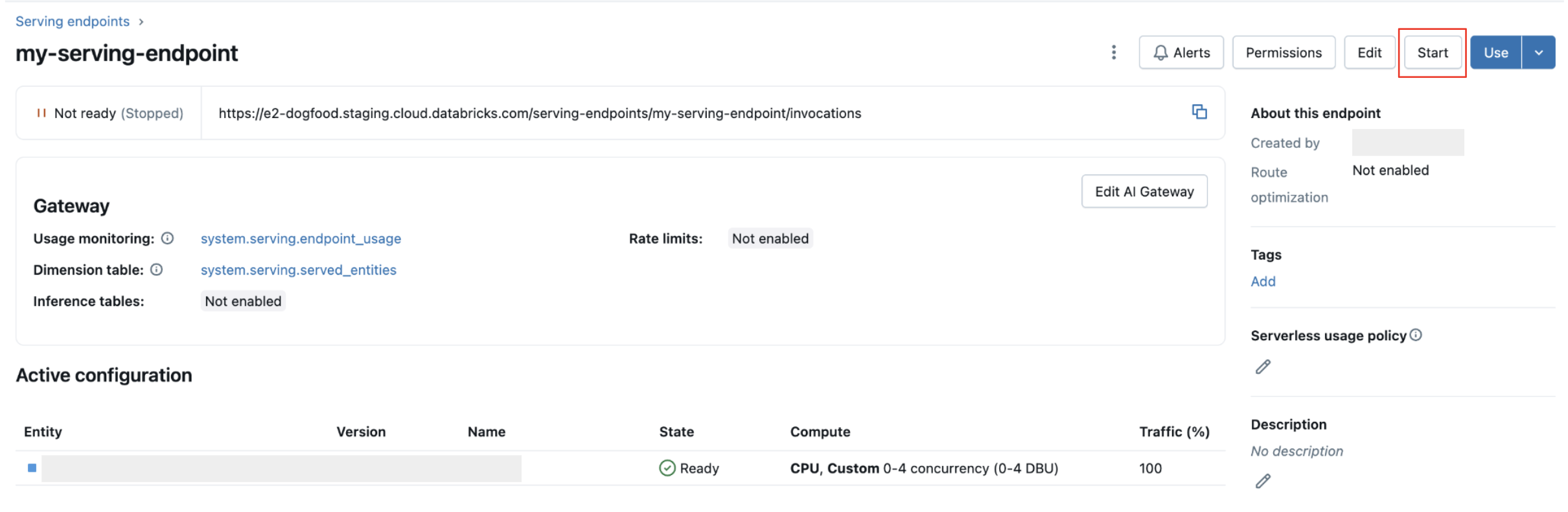

Start an endpoint

Starting an endpoint creates a new config version with the same properties as the existing stopped config.

When you are ready to start a stopped model serving endpoint:

- UI: Click Start in the upper-right corner.

- REST API: Send the following request.

POST /api/2.0/serving-endpoints/{name}/config:start
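The stop and start requests differ only in the final path segment, so one helper can build both. The sketch below uses only the Python standard library. The function name `build_config_action_request`, the workspace URL, and the token value are hypothetical placeholders, and the bearer-token header is assumed to match the one used in the curl example later in this article.

```python
import urllib.parse
import urllib.request


def build_config_action_request(
    host: str, token: str, endpoint_name: str, action: str
) -> urllib.request.Request:
    """Build (but do not send) the POST request that stops or starts an endpoint."""
    if action not in ("stop", "start"):
        raise ValueError(f"action must be 'stop' or 'start', got {action!r}")
    # Percent-encode the endpoint name so it is safe to place in the URL path.
    name = urllib.parse.quote(endpoint_name, safe="")
    url = f"{host.rstrip('/')}/api/2.0/serving-endpoints/{name}/config:{action}"
    return urllib.request.Request(
        url,
        method="POST",
        headers={"Authorization": f"Bearer {token}"},
    )
```

To send the request, pass the returned object to `urllib.request.urlopen`. Building the request separately makes it easy to inspect the URL and headers before anything is sent.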

Delete a model serving endpoint

Deleting an endpoint disables usage and deletes all data associated with the endpoint. You cannot undo deletion.

- UI

- REST API

- MLflow Deployments SDK

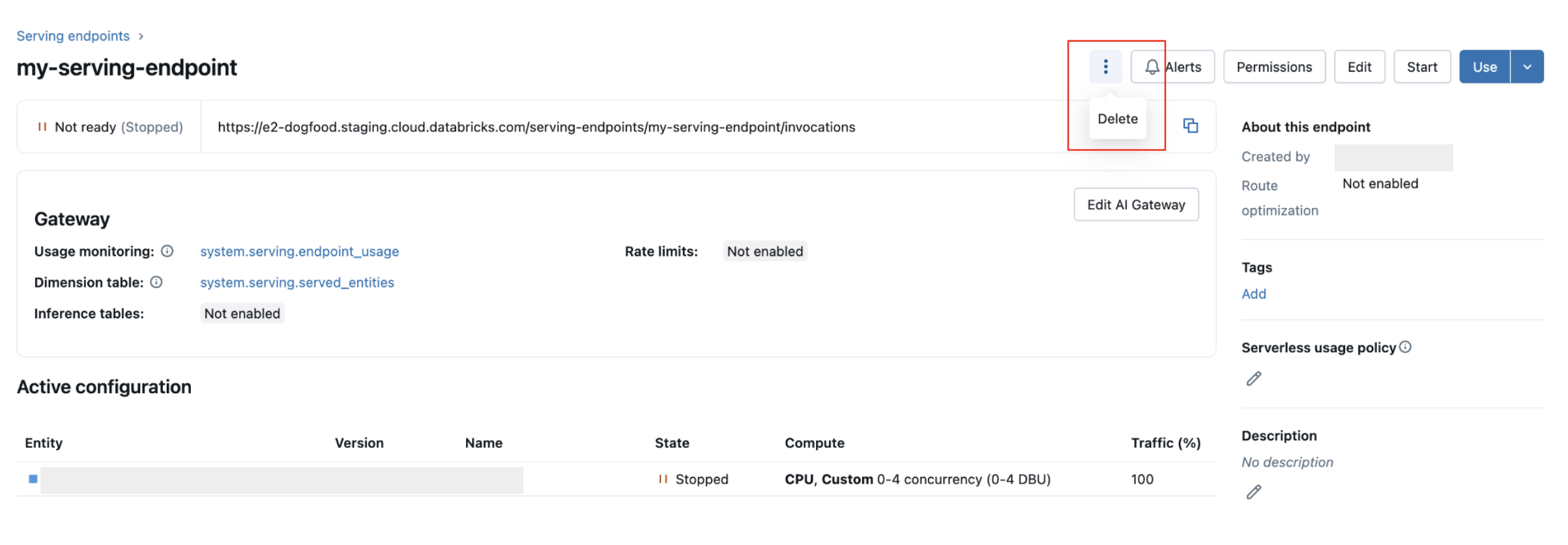

Click the kebab menu at the top and select Delete.

DELETE /api/2.0/serving-endpoints/{name}

from mlflow.deployments import get_deploy_client

client = get_deploy_client("databricks")

client.delete_endpoint(endpoint="chat")

Debug a model serving endpoint

Two types of logs are available to help debug issues with endpoints:

- Model server container build logs: Generated during endpoint initialization when the container is being created. These logs capture the setup phase including downloading the model, installing dependencies, and configuring the runtime environment. Use these logs to debug why an endpoint failed to start or is stuck during deployment.

- Model server logs: Generated during runtime when the endpoint is actively serving predictions. These logs capture incoming requests, model inference execution, runtime errors, and application-level logging from your model code. Use these logs to debug issues with predictions or investigate query failures.

Both log types are also accessible from the Endpoints UI in the Logs tab.

Get container build logs

To get the build logs for a served model, use the following request. See Debugging guide for Model Serving for more information.

GET /api/2.0/serving-endpoints/{name}/served-models/{served-model-name}/build-logs

{

"config_version": 1 // optional

}

Get model server logs

To get the model server logs for a served model, use the following request:

GET /api/2.0/serving-endpoints/{name}/served-models/{served-model-name}/logs

{

"config_version": 1 // optional

}
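The choice between the two log requests follows from where the served model is in its lifecycle. The sketch below encodes that choice as a small helper. The function name `log_request_path` is hypothetical, and treating any deployment state other than `DEPLOYMENT_READY` as a reason to read the build logs is an assumption made for illustration, not documented behavior.

```python
from urllib.parse import quote


def log_request_path(endpoint_name: str, served_model_name: str, deployment_state: str) -> str:
    """Return the REST path of the log request that matches the deployment state."""
    base = (
        f"/api/2.0/serving-endpoints/{quote(endpoint_name, safe='')}"
        f"/served-models/{quote(served_model_name, safe='')}"
    )
    # Assumption: a served model that has not reached DEPLOYMENT_READY most
    # likely failed or stalled during container build, so start with build logs.
    if deployment_state == "DEPLOYMENT_READY":
        return f"{base}/logs"
    return f"{base}/build-logs"
```

The `deployment_state` argument corresponds to the `state.deployment` value of a served entity in the endpoint status response.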

Manage permissions on a model serving endpoint

You must have at least the CAN MANAGE permission on a serving endpoint to modify permissions. For more information on the permission levels, see Serving endpoint ACLs.

Get the list of permissions on the serving endpoint.

- UI: Click the Permissions button at the top right of the UI.

- Databricks CLI: Run the following command.

databricks permissions get serving-endpoints <endpoint-id>

Grant user jsmith@example.com the CAN QUERY permission on the serving endpoint.

databricks permissions update serving-endpoints <endpoint-id> --json '{
  "access_control_list": [
    {
      "user_name": "jsmith@example.com",
      "permission_level": "CAN_QUERY"
    }
  ]
}'

You can also modify serving endpoint permissions using the Permissions API.
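When several users need access, writing the JSON payload by hand is error-prone. The following sketch generates the CLI command shown above from a mapping of user names to permission levels. The function name `build_grant_command` is hypothetical, and the helper does not check that the permission levels are valid.

```python
import json
import shlex
from typing import Dict


def build_grant_command(endpoint_id: str, grants: Dict[str, str]) -> str:
    """Return a shell-safe `databricks permissions update` command string."""
    payload = {
        "access_control_list": [
            {"user_name": user, "permission_level": level}
            for user, level in grants.items()
        ]
    }
    parts = [
        "databricks", "permissions", "update", "serving-endpoints",
        shlex.quote(endpoint_id),
        "--json",
        # shlex.quote wraps the JSON so the shell passes it as one argument.
        shlex.quote(json.dumps(payload)),
    ]
    return " ".join(parts)
```

For example, `build_grant_command("<endpoint-id>", {"jsmith@example.com": "CAN_QUERY"})` produces a command equivalent to the one above.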

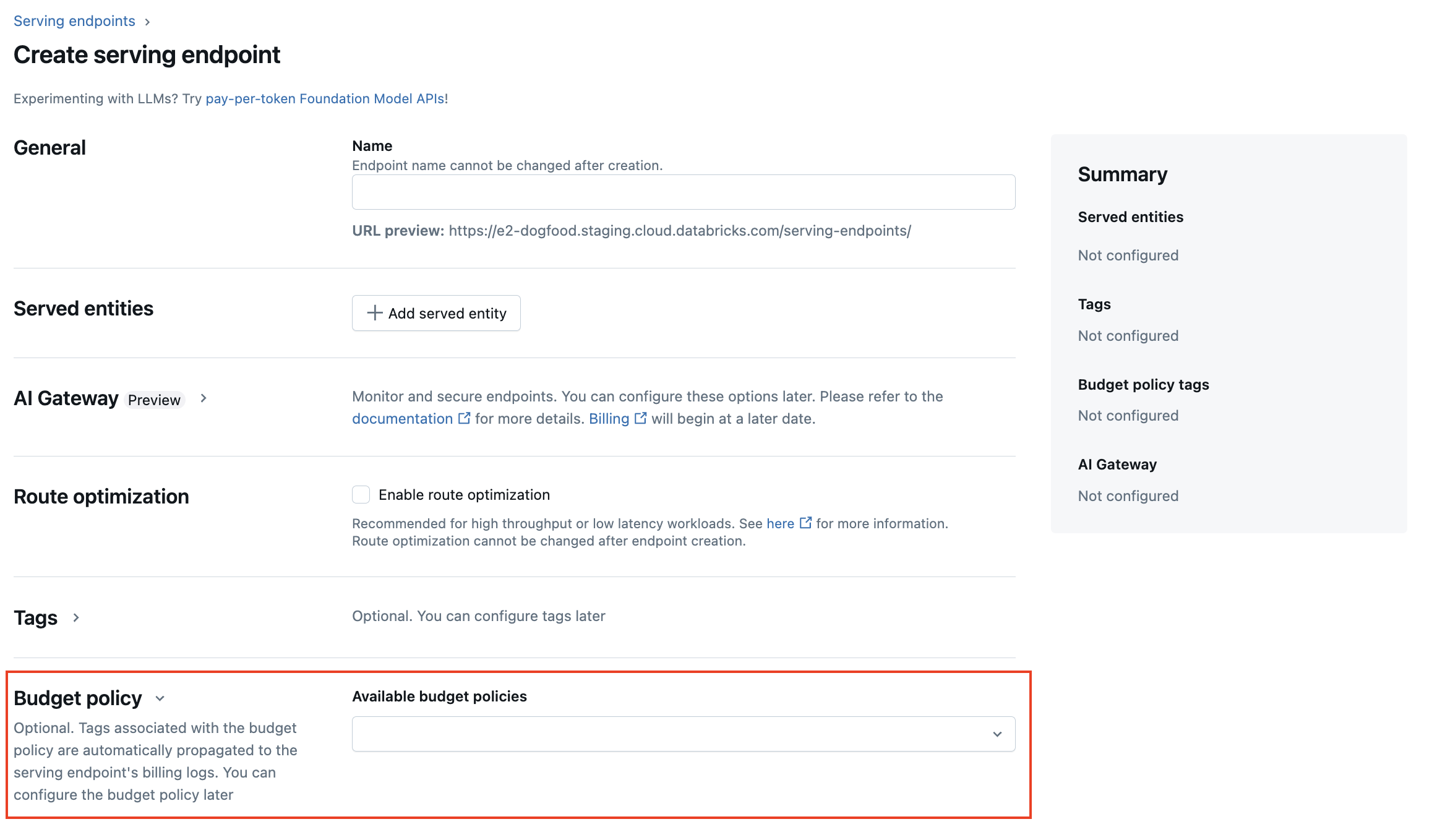

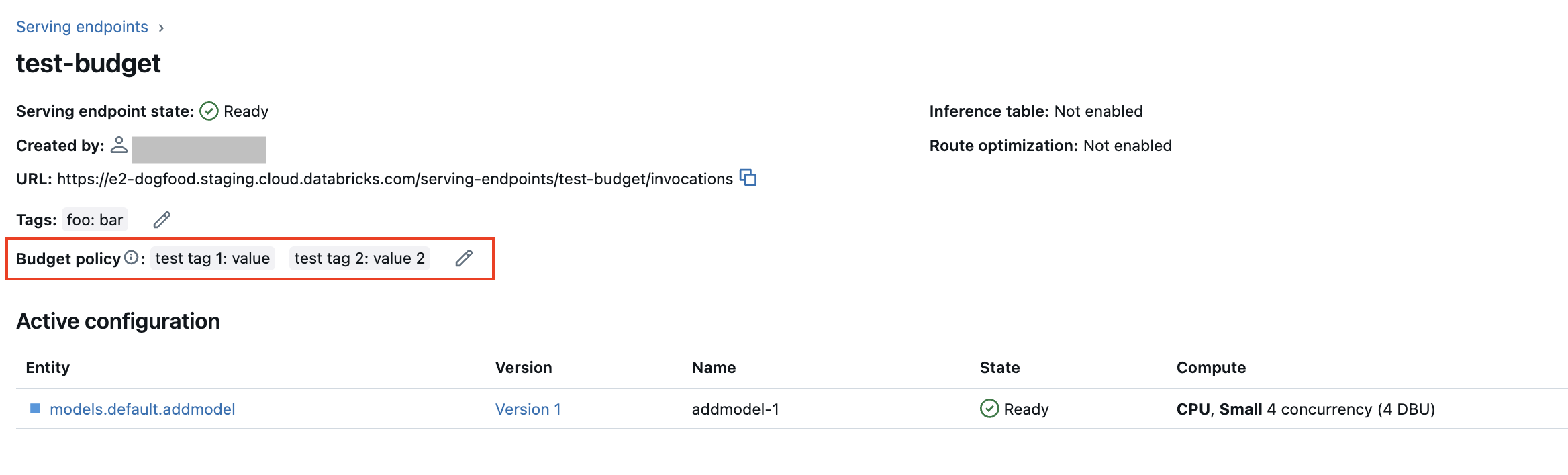

Add a serverless budget policy for a model serving endpoint

This feature is in Public Preview and is not available for serving endpoints that serve External models.

Serverless budget policies allow your organization to apply custom tags on serverless usage for granular billing attribution. If your workspace uses serverless budget policies to attribute serverless usage, you can add a serverless budget policy to your model serving endpoints. See Attribute usage with serverless budget policies.

During model serving endpoint creation, you can select your endpoint's serverless budget policy from the Budget policy menu in the Serving UI. If you have a serverless budget policy assigned to you, all endpoints that you create are assigned that serverless budget policy, even if you do not select a policy from the Budget policy menu.

If you have the CAN MANAGE permission on an existing endpoint, you can edit and add a serverless budget policy to that endpoint from the Endpoint details page in the UI.

If you've been assigned a serverless budget policy, your existing endpoints are not automatically tagged with your policy. You must manually update existing endpoints if you want to attach a serverless budget policy to them.

Get a model serving endpoint schema

Support for serving endpoint query schemas is in Public Preview. This functionality is available in Model Serving regions.

A serving endpoint query schema is a formal description of the serving endpoint using the standard OpenAPI specification in JSON format. It contains information about the endpoint including the endpoint path, details for querying the endpoint like the request and response body format, and data type for each field. This information can be helpful for reproducibility scenarios or when you need information about the endpoint, but you are not the original endpoint creator or owner.

To get the model serving endpoint schema, the served model must have a model signature logged and the endpoint must be in a READY state.

The following examples demonstrate how to programmatically get the model serving endpoint schema using the REST API. For feature serving endpoint schemas, see Feature Serving endpoints.

The schema returned by the API is in the format of a JSON object that follows the OpenAPI specification.

ACCESS_TOKEN="<endpoint-token>"

ENDPOINT_NAME="<endpoint name>"

curl "https://example.databricks.com/api/2.0/serving-endpoints/$ENDPOINT_NAME/openapi" -H "Authorization: Bearer $ACCESS_TOKEN" -H "Content-Type: application/json"

Schema response details

The response is an OpenAPI specification in JSON format, typically including fields like openapi, info, servers and paths. Since the schema response is a JSON object, you can parse it using common programming languages, and generate client code from the specification using third-party tools.

You can also visualize the OpenAPI specification using third-party tools like Swagger Editor.

The main fields of the response include:

- The info.title field shows the name of the serving endpoint.

- The servers field always contains one object, typically with the url field, which is the base URL of the endpoint.

- The paths object in the response contains all supported paths for an endpoint. The keys in the object are the path URLs. Each path can support multiple formats of inputs. These inputs are listed in the oneOf field.

The following is an example endpoint schema response:

{
  "openapi": "3.1.0",
  "info": {
    "title": "example-endpoint",
    "version": "2"
  },
  "servers": [{ "url": "https://example.databricks.com/serving-endpoints/example-endpoint" }],
  "paths": {
    "/served-models/vanilla_simple_model-2/invocations": {
      "post": {
        "requestBody": {
          "content": {
            "application/json": {
              "schema": {
                "oneOf": [
                  {
                    "type": "object",
                    "properties": {
                      "dataframe_split": {
                        "type": "object",
                        "properties": {
                          "columns": {
                            "description": "required fields: int_col",
                            "type": "array",
                            "items": {
                              "type": "string",
                              "enum": ["int_col", "float_col", "string_col"]
                            }
                          },
                          "data": {
                            "type": "array",
                            "items": {
                              "type": "array",
                              "prefixItems": [
                                {
                                  "type": "integer",
                                  "format": "int64"
                                },
                                {
                                  "type": "number",
                                  "format": "double"
                                },
                                {
                                  "type": "string"
                                }
                              ]
                            }
                          }
                        }
                      },
                      "params": {
                        "type": "object",
                        "properties": {
                          "sentiment": {
                            "type": "number",
                            "format": "double",
                            "default": "0.5"
                          }
                        }
                      }
                    },
                    "examples": [
                      {
                        "columns": ["int_col", "float_col", "string_col"],
                        "data": [
                          [3, 10.4, "abc"],
                          [2, 20.4, "xyz"]
                        ]
                      }
                    ]
                  },
                  {
                    "type": "object",
                    "properties": {
                      "dataframe_records": {
                        "type": "array",
                        "items": {
                          "required": ["int_col", "float_col", "string_col"],
                          "type": "object",
                          "properties": {
                            "int_col": {
                              "type": "integer",
                              "format": "int64"
                            },
                            "float_col": {
                              "type": "number",
                              "format": "double"
                            },
                            "string_col": {
                              "type": "string"
                            },
                            "becx_col": {
                              "type": "object",
                              "format": "unknown"
                            }
                          }
                        }
                      },
                      "params": {
                        "type": "object",
                        "properties": {
                          "sentiment": {
                            "type": "number",
                            "format": "double",
                            "default": "0.5"
                          }
                        }
                      }
                    }
                  }
                ]
              }
            }
          }
        },
        "responses": {
          "200": {
            "description": "Successful operation",
            "content": {
              "application/json": {
                "schema": {
                  "type": "object",
                  "properties": {
                    "predictions": {
                      "type": "array",
                      "items": {
                        "type": "number",
                        "format": "double"
                      }
                    }
                  }
                }
              }
            }
          }
        }
      }
    }
  }
}
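Because the schema is plain JSON, a few lines of code are enough to find out which input formats each path accepts. The sketch below walks the paths object and collects the top-level property names of every oneOf option. The function name `list_input_formats` is hypothetical, and the traversal assumes the response has the same shape as the example above (a post operation with an application/json request body).

```python
from typing import Dict, List


def list_input_formats(spec: dict) -> Dict[str, List[str]]:
    """Map each path in an endpoint schema to the input formats it accepts."""
    formats: Dict[str, List[str]] = {}
    for path, operations in spec.get("paths", {}).items():
        schema = (
            operations.get("post", {})
            .get("requestBody", {})
            .get("content", {})
            .get("application/json", {})
            .get("schema", {})
        )
        names: List[str] = []
        for option in schema.get("oneOf", []):
            for key in option.get("properties", {}):
                # "params" carries optional inference parameters, not an input format.
                if key != "params" and key not in names:
                    names.append(key)
        formats[path] = names
    return formats
```

Applied to the example response, this returns the single invocations path mapped to `["dataframe_split", "dataframe_records"]`.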