May 2025

These features and Databricks platform improvements were released in May 2025.

Releases are staged. Your Databricks account might not be updated until a week or more after the initial release date.

Improved UI for managing notebook dashboards

May 30, 2025

Quickly navigate between a notebook and its associated dashboards by clicking in the top right.

See Navigate between a notebook dashboard and a notebook.

SQL authoring improvements

May 30, 2025

The following improvements were made to the SQL editing experience in the SQL editor and notebooks:

- Filters applied to result tables now also affect visualizations, enabling interactive exploration without modifying the underlying query or dataset. To learn more about filters, see Filter results.

- In a SQL notebook, you can now create a new query from a filtered results table or visualization. See Create a query from filtered results.

- You can hover over the * in a SELECT * query to expand the columns in the queried table.

- Custom SQL formatting settings are now available in the new SQL editor and the notebook editor. Click View > Developer Settings > SQL Format. See Customize SQL formatting.

Metric views are in Public Preview

May 29, 2025

Unity Catalog metric views provide a centralized way to define and manage consistent, reusable, and governed core business metrics. They abstract complex business logic into a centralized definition, enabling organizations to define key performance indicators once and use them consistently across reporting tools like dashboards, Genie spaces, and alerts. To work with metric views, use a SQL warehouse running on the Preview channel (2025.16) or another compute resource running Databricks Runtime 16.4 or above. See Unity Catalog metric views.

Agent Bricks: Knowledge Assistant for domain-specialized chatbots is in Beta

May 29, 2025

Agent Bricks provides a simple approach to build and optimize domain-specific, high-quality AI agent systems for common AI use cases. In Beta, Agent Bricks: Knowledge Assistant supports creating a question-and-answer chatbot over your documents and improving its quality based on natural language feedback from your subject matter experts.

See Use Agent Bricks: Knowledge Assistant to create a high-quality chatbot over your documents.

Workspaces system table now available (Public Preview)

May 28, 2025

You can now use the system.access.workspaces_latest table to monitor the latest state of all active workspaces in your account.

Join this table with other system tables to analyze reliability, performance, and cost across your account's workspaces. See Workspaces system table reference.

Claude Sonnet 4 and Claude Opus 4 models now available on Mosaic AI Model Serving

May 27, 2025

The Anthropic Claude Sonnet 4 and Anthropic Claude Opus 4 models are now available in Mosaic AI Model Serving as Databricks-hosted foundation models.

These models are available using Foundation Model APIs pay-per-token in US regions only.

FedRAMP Moderate support in additional AWS regions

May 23, 2025

FedRAMP Moderate compliance controls are now available in the following AWS regions:

- us-east-2 (Ohio)

- us-west-1 (Northern California)

These regions support FedRAMP Moderate using classic compute resources only. FedRAMP Moderate supports serverless compute in us-east-1 and us-west-2.

For more information, see FedRAMP Moderate.

New alerts in Beta

May 22, 2025

A new version of Databricks SQL alerts is now in Beta. You can use alerts to periodically run queries, evaluate defined conditions, and send notifications if a condition is met. This version simplifies creating and managing alerts by consolidating query setup, conditions, schedules, and notification destinations into a single interface. You can still use legacy alerts alongside the new version. See Databricks SQL alerts.
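To make the run, evaluate, and notify cycle concrete, here is a minimal Python sketch of what one scheduled alert check does conceptually. It is not the Databricks alerts API: AlertCondition, evaluate_alert, run_scheduled_check, and the failed_jobs column are hypothetical names, and the query is stubbed with a function that returns a single row.

```python
import operator
from dataclasses import dataclass
from typing import Callable

# Hypothetical comparison operators an alert condition might support.
OPERATORS: dict[str, Callable[[float, float], bool]] = {
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
    "==": operator.eq,
    "!=": operator.ne,
}


@dataclass
class AlertCondition:
    """Hypothetical condition: compare one column of the query result to a threshold."""
    column: str
    op: str
    threshold: float


def evaluate_alert(row: dict[str, float], condition: AlertCondition) -> bool:
    """Return True when the condition is met, meaning a notification should be sent."""
    value = row[condition.column]
    return OPERATORS[condition.op](value, condition.threshold)


def run_scheduled_check(
    run_query: Callable[[], dict[str, float]],
    condition: AlertCondition,
    notify: Callable[[str], None],
) -> bool:
    """One scheduled evaluation: run the query, test the condition, notify if triggered."""
    row = run_query()
    triggered = evaluate_alert(row, condition)
    if triggered:
        notify(
            f"{condition.column} {condition.op} {condition.threshold} "
            f"(value={row[condition.column]})"
        )
    return triggered


# Stubbed query result: fire when more than 5 jobs failed.
condition = AlertCondition(column="failed_jobs", op=">", threshold=5)
run_scheduled_check(lambda: {"failed_jobs": 7}, condition, notify=print)
```

In the new alerts UI, the query, condition, schedule, and destination in this sketch correspond to settings you configure in one place rather than to code you write.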

Improved admin billing settings

May 21, 2025

Account admins in pay-as-you-go Databricks accounts now have more control over payment management. The updated Subscription and billing tab on the account console includes the following improvements:

- Add and update payment methods, including credit cards and AWS Marketplace accounts.

- Switch between payment methods at any time.

- View active and expired credits, including free trial credits.

See Manage your subscription and billing.

Dashboards, alerts, and queries are supported as workspace files

May 20, 2025

Dashboards, alerts, and queries are now supported as workspace files, which means you can programmatically interact with these Databricks objects like any other file, from anywhere the workspace filesystem is available. See What are workspace files? and Programmatically interact with workspace files.

Pipelines system table is now available (Public Preview)

May 20, 2025

The system.lakeflow.pipelines table is a slowly changing dimension Type 2 (SCD2) table that tracks all pipelines created in your Databricks account.
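Because an SCD2 table keeps one row per version of each pipeline, a common first step is collapsing it to the current state. The following is a minimal Python sketch of that logic over in-memory rows. It assumes the table exposes pipeline_id, change_time, and delete_time columns (check the pipelines system table reference for the actual schema), and the example rows are made up. In practice you would express the same logic in SQL against system.lakeflow.pipelines.

```python
from datetime import datetime
from typing import Any, Optional

Row = dict[str, Any]


def latest_pipeline_state(rows: list[Row]) -> dict[str, Row]:
    """Collapse SCD2 history to the newest version of each pipeline.

    Assumes each row has pipeline_id, change_time (comparable, e.g. datetime),
    and delete_time (None while the pipeline still exists).
    """
    latest: dict[str, Row] = {}
    for row in rows:
        current: Optional[Row] = latest.get(row["pipeline_id"])
        # Keep whichever version has the most recent change_time.
        if current is None or row["change_time"] > current["change_time"]:
            latest[row["pipeline_id"]] = row
    # Drop pipelines whose newest version records a deletion.
    return {pid: r for pid, r in latest.items() if r.get("delete_time") is None}


# Made-up history: p1 was renamed, p2 was later deleted.
example_rows = [
    {"pipeline_id": "p1", "name": "orders_etl", "change_time": datetime(2025, 5, 1), "delete_time": None},
    {"pipeline_id": "p1", "name": "orders_etl_v2", "change_time": datetime(2025, 5, 10), "delete_time": None},
    {"pipeline_id": "p2", "name": "scratch", "change_time": datetime(2025, 5, 2), "delete_time": None},
    {"pipeline_id": "p2", "name": "scratch", "change_time": datetime(2025, 5, 3), "delete_time": datetime(2025, 5, 3)},
]
current = latest_pipeline_state(example_rows)
print(sorted(current))  # ['p1']
```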

Databricks Asset Bundles in the workspace (Public Preview)

May 19, 2025

Collaborating on Databricks Asset Bundles with other users in your organization is now easier with bundles in the workspace, which allows workspace users to edit, commit, test, and deploy bundle updates through the UI.

See Collaborate on bundles in the workspace.

Workflow task repair now respects transitive dependencies

May 19, 2025

Previously, repaired tasks were unblocked once their direct dependencies completed. Now, repaired tasks wait for all transitive dependencies. For example, in a graph A → B → C, repairing A and C will block C until A finishes.
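The difference between the old and new rules can be modeled with a small graph walk. This Python sketch is a simplified model of the behavior described above, not the Databricks Jobs scheduler; ancestors and repair_waits are hypothetical helpers, and the graph maps each task to its direct dependencies.

```python
from collections import deque


def ancestors(graph: dict[str, list[str]], task: str) -> set[str]:
    """All transitive upstream tasks of `task`; `graph` maps task -> direct dependencies."""
    seen: set[str] = set()
    queue = deque(graph.get(task, []))
    while queue:
        dep = queue.popleft()
        if dep not in seen:
            seen.add(dep)
            queue.extend(graph.get(dep, []))
    return seen


def repair_waits(
    graph: dict[str, list[str]], repaired: set[str], transitive: bool = True
) -> dict[str, set[str]]:
    """For each repaired task, the other repaired tasks it must wait for."""
    waits: dict[str, set[str]] = {}
    for task in repaired:
        # Old rule: only direct dependencies; new rule: every upstream task.
        upstream = ancestors(graph, task) if transitive else set(graph.get(task, []))
        waits[task] = upstream & repaired
    return waits


# A -> B -> C, expressed as task -> direct dependencies.
dag = {"A": [], "B": ["A"], "C": ["B"]}
print(repair_waits(dag, {"A", "C"}, transitive=False))  # C waits for nothing (B already succeeded)
print(repair_waits(dag, {"A", "C"}))  # C waits for A
```

Under the old rule, C's only direct dependency (B) was not being repaired, so C could start immediately and read stale output from B. The transitive rule makes C wait for the repaired A.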

Move streaming tables and materialized views between pipelines

May 16, 2025

Tables created by DLT in Unity Catalog ETL pipelines can now be moved from one pipeline to another. This feature is in Public Preview and is only available to participating customers. Reach out to your Databricks account manager to participate in the preview.

Serverless compute is now available in us-west-1

May 14, 2025

Workspaces in us-west-1 are now eligible to use serverless compute. This includes serverless SQL warehouses and serverless compute for notebooks, jobs, and DLT. See Connect to serverless compute.

Korean Financial Security Institute (K-FSI) compliance controls

May 14, 2025

Korean Financial Security Institute (K-FSI) compliance controls are now available to help support your workspace's alignment with Korean financial regulations. The K-FSI sets security and compliance standards for financial institutions in South Korea. See Korean Financial Security Institute (K-FSI) compliance controls.

Databricks Apps (Generally Available)

May 13, 2025

Databricks Apps is now generally available (GA). This feature lets you build and run interactive full-stack applications directly in the Databricks workspace. Apps run on managed infrastructure and integrate with Delta Lake, notebooks, ML models, and Unity Catalog.

See Databricks Apps.

Databricks Runtime 16.4 LTS is GA

May 13, 2025

Databricks Runtime 16.4 and Databricks Runtime 16.4 ML are now generally available.

See Databricks Runtime 16.4 LTS and Databricks Runtime 16.4 LTS for Machine Learning.

Model Serving CPU and GPU workloads now support compliance security profile standards

May 13, 2025

Model Serving CPU and GPU workloads now support the following compliance standards offered through the compliance security profile:

- HIPAA

- PCI-DSS

- UK Cyber Essentials Plus

See Compliance security profile standards: CPU and GPU workloads for region availability of these standards.

Provisioned throughput workloads now support compliance security profile standards

May 13, 2025

Foundation Model APIs provisioned throughput workloads now support the following compliance standards offered through the compliance security profile:

- HIPAA

- PCI-DSS

- UK Cyber Essentials Plus

See Compliance security profile standards: Foundation Model APIs workloads for region availability of these standards.

Databricks multi-factor authentication (MFA) is now generally available

May 13, 2025

Account admins can now enable multi-factor authentication (MFA) directly in Databricks, allowing them to either recommend or require MFA for users at login. See Configure multi-factor authentication.

Databricks JDBC driver 2.7.3

May 12, 2025

The Databricks JDBC Driver version 2.7.3 is now available for download from the JDBC driver download page.

This release includes the following enhancements and new features:

- Added support for Azure Managed Identity OAuth 2.0 authentication. To enable this, set the Auth_Flow property to 3.

- Added support for OAuth token exchange for identity providers (IdPs) other than the host. OAuth access tokens (including BYOT) are exchanged for a Databricks access token.

- OAuth browser authentication (Auth_Flow=2) now supports token caching on Linux and Mac operating systems.

- Added support for the VOID, Variant, and TIMESTAMP_NTZ data types in the getColumns() and getTypeInfo() APIs.

- The driver now lists columns with unknown or unsupported types and maps them to SQL VARCHAR in the getColumns() metadata API.

- Added support for the cloud.databricks.us and cloud.databricks.mil domains when connecting to Databricks using OAuth (AuthMech=11).

- Upgraded to netty-buffer 4.1.119 and netty-common 4.1.119 (previously 4.1.115).

This release resolves the following issues:

- Compatibility issues when deserializing Apache Arrow data with Java 11 or higher JVMs.

- Issues with dates and timestamps before the beginning of the Gregorian calendar when connecting to specific Spark versions with Arrow result set serialization.

For complete configuration information, see the Databricks JDBC Driver Guide installed with the driver download package.
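The AuthMech and Auth_Flow values mentioned above are set as connection properties. The following Python sketch assembles a JDBC URL with them. The jdbc:databricks:// prefix and the transportMode, ssl, and httpPath properties reflect common driver usage but are assumptions here, databricks_jdbc_url is a hypothetical helper, and the host and HTTP path are placeholders, so confirm every property name against the Databricks JDBC Driver Guide.

```python
def databricks_jdbc_url(host: str, http_path: str, auth_flow: int, port: int = 443) -> str:
    """Build a JDBC URL for OAuth (AuthMech=11) with the given Auth_Flow value.

    From the release notes: Auth_Flow=2 is OAuth browser authentication and
    Auth_Flow=3 is Azure Managed Identity. transportMode and ssl are assumed
    common settings, not taken from these notes.
    """
    props = {
        "transportMode": "http",
        "ssl": "1",
        "httpPath": http_path,
        "AuthMech": "11",
        "Auth_Flow": str(auth_flow),
    }
    # Properties are appended as semicolon-separated key=value pairs.
    prop_str = ";".join(f"{key}={value}" for key, value in props.items())
    return f"jdbc:databricks://{host}:{port};{prop_str}"


# Placeholder host and warehouse path; browser-based OAuth with token caching.
print(databricks_jdbc_url("example.cloud.databricks.com", "/sql/1.0/warehouses/abc123", auth_flow=2))
```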

Updated lineage system tables schema

May 11, 2025

The lineage system tables (system.access.column_lineage and system.access.table_lineage) have been updated to better log entity information.

- The entity_metadata column replaces entity_type, entity_run_id, and entity_id, which have been deprecated.

- The record_id column is a new primary key for the lineage record.

- The event_id column logs an identifier for the lineage event, which can be shared by multiple rows if they were generated by the same event.

- The statement_id column logs the query statement ID of the query that generated the lineage event. It is a foreign key that can be joined with the system.query.history table.

For a full schema of these tables, see Lineage system tables reference.
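To show how event_id and statement_id relate, this Python sketch groups lineage rows by event and attaches the generating statement, mirroring a join to system.query.history on statement_id. It runs over made-up, in-memory rows; lineage_by_event is a hypothetical helper, and the source_table_full_name, target_table_full_name, and statement_text column names are assumptions to verify against the lineage and query history schemas.

```python
from collections import defaultdict
from typing import Any

Row = dict[str, Any]


def lineage_by_event(lineage_rows: list[Row], query_history: list[Row]) -> dict[str, Row]:
    """Group lineage rows by event_id and attach the statement that produced them."""
    statements = {q["statement_id"]: q for q in query_history}
    grouped: dict[str, list[Row]] = defaultdict(list)
    for row in lineage_rows:
        grouped[row["event_id"]].append(row)

    events: dict[str, Row] = {}
    for event_id, rows in grouped.items():
        # Assumption: all rows of one event come from the same statement.
        stmt = statements.get(rows[0]["statement_id"])
        events[event_id] = {
            "edges": [(r["source_table_full_name"], r["target_table_full_name"]) for r in rows],
            "statement_text": stmt["statement_text"] if stmt else None,
        }
    return events


# Made-up example: one INSERT reads two tables, producing two lineage rows for one event.
lineage = [
    {"record_id": "r1", "event_id": "e1", "statement_id": "s1",
     "source_table_full_name": "main.raw.orders", "target_table_full_name": "main.gold.daily"},
    {"record_id": "r2", "event_id": "e1", "statement_id": "s1",
     "source_table_full_name": "main.raw.customers", "target_table_full_name": "main.gold.daily"},
]
history = [{"statement_id": "s1", "statement_text": "INSERT INTO main.gold.daily SELECT ..."}]
print(lineage_by_event(lineage, history))
```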

You can now collaborate with multiple parties with Databricks Clean Rooms

May 14, 2025

Databricks Clean Rooms now supports:

- Up to 10 collaborators for more complex, multi-party data projects.

- A new notebook approval workflow that enhances security and compliance by allowing designated runners and requiring explicit approval before execution.

- Auto-approval options for trusted partners.

- Difference views for easy review and auditing.

These updates enable more secure, scalable, and auditable collaboration.

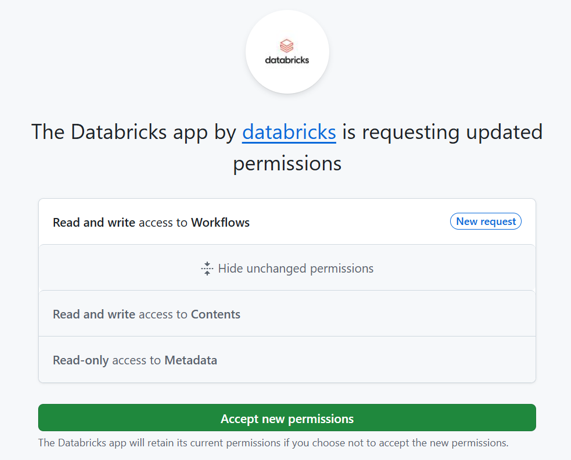

Databricks GitHub App adds workflow scope to support authoring GitHub Actions

May 9, 2025

Because of a recent change, you may receive an email requesting the Read and write access to Workflows scope for the Databricks GitHub app. This change makes the scope of the Databricks GitHub app consistent with the required scope of other supported authentication methods and allows users to commit GitHub Actions from Databricks Git folders using the Databricks GitHub app for authorization.

If you are the owner of a Databricks account where the Databricks GitHub app is installed and configured to support OAuth, you may receive a notification email from GitHub titled "Databricks is requesting updated permissions." (This is a legitimate email request from Databricks.) Accept the new permissions to enable committing GitHub Actions from Databricks Git folders with the Databricks GitHub app.

Automatically provision users (JIT) GA

May 9, 2025

You can now enable just-in-time (JIT) provisioning to automatically create new user accounts during first-time authentication. When a user logs in to Databricks for the first time using single sign-on (SSO), Databricks checks if the user already has an account. If not, Databricks instantly provisions a new user account using details from the identity provider. See Automatically provision users (JIT).
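The first-login flow described above amounts to a lookup-or-create step. This is a conceptual Python sketch only, not how Databricks implements JIT provisioning; UserDirectory, sso_login, and the email and name claim keys are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class UserDirectory:
    """Hypothetical in-memory user store standing in for the Databricks account."""
    users: dict[str, dict[str, str]] = field(default_factory=dict)
    jit_enabled: bool = True

    def sso_login(self, idp_claims: dict[str, str]) -> dict[str, str]:
        """Handle an SSO login; create the user on first login if JIT is enabled."""
        email = idp_claims["email"]
        if email in self.users:
            # Returning user: nothing to provision.
            return self.users[email]
        if not self.jit_enabled:
            raise PermissionError(f"{email} has no account and JIT provisioning is off")
        # First-time login: provision a user from identity-provider details.
        user = {"email": email, "display_name": idp_claims.get("name", email)}
        self.users[email] = user
        return user


directory = UserDirectory()
directory.sso_login({"email": "ada@example.com", "name": "Ada"})  # creates the user
directory.sso_login({"email": "ada@example.com", "name": "Ada"})  # reuses the existing user
print(len(directory.users))  # 1
```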

Query snippets are now available in the new SQL editor, notebooks, files, and dashboards

May 9, 2025

Query snippets are segments of queries that you can share and trigger using autocomplete. You can now create query snippets through the View menu in the new SQL editor, and also in the notebook and file editors. You can use your query snippets in the SQL editor, notebook SQL cells, SQL files, and SQL datasets in dashboards.

See Query snippets.

You can now create views in ETL pipelines

May 8, 2025

The CREATE VIEW SQL command is now available in ETL pipelines. You can create a dynamic view of your data. See CREATE VIEW (pipelines).

Configure Python syntax highlighting in Databricks notebooks

May 8, 2025

You can now configure Python syntax highlighting in notebooks by placing a pyproject.toml file in the notebook's ancestor path or your home folder. Through the pyproject.toml file, you can configure ruff, pylint, pyright, and flake8 linters, as well as disable Databricks-specific rules. This configuration is supported for clusters running Databricks Runtime 16.4 or above, or Client 3.0 or above.

See Configure Python syntax highlighting.
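As an illustration, the following Python sketch writes a pyproject.toml containing a small ruff configuration into a folder. The selected and ignored rules are arbitrary examples, write_pyproject is a hypothetical helper, and the demo writes to a temporary directory. For the settings to affect a notebook, the file would need to be placed in the notebook's ancestor path or your home folder, as described above.

```python
import tempfile
from pathlib import Path

# Example ruff settings in standard pyproject.toml form; rule choices are arbitrary.
PYPROJECT = """\
[tool.ruff.lint]
select = ["E", "F"]
ignore = ["E501"]
"""


def write_pyproject(folder: str) -> Path:
    """Write PYPROJECT to <folder>/pyproject.toml and return the file path."""
    path = Path(folder) / "pyproject.toml"
    path.write_text(PYPROJECT)
    return path


# Demo against a throwaway directory instead of a real workspace folder.
with tempfile.TemporaryDirectory() as tmp:
    written = write_pyproject(tmp)
    print(written.read_text())
```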

Jobs and pipelines now share a single, unified view (Public Preview)

May 7, 2025

You can now view all workflows, including jobs, ETL pipelines, and ingestion pipelines, in a single unified list. See View jobs and pipelines.

Predictive optimization enabled for all existing Databricks accounts

May 7, 2025

Starting May 7, 2025, Databricks enabled predictive optimization by default for all existing Databricks accounts. This will roll out gradually based on your region and will be completed by July 1, 2025. When predictive optimization is enabled, Databricks automatically runs maintenance operations for Unity Catalog managed tables. For more information on predictive optimization, see Predictive optimization for Unity Catalog managed tables.

Llama 4 Maverick is now supported on provisioned throughput workloads (Public Preview)

May 5, 2025

Llama 4 Maverick is now supported on Foundation Model APIs provisioned throughput workloads in Public Preview. See Provisioned throughput Foundation Model APIs.

See Provisioned throughput limits for Llama 4 Maverick limitations during the preview.

Reach out to your Databricks account team to participate in the preview.

File events for external locations improve file notifications in Auto Loader and file arrival triggers in jobs (Public Preview)

May 5, 2025

You can now enable file events on external locations that are defined in Unity Catalog. This makes file arrival triggers in jobs and file notifications in Auto Loader more scalable and efficient.

This feature is in Public Preview. Auto Loader support for file events requires enablement by a Databricks representative. For access, reach out to your Databricks account team.

For details, see the following:

- Set up file events for an external location

- File notification mode with and without file events enabled on external locations

- Trigger jobs when new files arrive

Mosaic AI Model Serving region expansion

May 5, 2025

Mosaic AI Model Serving is now available in the following regions:

- ap-northeast-2

- eu-west-2

- sa-east-1

See Model serving features availability.

Llama 4 Maverick now available in EU regions where pay-per-token is supported

May 2, 2025

Llama 4 Maverick is now available in EU regions where Foundation Model API pay-per-token is supported.

The jobs system tables (Public Preview) are enabled by default

May 1, 2025

The system.lakeflow schema, which contains system tables related to jobs, is now enabled by default in all Unity Catalog workspaces. See Jobs system table reference.