Manage privileges in Unity Catalog

This page explains how to control access to data and other objects in Unity Catalog. To learn about how this model differs from access control in the Hive metastore, see Work with the legacy Hive metastore alongside Unity Catalog.

Who can manage privileges?

Initially, users have no access to data in a metastore. Databricks account admins, workspace admins, and metastore admins have default privileges for managing Unity Catalog. See Admin privileges in Unity Catalog.

All securable objects in Unity Catalog have an owner. Object owners have all privileges on that object, including the ability to grant privileges to other principals. Owners can grant other users the MANAGE privilege on the object, which allows users to manage privileges on the object. See Manage Unity Catalog object ownership.

Privileges can be granted by any of the following:

- A metastore admin.

- A user with the MANAGE privilege on the object.

- The owner of the object.

- The owner of the catalog or schema that contains the object.

Account admins can also grant privileges directly on a metastore.

Workspace catalog privileges

If your workspace was enabled for Unity Catalog automatically, the workspace is attached to a metastore by default and a workspace catalog is created for your workspace in the metastore. Workspace admins are the default owners of the workspace catalog. As owners, they can manage privileges on the workspace catalog and all child objects.

All workspace users receive the USE CATALOG privilege on the workspace catalog. Workspace users also receive the USE SCHEMA, CREATE TABLE, CREATE VOLUME, CREATE MODEL, CREATE FUNCTION, and CREATE MATERIALIZED VIEW privileges on the default schema in the catalog.

For more information, see Automatic enablement of Unity Catalog.

Inheritance model

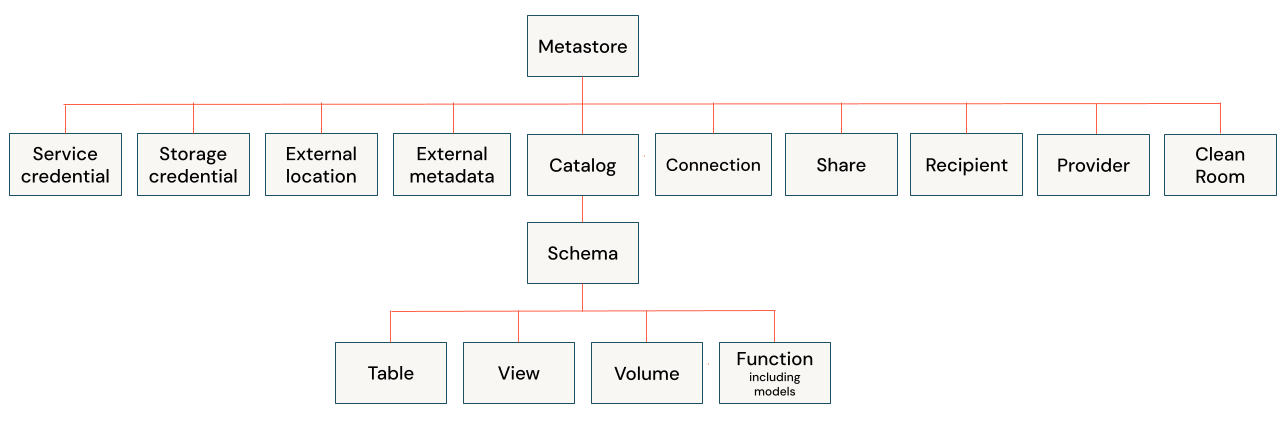

Securable objects in Unity Catalog are hierarchical, and privileges are inherited downward. The highest level object that privileges are inherited from is the catalog. This means that granting a privilege on a catalog or schema automatically grants the privilege to all current and future objects within the catalog or schema. For example, if you give a user the SELECT privilege on a catalog, then that user will be able to select (read) all tables and views in that catalog. Privileges that are granted on a Unity Catalog metastore are not inherited.
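As a minimal sketch of inheritance, assume a hypothetical catalog named main that contains a table main.default.orders, and a hypothetical group named analysts (neither is defined by this page). Reading a table also requires USE CATALOG and USE SCHEMA on its parents, so the sketch grants all three privileges at the catalog level:

```sql
-- Hypothetical names: catalog main, table main.default.orders, group analysts.
-- Privileges granted on the catalog are inherited by every current and
-- future schema, table, and view inside it.
GRANT USE CATALOG ON CATALOG main TO `analysts`;
GRANT USE SCHEMA ON CATALOG main TO `analysts`;
GRANT SELECT ON CATALOG main TO `analysts`;

-- A member of analysts can now read any table in the catalog, for example:
SELECT * FROM main.default.orders LIMIT 10;
```

Granting at the catalog level is convenient but broad. Granting the same privileges on a single schema limits inheritance to that schema's objects.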

Owners of an object are automatically granted all privileges on that object. In addition, object owners can grant privileges on the object itself and on all of its child objects. This means that owners of a schema do not automatically have all privileges on the tables in the schema, but they can grant themselves privileges on the tables in the schema.
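To illustrate the last point, assume a hypothetical user schema-owner@example.com who owns the schema main.default but not the table main.default.orders. A sketch of how that user could give themselves read access:

```sql
-- Hypothetical names. Run as the owner of schema main.default.
-- Owning the parent schema allows granting privileges on its child objects,
-- including granting them to yourself.
GRANT SELECT ON TABLE main.default.orders TO `schema-owner@example.com`;
```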

If you created your Unity Catalog metastore during the public preview (before August 25, 2022), you might be on an earlier privilege model that doesn't support the current inheritance model. You can upgrade to Privilege Model version 1.0 to get privilege inheritance. See Upgrade to privilege inheritance.

Access requests and destination configuration

This feature is in Public Preview.

Users can request access to objects they are able to discover, typically by having the BROWSE privilege, through Catalog Explorer, search, or direct links. These requests are sent to access request destinations that can be configured on any securable object in Unity Catalog. Destinations can include email addresses, Slack channels, Microsoft Teams channels, webhooks, or a redirect URL to your organization's approval system.

Access requests are enabled for an object only if the object has a configured destination. For more information, see Manage access requests.

Show, grant, and revoke privileges

You can manage privileges for metastore objects using SQL commands, the Databricks CLI, the Databricks Terraform provider, or Catalog Explorer.

In the SQL commands that follow, replace these placeholder values:

- <privilege-type>: A Unity Catalog privilege type. See Privilege types.

- <securable-type>: The type of securable object, such as CATALOG or TABLE. See Securable objects.

- <securable-name>: The name of the securable. If the securable type is METASTORE, do not provide the securable name. It is assumed to be the metastore attached to the workspace.

- <principal>: A user, service principal (represented by its applicationId value), or group. You must enclose users, service principals, and group names that include special characters in backticks (` `). See Principal.
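For illustration, the following statements fill in the placeholders with hypothetical values. The table main.default.orders, the user alice@example.com, and the group data-engineers are assumptions, not objects this page defines. Both principal names contain special characters (@, ., and -), so they are enclosed in backticks:

```sql
-- <privilege-type> = SELECT, <securable-type> = TABLE,
-- <securable-name> = main.default.orders (hypothetical), <principal> = a user
GRANT SELECT ON TABLE main.default.orders TO `alice@example.com`;

-- The same pattern with a catalog as the securable and a group as the principal
GRANT USE CATALOG ON CATALOG main TO `data-engineers`;
```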

Show grants on an object

Currently, users with the MANAGE privilege on an object cannot view all grants for that object in the INFORMATION_SCHEMA. Instead, the INFORMATION_SCHEMA shows only their own grants on the object. This behavior will be corrected in the future.

Users with the MANAGE privilege can view all grants on an object using SQL commands or Catalog Explorer. See Manage privileges in Unity Catalog.

Permissions required:

- Metastore admins, users with the MANAGE privilege on the object, the owner of the object, or the owner of the catalog or schema that contains the object can see all grants on the object.

- If you do not have the above permissions, you can view only your own grants on the object.

Using Catalog Explorer:

- In your Databricks workspace, click Catalog.

- Select the object, such as a catalog, schema, table, or view.

- Go to the Permissions tab.

Using SQL:

Run the following SQL command in a notebook or SQL query editor. You can show grants on a specific principal, or you can show all grants on a securable object.

SHOW GRANTS [principal] ON <securable-type> <securable-name>

For example, the following command shows all grants on a schema named default in the parent catalog named main:

SHOW GRANTS ON SCHEMA main.default;

The command returns:

principal     actionType    objectType objectKey
------------- ------------- ---------- ------------
finance-team  CREATE TABLE  SCHEMA     main.default
finance-team  USE SCHEMA    SCHEMA     main.default

Show my grants on an object

Permissions required: You can always view your own grants on an object.

Using Catalog Explorer:

- In your Databricks workspace, click Catalog.

- Select the object, such as a catalog, schema, table, or view.

- Go to the Permissions tab. If you are not an object owner or metastore admin, you can view only your own grants on the object.

Using SQL:

Run the following SQL command in a notebook or SQL query editor to show your grants on an object.

SHOW GRANTS `<user>@<domain-name>` ON <securable-type> <securable-name>
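For instance, assuming you are signed in as a hypothetical user alice@example.com and want to see your grants on a hypothetical table main.default.orders, the command might look like this:

```sql
-- Hypothetical user and table names, used only for illustration.
SHOW GRANTS `alice@example.com` ON TABLE main.default.orders;
```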

Grant permissions on an object

Permissions required: Metastore admin, the MANAGE privilege on the object, the owner of the object, or the owner of the catalog or schema that contains the object.

Using Catalog Explorer:

- In your Databricks workspace, click Catalog.

- Select the object, such as a catalog, schema, table, or view.

- Go to the Permissions tab.

- Click Grant.

- Enter the email address for a user or the name of a group.

- Select the permissions to grant.

- Click OK.

Using SQL:

Run the following SQL command in a notebook or SQL query editor.

GRANT <privilege-type> ON <securable-type> <securable-name> TO <principal>

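For example, the following commands allow a group named finance-team to create tables in a schema named default in the parent catalog named main. Creating a table requires CREATE TABLE and USE SCHEMA on the schema and USE CATALOG on the parent catalog, so all three privileges are granted: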

GRANT CREATE TABLE ON SCHEMA main.default TO `finance-team`;

GRANT USE SCHEMA ON SCHEMA main.default TO `finance-team`;

GRANT USE CATALOG ON CATALOG main TO `finance-team`;

Note that registered models are a type of function. To grant a privilege on a model, you must use GRANT ON FUNCTION. For example, to grant the group ml-team-acme the EXECUTE privilege on the model prod.ml_team.iris_model, you'd use:

GRANT EXECUTE ON FUNCTION prod.ml_team.iris_model TO `ml-team-acme`;

Revoke permissions on an object

Permissions required: Metastore admin, the MANAGE privilege on the object, the owner of the object, or the owner of the catalog or schema that contains the object.

Using Catalog Explorer:

- In your Databricks workspace, click Catalog.

- Select the object, such as a catalog, schema, table, or view.

- Go to the Permissions tab.

- Select a privilege that has been granted to a user, service principal, or group.

- Click Revoke.

- To confirm, click Revoke.

Using SQL:

Run the following SQL command in a notebook or SQL query editor.

REVOKE <privilege-type> ON <securable-type> <securable-name> FROM <principal>

For example, the following command revokes the CREATE TABLE privilege on a schema named default, in the parent catalog named main, from a group named finance-team:

REVOKE CREATE TABLE ON SCHEMA main.default FROM `finance-team`;

A REVOKE statement succeeds even if the specified privileges were not granted in the first place. It ensures that the privileges are not present, regardless of their previous state.
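A short sketch of this behavior, reusing the finance-team group and main.default schema from the example above: the second REVOKE finds nothing to remove but still completes, and SHOW GRANTS can be used afterward to confirm the resulting state.

```sql
-- First run removes the privilege if it was granted.
REVOKE CREATE TABLE ON SCHEMA main.default FROM `finance-team`;

-- Second run has nothing left to remove, but still succeeds.
REVOKE CREATE TABLE ON SCHEMA main.default FROM `finance-team`;

-- Check which privileges the group still holds on the schema.
SHOW GRANTS `finance-team` ON SCHEMA main.default;
```

Because REVOKE succeeds either way, it can be rerun safely in setup or cleanup scripts.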

Show grants on a metastore

Permissions required: Metastore admin or account admin. You can also view your own grants on a metastore.

Using Catalog Explorer:

- In your Databricks workspace, click Catalog.

- At the top of the Catalog pane, click the gear icon and select Metastore.

- Click the Permissions tab.

Using SQL:

Run the following SQL command in a notebook or SQL query editor. You can show grants on a specific principal, or you can show all grants on a metastore.

SHOW GRANTS [principal] ON METASTORE
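As an illustration, the first statement below lists every grant on the metastore and the second narrows the output to one principal. The group name finance-team is borrowed from the earlier examples and is an assumption here:

```sql
-- All grants on the metastore attached to the current workspace.
SHOW GRANTS ON METASTORE;

-- Only the grants held by one principal (hypothetical group name).
SHOW GRANTS `finance-team` ON METASTORE;
```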

Grant permissions on a metastore

Permissions required: Metastore admin or account admin.

Using Catalog Explorer:

- In your Databricks workspace, click Catalog.

- At the top of the Catalog pane, click the gear icon and select Metastore.

- On the Permissions tab, click Grant.

- Enter the email address for a user or the name of a group.

- Select the permissions to grant.

- Click OK.

Using SQL:

Run the following SQL command in a notebook or SQL query editor.

GRANT <privilege-type> ON METASTORE TO <principal>;

When you grant privileges on a metastore, you do not include the metastore name, because the metastore that is attached to your workspace is assumed.
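For example, assuming a hypothetical group named data-engineers that should be allowed to create catalogs, a metastore-level grant might look like this:

```sql
-- Hypothetical group name. CREATE CATALOG is a metastore-level privilege.
-- No metastore name is given: the metastore attached to the workspace is assumed.
GRANT CREATE CATALOG ON METASTORE TO `data-engineers`;
```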

Revoke permissions on a metastore

Permissions required: Metastore admin or account admin.

Using Catalog Explorer:

- In your Databricks workspace, click Catalog.

- At the top of the Catalog pane, click the gear icon and select Metastore.

- On the Permissions tab, select a user or group and click Revoke.

- To confirm, click Revoke.

Using SQL:

Run the following SQL command in a notebook or SQL query editor.

REVOKE <privilege-type> ON METASTORE FROM <principal>;

When you revoke privileges on a metastore, you do not include the metastore name, because the metastore that is attached to your workspace is assumed.