Manage model lifecycle in Unity Catalog

This page documents Models in Unity Catalog, which Databricks recommends for governing and deploying models. If your workspace is not enabled for Unity Catalog, the functionality on this page is not available. Instead, see Manage model lifecycle using the Workspace Model Registry (legacy). For guidance on how to upgrade from the Workspace Model Registry to Unity Catalog, see Migrate workflows and models to Unity Catalog.

This article describes how to use Models in Unity Catalog as part of your machine learning workflow to manage the full lifecycle of ML models. Databricks provides a hosted version of MLflow Model Registry in Unity Catalog. Models in Unity Catalog extends the benefits of Unity Catalog to ML models, including centralized access control, auditing, lineage, and model discovery across workspaces. Models in Unity Catalog is compatible with the open-source MLflow Python client.

For an overview of Model Registry concepts, see MLflow for ML model lifecycle.

MLflow 3 makes significant enhancements to the MLflow Model Registry in Unity Catalog, allowing your models to directly capture data like parameters and metrics and make them available across all workspaces and experiments. The default registry URI in MLflow 3 is databricks-uc, meaning the MLflow Model Registry in Unity Catalog will be used. For more details, see Get started with MLflow 3 for models and Model Registry improvements with MLflow 3.

Requirements

- Unity Catalog must be enabled in your workspace. See Get started using Unity Catalog to create a Unity Catalog Metastore, enable it in a workspace, and create a catalog. If Unity Catalog is not enabled, use the workspace model registry.

- You must use a compute resource that has access to Unity Catalog. For ML workloads, this means that the compute's access mode must be Dedicated (formerly single user). For more information, see Access modes. With Databricks Runtime 15.4 LTS ML and above, you can also use dedicated group access mode.

- To create new registered models, you need the following privileges:

  - USE SCHEMA and USE CATALOG privileges on the schema and its enclosing catalog.

  - CREATE MODEL or CREATE FUNCTION privilege on the schema. To grant privileges, use the Catalog Explorer UI or the SQL GRANT command:

GRANT CREATE MODEL ON SCHEMA <schema-name> TO <principal>

- If you are using Databricks on AWS GovCloud, you must set the environment variable MLFLOW_USE_DATABRICKS_SDK_MODEL_ARTIFACTS_REPO_FOR_UC to True. Include a cell in your notebook with the following code:

import os

os.environ['MLFLOW_USE_DATABRICKS_SDK_MODEL_ARTIFACTS_REPO_FOR_UC'] = 'True'

This setting might also be useful in other cases if you run into authorization issues when trying to register a model. This approach cannot be used for models shared with Delta Sharing that use default storage.

Your workspace must be attached to a Unity Catalog metastore that supports privilege inheritance. This is true for all metastores created after August 25, 2022. If you are running on an older metastore, follow the documentation to upgrade.

Install and configure MLflow client for Unity Catalog

This section includes instructions for installing and configuring the MLflow client for Unity Catalog.

Install MLflow Python client

Support for models in Unity Catalog is included in Databricks Runtime 13.2 ML and above.

You can also use models in Unity Catalog on Databricks Runtime 11.3 LTS and above by installing the latest version of the MLflow Python client in your notebook, using the following code.

%pip install --upgrade "mlflow-skinny[databricks]"

dbutils.library.restartPython()

Configure MLflow client to access models in Unity Catalog

If your workspace's default catalog is in Unity Catalog (rather than hive_metastore), and you are either running a cluster using Databricks Runtime 13.3 LTS or above or using MLflow 3, models are automatically created in and loaded from the default catalog. You do not have to perform this step.

For other workspaces, the MLflow Python client creates models in the Databricks workspace model registry. To upgrade to models in Unity Catalog, use the following code in your notebooks to configure the MLflow client:

import mlflow

mlflow.set_registry_uri("databricks-uc")

For a small number of workspaces where both the default catalog was configured to a catalog in Unity Catalog prior to January 2024 and the workspace model registry was used prior to January 2024, you must manually set the default catalog to Unity Catalog using the command shown above.

Train and register Unity Catalog-compatible models

Permissions required: To create a new registered model, you need the CREATE MODEL and USE SCHEMA privileges on the enclosing schema, and USE CATALOG privilege on the enclosing catalog. To create new model versions under a registered model, you must be the owner of the registered model and have USE SCHEMA and USE CATALOG privileges on the schema and catalog containing the model.
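The privileges named above can be granted with SQL GRANT statements. The following is a minimal sketch that only builds the statement strings. The helper name build_model_creator_grants and the principal ml-engineers are hypothetical, and the code assumes catalog and schema names without special characters.

```python
def build_model_creator_grants(catalog: str, schema: str, principal: str) -> list[str]:
    """Return GRANT statements that let `principal` create registered models."""
    full_schema = f"{catalog}.{schema}"
    # Backticks quote principals such as email addresses or hyphenated group names.
    quoted = f"`{principal}`"
    return [
        f"GRANT USE CATALOG ON CATALOG {catalog} TO {quoted}",
        f"GRANT USE SCHEMA ON SCHEMA {full_schema} TO {quoted}",
        f"GRANT CREATE MODEL ON SCHEMA {full_schema} TO {quoted}",
    ]


# "ml-engineers" is a hypothetical group name used only for illustration.
for statement in build_model_creator_grants("prod", "ml_team", "ml-engineers"):
    print(statement)
```

In a Databricks notebook, each generated statement could then be run with spark.sql(statement) by a user who is allowed to grant these privileges.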

If you run into authorization issues when trying to register a model, try setting the environment variable MLFLOW_USE_DATABRICKS_SDK_MODEL_ARTIFACTS_REPO_FOR_UC to True. This approach cannot be used for models shared with Delta Sharing that use default storage. See Requirements.

If you are using Databricks on AWS GovCloud, you must set the environment variable MLFLOW_USE_DATABRICKS_SDK_MODEL_ARTIFACTS_REPO_FOR_UC to True. See Requirements.

New ML model versions in UC must have a model signature. If you're not already logging MLflow models with signatures in your model training workloads, you can either:

- Use Databricks autologging, which automatically logs models with signatures for many popular ML frameworks. See supported frameworks in the MLflow docs.

- With MLflow 2.5.0 and above, you can specify an input example in your mlflow.<flavor>.log_model call, and the model signature is automatically inferred. For further information, refer to the MLflow documentation.

Then, pass the three-level name of the model to MLflow APIs, in the form <catalog>.<schema>.<model>.
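As a small illustration of the three-level naming convention, the sketch below validates a name before it is passed to MLflow. The ModelName type and parse_model_name helper are hypothetical, and the code assumes that none of the three parts contains a dot.

```python
from typing import NamedTuple


class ModelName(NamedTuple):
    catalog: str
    schema: str
    model: str

    @property
    def full_name(self) -> str:
        return f"{self.catalog}.{self.schema}.{self.model}"


def parse_model_name(name: str) -> ModelName:
    """Split '<catalog>.<schema>.<model>' and reject anything else."""
    parts = name.split(".")
    if len(parts) != 3 or not all(part.strip() for part in parts):
        raise ValueError(
            f"Expected a three-level name '<catalog>.<schema>.<model>', got {name!r}"
        )
    return ModelName(*parts)


parsed = parse_model_name("prod.ml_team.iris_model")
print(parsed.catalog, parsed.schema, parsed.model)  # prod ml_team iris_model
```

Checking the name up front gives a clear error for a one-level name such as iris_model, instead of a failure later in the registration call.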

Model versions that do not have signatures have certain limitations. For a list of these limitations, and to add or update a signature for an existing model version, see Add or update a signature for an existing model version.

The examples in this section create and access models in the ml_team schema under the prod catalog.

The model training examples in this section create a new model version and register it in the prod catalog. Using the prod catalog doesn't necessarily mean that the model version serves production traffic. The model version's enclosing catalog, schema, and registered model reflect its environment (prod) and associated governance rules (for example, privileges can be set up so that only admins can delete from the prod catalog), but not its deployment status. To manage the deployment status, use model aliases.

Register a model to Unity Catalog using autologging

To register a model, use the MLflow Client API register_model() method. See mlflow.register_model.

MLflow 3:

import mlflow

from sklearn import datasets

from sklearn.ensemble import RandomForestClassifier

# Train a sklearn model on the iris dataset

X, y = datasets.load_iris(return_X_y=True, as_frame=True)

clf = RandomForestClassifier(max_depth=7)

clf.fit(X, y)

# Note that the UC model name follows the pattern

# <catalog_name>.<schema_name>.<model_name>, corresponding to

# the catalog, schema, and registered model name

# in Unity Catalog under which to create the version

# The registered model will be created if it doesn't already exist,

# and the model version will contain all parameters and metrics

# logged with the corresponding MLflow Logged Model.

logged_model = mlflow.last_logged_model()

mlflow.register_model(logged_model.model_uri, "prod.ml_team.iris_model")

MLflow 2.x:

import mlflow

from sklearn import datasets

from sklearn.ensemble import RandomForestClassifier

# Train a sklearn model on the iris dataset

X, y = datasets.load_iris(return_X_y=True, as_frame=True)

clf = RandomForestClassifier(max_depth=7)

clf.fit(X, y)

# Note that the UC model name follows the pattern

# <catalog_name>.<schema_name>.<model_name>, corresponding to

# the catalog, schema, and registered model name

# in Unity Catalog under which to create the version

# The registered model will be created if it doesn't already exist

autolog_run = mlflow.last_active_run()

model_uri = "runs:/{}/model".format(autolog_run.info.run_id)

mlflow.register_model(model_uri, "prod.ml_team.iris_model")

Register a model using the API

MLflow 3:

import mlflow

mlflow.register_model("models:/<model_id>", "prod.ml_team.iris_model")

MLflow 2.x:

import mlflow

mlflow.register_model("runs:/<run_id>/model", "prod.ml_team.iris_model")

Register a model to Unity Catalog with automatically inferred signature

Support for automatically inferred signatures is available in MLflow version 2.5.0 and above, and is supported in Databricks Runtime 11.3 LTS ML and above. To use automatically inferred signatures, use the following code to install the latest MLflow Python client in your notebook:

%pip install --upgrade "mlflow-skinny[databricks]"

dbutils.library.restartPython()

The following code shows an example of an automatically inferred signature. Note that using registered_model_name in the log_model() call registers the model to Unity Catalog, so you must provide the full three-level name of the model in the format <catalog>.<schema>.<model>.

MLflow 3:

import mlflow

from sklearn import datasets

from sklearn.ensemble import RandomForestClassifier

with mlflow.start_run():

    # Train a sklearn model on the iris dataset

    X, y = datasets.load_iris(return_X_y=True, as_frame=True)

    clf = RandomForestClassifier(max_depth=7)

    clf.fit(X, y)

    # Take the first row of the training dataset as the model input example.

    input_example = X.iloc[[0]]

    # Log the model and register it as a new version in UC.

    mlflow.sklearn.log_model(

        sk_model=clf,

        name="model",

        # The signature is automatically inferred from the input example and its predicted output.

        input_example=input_example,

        # Use three-level name to register model in Unity Catalog.

        registered_model_name="prod.ml_team.iris_model",

    )

MLflow 2.x:

import mlflow

from sklearn import datasets

from sklearn.ensemble import RandomForestClassifier

with mlflow.start_run():

    # Train a sklearn model on the iris dataset

    X, y = datasets.load_iris(return_X_y=True, as_frame=True)

    clf = RandomForestClassifier(max_depth=7)

    clf.fit(X, y)

    # Take the first row of the training dataset as the model input example.

    input_example = X.iloc[[0]]

    # Log the model and register it as a new version in UC.

    mlflow.sklearn.log_model(

        sk_model=clf,

        artifact_path="model",

        # The signature is automatically inferred from the input example and its predicted output.

        input_example=input_example,

        # Use three-level name to register model in Unity Catalog.

        registered_model_name="prod.ml_team.iris_model",

    )

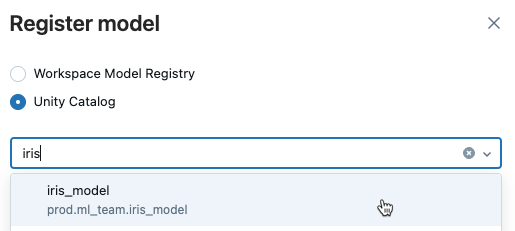

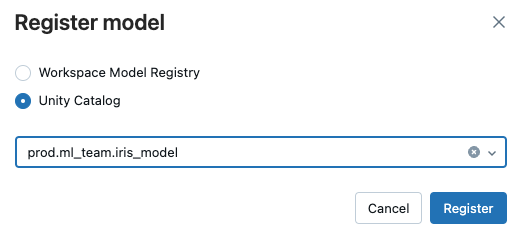

Register a model using the UI

Follow these steps:

- From the experiment run page, click Register model in the upper-right corner of the UI.

- In the dialog, select Unity Catalog, and select a destination model from the drop-down list.

- Click Register.

Registering a model can take time. To monitor progress, navigate to the destination model in Unity Catalog and refresh periodically.

Add or update a signature for an existing model version

Model versions that do not have signatures have the following limitations:

- If a signature is provided, model inputs are checked at inference and an error is reported if the inputs do not match the signature. Without a signature, there is no automatic input enforcement, and models need to be able to handle unexpected inputs.

- Using a model version with AI functions requires providing a schema in the function call.

- Using a model version with Model Serving does not auto-generate input examples.

To add or update a model version signature, see the MLflow documentation.

Use model aliases

Model aliases allow you to assign a mutable, named reference to a particular version of a registered model. You can use aliases to indicate the deployment status of a model version. For example, you could allocate a “Champion” alias to the model version currently in production and target this alias in workloads that use the production model. You can then update the production model by reassigning the “Champion” alias to a different model version.

Set and delete aliases on models

Permissions required: Owner of the registered model, plus USE SCHEMA and USE CATALOG privileges on the schema and catalog containing the model.

You can set, update, and remove aliases for models in Unity Catalog using Catalog Explorer. See View and manage models in the UI.

To set, update, and delete aliases using the MLflow Client API, see the examples below:

from mlflow import MlflowClient

client = MlflowClient()

# create "Champion" alias for version 1 of model "prod.ml_team.iris_model"

client.set_registered_model_alias("prod.ml_team.iris_model", "Champion", 1)

# reassign the "Champion" alias to version 2

client.set_registered_model_alias("prod.ml_team.iris_model", "Champion", 2)

# get a model version by alias

client.get_model_version_by_alias("prod.ml_team.iris_model", "Champion")

# delete the alias

client.delete_registered_model_alias("prod.ml_team.iris_model", "Champion")

For more details on alias client APIs, see the MLflow API documentation.

Load model version by alias for inference workloads

Permissions required: EXECUTE privilege on the registered model, plus USE SCHEMA and USE CATALOG privileges on the schema and catalog containing the model.

Batch inference workloads can reference a model version by alias. The snippet below loads and applies the “Champion” model version for batch inference. If the “Champion” version is updated to reference a new model version, the batch inference workload automatically picks it up on its next execution. This allows you to decouple model deployments from your batch inference workloads.

import mlflow.pyfunc

model_version_uri = "models:/prod.ml_team.iris_model@Champion"

champion_version = mlflow.pyfunc.load_model(model_version_uri)

champion_version.predict(test_x)

Model serving endpoints can also reference a model version by alias. You can write deployment workflows to get a model version by alias and update a model serving endpoint to serve that version, using the model serving REST API. For example:

import mlflow

import requests

client = mlflow.tracking.MlflowClient()

champion_version = client.get_model_version_by_alias("prod.ml_team.iris_model", "Champion")

# Invoke the model serving REST API to update endpoint to serve the current "Champion" version

model_name = champion_version.name

model_version = champion_version.version

requests.request(...)
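The elided requests.request(...) call above is left as written. As a rough sketch of what such a request might contain, the helper below only builds the request arguments and sends nothing. The endpoint path and payload fields are assumptions modeled on the serving endpoints "update config" REST API, so check them against the API reference. The helper name, host placeholder, endpoint name, and workload settings are hypothetical.

```python
def build_endpoint_update_request(host: str, endpoint_name: str,
                                  model_name: str, model_version) -> dict:
    """Describe a REST call that points a serving endpoint at one model version.

    Assumption: path and payload follow the serving endpoints "update config"
    API. Verify both against the REST API reference before use.
    """
    return {
        "method": "PUT",
        "url": f"{host.rstrip('/')}/api/2.0/serving-endpoints/{endpoint_name}/config",
        "json": {
            "served_entities": [
                {
                    "entity_name": model_name,
                    "entity_version": str(model_version),
                    # Illustrative sizing values, not recommendations.
                    "workload_size": "Small",
                    "scale_to_zero_enabled": True,
                }
            ]
        },
    }


request_kwargs = build_endpoint_update_request(
    host="https://<workspace-host>",
    endpoint_name="iris-endpoint",  # hypothetical endpoint name
    model_name="prod.ml_team.iris_model",
    model_version="2",
)
print(request_kwargs["method"], request_kwargs["url"])
```

The resulting dictionary could be passed as requests.request(**request_kwargs, headers=...) together with an authorization header for your workspace.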

Load model version by version number for inference workloads

You can also load model versions by version number:

import mlflow.pyfunc

# Load version 1 of the model "prod.ml_team.iris_model"

model_version_uri = "models:/prod.ml_team.iris_model/1"

first_version = mlflow.pyfunc.load_model(model_version_uri)

first_version.predict(test_x)
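The two URI forms used in the preceding examples, by alias and by version number, can be produced by one small helper. This is a minimal sketch; the function name model_uri is hypothetical and not part of MLflow.

```python
from typing import Optional, Union


def model_uri(name: str, *, alias: Optional[str] = None,
              version: Union[int, str, None] = None) -> str:
    """Build a models:/ URI that targets either an alias or a version number."""
    if (alias is None) == (version is None):
        raise ValueError("Provide exactly one of alias or version")
    if alias is not None:
        return f"models:/{name}@{alias}"
    return f"models:/{name}/{version}"


print(model_uri("prod.ml_team.iris_model", alias="Champion"))
# models:/prod.ml_team.iris_model@Champion
print(model_uri("prod.ml_team.iris_model", version=1))
# models:/prod.ml_team.iris_model/1
```

Either string can be passed to mlflow.pyfunc.load_model in place of the hard-coded URIs shown above.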

Share models across workspaces

Share models with users in the same region

As long as you have the appropriate privileges, you can access models in Unity Catalog from any workspace that is attached to the metastore containing the model. For example, you can access models from the prod catalog in a dev workspace, to facilitate comparing newly-developed models to the production baseline.

To collaborate with other users (share write privileges) on a registered model you created, you must grant ownership of the model to a group containing yourself and the users you'd like to collaborate with. Collaborators must also have the USE CATALOG and USE SCHEMA privileges on the catalog and schema containing the model. See Unity Catalog privileges and securable objects for details.

Share models with users in another region or account

To share models with users in other regions or accounts, use the Delta Sharing Databricks-to-Databricks sharing flow. See Add models to a share (for providers) and Get access in the Databricks-to-Databricks model (for recipients). As a recipient, after you create a catalog from a share, you access models in that shared catalog the same way as any other model in Unity Catalog.

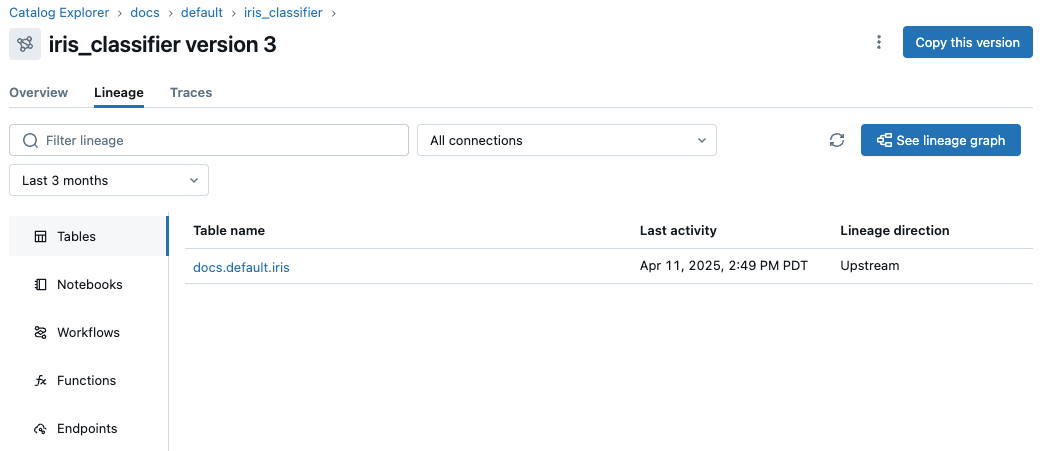

Track the data lineage of a model in Unity Catalog

Support for table to model lineage in Unity Catalog is available in MLflow 2.11.0 and above.

When you train a model on a table in Unity Catalog, you can track the lineage of the model to the upstream dataset(s) it was trained and evaluated on. To do this, use mlflow.log_input. This saves the input table information with the MLflow run that generated the model. Data lineage is also automatically captured for models logged using feature store APIs. See Feature governance and lineage.

When you register the model to Unity Catalog, lineage information is automatically saved and is visible in the Lineage tab on the model version page in Catalog Explorer. See View model version information and model lineage.

The following code shows an example.

MLflow 3:

import mlflow

import pandas as pd

import pyspark.pandas as ps

from sklearn.datasets import load_iris

from sklearn.ensemble import RandomForestRegressor

# Write a table to Unity Catalog

iris = load_iris()

iris_df = pd.DataFrame(iris.data, columns=iris.feature_names)

iris_df.rename(

    columns = {

        'sepal length (cm)':'sepal_length',

        'sepal width (cm)':'sepal_width',

        'petal length (cm)':'petal_length',

        'petal width (cm)':'petal_width'},

    inplace = True

)

iris_df['species'] = iris.target

ps.from_pandas(iris_df).to_table("prod.ml_team.iris", mode="overwrite")

# Load a Unity Catalog table, train a model, and log the input table

dataset = mlflow.data.load_delta(table_name="prod.ml_team.iris", version="0")

pd_df = dataset.df.toPandas()

X = pd_df.drop("species", axis=1)

y = pd_df["species"]

with mlflow.start_run():

    clf = RandomForestRegressor(n_estimators=100)

    clf.fit(X, y)

    mlflow.log_input(dataset, "training")

    # Take the first row of the training dataset as the model input example.

    input_example = X.iloc[[0]]

    # Log the model and register it as a new version in UC.

    mlflow.sklearn.log_model(

        sk_model=clf,

        name="model",

        # The signature is automatically inferred from the input example and its predicted output.

        input_example=input_example,

        # Use three-level name to register model in Unity Catalog.

        registered_model_name="prod.ml_team.iris_classifier",

    )

MLflow 2.x:

import mlflow

import pandas as pd

import pyspark.pandas as ps

from sklearn.datasets import load_iris

from sklearn.ensemble import RandomForestRegressor

# Write a table to Unity Catalog

iris = load_iris()

iris_df = pd.DataFrame(iris.data, columns=iris.feature_names)

iris_df.rename(

    columns = {

        'sepal length (cm)':'sepal_length',

        'sepal width (cm)':'sepal_width',

        'petal length (cm)':'petal_length',

        'petal width (cm)':'petal_width'},

    inplace = True

)

iris_df['species'] = iris.target

ps.from_pandas(iris_df).to_table("prod.ml_team.iris", mode="overwrite")

# Load a Unity Catalog table, train a model, and log the input table

dataset = mlflow.data.load_delta(table_name="prod.ml_team.iris", version="0")

pd_df = dataset.df.toPandas()

X = pd_df.drop("species", axis=1)

y = pd_df["species"]

with mlflow.start_run():

    clf = RandomForestRegressor(n_estimators=100)

    clf.fit(X, y)

    mlflow.log_input(dataset, "training")

    # Take the first row of the training dataset as the model input example.

    input_example = X.iloc[[0]]

    # Log the model and register it as a new version in UC.

    mlflow.sklearn.log_model(

        sk_model=clf,

        artifact_path="model",

        # The signature is automatically inferred from the input example and its predicted output.

        input_example=input_example,

        # Use three-level name to register model in Unity Catalog.

        registered_model_name="prod.ml_team.iris_classifier",

    )

Control access to models

In Unity Catalog, registered models are a subtype of the FUNCTION securable object. To grant access to a model registered in Unity Catalog, you use GRANT ON FUNCTION. You can also use Catalog Explorer to set model ownership and permissions. For details, see Manage privileges in Unity Catalog and The Unity Catalog object model.

You can configure model permissions programmatically using the Grants REST API. When you configure model permissions, set securable_type to "FUNCTION" in REST API requests. For example, use PATCH /api/2.1/unity-catalog/permissions/function/{full_name} to update registered model permissions.
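To make the REST call above more concrete, the following sketch builds the arguments for such a PATCH request without sending it. The path comes from the text above. The payload shape (a changes list with principal, add, and remove) is an assumption based on the Unity Catalog permissions API and should be checked against the API reference. The helper name and the principal are hypothetical.

```python
from typing import Iterable


def build_model_grant_request(host: str, full_name: str, principal: str,
                              add: Iterable[str] = (),
                              remove: Iterable[str] = ()) -> dict:
    """Describe a PATCH call that changes privileges on a registered model."""
    add, remove = list(add), list(remove)
    change = {"principal": principal}
    if add:
        change["add"] = add
    if remove:
        change["remove"] = remove
    return {
        "method": "PATCH",
        # Registered models use the FUNCTION securable type in this API.
        "url": f"{host.rstrip('/')}/api/2.1/unity-catalog/permissions/function/{full_name}",
        "json": {"changes": [change]},
    }


request_kwargs = build_model_grant_request(
    host="https://<workspace-host>",
    full_name="prod.ml_team.iris_model",
    principal="ml-engineers",  # hypothetical group name
    add=["EXECUTE"],
)
print(request_kwargs["json"])
```

Keeping the request construction separate from the HTTP call makes the payload easy to inspect or log before any permission is changed.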

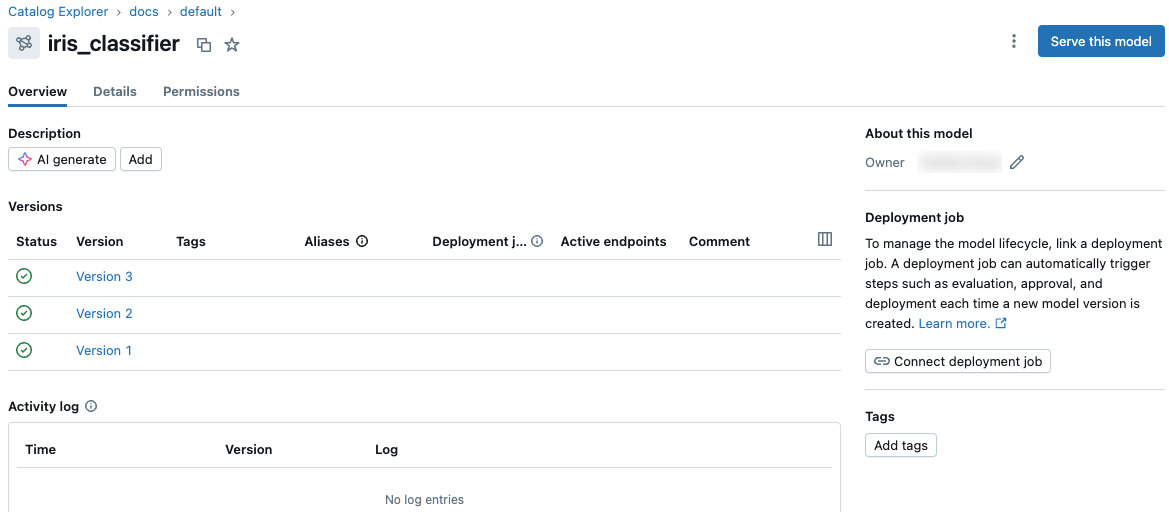

View and manage models in the UI

Permissions required: To view a registered model and its model versions in the UI, you need EXECUTE privilege on the registered model, plus USE SCHEMA and USE CATALOG privileges on the schema and catalog containing the model.

You can view and manage registered models and model versions in Unity Catalog using Catalog Explorer.

View model information

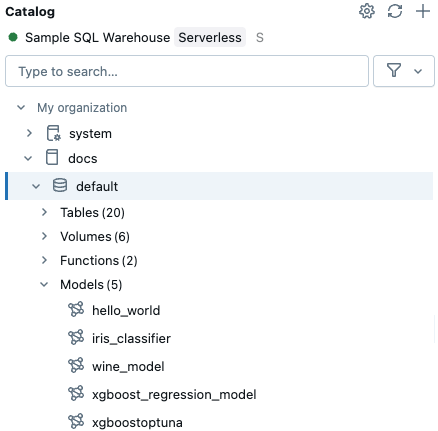

To view models in Catalog Explorer:

- Click Catalog in the sidebar.

- Select a compute resource from the drop-down list at the top right.

- In the Catalog Explorer tree at the left, open a catalog and select a schema.

- If the schema contains any models, they appear in the tree under Models, as shown.

- Click a model to see more information. The model details page shows a list of model versions with additional information.

Set model aliases

To set a model alias using the UI:

- On the model details page, hover over the row for the model version to which you want to add an alias. The Add alias button appears.

- Click Add alias.

- Enter an alias or select one from the drop-down menu. You can add multiple aliases in the dialog.

- Click Save aliases.

To remove an alias:

- Hover over the row for the model version and click the pencil icon next to the alias.

- In the dialog, click the X next to the alias that you want to remove.

- Click Save aliases.

View model version information and model lineage

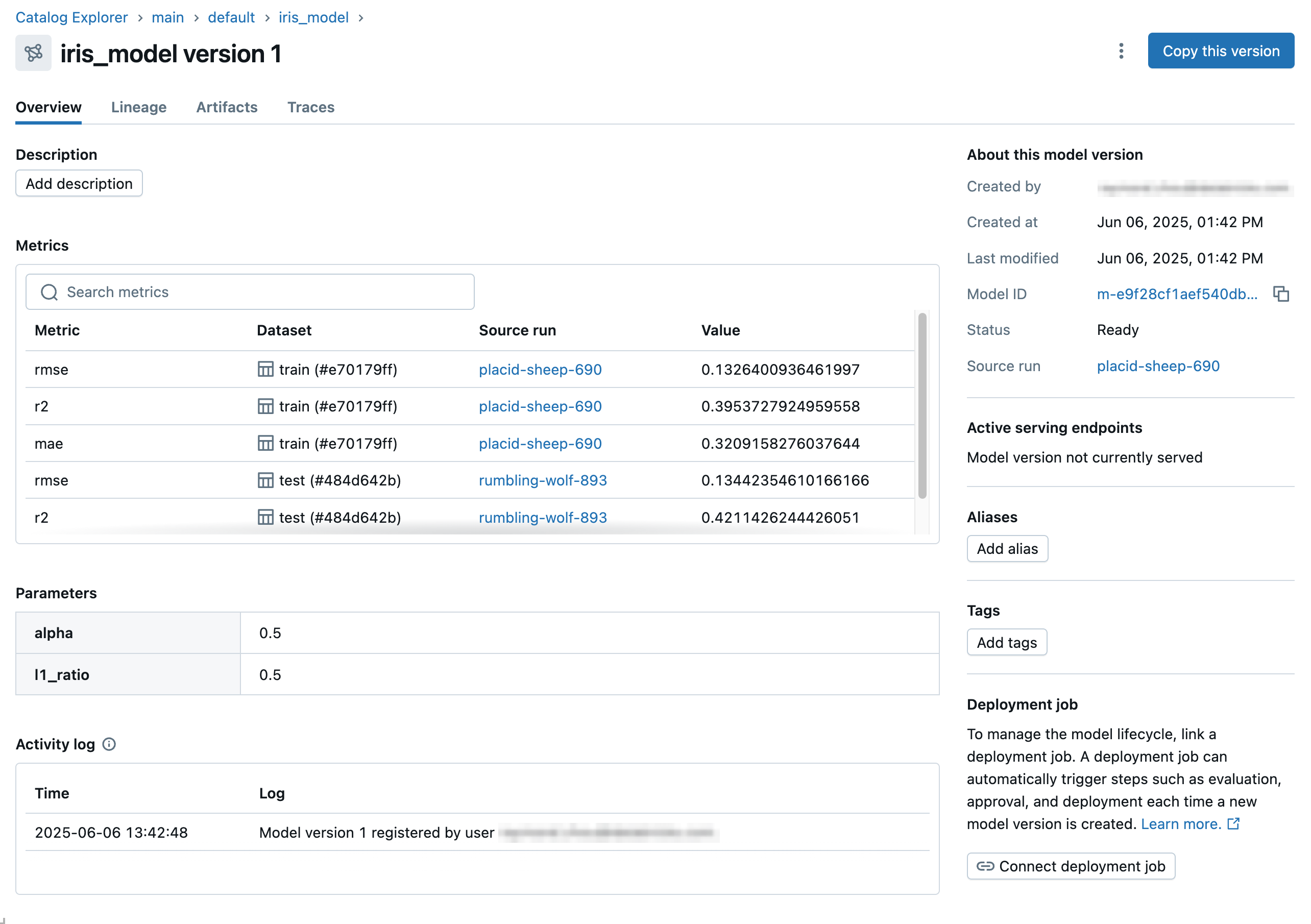

To view more information about a model version, click its name in the list of models. The model version page appears. This page includes a link to the MLflow source run that created the version. In MLflow 3, you can also view all parameters and metrics logged with the corresponding MLflow Logged Model.


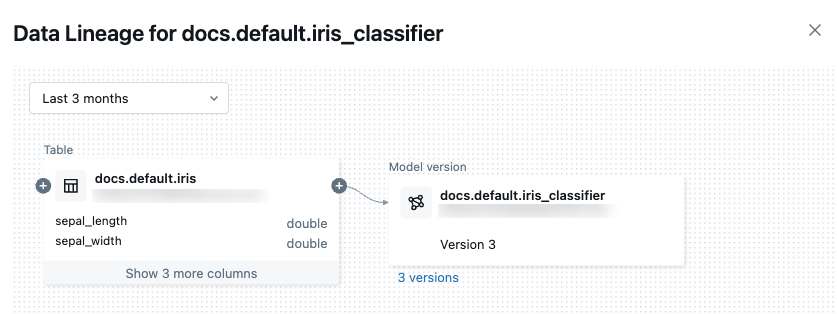

From this page, you can view the lineage of the model as follows:

- Select the Lineage tab. The left sidebar shows components that were logged with the model.

- Click See lineage graph. The lineage graph appears. For details about exploring the lineage graph, see Capture and explore lineage.

- To close the lineage graph, click the close button in the upper-right corner.

Rename a model

Permissions required: Owner of the registered model, CREATE MODEL privilege on the schema containing the registered model, and USE SCHEMA and USE CATALOG privileges on the schema and catalog containing the model.

To rename a registered model, use the MLflow Client API rename_registered_model() method, where <full-model-name> is the full 3-level name of the model and <new-model-name> is the model name without the catalog or schema.

from mlflow import MlflowClient

client = MlflowClient()

client.rename_registered_model("<full-model-name>", "<new-model-name>")

For example, the following code changes the name of the model hello_world to hello.

from mlflow import MlflowClient

client = MlflowClient()

client.rename_registered_model("docs.models.hello_world", "hello")

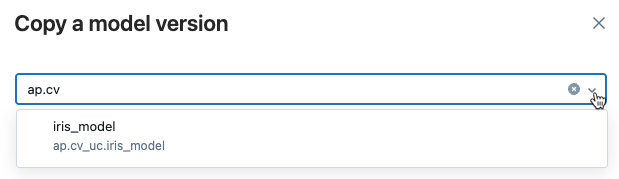

Copy a model version

You can copy a model version from one model to another in Unity Catalog.

Copy a model version using the UI

Follow these steps:

- From the model version page, click Copy this version in the upper-right corner of the UI.

- Select a destination model from the drop-down list and click Copy.

Copying a model can take time. To monitor progress, navigate to the destination model in Unity Catalog and refresh periodically.

Copy a model version using the API

To copy a model version, use MLflow's copy_model_version() Python API:

client = MlflowClient()

client.copy_model_version(

"models:/<source-model-name>/<source-model-version>",

"<destination-model-name>",

)

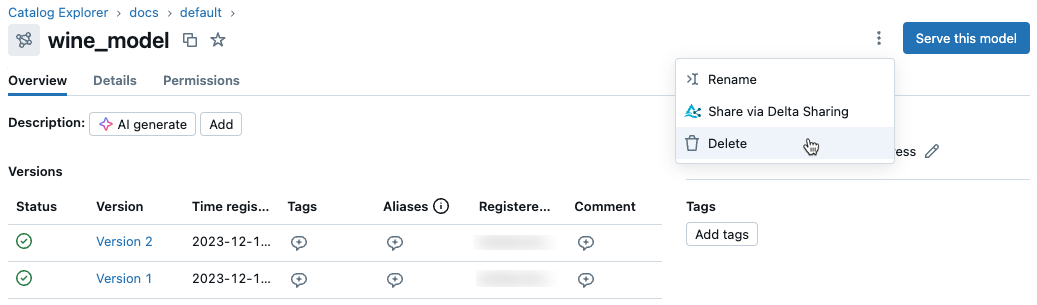

Delete a model or model version

Permissions required: Owner of the registered model, plus USE SCHEMA and USE CATALOG privileges on the schema and catalog containing the model.

You can delete a registered model or a model version within a registered model using the UI or the API.

You cannot undo this action. When you delete a model, all model artifacts stored by Unity Catalog and all the metadata associated with the registered model are deleted.

Delete a model version or model using the UI

To delete a model or model version in Unity Catalog, follow these steps.

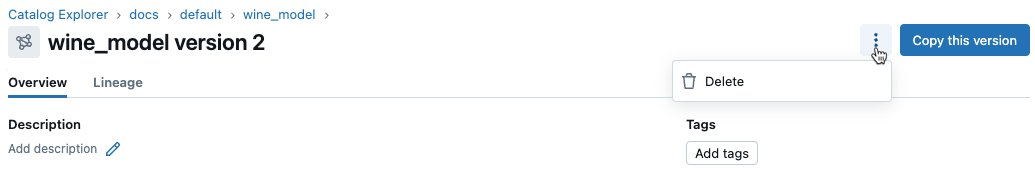

- In Catalog Explorer, on the model page or model version page, click the kebab menu in the upper-right corner.

- Select Delete.

- A confirmation dialog appears. Click Delete to confirm.

Delete a model version or model using the API

To delete a model version, use the MLflow Client API delete_model_version() method:

from mlflow import MlflowClient

# Delete versions 1, 2, and 3 of the model

client = MlflowClient()

versions = [1, 2, 3]

for version in versions:

    client.delete_model_version(name="<model-name>", version=version)

To delete a model, use the MLflow Client API delete_registered_model() method:

client = MlflowClient()

client.delete_registered_model(name="<model-name>")

Use tags on models

Tags are key-value pairs that you associate with registered models and model versions, allowing you to label and categorize them by function or status. For example, you could apply a tag with key "task" and value "question-answering" (displayed in the UI as task:question-answering) to registered models intended for question answering tasks. At the model version level, you could tag versions undergoing pre-deployment validation with validation_status:pending and those cleared for deployment with validation_status:approved.

Permissions required: You must be the owner of the registered model or have the APPLY TAG privilege on it, plus USE SCHEMA and USE CATALOG privileges on the schema and catalog containing the model.

See Apply tags to Unity Catalog securable objects to learn how to set and delete tags using the UI.

To set and delete tags using the MLflow Client API, see the examples below:

from mlflow import MlflowClient

client = MlflowClient()

# Set registered model tag

client.set_registered_model_tag("prod.ml_team.iris_model", "task", "classification")

# Delete registered model tag

client.delete_registered_model_tag("prod.ml_team.iris_model", "task")

# Set model version tag

client.set_model_version_tag("prod.ml_team.iris_model", "1", "validation_status", "approved")

# Delete model version tag

client.delete_model_version_tag("prod.ml_team.iris_model", "1", "validation_status")

Both registered model and model version tags must meet the platform-wide constraints.

For more details on tag client APIs, see the MLflow API documentation.

Add a description (comments) to a model or model version

Permissions required: Owner of the registered model, plus USE SCHEMA and USE CATALOG privileges on the schema and catalog containing the model.

You can include a text description for any model or model version in Unity Catalog. For example, you can provide an overview of the problem or information about the methodology and algorithm used.

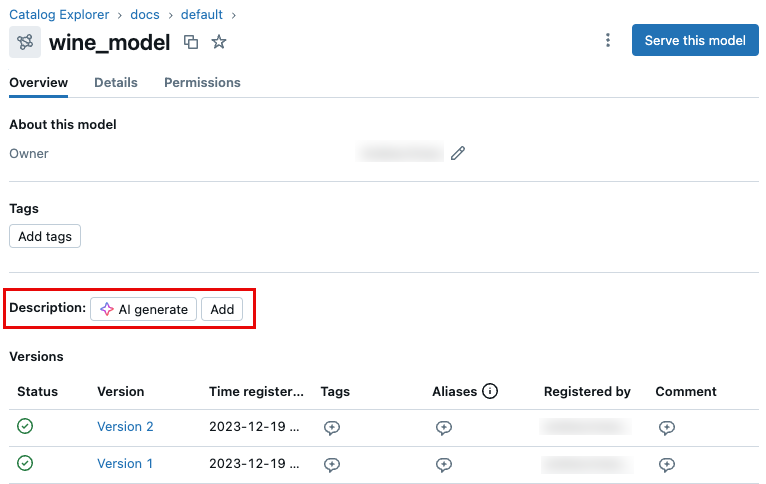

For models, you also have the option of using AI-generated comments. See Add AI-generated comments to Unity Catalog objects.

Add a description to a model using the UI

To add a description for a model, you can use AI-generated comments, or you can enter your own comments. You can edit AI-generated comments as necessary.

- To add automatically generated comments, click the AI generate button.

- To add your own comments, click Add. Enter your comments in the dialog, and click Save.

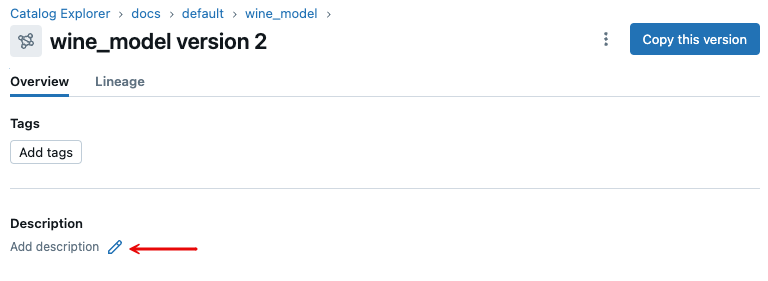

Add a description to a model version using the UI

To add a description to a model version in Unity Catalog, follow these steps:

- On the model version page, click the pencil icon under Description.

- Enter your comments in the dialog, and click Save.

Add a description to a model or model version using the API

To update a registered model description, use the MLflow Client API update_registered_model() method:

client = MlflowClient()

client.update_registered_model(

name="<model-name>",

description="<description>"

)

To update a model version description, use the MLflow Client API update_model_version() method:

client = MlflowClient()

client.update_model_version(

name="<model-name>",

version=<model-version>,

description="<description>"

)

List and search models

To get a list of registered models in Unity Catalog, use MLflow's search_registered_models() Python API:

mlflow.search_registered_models()

To search for a specific model name and get information about that model's versions, use search_model_versions():

import mlflow

from pprint import pprint

for mv in mlflow.search_model_versions(filter_string="name='<model-name>'"):

    pprint(mv)

Not all search API fields and operators are supported for models in Unity Catalog. See Limitations for details.
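One way to work around unsupported filters is to run a search with a supported filter and then scan the results on the client. The sketch below illustrates that idea with stand-in objects so that it runs without a workspace. The helper name filter_versions is hypothetical, and it assumes each result exposes name, version, and tags attributes, as MLflow model version objects do.

```python
import fnmatch
from types import SimpleNamespace


def filter_versions(versions, *, tag=None, name_pattern=None):
    """Filter and sort model versions on the client side.

    `versions` is any iterable of objects with `name`, `version`, and `tags`
    attributes. `tag` is an optional (key, value) pair, and `name_pattern`
    is an optional shell-style wildcard matched against the model name.
    """
    selected = []
    for mv in versions:
        if tag is not None and (mv.tags or {}).get(tag[0]) != tag[1]:
            continue
        if name_pattern is not None and not fnmatch.fnmatchcase(mv.name, name_pattern):
            continue
        selected.append(mv)
    # Newest version first, standing in for the unsupported order_by parameter.
    return sorted(selected, key=lambda mv: int(mv.version), reverse=True)


# Stand-in objects in place of real search results.
sample = [
    SimpleNamespace(name="prod.ml_team.iris_model", version="1",
                    tags={"validation_status": "approved"}),
    SimpleNamespace(name="prod.ml_team.iris_model", version="2",
                    tags={"validation_status": "pending"}),
    SimpleNamespace(name="prod.ml_team.iris_model", version="3",
                    tags={"validation_status": "approved"}),
]
approved = filter_versions(sample, tag=("validation_status", "approved"))
print([mv.version for mv in approved])  # ['3', '1']
```

In practice, the sample list would be replaced by the result of a search call that uses only a supported filter, such as an exact match on the model name.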

Download model files (advanced use case)

In most cases, to load models, you should use MLflow APIs like mlflow.pyfunc.load_model or mlflow.<flavor>.load_model (for example, mlflow.transformers.load_model for HuggingFace models).

In some cases you may need to download model files to debug model behavior or model loading issues. You can download model files using mlflow.artifacts.download_artifacts, as follows:

import mlflow

mlflow.set_registry_uri("databricks-uc")

model_uri = f"models:/{model_name}/{version}" # reference model by version or alias

destination_path = "/local_disk0/model"

mlflow.artifacts.download_artifacts(artifact_uri=model_uri, dst_path=destination_path)

Promote a model across environments

Databricks recommends that you deploy ML pipelines as code. This eliminates the need to promote models across environments, as all production models can be produced through automated training workflows in a production environment.

However, in some cases, it may be too expensive to retrain models across environments. Instead, you can copy model versions across registered models in Unity Catalog to promote them across environments.

You need the following privileges to execute the example code below:

- USE CATALOG on the staging and prod catalogs.

- USE SCHEMA on the staging.ml_team and prod.ml_team schemas.

- EXECUTE on staging.ml_team.fraud_detection.

In addition, you must be the owner of the registered model prod.ml_team.fraud_detection.

The following code snippet uses the copy_model_version MLflow Client API, available in MLflow version 2.8.0 and above.

import mlflow

mlflow.set_registry_uri("databricks-uc")

client = mlflow.tracking.MlflowClient()

src_model_name = "staging.ml_team.fraud_detection"

src_model_version = "1"

src_model_uri = f"models:/{src_model_name}/{src_model_version}"

dst_model_name = "prod.ml_team.fraud_detection"

copied_model_version = client.copy_model_version(src_model_uri, dst_model_name)

After the model version is in the production environment, you can perform any necessary pre-deployment validation. Then, you can mark the model version for deployment using aliases.

client = mlflow.tracking.MlflowClient()

client.set_registered_model_alias(name="prod.ml_team.fraud_detection", alias="Champion", version=copied_model_version.version)

In the example above, only users who can read from the staging.ml_team.fraud_detection registered model and write to the prod.ml_team.fraud_detection registered model can promote staging models to the production environment. The same users can also use aliases to manage which model versions are deployed within the production environment. You don't need to configure any other rules or policies to govern model promotion and deployment.

You can customize this flow to promote the model version across multiple environments that match your setup, such as dev, qa, and prod. Access control is enforced as configured in each environment.
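A multi-environment flow of this kind could be sketched as follows. The helper names next_environment_name and promote are hypothetical, the catalog names dev, qa, and prod are taken from the example above, and the client argument is assumed to behave like an MlflowClient configured for Unity Catalog.

```python
def next_environment_name(model_name: str, environments: list[str]) -> str:
    """Return the same schema and model name in the next catalog of the chain."""
    catalog, schema, model = model_name.split(".")
    position = environments.index(catalog)
    if position == len(environments) - 1:
        raise ValueError(f"{catalog!r} is already the last environment")
    return f"{environments[position + 1]}.{schema}.{model}"


def promote(client, model_name: str, version: str, environments: list[str]):
    """Copy one model version to the next environment and return the copy.

    Only client.copy_model_version(src_model_uri, dst_name) is called, so the
    caller's privileges in both catalogs decide whether the copy succeeds.
    """
    destination = next_environment_name(model_name, environments)
    return client.copy_model_version(f"models:/{model_name}/{version}", destination)


ENVIRONMENTS = ["dev", "qa", "prod"]
print(next_environment_name("dev.ml_team.fraud_detection", ENVIRONMENTS))
# qa.ml_team.fraud_detection
```

Because each step is an ordinary copy between registered models, the access control configured in each catalog applies unchanged.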

Example notebook

This example notebook illustrates how to use Models in Unity Catalog APIs to manage models in Unity Catalog, including registering models and model versions, adding descriptions, loading and deploying models, using model aliases, and deleting models and model versions.

- MLflow 3: Models in Unity Catalog example notebook for MLflow 3

- MLflow 2.x: Models in Unity Catalog example notebook

Limitations

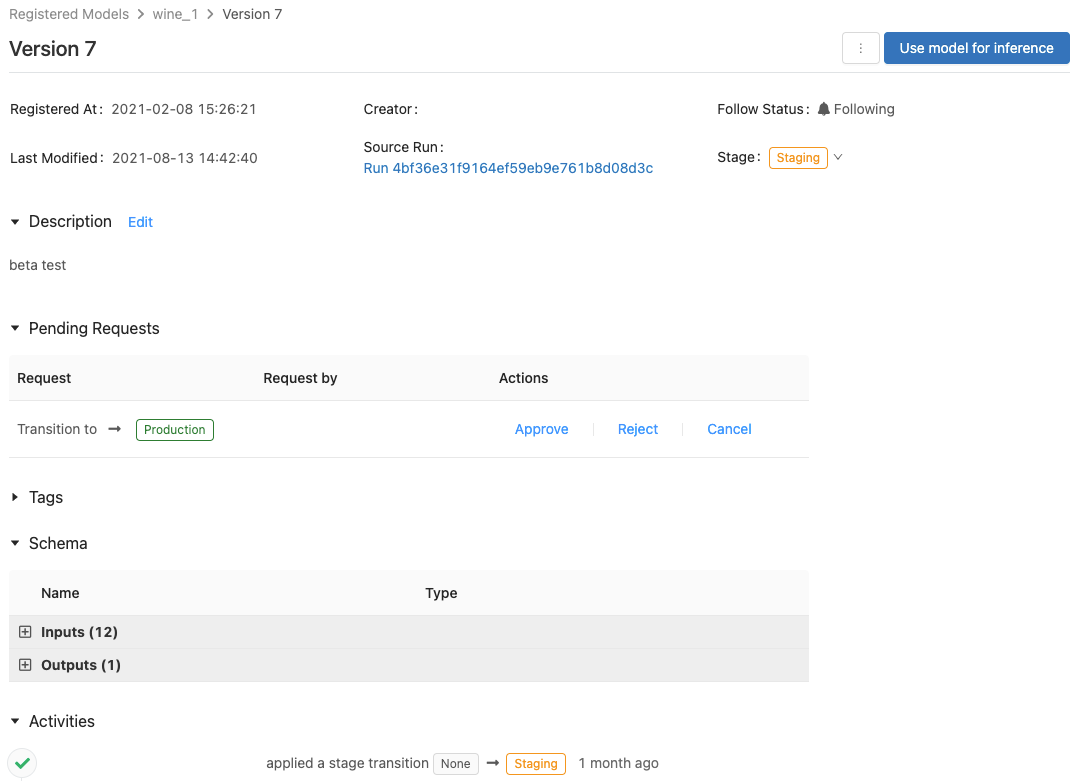

- Stages are not supported for models in Unity Catalog. Databricks recommends using the three-level namespace in Unity Catalog to express the environment a model is in, and using aliases to promote models for deployment. See Promote a model across environments for details.

- Webhooks are not supported for models in Unity Catalog. See suggested alternatives in the upgrade guide.

- Some search API fields and operators are not supported for models in Unity Catalog. This can be mitigated by calling the search APIs using supported filters and scanning the results. Following are some examples:

  - The order_by parameter is not supported in the search_model_versions or search_registered_models client APIs.

  - Tag-based filters (tags.mykey = 'myvalue') are not supported for search_model_versions or search_registered_models.

  - Operators other than exact equality (for example, LIKE, ILIKE, !=) are not supported for search_model_versions or search_registered_models.

  - Searching registered models by name (for example, search_registered_models(filter_string="name='main.default.mymodel'")) is not supported. To fetch a particular registered model by name, use get_registered_model.

- Email notifications and comment discussion threads on registered models and model versions are not supported in Unity Catalog.

- The activity log is not supported for models in Unity Catalog. To track activity on models in Unity Catalog, use audit logs.

- search_registered_models might return stale results for models shared through Delta Sharing. To ensure the most recent results, use the Databricks CLI or SDK to list the models in a schema.