Package code for Databricks Model Serving

The Track application versions page shows how to use LoggedModel as a metadata hub that links to external code, such as a Git repository. In some scenarios, you instead need to package your application code directly into the LoggedModel.

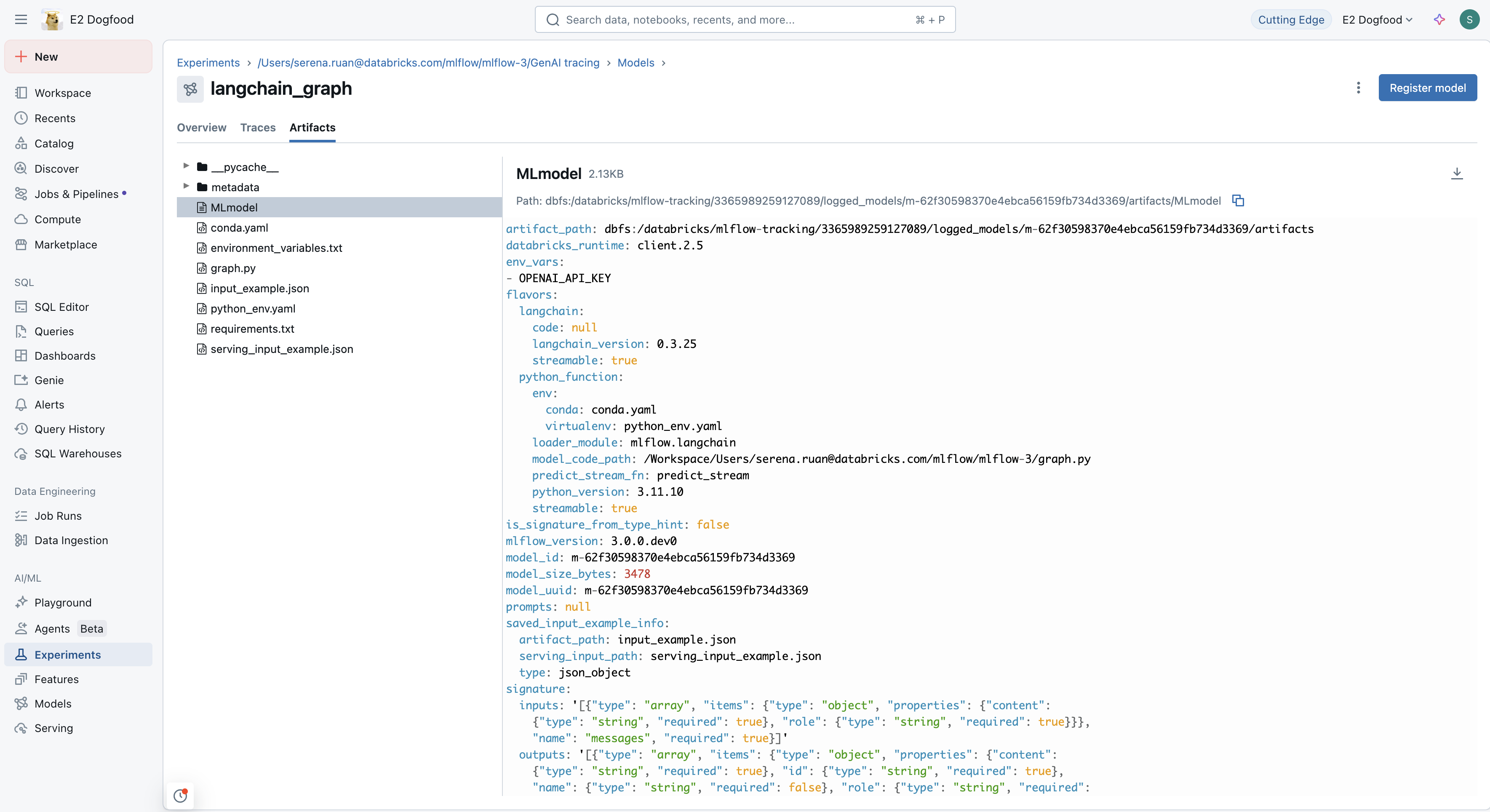

Packaging code directly is particularly useful for deployments to Databricks Model Serving or through Agent Framework, both of which expect self-contained model artifacts.

When to package code directly

Package your code into a LoggedModel when you need:

- Self-contained deployment artifacts that include all code and dependencies.

- Direct deployment to serving platforms without external code dependencies.

Packaging code is an optional deployment step. It is not the default versioning approach for development iterations.

How to package code

MLflow recommends using the ResponsesAgent interface to package your GenAI applications.

See one of the following to get started:

- Quickstart: see Build and deploy AI agents quickstart.

- More details: see Author AI agents in code.

Following either page produces a deployment-ready LoggedModel that behaves the same way as a metadata-only LoggedModel. To link your packaged model version to evaluation results, follow step 6 of the Track application versions page.

Next steps

- Deploy to Model Serving - Deploy your packaged model to production

- Link production traces to app versions - Track deployed versions in production

- Run scorers in production - Monitor deployed model quality