Chat with LLMs and prototype generative AI apps using AI Playground

This feature is in Preview.

You can interact with supported large language models using the AI Playground. The AI Playground is a chat-like environment where you can test, prompt, and compare LLMs.

Requirements

Your Databricks workspace must be in a region that supports at least one of the following features:

- Foundation Model APIs pay-per-token

- Foundation Model APIs provisioned throughput

- External models

Use AI Playground

If Playground is not available in your workspace, reach out to your Databricks account team for more information.

To use the AI Playground:

- Select Playground from the left navigation pane under AI/ML.

- Select the model you want to interact with using the dropdown list on the top left.

- You can do either of the following:

  - Type in your question or prompt.

  - Select a sample AI instruction from those listed in the window.

- Optionally, select + to add an endpoint. This lets you compare the responses of multiple models side by side.
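The side-by-side comparison in the last step amounts to sending one prompt to several endpoints and lining up the answers. A minimal sketch of that idea follows. It assumes nothing about the Playground's internals: `model_a`, `model_b`, and `compare` are hypothetical stand-ins, and in practice each callable would wrap a request to a real serving endpoint.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for model endpoints (assumption: not a Databricks API).
def model_a(prompt: str) -> str:
    return f"[model-a] {prompt.upper()}"

def model_b(prompt: str) -> str:
    return f"[model-b] {prompt[::-1]}"

def compare(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every model and collect the responses by name."""
    return {name: ask(prompt) for name, ask in models.items()}

responses = compare("hello", {"model-a": model_a, "model-b": model_b})

# Print one row per model so the answers can be read next to each other.
for name, text in responses.items():
    print(f"{name:<10} | {text}")
```

Keeping the prompt fixed and varying only the model is what makes the responses comparable.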

Prototype gen AI agents in AI Playground

You can also get started building gen AI agents in AI Playground.

To prototype a tool-calling agent:

-

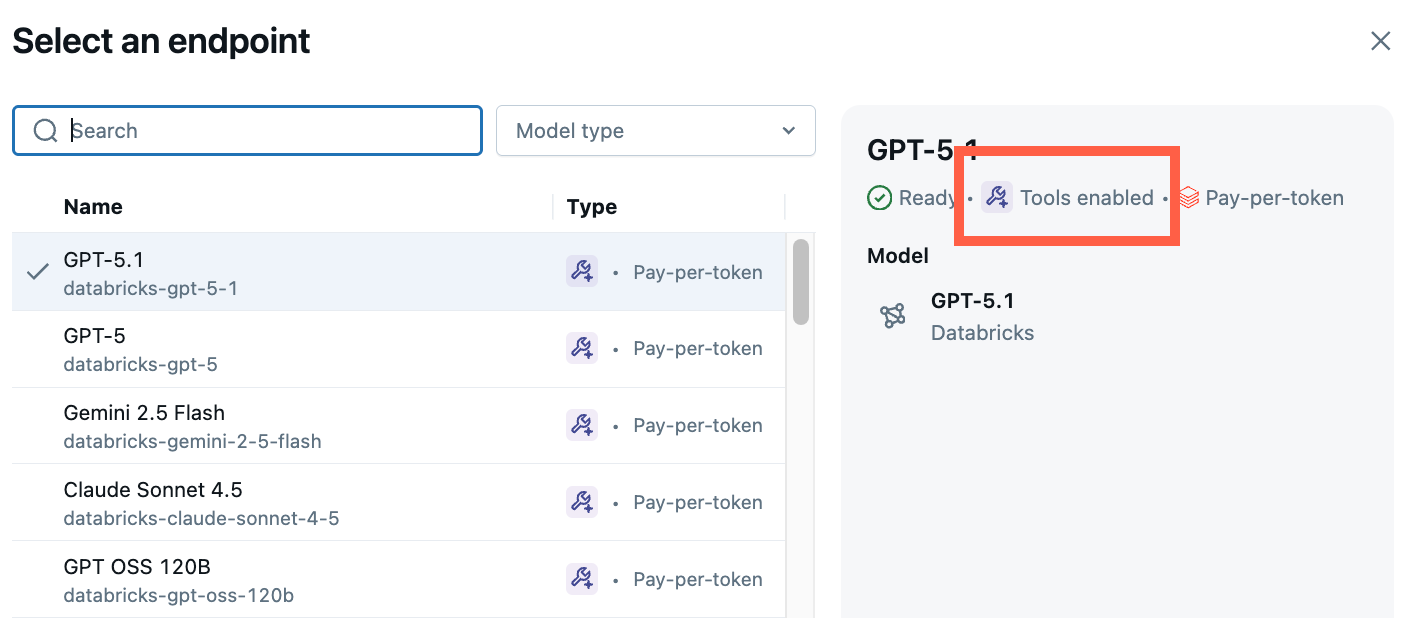

From Playground, select a model with the Tools enabled label.

-

Select Tools and select a tool to give to the agent. For this guide, select the built-in Unity Catalog function,

system.ai.python_exec. This function gives your agent the ability to run arbitrary Python code. -

Chat to test out the current combination of LLM, tools, and system prompt and try variations.
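To make the combination of LLM, tools, and system prompt concrete, here is a minimal sketch of a tool-calling loop. It is an illustration under stated assumptions, not the code that AI Playground runs or exports: `stub_model` is a hypothetical model that requests one tool call and then answers, the message dictionaries use a simplified made-up shape, and the local `python_exec` function only imitates the idea behind system.ai.python_exec by running code with `exec` and capturing its output.

```python
import contextlib
import io
from typing import Callable, Dict, List

def python_exec(code: str) -> str:
    """Local stand-in for a code-execution tool: run code, return captured stdout."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # unsafe outside a sandbox; for illustration only
    return buffer.getvalue().strip()

# Registry of tools the agent is allowed to call, keyed by tool name.
TOOLS: Dict[str, Callable[[str], str]] = {"python_exec": python_exec}

def stub_model(messages: List[dict]) -> dict:
    """Hypothetical model: asks for a tool once, then answers from the tool result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The answer is {last['content']}."}
    return {
        "role": "assistant",
        "tool_call": {"name": "python_exec", "arguments": "print(6 * 7)"},
    }

def run_agent(user_prompt: str,
              system_prompt: str = "You are a helpful assistant.",
              max_turns: int = 5) -> str:
    """Alternate between the model and its tools until the model gives a final answer."""
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]
    for _ in range(max_turns):
        reply = stub_model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # no tool requested: this is the final answer
        result = TOOLS[call["name"]](call["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_turns")

answer = run_agent("What is 6 times 7?")
print(answer)
```

Changing the system prompt, the entries in `TOOLS`, or the model function corresponds to the variations you try while chatting in the Playground.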

Export and deploy AI Playground agents

After prototyping the AI agent in AI Playground, export it to Python notebooks to deploy it to a model serving endpoint.

- Click Export to generate the notebook that defines and deploys the AI agent.

  After you export the agent code, a folder with a driver notebook is saved to your workspace. This driver notebook defines a tool-calling OpenAI Client ResponsesAgent, tests the agent locally, logs it using code-based logging, and then registers and deploys the AI agent using Mosaic AI Agent Framework.

- Address all the TODOs in the notebook.

The exported code might behave differently from your AI Playground session. Databricks recommends running the exported notebooks to iterate and debug further, evaluate agent quality, and then deploy the agent to share with others.