Build and share a chat UI with Databricks Apps (Model Serving)

For new use cases, Databricks recommends deploying agents on Databricks Apps for full control over agent code, server configuration, and deployment workflow. See Author an AI agent and deploy it on Databricks Apps. To migrate an existing agent, see Migrate an agent from Model Serving to Databricks Apps.

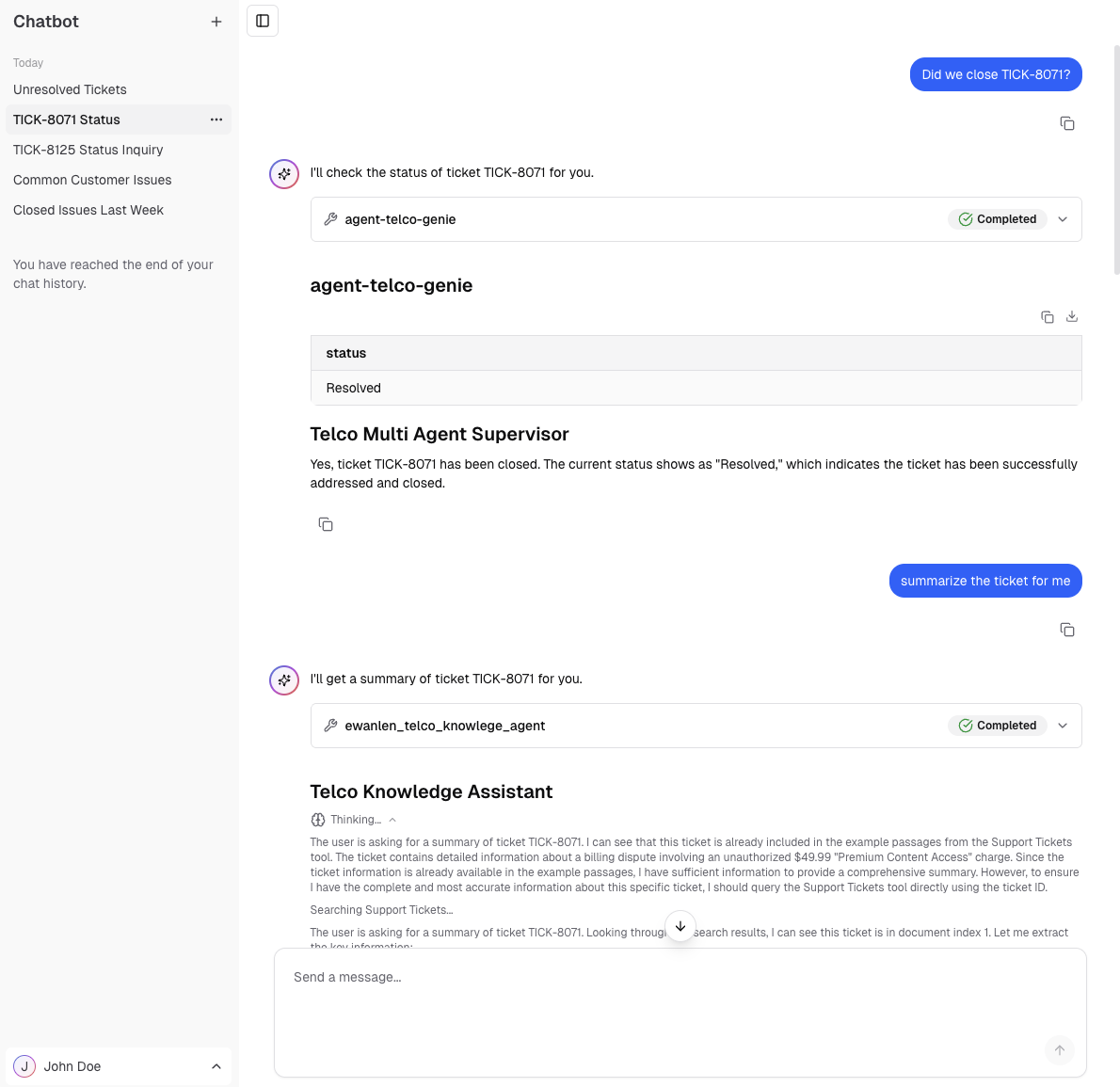

Use Databricks Apps to build a custom chat UI for your deployed agent. This lets you create branded, interactive interfaces that give you full control over how users interact with your agent.

For pre-production testing without custom UI requirements, use the built-in review app. The custom app approach described here is for production deployments that need branding, specialized features, or enhanced user experiences.

Requirements

You must have access to a Databricks workspace that contains one of the following endpoint types:

- Custom agent: Deployed using agents.deploy() with the Chat or Responses task type

- Agent Bricks: Knowledge Assistant or Supervisor Agent

- Foundation model: Endpoint with the Chat task type. See Supported foundation models on Mosaic AI Model Serving.

Note: Agents using legacy schemas aren't supported.

You must have the following development tools:

- npm CLI: Required for local development. See the npm CLI repository on GitHub.

- Databricks CLI: Required for authentication. Set it up as follows:

- Install the Databricks CLI. See the installation guide.

- Set your profile name:

Bash

export DATABRICKS_CONFIG_PROFILE='your_profile_name'

- Configure authentication:

Bash

databricks auth login --profile "$DATABRICKS_CONFIG_PROFILE"
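Before running the app locally, you can check that both tools are on your PATH and that the profile variable is set. The following is a minimal sketch, not part of the template or the Databricks CLI: the function name check_chat_app_prereqs is hypothetical, and it only inspects the local environment without contacting your workspace.

```bash
# Hypothetical helper (not part of the template): checks the local prerequisites
# listed above. It inspects PATH and the environment only; it does not call Databricks.
check_chat_app_prereqs() {
  local missing=0

  # npm installs the template's dependencies and runs the app locally.
  if ! command -v npm >/dev/null 2>&1; then
    echo "npm not found on PATH" >&2
    missing=1
  fi

  # The Databricks CLI provides authentication for local development.
  if ! command -v databricks >/dev/null 2>&1; then
    echo "databricks CLI not found on PATH" >&2
    missing=1
  fi

  # The CLI reads DATABRICKS_CONFIG_PROFILE to choose a configuration profile.
  if [ -z "${DATABRICKS_CONFIG_PROFILE:-}" ]; then
    echo "DATABRICKS_CONFIG_PROFILE is not set" >&2
    missing=1
  fi

  # 0 when every check passes, 1 otherwise.
  return "$missing"
}
```

For example, check_chat_app_prereqs && databricks auth login --profile "$DATABRICKS_CONFIG_PROFILE" only starts the login flow once every check passes.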

Example chat application

The example app, e2e-chatbot-app-next, uses Next.js, React, and the AI SDK to build a production-ready chat interface.

See the project README.md for detailed instructions on how to use the template.

The example app demonstrates the following:

- Streaming output: Displays agent responses as they are generated, with automatic fallback to non-streaming mode

- Tool calls: Renders tool calls for agents authored using Agent Framework best practices

- Databricks Agent and Foundation Model integration: Direct connection to Foundation Models, Databricks Agent serving endpoints, and Agent Bricks

- Databricks authentication: Uses Databricks authentication to identify end users of the chat app and securely manage their conversations.

- Persistent chat history: Stores conversations in Databricks Lakebase (Postgres) with full governance

The previous Streamlit template, e2e-chatbot-app, is still available but lacks the production features of the Next.js app.

Share the app

After testing your app, you can grant other users permission to view it. See Configure permissions for a Databricks app.

Share your app URL with others so they can chat with your agent and provide feedback.

Known limitations

- Image and other multimodal inputs aren't supported.

- This app only supports the recommended authentication methods for Databricks: Databricks CLI auth for local development, and Databricks service principal auth for deployed apps.

- Other authentication mechanisms, such as personal access tokens (PATs) and Azure managed identities, aren't supported.

Host multiple apps on the same database instance

This example creates only one database per app because the app code targets a fixed ai_chatbot schema in the database instance. To host multiple apps on the same instance, do the following:

- Update the database instance name in databricks.yml.

- Update references to ai_chatbot in the codebase to the new schema name you want to use within the existing database instance.

- Run npm run db:generate to regenerate the database migrations.

- Deploy the app.
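The step that renames ai_chatbot throughout the codebase can be scripted. The following is a minimal sketch, not part of the template: the function name rename_chat_schema is hypothetical, and it assumes the default schema name appears literally as ai_chatbot in the project files. It skips node_modules and .git and accepts only simple lowercase identifiers as the new schema name.

```bash
# Hypothetical helper (not part of the template): replaces the default ai_chatbot
# schema name with a new one in every project file that mentions it.
# Usage: rename_chat_schema <project_dir> <new_schema>
rename_chat_schema() {
  local project_dir="$1" new_schema="$2"

  # Reject empty names, names starting with a digit, and names containing
  # anything other than lowercase letters, digits, and underscores, so the
  # value is safe inside the sed expression below.
  case "$new_schema" in
    '' | [0-9]* | *[!a-z0-9_]*)
      echo "invalid schema name: $new_schema" >&2
      return 1
      ;;
  esac

  # List files that mention the default schema, skipping dependencies and git data,
  # then rewrite each one in place. The -i.bak form works with both GNU and BSD sed;
  # the backup file is removed after a successful edit.
  grep -rl --exclude-dir=node_modules --exclude-dir=.git 'ai_chatbot' "$project_dir" |
    while IFS= read -r file; do
      sed -i.bak "s/ai_chatbot/${new_schema}/g" "$file" && rm -f "$file.bak"
    done
}
```

For example, run rename_chat_schema . support_bot from the project root, review the changes with git diff, and then run npm run db:generate before deploying (for example with databricks bundle deploy, if you deploy through the bundle defined in databricks.yml). Because this is a plain text substitution, it also rewrites identifiers that merely contain ai_chatbot, so review every change.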