What is Databricks Clean Rooms?

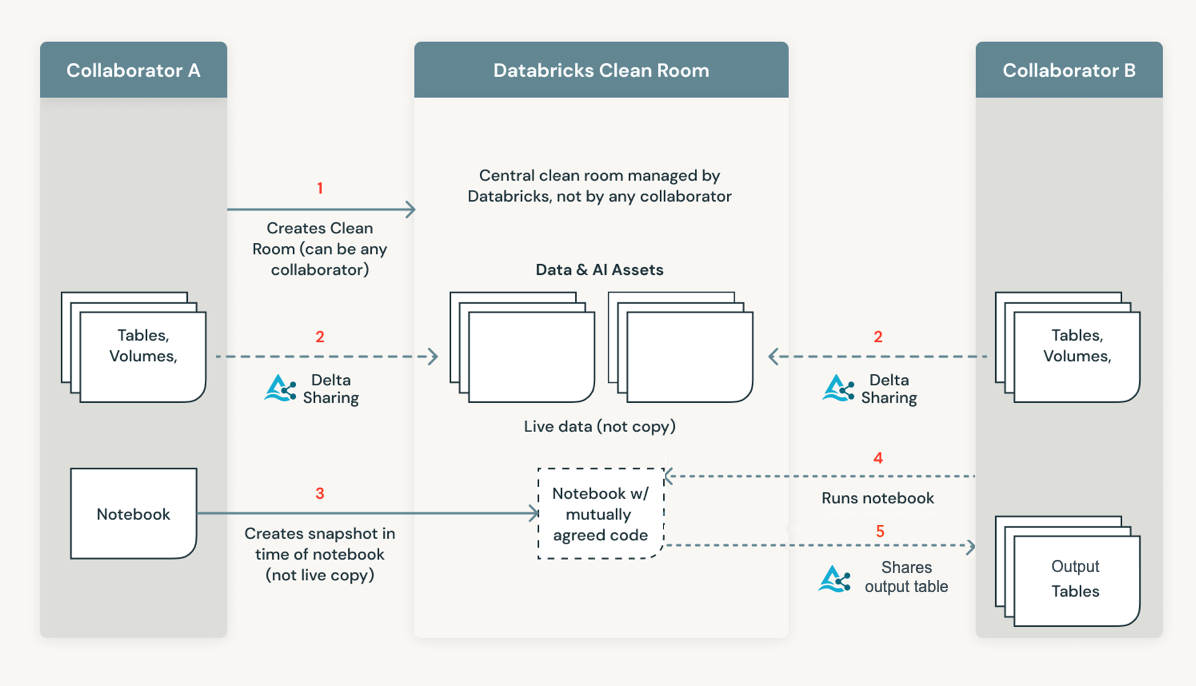

This page introduces Clean Rooms, a Databricks feature that uses Delta Sharing and serverless compute to provide a secure and privacy-protecting environment where multiple parties can work together on sensitive enterprise data without direct access to each other's data.

Requirements

To be eligible to use clean rooms, you must have:

- A workspace that is enabled for serverless compute. See Serverless compute requirements.

- A workspace that is enabled for Unity Catalog. See Enable a workspace for Unity Catalog.

- Delta Sharing enabled for your Unity Catalog metastore. See Enable Delta Sharing on a metastore.

How does Clean Rooms work?

When you create a clean room, you create the following:

- A securable clean room object in your Unity Catalog metastore.

- The “central” clean room, which is an isolated ephemeral environment managed by Databricks.

- A securable clean room object in your collaborator's Unity Catalog metastore.

Tables, volumes (non-tabular data), views, and notebooks that either collaborator shares in the clean room are shared with the central clean room only, using Delta Sharing.

Collaborators cannot see the data in other collaborators’ tables, views, or volumes, but they can see column names and column types, and they can run approved notebook code that operates over the data assets. The notebook code runs in the central clean room. Notebooks can also generate output tables that let your collaborator temporarily save read-only output to their Unity Catalog metastore so they can work with it in their workspaces.

How does Clean Rooms ensure a no-trust environment?

The Databricks Clean Rooms model is “no-trust.” All collaborators in a no-trust clean room have equal privileges, including the creator of the clean room. Clean Rooms is designed to prevent unauthorized code from running and data from being shared without authorization. This is enforced explicitly by requiring all collaborators to approve a notebook before it can be run. You can add a notebook to the clean room yourself, but the other collaborators must approve it before it can run. See Approve a notebook in a clean room.
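To make the approval rule concrete, here is a minimal Python sketch of the check, assuming the behavior described on this page: a collaborator cannot run a notebook they added, and running a notebook that another collaborator added counts as approving it. The function `can_run` and its parameters are hypothetical; they model the rule and are not part of any Databricks API.

```python
from __future__ import annotations


def can_run(owner: str, runner: str, approvals: set[str], collaborators: set[str]) -> bool:
    """Hypothetical model of the no-trust approval rule (not a Databricks API).

    owner:         collaborator who added the notebook
    runner:        collaborator who wants to run it
    approvals:     collaborators who have explicitly approved the notebook
    collaborators: every collaborator in the clean room, including the creator
    """
    if owner not in collaborators or runner not in collaborators:
        return False
    # A collaborator cannot run a notebook they added themselves.
    if runner == owner:
        return False
    # Every collaborator other than the owner must approve. Running the
    # notebook counts as the runner's own (implicit) approval.
    required = collaborators - {owner}
    return required <= (approvals | {runner})
```

With two collaborators, `can_run("acme", "globex", set(), {"acme", "globex"})` returns `True` because Globex's run is its implicit approval. Adding a third collaborator makes the same call return `False` until that collaborator approves.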

Additional safeguards or restrictions

The following safeguards are in place in addition to the explicit notebook approval process mentioned above:

- After a clean room is created, it is locked down so that no new collaborators can join it.

- If any collaborator deletes the clean room, the central clean room becomes void and no user can run clean room tasks.

- Each clean room is limited to ten collaborators.

- You cannot rename a clean room. The clean room name must be unique in every collaborator's metastore so that all collaborators can refer to the same clean room unambiguously.

- When a collaborator adds comments to a clean room securable in their workspace, the comments are not propagated to other collaborators.
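Several of these safeguards can be modeled as invariants on an immutable object. The following Python sketch is illustrative only: `CleanRoom`, `create_clean_room`, and the `metastores` mapping are hypothetical names, and Databricks enforces the real rules server-side. It covers the ten-collaborator limit, the locked collaborator list, the ban on renaming, and the requirement that the name be unused in every collaborator's metastore.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_COLLABORATORS = 10  # per-clean-room limit stated on this page


@dataclass(frozen=True)
class CleanRoom:
    """Hypothetical, immutable model of a clean room (not a Databricks API).

    frozen=True mirrors two safeguards: the name cannot change and the
    collaborator set cannot be replaced after creation.
    """
    name: str
    collaborators: frozenset[str]

    def __post_init__(self) -> None:
        if len(self.collaborators) > MAX_COLLABORATORS:
            raise ValueError(f"a clean room allows at most {MAX_COLLABORATORS} collaborators")


def create_clean_room(name: str, collaborators: set[str],
                      metastores: dict[str, set[str]]) -> CleanRoom:
    """Create a clean room only if its name is unused in every collaborator's metastore.

    metastores maps each collaborator to the clean room names already
    registered in that collaborator's metastore; it is updated in place.
    """
    room = CleanRoom(name, frozenset(collaborators))
    clashes = sorted(c for c in room.collaborators if name in metastores.get(c, set()))
    if clashes:
        raise ValueError(f"name {name!r} already exists in the metastore of {clashes}")
    # Register the same name everywhere so all parties refer to one clean room.
    for c in room.collaborators:
        metastores.setdefault(c, set()).add(name)
    return room
```

Using a frozen dataclass keeps the model honest: any attempt to assign a new `name` or `collaborators` value raises an error instead of silently changing the clean room.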

What is shared with other collaborators?

- Clean room name.

- Cloud and region of the central clean room.

- Your organization name (which can be any name you choose).

- Your clean room sharing identifier (global metastore ID + workspace ID + user email address).

- Aliases of shared tables, views, or volumes.

- Column metadata (column name or alias and type).

- Notebooks (read-only).

- Output tables (read-only, temporary).

- Clean room events system table.

- Run history, including:

  - The name of the notebook that was run.
  - The collaborator (not the individual user) that ran the notebook.
  - The state of the notebook run.
  - The start time of the notebook run.
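The split between data and metadata in the list above can be sketched in Python. `SharedTable` and `collaborator_view` are hypothetical names used only for illustration; in Databricks this separation is enforced by the platform, not by user code. The sketch returns a table's alias and column names and types while never exposing its rows.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SharedTable:
    """Hypothetical model of a table one collaborator adds to a clean room."""
    alias: str                      # name other collaborators see
    columns: list[tuple[str, str]]  # (column name, column type)
    rows: list[tuple]               # the data itself; never exposed to collaborators


def collaborator_view(table: SharedTable) -> dict:
    """Return only the metadata this page lists as visible to other collaborators."""
    return {
        "alias": table.alias,
        "columns": [{"name": name, "type": col_type} for name, col_type in table.columns],
    }
```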

What is shared with the central clean room?

- Everything that is listed in the previous section.

- Read-only tables, volumes, views, and notebooks. Tables, views, and volumes are registered in the central clean room's metastore with any supplied aliases. Data assets are shared throughout the lifecycle of the clean room.

Clean Rooms FAQ

The following are frequently asked questions about clean rooms.

How is my data managed in a clean room?

The central clean room is managed by Databricks. In the central clean room:

- Neither party has admin privileges.

- Only metadata is visible to all parties.

- Each party can add data to the central clean room.

- Clean rooms use Delta Sharing to share data securely with the central clean room, not directly between participants. See What is Delta Sharing?

How is my data kept private?

Central clean rooms run in an isolated, Databricks-managed serverless compute plane hosted in a cloud provider region that the clean room creator chooses.

Clean rooms provide:

- Code approval: The clean room creator and collaborators can share tables and volumes with the central clean room, but they can run only notebooks that another collaborator added. You can review the code that another collaborator added before approving it. If you run a notebook that another collaborator added, you implicitly approve its code.

- Version control: Clean room notebooks are version-controlled so that all parties can run only fully approved notebooks. Only the most recent version of a notebook can be run. You can use the clean room events system table to see which version of a notebook was run and to monitor changes.

- Restricted access: When you create a clean room, you can use serverless egress control to manage outbound network connections. If you restrict access from your clean room, access to unauthorized storage is blocked. See What is serverless egress control?
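The code-approval and version-control rules above interact: an approval is only meaningful for the code it was given for. The Python sketch below models that interaction under a stated assumption, namely that each approval applies to one specific version, so adding a new version requires fresh approval. `VersionedNotebook` and its methods are hypothetical and not a Databricks API; the sketch does not model the system table.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VersionedNotebook:
    """Hypothetical model of a version-controlled clean room notebook (not a Databricks API)."""
    owner: str
    versions: list[str] = field(default_factory=list)        # notebook source, oldest first
    approvals: list[set[str]] = field(default_factory=list)  # approvers, one set per version

    def add_version(self, source: str) -> int:
        """Store a new version with no approvals and return its index."""
        self.versions.append(source)
        self.approvals.append(set())
        return len(self.versions) - 1

    def approve(self, collaborator: str, version: int) -> None:
        """Record an explicit approval of one specific version."""
        if collaborator == self.owner:
            raise PermissionError("owners cannot approve their own notebook")
        self.approvals[version].add(collaborator)

    def run(self, runner: str, version: int, collaborators: set[str]) -> None:
        """Allow the run only under the rules listed above; raise otherwise."""
        if not self.versions or version != len(self.versions) - 1:
            raise PermissionError("only the most recent version can be run")
        if runner == self.owner or runner not in collaborators:
            raise PermissionError("runner must be a collaborator other than the owner")
        missing = collaborators - {self.owner, runner} - self.approvals[version]
        if missing:
            raise PermissionError(f"waiting for approval from {sorted(missing)}")
        # Running a notebook added by another collaborator implicitly approves it.
        self.approvals[version].add(runner)
```

Keying approvals by version index means that uploading new code can never inherit approvals granted to an earlier version, which is the property the version-control bullet describes.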

To learn more about security and the serverless compute plane, see Serverless compute plane networking.

How are actions recorded?

Clean room actions taken by you or your collaborators are recorded in the clean room events system table. These records include detailed metadata about the specific action taken. See Clean room events system table reference.

Clean room actions are also recorded in your account's audit log under the clean-room service. See Clean Rooms events.

When a collaborator modifies permissions on a clean room securable within their metastore, these changes are recorded in their audit logs under the unityCatalog service.

How does billing work?

To learn more about Databricks Clean Rooms pricing, see the Databricks Clean Rooms pricing page.

Limitations

The following limitations apply:

- Service credential Scala libraries are not included in the required Databricks Runtime version.

- If you use Databricks-managed default storage for tables added to your clean room, you cannot use table partitions.

Resource quotas

Databricks enforces resource quotas on all clean room securable objects. These quotas are listed in Resource limits. If you expect to exceed these resource limits, contact your Databricks account team.

You can monitor your quota usage using the Unity Catalog resource quotas APIs. See Monitor your usage of Unity Catalog resource quotas.
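After you retrieve quota records from the Unity Catalog resource quotas APIs, a small helper can flag quotas that are approaching their limits so that you can contact your account team early. This is a sketch under stated assumptions: the record keys `quota_name`, `quota_count`, and `quota_limit` are assumed to match the API response, the 80% default threshold is an arbitrary example, and the API call itself is omitted.

```python
from __future__ import annotations


def quotas_near_limit(quotas: list[dict], threshold: float = 0.8) -> list[str]:
    """Return the names of quotas whose usage is at or above `threshold` of the limit.

    Each record is assumed to carry `quota_name`, `quota_count`, and
    `quota_limit` keys; records with a missing or zero limit are skipped.
    """
    flagged = []
    for quota in quotas:
        limit = quota.get("quota_limit")
        if not limit:
            continue
        if quota["quota_count"] / limit >= threshold:
            flagged.append(quota["quota_name"])
    return flagged
```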