Databricks AI assistive features trust and safety

Databricks understands the importance of your data and the trust you place in us when you use our platform and Databricks AI assistive features. Databricks is committed to the highest standards of data protection and has implemented rigorous measures to ensure that information you submit to Databricks AI assistive features is protected.

- Your data remains confidential.

  - Databricks does not train generative foundation models with data, prompts, or responses that you submit to these features, and Databricks does not use this data to generate suggestions displayed for other customers.

  - Our model partners do not retain data you submit through these features, even for abuse monitoring. Our partner-powered AI assistive features use zero data retention endpoints from our model partners.

- Protection from harmful output. When using Azure OpenAI, Databricks also uses Azure OpenAI content filtering to protect users from harmful content. When using Anthropic models, Databricks relies on Anthropic’s built-in safety mechanisms and additional hardening against harmful outputs, as described in Anthropic’s safety documentation. In addition, Databricks has evaluated these protections with thousands of simulated user interactions to verify that they are effective against harmful content, jailbreaks, insecure code generation, and use of third-party copyrighted content.

- Databricks uses only the data necessary to provide the service. Data is sent only when you interact with Databricks AI assistive features. Databricks sends your prompt, relevant table metadata and values, errors, and input code or queries to help return more relevant results.

- Data is protected in transit and at rest. All traffic between Databricks and model partners is encrypted in transit with industry-standard TLS. Any data stored within a Databricks workspace is encrypted with AES-256.

- Databricks offers data residency controls. Databricks AI assistive features are Designated Services and comply with data residency boundaries. For more details, see Databricks Geos: Data residency and Databricks Designated Services.

To learn about Databricks Assistant privacy, see Privacy and security FAQs.

Privacy and security FAQs

What services and models do partner-powered AI assistive features use?

If the partner-powered AI features setting is enabled, Databricks AI assistive features use models hosted by Azure OpenAI Service or Anthropic on Databricks. If you disable the partner-powered AI features setting, some AI assistive features may use a Databricks-hosted model. For more information, see Partner-powered AI features.

What data is sent to the models?

Databricks sends only the data needed to provide the service, which may differ for each feature.

Databricks Assistant sends your prompt (for example, your question or code) as well as relevant metadata to the model powering the feature on each API request. This helps return more relevant results for your data. Examples include:

- Code and queries in the current notebook cell or SQL editor tab

- Table and column names and descriptions

- Previous questions

- Favorite tables

Databricks Assistant Agent Mode, now in Beta, can also analyze cell outputs and read data samples from tables, similar to other coding agents in the industry.

Genie uses your prompt, relevant table metadata and values, errors, and input code or queries when generating a response.

To generate responses, Genie uses the following:

- The natural language prompt submitted by the user

- Table names and descriptions

- Relevant values

- General instructions

- Example SQL queries

- SQL functions

For AI-generated comments, Databricks sends the following metadata to the models with each API request:

- Catalog (catalog name, current comment, catalog type)

- Schema (catalog name, schema name, current comment)

- Table (catalog name, schema name, table name, current comment)

- Function (catalog name, schema name, function name, current comment, parameters, definition)

- Model (catalog name, schema name, model name, current comment, aliases)

- Volume (catalog name, schema name, volume name, current comment)

- Column (column name, type, whether it is a primary key, current column comment)
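To make the AI-generated comments list concrete, the following Python sketch models the table- and column-level fields above as plain data classes and serializes them into a request payload. The names `TableMetadata`, `ColumnMetadata`, and `build_comment_request`, and the choice to nest columns under their table, are illustrative assumptions rather than the actual Databricks request format. What it shows is that the payload carries names, types, and comments, but no row values.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class ColumnMetadata:
    """Per-column fields from the list above (hypothetical shape)."""
    name: str
    type: str
    is_primary_key: bool
    comment: Optional[str] = None


@dataclass
class TableMetadata:
    """Table-level fields from the list above (hypothetical shape)."""
    catalog_name: str
    schema_name: str
    table_name: str
    comment: Optional[str] = None
    columns: List[ColumnMetadata] = field(default_factory=list)


def build_comment_request(table: TableMetadata) -> dict:
    # Serialize metadata only; no row values are part of this structure.
    return asdict(table)


orders = TableMetadata(
    catalog_name="main",
    schema_name="sales",
    table_name="orders",
    columns=[
        ColumnMetadata("order_id", "BIGINT", is_primary_key=True),
        ColumnMetadata("amount", "DECIMAL(10,2)", is_primary_key=False, comment="Order total"),
    ],
)
payload = build_comment_request(orders)
print(sorted(payload.keys()))  # ['catalog_name', 'columns', 'comment', 'schema_name', 'table_name']
```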

Do partner model providers store my data?

No. When using partner models through Databricks, partner model providers do not store prompts or responses.

Where are responses from AI assistive features stored?

Genie responses and approved AI-generated comments are stored in the Databricks control plane database, which is encrypted with AES-256.

Databricks Assistant chat history is stored in the same place as other notebook content.

Does the data sent to the models respect the user's Unity Catalog permissions?

Yes. All data sent to AI assistive feature models respects Unity Catalog permissions, so data that a user does not have access to is never sent to the models.
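A minimal sketch of the filtering idea, assuming a hypothetical `build_model_context` helper and a precomputed set of tables the user can read. In Databricks, Unity Catalog itself enforces permissions; the `readable_tables` set here only stands in for that check.

```python
from typing import Dict, Set


def build_model_context(
    table_descriptions: Dict[str, str], readable_tables: Set[str]
) -> Dict[str, str]:
    """Keep only metadata for tables the user can read.

    `readable_tables` is a stand-in for a Unity Catalog permission check;
    anything outside it is dropped before a prompt is assembled.
    """
    return {
        name: description
        for name, description in table_descriptions.items()
        if name in readable_tables
    }


candidates = {
    "main.sales.orders": "One row per customer order",
    "main.hr.salaries": "Employee compensation",
}
user_can_read = {"main.sales.orders"}

context = build_model_context(candidates, user_can_read)
print(context)  # {'main.sales.orders': 'One row per customer order'}
```

Filtering before the prompt is built, rather than after the model responds, is what keeps inaccessible metadata out of the request entirely.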

Can other users see my chat history with the Assistant or Genie?

Interactions with the Assistant are visible only to the user who initiated them.

Genie space managers can see other users’ messages, but not their query results.

Do Genie or Databricks Assistant execute code?

Genie is designed with read-only access to customer data, so it can only generate and run read-only SQL queries.

With Agent Mode, the Assistant can run code in the notebook and SQL editor. By default, the Assistant asks for your confirmation before it executes code. You can choose to confirm, always allow execution in the current Databricks Assistant thread, or always allow execution. Other Databricks Assistant modes do not automatically run code on your behalf.
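The three confirmation choices can be modeled as a small approval gate. This is a hypothetical sketch, not the Assistant's implementation: the `ExecutionGate` class and `Approval` enum are illustrative names, and the `DENY` option is an assumption representing a user who declines.

```python
from enum import Enum
from typing import Set


class Approval(Enum):
    CONFIRM_ONCE = "confirm"          # run this execution only
    ALLOW_IN_THREAD = "allow_thread"  # stop asking in the current thread
    ALWAYS_ALLOW = "always"           # stop asking in every thread
    DENY = "deny"                     # assumption: the user declines


class ExecutionGate:
    """Hypothetical tracker for when the Assistant must ask before running code."""

    def __init__(self) -> None:
        self.always_allowed = False
        self.allowed_threads: Set[str] = set()

    def needs_confirmation(self, thread_id: str) -> bool:
        return not (self.always_allowed or thread_id in self.allowed_threads)

    def record(self, thread_id: str, choice: Approval) -> bool:
        """Store the user's choice; return True if the pending code may run."""
        if choice is Approval.ALWAYS_ALLOW:
            self.always_allowed = True
        elif choice is Approval.ALLOW_IN_THREAD:
            self.allowed_threads.add(thread_id)
        return choice is not Approval.DENY


gate = ExecutionGate()
first = gate.needs_confirmation("thread-1")              # default: ask first
gate.record("thread-1", Approval.CONFIRM_ONCE)
still_asks = gate.needs_confirmation("thread-1")         # one-time approval only
gate.record("thread-1", Approval.ALLOW_IN_THREAD)
thread_ok = not gate.needs_confirmation("thread-1")      # no prompt in thread-1
other_thread_asks = gate.needs_confirmation("thread-2")  # other threads still ask
```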

AI models can make mistakes, misunderstand intent, and hallucinate or give incorrect answers. Review and test AI-generated code before you run it.

Has Databricks done any assessment to evaluate the accuracy and appropriateness of the responses from AI assistive features?

Yes. Databricks has extensively tested all of its AI assistive features against their expected use cases, using simulated user inputs, to improve the accuracy and appropriateness of responses. That said, generative AI is an emerging technology, and AI assistive features may provide inaccurate or inappropriate responses.

How is my traffic routed through Geos?

Databricks AI assistive features are Designated Services that use Databricks Geos to manage data residency when processing customer content. Traffic routing depends on your region and on whether cross-Geo processing is enabled, that is, whether the Enforce data processing within workspace Geography for Designated Services setting is disabled.

How do AI assistive features work with Databricks-hosted models?

When Databricks AI assistive features use Databricks-hosted models, they use OpenAI GPT OSS or other models that are available for commercial use. See information about licensing and use of generative AI models.

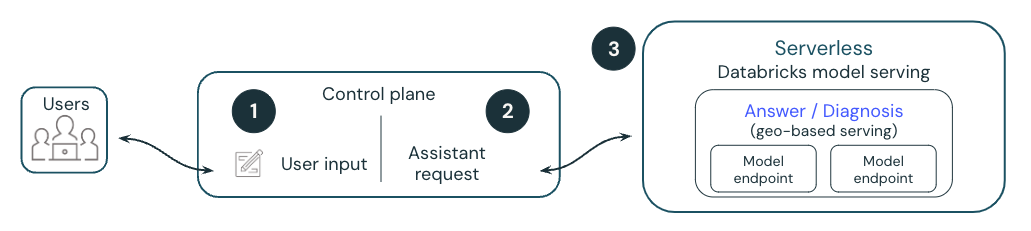

The following diagram provides an overview of how a Databricks-hosted model powers Databricks AI-powered features such as Quick Fix.

- A user executes a notebook cell, which results in an error.

- Databricks attaches metadata to a request and sends it to a Databricks-hosted large language model (LLM). All data is encrypted at rest, and customers can use a customer-managed key (CMK).

- The Databricks-hosted model responds with suggested code edits to fix the error, and these suggestions are displayed to the user.
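The three steps can be sketched end to end in Python. Everything here is hypothetical: `run_cell` simulates a failing notebook cell, `QuickFixRequest` is an assumed request shape, and `fake_hosted_model` is a trivial rule-based stand-in for the Databricks-hosted LLM, not a real model call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class QuickFixRequest:
    """Hypothetical request shape: failing code, its error, and attached metadata."""
    cell_code: str
    error_message: str
    table_metadata: Dict[str, List[str]] = field(default_factory=dict)


def run_cell(code: str) -> Optional[str]:
    """Step 1: execute the cell; return an error string if it fails, else None."""
    try:
        exec(code, {})
        return None
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"


def fake_hosted_model(req: QuickFixRequest) -> str:
    """Stand-in for the hosted LLM: a fixed rule, not a real model."""
    if req.error_message.startswith("NameError") and "pd." in req.cell_code:
        return "import pandas as pd\n" + req.cell_code
    return req.cell_code


def request_quick_fix(
    req: QuickFixRequest, model: Callable[[QuickFixRequest], str]
) -> str:
    """Steps 2 and 3: send the request to the model and return its suggested edit."""
    return model(req)


cell = "df = pd.DataFrame()"
error = run_cell(cell)
if error is not None:
    suggestion = request_quick_fix(QuickFixRequest(cell, error), fake_hosted_model)
    print(suggestion)  # shown to the user for review; not executed here
```

The suggestion is only displayed, never run automatically, which matches the advice to review and test AI-generated code before running it.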