Get started: Query LLMs and prototype AI agents with no code

This 5-minute no-code tutorial introduces generative AI on Databricks. You will use the AI Playground to do the following:

- Query large language models (LLMs) and compare results side-by-side

- Prototype a tool-calling AI agent

- Export your agent to code

- Optional: Prototype a question-answer chatbot using retrieval-augmented generation (RAG)

Before you begin

Ensure your workspace can access the following:

- Unity Catalog.

- Agent Framework. See Features with limited regional availability.

Step 1: Query LLMs using AI Playground

Use the AI Playground to query LLMs in a chat interface.

- In your workspace, select Playground.

- Type a question such as, "What is RAG?"

Add a new LLM to compare responses side-by-side:

- In the upper-right, select + to add a model for comparison.

- In the new pane, select a different model using the dropdown selector.

- Select the Sync checkboxes to synchronize the queries.

- Try a new prompt, such as, "What is a compound AI system?" to see the two responses side-by-side.

Keep testing and comparing different LLMs to help you decide on the best one to use to build an AI agent.
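If you later want to repeat a side-by-side comparison outside the Playground, the following is a minimal sketch of the idea, not the Playground's own implementation. It assumes each model is reachable through a chat-style HTTP serving endpoint that accepts a `messages` list. The workspace URL, the endpoint names, the token placeholder, and the `build_chat_request` and `build_comparison` helpers are all hypothetical. The code only builds the requests and never sends them.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with your own workspace URL and endpoint names.
WORKSPACE_URL = "https://example-workspace.cloud.databricks.com"
ENDPOINTS = ["model-a", "model-b"]


def build_chat_request(endpoint: str, prompt: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a chat-style request for one serving endpoint."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=f"{WORKSPACE_URL}/serving-endpoints/{endpoint}/invocations",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def build_comparison(prompt: str, token: str) -> dict:
    """Prepare the same prompt for every endpoint, similar in spirit to the Sync checkboxes."""
    return {name: build_chat_request(name, prompt, token) for name in ENDPOINTS}


requests_by_model = build_comparison("What is a compound AI system?", token="<your-token>")
for name, req in requests_by_model.items():
    print(name, "->", req.full_url)
```

Sending the same prompt to each model keeps the comparison fair: the only thing that varies between the two responses is the model.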

Step 2: Prototype a tool-calling AI agent

Tools allow LLMs to do more than generate language. Tools can query external data, run code, and take other actions. AI Playground gives you a no-code option to prototype tool-calling agents:

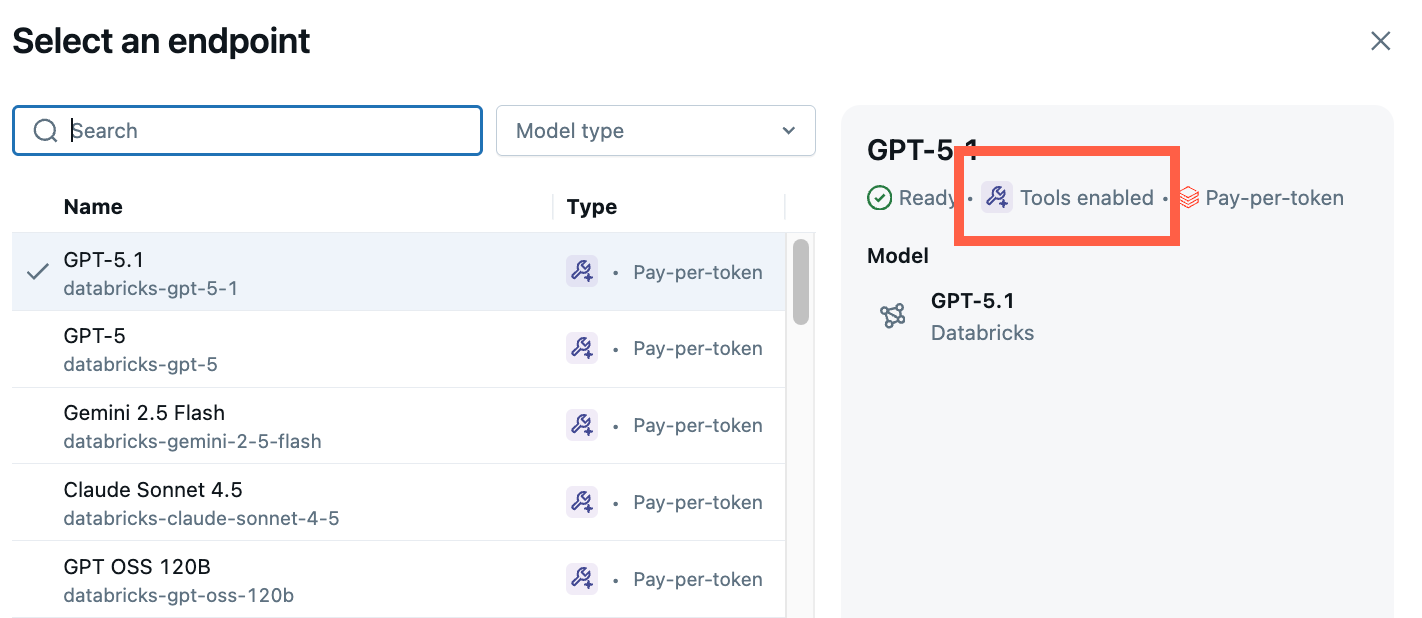

- From Playground, choose a model labelled Tools enabled.

-

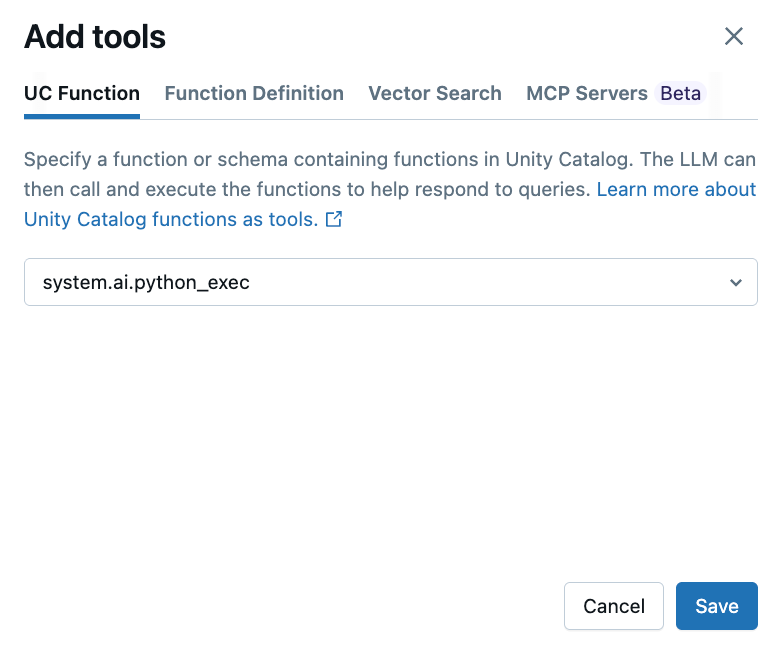

Select Tools > + Add tool and select the built-in Unity Catalog function,

system.ai.python_exec.This function lets your agent run arbitrary Python code.

Other tool options include:

- UC Function: Select a Unity Catalog function for your agent to use.

- Function definition: Define a custom function for your agent to call.

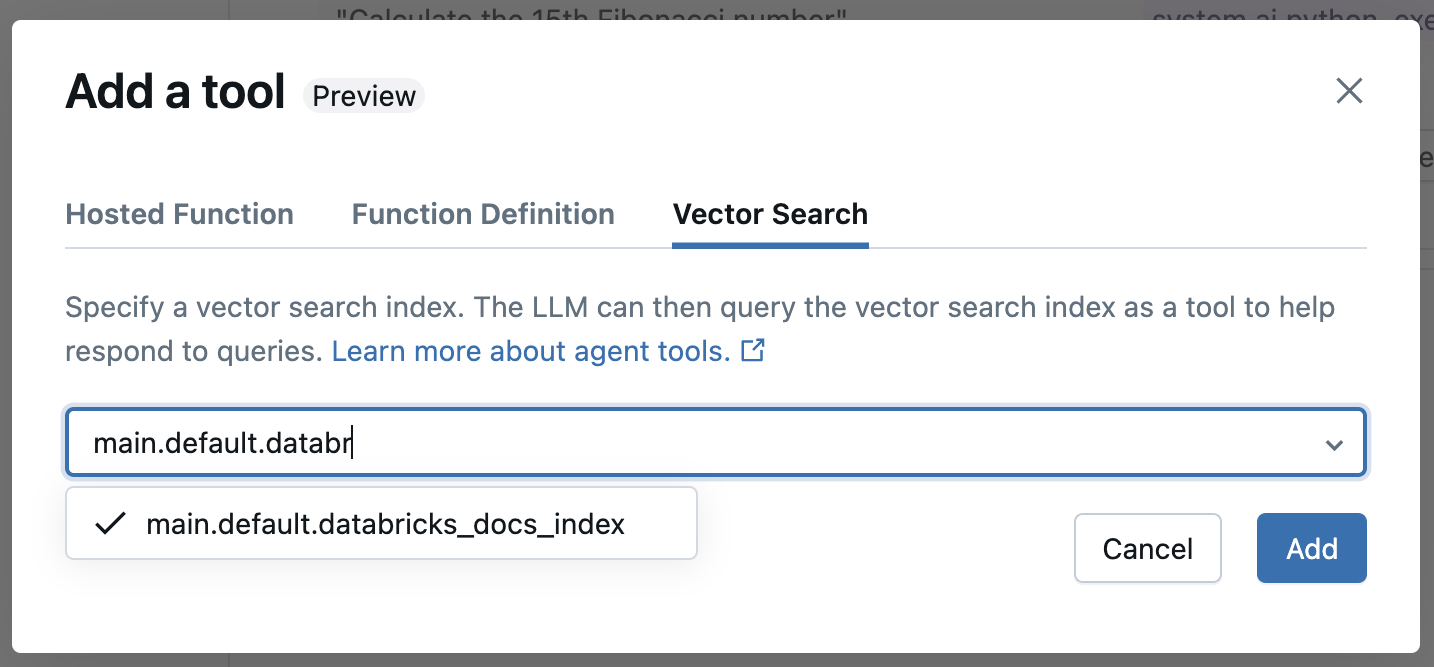

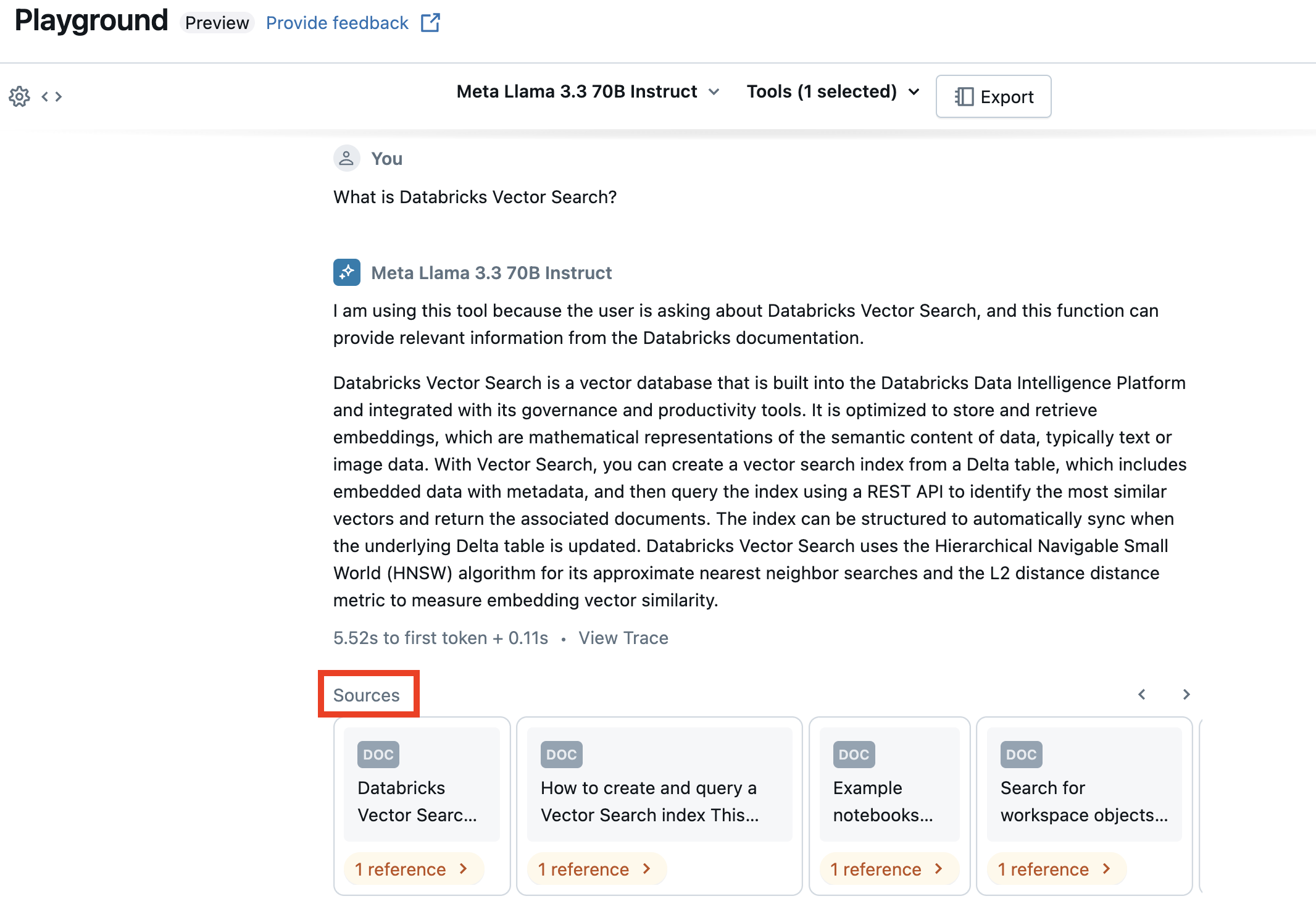

- Vector Search: Specify a vector search index. If your agent uses a vector search index, its response will cite the sources used.

- MCP: Specify MCP servers to use managed Databricks MCP servers or external MCP servers.

-

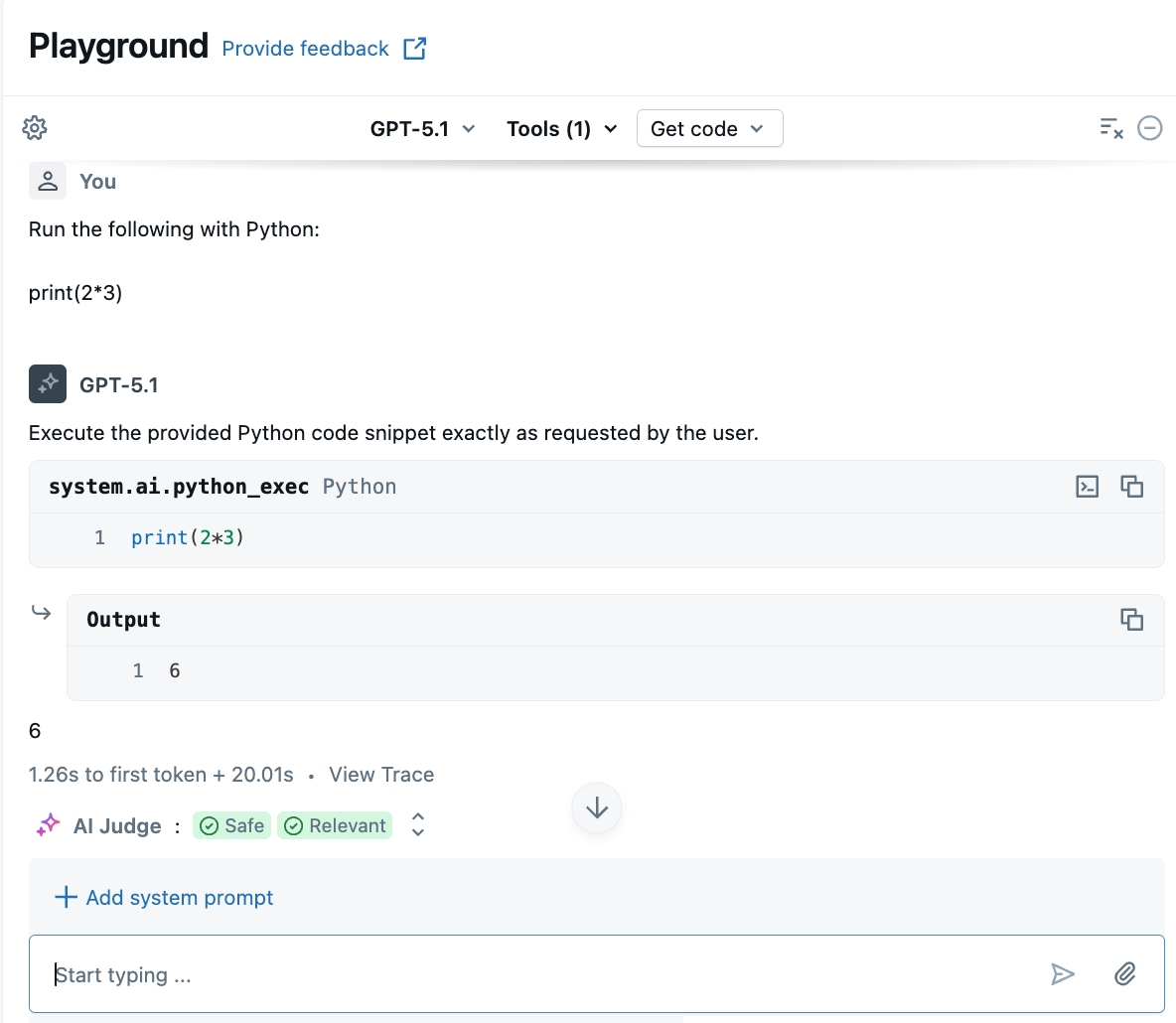

Ask a question that involves generating or running Python code. You can try different variations on your prompt phrasing. If you add multiple tools, the LLM selects the appropriate tool to generate a response.
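To make the tool-calling flow more concrete, here is a minimal sketch of what happens conceptually when a model picks a tool. It is not how AI Playground is implemented. The tool specification follows the JSON-schema style used by common function-calling chat APIs, and the `add_numbers` tool, the `fake_model` stand-in, and the `run_agent_turn` helper are all hypothetical names introduced for illustration.

```python
import json

# Tool specification in the JSON-schema style used by common function-calling
# chat APIs. The tool name and schema are hypothetical examples.
ADD_TOOL_SPEC = {
    "type": "function",
    "function": {
        "name": "add_numbers",
        "description": "Add two numbers and return the sum.",
        "parameters": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
    },
}


def add_numbers(a: float, b: float) -> float:
    """The Python function that backs the tool specification above."""
    return a + b


# Registry that maps tool names to Python callables.
TOOLS = {"add_numbers": add_numbers}


def fake_model(prompt: str) -> dict:
    """Stand-in for an LLM. It always requests add_numbers with fixed arguments.

    A real model would choose the tool and its arguments from the prompt
    and the tool specifications it was given.
    """
    return {"tool_call": {"name": "add_numbers", "arguments": json.dumps({"a": 2, "b": 3})}}


def run_agent_turn(prompt: str) -> str:
    """Ask the model, run the requested tool if there is one, and report the result."""
    response = fake_model(prompt)
    call = response.get("tool_call")
    if call is None:
        # No tool requested: return the model's plain-text answer.
        return response.get("content", "")
    func = TOOLS[call["name"]]
    args = json.loads(call["arguments"])
    result = func(**args)
    return f"{call['name']}({args}) = {result}"


print(run_agent_turn("What is 2 + 3?"))
```

The registry lookup is the part that generalizes: with several tools registered, the model's response names the tool to run, and the agent only dispatches the call.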

Step 3: Export your agent to code

After testing your agent in AI Playground, click Get code > Create agent notebook to export your agent to a Python notebook.

The Python notebook contains code that defines the agent and deploys it to a model serving endpoint.

The exported notebook currently uses a legacy agent authoring workflow that deploys the agent to Model Serving. Databricks recommends authoring agents using Databricks Apps instead. See Author an AI agent and deploy it on Databricks Apps.

Optional: Prototype a RAG question-answering bot

If you have a vector search index set up in your workspace, you can prototype a question-answer bot. This type of agent uses documents in a vector search index to answer questions based on those documents.

- Click Tools > + Add tool. Then, select your Vector Search index.

- Ask a question related to your documents. The agent can use the vector index to look up relevant information and will cite any documents used in its answer.

To set up a vector search index, see Create a vector search index.
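The retrieve-then-cite pattern behind this kind of bot can be sketched in a few lines. This is a toy illustration under loud assumptions: it uses an in-memory dictionary and bag-of-words cosine similarity in place of a real vector search index and embedding model, and the documents, IDs, and helper names (`embed`, `retrieve`, `answer_with_citations`) are made up for the example.

```python
import math
from collections import Counter

# Toy in-memory "index". Document IDs and text are invented for illustration.
DOCS = {
    "doc-1": "Unity Catalog governs data and AI assets",
    "doc-2": "Vector search finds documents similar to a query",
    "doc-3": "Model serving deploys models behind an endpoint",
}


def embed(text: str) -> Counter:
    """Bag-of-words vector. A real index would use a learned embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 1) -> list:
    """Return the IDs of the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(DOCS, key=lambda doc_id: cosine(q, embed(DOCS[doc_id])), reverse=True)
    return ranked[:k]


def answer_with_citations(query: str) -> dict:
    """Collect retrieved text as context and keep the source IDs for citation."""
    sources = retrieve(query)
    context = " ".join(DOCS[s] for s in sources)
    return {"context": context, "sources": sources}


result = answer_with_citations("how does vector search find similar documents")
print(result["sources"])
```

In an actual agent, the retrieved context would be passed to the LLM along with the question, and the source IDs would be surfaced as citations in the response.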

Export and deploy AI Playground agents

After prototyping the AI agent in AI Playground, export it to Python notebooks to deploy it to a model serving endpoint.

- Click Get code > Create agent notebook to generate the notebook that defines and deploys the AI agent.

After you export the agent code, a folder containing a driver notebook is saved to your workspace. This driver notebook defines a tool-calling ResponsesAgent, tests the agent locally, logs it using code-based logging, and then registers and deploys it using Mosaic AI Agent Framework.

- Address all the TODOs in the notebook.

The exported code might behave differently from your AI Playground session. Databricks recommends running the exported notebooks to iterate and debug further, evaluate agent quality, and then deploy the agent to share with others.

Next steps

To author agents using a code-first approach, see Author an AI agent and deploy it on Databricks Apps.