Use modelos de fundação

Neste artigo, você aprenderá quais opções estão disponíveis para escrever solicitações de consulta para modelos básicos e como enviá-las ao seu modelo em serviço endpoint. É possível consultar modelos básicos hospedados pela Databricks e modelos básicos hospedados fora da Databricks.

Para solicitações de consulta de modelos tradicionais ML ou Python, consulte Ponto de extremidade de serviço de consulta para modelos personalizados.

O Mosaic AI Model Serving é compatível com APIs de modelos de fundação e modelos externos para acessar modelos de fundação. A servindo modelo usa um site unificado compatível com OpenAI API e SDK para consultá-los. Isso possibilita experimentar e personalizar modelos básicos para produção em nuvens e fornecedores compatíveis.

Opções de consulta

Mosaic AI Model Serving oferece as seguintes opções para enviar solicitações de consulta ao ponto de extremidade que atende aos modelos básicos:

Método | Detalhes |

|---|---|

Cliente OpenAI | Consultar um modelo hospedado por um Mosaic AI Model Serving endpoint usando o cliente OpenAI. Especifique o nome do modelo de serviço endpoint como a entrada |

Funções de IA | Invoque a inferência do modelo diretamente do SQL usando a função |

UI de serviço | Selecione Query endpoint (Ponto de extremidade de consulta ) na página Serving endpoint (Ponto de extremidade de atendimento ). Insira os dados de entrada do modelo no formato JSON e clique em Send Request (Enviar solicitação ). Se o modelo tiver um registro de exemplo de entrada, use Show Example para carregá-lo. |

API REST | Chamar e consultar o modelo usando a API REST. Consulte POST /serving-endpoint/{name}/invocations para obter detalhes. Para solicitações de pontuação para o endpoint que atende a vários modelos, consulte Consultar modelos individuais em endpoint. |

SDK de implantações do MLflow | Use a função predict() do MLflow Deployments SDK para consultar o modelo. |

SDK Python da Databricks | O Databricks Python SDK é uma camada sobre a API REST. Ele lida com detalhes de baixo nível, como autenticação, facilitando a interação com os modelos. |

Requisitos

-

Um Databricks workspace em uma região com suporte.

-

Para enviar uma solicitação de pontuação por meio do cliente OpenAI, REST API ou MLflow Deployment SDK, o senhor deve ter um token Databricks API .

Como prática recomendada de segurança para cenários de produção, a Databricks recomenda que o senhor use tokens OAuth máquina a máquina para autenticação durante a produção.

Para testes e desenvolvimento, o site Databricks recomenda o uso de tokens de acesso pessoal pertencentes à entidade de serviço em vez de usuários do site workspace. Para criar tokens o site para uma entidade de serviço, consulte gerenciar tokens para uma entidade de serviço.

Instalar pacote

Depois de selecionar um método de consulta, o senhor deve primeiro instalar o pacote apropriado para o seu clustering.

- OpenAI client

- REST API

- MLflow Deployments SDK

- Databricks Python SDK

Para usar o cliente OpenAI, o pacote databricks-openai precisa ser instalado em seu cluster. Este pacote fornece um cliente OpenAI com autorização configurada automaticamente para consultar modelos generativos AI . Execute o seguinte comando no seu Notebook ou no seu terminal local:

pip install -U databricks-openai

Os itens a seguir são necessários apenas ao instalar o pacote em um site Databricks Notebook

dbutils.library.restartPython()

O acesso à API REST do Serving está disponível no Databricks Runtime for Machine Learning.

!pip install mlflow

Os itens a seguir são necessários apenas ao instalar o pacote em um site Databricks Notebook

dbutils.library.restartPython()

O Databricks SDK para Python já está instalado em todos os clusters Databricks que usam Databricks Runtime 13.3 LTS ou acima. Para o clustering Databricks que usa Databricks Runtime 12.2 LTS e abaixo, o senhor deve instalar primeiro o Databricks SDK para Python. Consulte Databricks SDK para Python.

Tipos de modelos de fundação

A tabela a seguir resume os modelos básicos compatíveis com base no tipo de tarefa.

Meta-Llama-3.1-405B-Instruct será descontinuado.

- A partir de 15 de fevereiro de 2026, para cargas de trabalho com pagamento por token.

- A partir de 15 de maio de 2026 para provisionamento Taxa de transferência de cargas de trabalho.

Consulte a seção Modelos desativados para obter o modelo de substituição recomendado e orientações sobre como migrar durante o processo de descontinuação.

Tipo de tarefa | Descrição | Modelos compatíveis | Quando usar? Casos de uso recomendados |

|---|---|---|---|

Modelos projetados para entender e participar de conversas naturais em vários turnos. Eles são ajustados com base em um grande conjunto de dados de diálogos humanos, o que lhes permite gerar respostas contextualmente relevantes, acompanhar o histórico da conversa e proporcionar interações coerentes e semelhantes às humanas em vários tópicos. | Os seguintes modelos básicos hospedados pela Databricks são compatíveis:

Os seguintes modelos externos são compatíveis:

| Recomendado para cenários em que é necessário um diálogo natural em várias voltas e uma compreensão contextual:

| |

Os modelos de incorporação são sistemas de aprendizado de máquina que transformam dados complexos, como texto, imagens ou áudio, em vetores numéricos compactos chamados de incorporações. Esses vetores capturam os recursos essenciais e as relações dentro dos dados, permitindo comparações eficientes, clustering e pesquisas semânticas. | Os seguintes modelos básicos hospedados pela Databricks são compatíveis: Os seguintes modelos externos são compatíveis:

| Recomendado para aplicações em que a compreensão semântica, a comparação de semelhanças e a recuperação ou e clustering e eficiente de dados complexos são essenciais:

| |

Modelos projetados para processar, interpretar e analisar dados visuais, como imagens e vídeos, para que as máquinas possam " ver " e entender o mundo visual. | Os seguintes modelos básicos hospedados pela Databricks são compatíveis:

Os seguintes modelos externos são compatíveis:

| Recomendado sempre que for necessária uma análise automatizada, precisa e escalável de informações visuais:

| |

AI Sistemas avançados de Inteligência Artificial projetados para simular o raciocínio lógico humano. Os modelos de raciocínio integram técnicas como lógica simbólica, raciocínio probabilístico e redes neurais para analisar o contexto, decompor tarefas e explicar sua tomada de decisão. | Os seguintes modelos básicos hospedados pela Databricks são compatíveis:

Os seguintes modelos externos são compatíveis:

| Recomendado sempre que for necessária uma análise automatizada, precisa e escalável de informações visuais:

|

Chamada de função

Databricks A Chamada de Função é compatível com OpenAI e só está disponível durante o atendimento ao modelo como parte do Foundation Model APIs e atendendo ao endpoint que atende a modelos externos. Para obter detalhes, consulte Chamada de função em Databricks.

Saídas estruturadas

As saídas estruturadas são compatíveis com o OpenAI e só estão disponíveis durante o servindo modelo como parte do Foundation Model APIs. Para obter detalhes, consulte Saídas estruturadas no Databricks.

cache imediato

O cache de prompts é compatível com modelos Claude hospedados no Databricks como parte das APIs do Foundation Model.

Você pode especificar o parâmetro cache_control em suas solicitações de consulta para armazenar em cache o seguinte:

- Mensagens de conteúdo de texto na matriz

messages.content. - Pensando no conteúdo das mensagens no array

messages.content. - Blocos de conteúdo de imagens na matriz

messages.content. - Uso da ferramenta, resultados e definições na matriz

tools.

Consulte a referência da API REST do modelo Foundation.

- TextContent

- ReasonContent

- ImageContent

- ToolCallContent

{

"messages": [

{

"role": "user",

"content": [

{

"type": "text",

"text": "What's the date today?",

"cache_control": { "type": "ephemeral" }

}

]

}

]

}

{

"messages": [

{

"role": "assistant",

"content": [

{

"type": "reasoning",

"summary": [

{

"type": "summary_text",

"text": "Thinking...",

"signature": "[optional]"

},

{

"type": "summary_encrypted_text",

"data": "[encrypted text]"

}

]

}

]

}

]

}

O conteúdo da mensagem em imagem deve usar os dados codificados como fonte. URLs não são suportadas.

{

"messages": [

{

"role": "user",

"content": [

{

"type": "text",

"text": "What’s in this image?"

},

{

"type": "image_url",

"image_url": {

"url": "data:image/jpeg;base64,[content]"

},

"cache_control": { "type": "ephemeral" }

}

]

}

]

}

{

"messages": [

{

"role": "assistant",

"content": "Ok, let’s get the weather in New York.",

"tool_calls": [

{

"type": "function",

"id": "123",

"function": {

"name": "get_weather",

"arguments": "{\"location\":\"New York, NY\"}"

},

"cache_control": { "type": "ephemeral" }

}

]

}

]

}

A API REST do Databricks é compatível com OpenAI e difere da API Anthropic. Essas diferenças também afetam objetos de resposta como os seguintes:

- O resultado é retornado no campo

choices. - transmissão formato de bloco. Todos os blocos seguem o mesmo formato, onde

choicescontém a respostadeltae o uso é retornado em cada bloco. - O motivo da parada é retornado no campo

finish_reason.- Usos Anthropic :

end_turn,stop_sequence,max_tokens, etool_use - Respectivamente, o Databricks usa:

stop,stop,length, etool_calls

- Usos Anthropic :

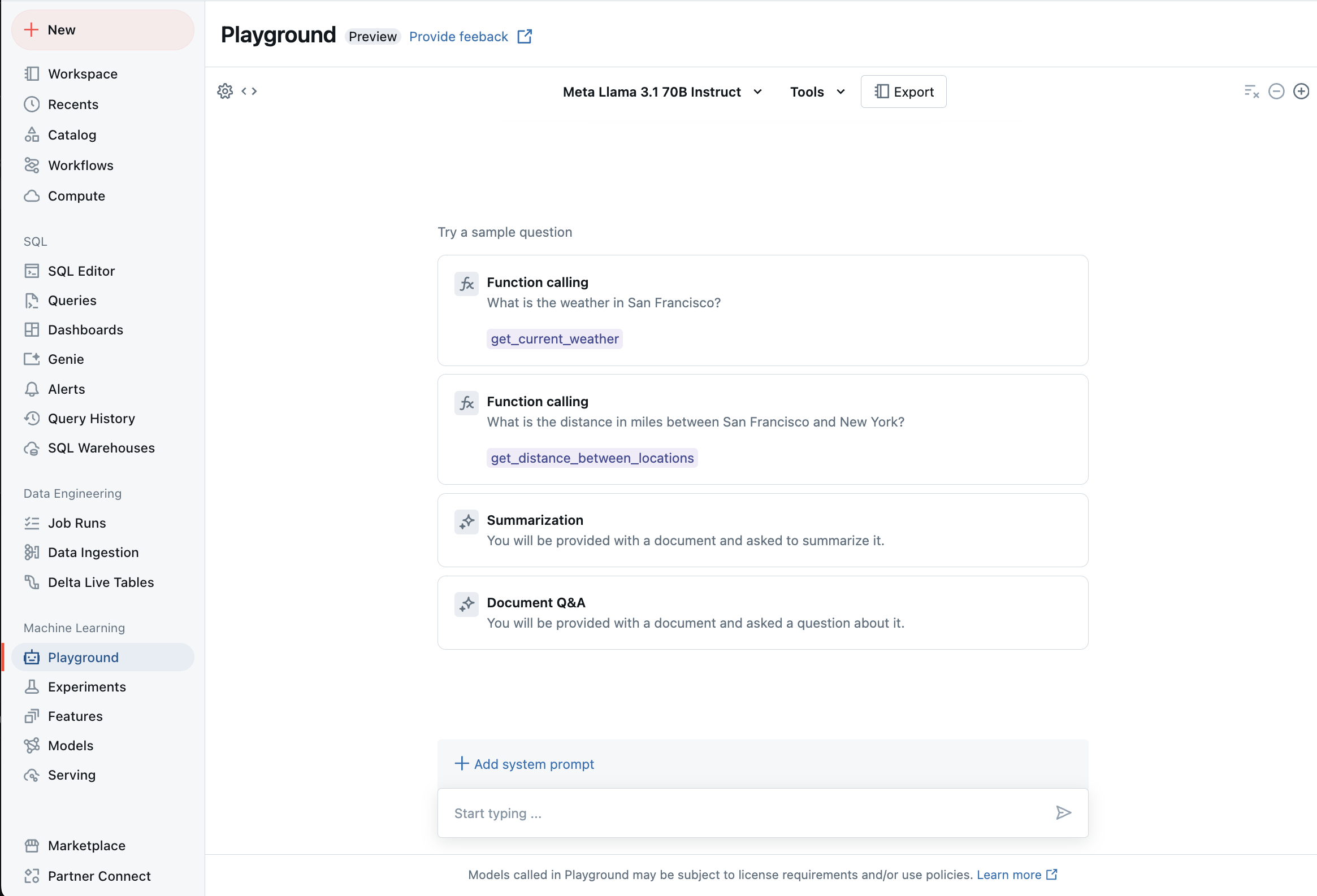

Converse com LLMs apoiados usando o AI Playground

O senhor pode interagir com grandes modelos de linguagem suportados usando o AI Playground. O AI Playground é um ambiente semelhante a um bate-papo em que o senhor pode testar, solicitar e comparar LLMs do seu Databricks workspace.

Recurso adicional

- Monitorar modelos atendidos usando tabelas de inferência habilitadas para AI Gateway

- pipeline de inferência de lotes implantado

- APIs do Foundation Model do Databricks

- Modelos externos no Mosaic AI Model Serving

- Tutorial: Crie pontos de extremidade de modelo externo para consultar modelos do OpenAI

- Modelos básicos hospedados pela Databricks disponíveis nas APIs do Modelo Básico

- Referência da API REST do Foundation Model