Create foundation model serving endpoints

In this article, you learn how to create model serving endpoints that deploy and serve foundation models.

Mosaic AI Model Serving supports the following models:

- External models. These are foundation models that are hosted outside of Databricks. Endpoints that serve external models can be centrally governed, and customers can establish rate limits and access control for them. Examples include foundation models like OpenAI's GPT-4 and Anthropic's Claude.

- State-of-the-art open foundation models made available by Foundation Model APIs. These models are curated foundation model architectures that support optimized inference. Base models, like Meta-Llama-3.1-70B-Instruct, GTE-Large, and Mistral-7B, are available for immediate use with pay-per-token pricing. Production workloads, using base or fine-tuned models, can be deployed with performance guarantees using provisioned throughput.

Model Serving provides the following options for model serving endpoint creation:

- The Serving UI

- REST API

- MLflow Deployments SDK

For creating endpoints that serve traditional ML or Python models, see Create custom model serving endpoints.

Requirements

- A Databricks workspace in a supported region.

- To create endpoints using the MLflow Deployments SDK, you must have the mlflow package installed, which provides the MLflow Deployments client. To instantiate the client, run:

    import mlflow.deployments

    client = mlflow.deployments.get_deploy_client("databricks")

Create a foundation model serving endpoint

You can create an endpoint that serves fine-tuned variants of foundation models made available using Foundation Model APIs provisioned throughput. See Create your provisioned throughput endpoint using the REST API.

For foundation models that are made available using Foundation Model APIs pay-per-token, Databricks automatically provides specific endpoints to access the supported models in your Databricks workspace. To access them, select the Serving tab in the left sidebar of the workspace. The Foundation Model APIs are located at the top of the Endpoints list view.

For querying these endpoints, see Use foundation models.
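As a rough illustration, the pay-per-token endpoints can also be picked out programmatically from the list of serving endpoints in a workspace. The sketch below assumes that these preprovisioned endpoints have names beginning with `databricks-` (a naming convention that this article does not state) and that each entry returned by the client is dict-like with a `name` key. `pay_per_token_endpoints` is a hypothetical helper, not part of any SDK.

```python
from typing import Iterable, List, Mapping

# Assumption: preprovisioned pay-per-token endpoints share this name prefix.
PAY_PER_TOKEN_PREFIX = "databricks-"


def pay_per_token_endpoints(endpoints: Iterable[Mapping]) -> List[str]:
    """Return the sorted names of endpoints whose name starts with the assumed prefix."""
    return sorted(
        ep["name"]
        for ep in endpoints
        if ep.get("name", "").startswith(PAY_PER_TOKEN_PREFIX)
    )


# Against a live workspace (requires mlflow and Databricks credentials):
#
#   from mlflow.deployments import get_deploy_client
#   client = get_deploy_client("databricks")
#   print(pay_per_token_endpoints(client.list_endpoints()))
```

Filtering on the name keeps the helper independent of the response schema beyond the `name` field, at the cost of relying on the assumed prefix.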

Create an external model serving endpoint

The following describes how to create an endpoint that queries a foundation model made available using Databricks external models.

- Serving UI

- REST API

- MLflow Deployments SDK

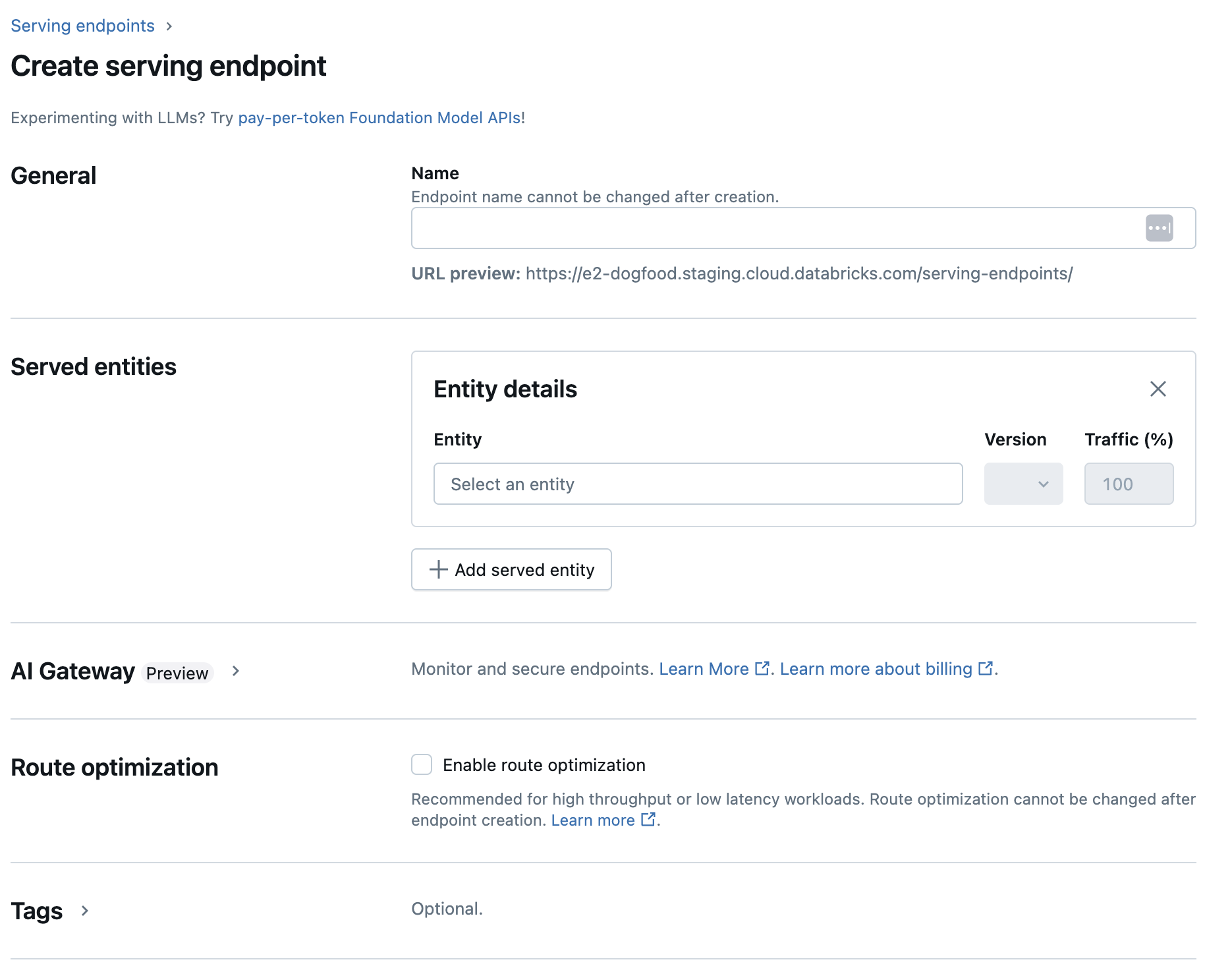

- In the Name field provide a name for your endpoint.

- In the Served entities section

- Click into the Entity field to open the Select served entity form.

- Select Foundation models.

- In the Select a foundation model field, select the model provider you want to use from those listed under External model providers. The form dynamically updates based on your model provider selection.

- Click Confirm.

- Provide the configuration details for accessing the selected model provider. This is typically the secret that references the personal access token you want the endpoint to use to access this model.

- Select the task. Available tasks are chat, completion, and embeddings.

- Select the name of the external model you want to use. The list of models dynamically updates based on your task selection. See the available external models.

- Click Create. The Serving endpoints page appears with Serving endpoint state shown as Not Ready.

REST API

The REST API parameters for creating serving endpoints that serve external models are in Public Preview.

The following example creates an endpoint that serves the first version of the text-embedding-ada-002 model provided by OpenAI.

See POST /api/2.0/serving-endpoints for endpoint configuration parameters.

    {
      "name": "openai_endpoint",
      "config": {
        "served_entities": [
          {
            "name": "openai_embeddings",
            "external_model": {
              "name": "text-embedding-ada-002",
              "provider": "openai",
              "task": "llm/v1/embeddings",
              "openai_config": {
                "openai_api_key": "{{secrets/my_scope/my_openai_api_key}}"
              }
            }
          }
        ]
      },
      "rate_limits": [
        {
          "calls": 100,
          "key": "user",
          "renewal_period": "minute"
        }
      ],
      "tags": [
        {
          "key": "team",
          "value": "gen-ai"
        }
      ]
    }
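One way to send this request body from Python is sketched below using only the standard library. It assumes the workspace URL and a personal access token are available in the `DATABRICKS_HOST` and `DATABRICKS_TOKEN` environment variables and that the API accepts bearer-token authentication. `build_create_request` and `create_endpoint` are hypothetical helper names, and the sketch is not the only or official way to call the API.

```python
import json
import os
import urllib.request


def build_create_request(host: str, token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request that creates a serving endpoint."""
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/serving-endpoints",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def create_endpoint(payload: dict) -> dict:
    """Send the request; host and token are read from assumed environment variables."""
    request = build_create_request(
        os.environ["DATABRICKS_HOST"], os.environ["DATABRICKS_TOKEN"], payload
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Same request body as the JSON example above.
payload = {
    "name": "openai_endpoint",
    "config": {
        "served_entities": [
            {
                "name": "openai_embeddings",
                "external_model": {
                    "name": "text-embedding-ada-002",
                    "provider": "openai",
                    "task": "llm/v1/embeddings",
                    "openai_config": {
                        "openai_api_key": "{{secrets/my_scope/my_openai_api_key}}"
                    },
                },
            }
        ]
    },
    "rate_limits": [{"calls": 100, "key": "user", "renewal_period": "minute"}],
    "tags": [{"key": "team", "value": "gen-ai"}],
}
```

Calling `create_endpoint(payload)` would then return the parsed JSON response. Building the request separately from sending it makes it possible to inspect the URL, headers, and body without network access.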

The following is an example response.

    {
      "name": "openai_endpoint",
      "creator": "user@email.com",
      "creation_timestamp": 1699617587000,
      "last_updated_timestamp": 1699617587000,
      "state": {
        "ready": "READY"
      },
      "config": {
        "served_entities": [
          {
            "name": "openai_embeddings",
            "external_model": {
              "provider": "openai",
              "name": "text-embedding-ada-002",
              "task": "llm/v1/embeddings",
              "openai_config": {
                "openai_api_key": "{{secrets/my_scope/my_openai_api_key}}"
              }
            },
            "state": {
              "deployment": "DEPLOYMENT_READY",
              "deployment_state_message": ""
            },
            "creator": "user@email.com",
            "creation_timestamp": 1699617587000
          }
        ],
        "traffic_config": {
          "routes": [
            {
              "served_model_name": "openai_embeddings",
              "traffic_percentage": 100
            }
          ]
        },
        "config_version": 1
      },
      "tags": [
        {
          "key": "team",
          "value": "gen-ai"
        }
      ],
      "id": "69962db6b9db47c4a8a222d2ac79d7f8",
      "permission_level": "CAN_MANAGE",
      "route_optimized": false
    }
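A response like the one above can be checked for readiness before traffic is sent to the endpoint. The following is a minimal sketch that relies only on the `state.ready` and per-entity `state.deployment` fields visible in the example response. `is_endpoint_ready` is a hypothetical helper, and treating any value other than `READY` or `DEPLOYMENT_READY` as "not ready" is an assumption.

```python
def is_endpoint_ready(endpoint: dict) -> bool:
    """Return True when the endpoint and every served entity report a ready state."""
    if endpoint.get("state", {}).get("ready") != "READY":
        return False
    entities = endpoint.get("config", {}).get("served_entities", [])
    # Every served entity must report a completed deployment.
    return all(
        entity.get("state", {}).get("deployment") == "DEPLOYMENT_READY"
        for entity in entities
    )
```

Using `.get` with defaults means that a response missing any of these fields is reported as not ready rather than raising an exception.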

MLflow Deployments SDK

The following creates an endpoint for chat with OpenAI gpt-4.

For external model endpoints, you must provide API keys for the model provider you want to use. See POST /api/2.0/serving-endpoints in the REST API for request and response schema details. For a step-by-step guide, see Tutorial: Create external model endpoints to query OpenAI models.

You can also create endpoints for completions and embeddings tasks, as specified by the task field in the external_model section of the configuration. See External models in Mosaic AI Model Serving for supported models and providers for each task.

    from mlflow.deployments import get_deploy_client

    client = get_deploy_client("databricks")

    # Create an endpoint named "chat" that forwards requests to OpenAI's gpt-4.
    endpoint = client.create_endpoint(
        name="chat",
        config={
            "served_entities": [
                {
                    "name": "completions",
                    "external_model": {
                        "name": "gpt-4",
                        "provider": "openai",
                        "task": "llm/v1/chat",
                        "openai_config": {
                            # Reference a Databricks secret rather than a raw API key.
                            "openai_api_key": "{{secrets/scope/key}}",
                        },
                    },
                }
            ],
        },
    )

    # The response is a dict-like description of the endpoint, for example:
    # {
    #     "name": "chat",
    #     "creator": "alice@company.com",
    #     "creation_timestamp": 0,
    #     "last_updated_timestamp": 0,
    #     "state": {...},
    #     "config": {...},
    #     "tags": [...],
    #     "id": "88fd3f75a0d24b0380ddc40484d7a31b",
    # }
    assert endpoint["name"] == "chat"
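Because the task is selected through the `task` field, the served entity for a different task can be produced by the same small builder. The sketch below is illustrative only: `openai_served_entity` and `secret_ref` are hypothetical helpers, and the `llm/v1/completions` identifier is assumed to follow the same pattern as the `llm/v1/chat` and `llm/v1/embeddings` values shown in this article.

```python
# Task identifiers; "llm/v1/completions" is an assumption based on the other two.
TASKS = {
    "chat": "llm/v1/chat",
    "completions": "llm/v1/completions",
    "embeddings": "llm/v1/embeddings",
}


def secret_ref(scope: str, key: str) -> str:
    """Format a secret reference in the {{secrets/<scope>/<key>}} style used above."""
    return "{{secrets/" + scope + "/" + key + "}}"


def openai_served_entity(entity_name: str, model: str, task: str, scope: str, key: str) -> dict:
    """Build one served entity that points at an OpenAI external model."""
    if task not in TASKS:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASKS)}")
    return {
        "name": entity_name,
        "external_model": {
            "name": model,
            "provider": "openai",
            "task": TASKS[task],
            "openai_config": {"openai_api_key": secret_ref(scope, key)},
        },
    }


# Config for an embeddings endpoint, ready to pass as `config=` to create_endpoint.
embeddings_config = {
    "served_entities": [
        openai_served_entity(
            "openai_embeddings", "text-embedding-ada-002", "embeddings",
            "my_scope", "my_openai_api_key",
        )
    ]
}
```

Rejecting unknown task names early surfaces typos before any request is sent to the workspace.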

Update model serving endpoints

After enabling a model endpoint, you can set the compute configuration as desired. This configuration is particularly helpful if you need additional resources for your model. Workload size and compute configuration play a key role in what resources are allocated for serving your model.

Until the new configuration is ready, the old configuration keeps serving prediction traffic. While an update is in progress, another update cannot be made. In the Serving UI, you can cancel an in-progress configuration update by selecting Cancel update on the top right of the endpoint's details page. This functionality is only available in the Serving UI.

When an external_model is present in an endpoint configuration, the served entities list can only have one served_entity object. Existing endpoints with an external_model cannot be updated to no longer have an external_model. If the endpoint is created without an external_model, you cannot update it to add an external_model.
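The external_model constraints above can be checked locally before an update is submitted. The following is a minimal sketch of such a pre-flight check; `check_external_model_rules` is a hypothetical helper and only encodes the three rules stated in the preceding paragraph, not any other validation the service performs.

```python
from typing import List, Mapping, Optional, Sequence


def has_external_model(served_entities: Sequence[Mapping]) -> bool:
    """Return True if any served entity declares an external_model."""
    return any("external_model" in entity for entity in served_entities)


def check_external_model_rules(
    new_entities: Sequence[Mapping],
    current_entities: Optional[Sequence[Mapping]] = None,
) -> List[str]:
    """Return a list of rule violations; an empty list means the config passes."""
    problems = []
    new_has = has_external_model(new_entities)
    # Rule 1: an external_model endpoint has exactly one served entity.
    if new_has and len(new_entities) != 1:
        problems.append("an endpoint with an external_model can have only one served entity")
    if current_entities is not None:
        current_has = has_external_model(current_entities)
        # Rule 2: an external_model cannot be removed by an update.
        if current_has and not new_has:
            problems.append("an external_model endpoint cannot be updated to drop its external_model")
        # Rule 3: an external_model cannot be added by an update.
        if new_has and not current_has:
            problems.append("an endpoint created without an external_model cannot add one")
    return problems
```

Passing `current_entities=None` checks a configuration for a new endpoint, while passing the existing served entities also checks the two update rules.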

- REST API

- MLflow Deployments SDK

REST API

To update your endpoint, see the REST API update configuration documentation for request and response schema details.

    {
      "name": "openai_endpoint",
      "served_entities": [
        {
          "name": "openai_chat",
          "external_model": {
            "name": "gpt-4",
            "provider": "openai",
            "task": "llm/v1/chat",
            "openai_config": {
              "openai_api_key": "{{secrets/my_scope/my_openai_api_key}}"
            }
          }
        }
      ]
    }

MLflow Deployments SDK

To update your endpoint, see the REST API update configuration documentation for request and response schema details.

    from mlflow.deployments import get_deploy_client

    client = get_deploy_client("databricks")

    # Replace the served entity of the existing "chat" endpoint.
    endpoint = client.update_endpoint(
        endpoint="chat",
        config={
            "served_entities": [
                {
                    "name": "chats",
                    "external_model": {
                        "name": "gpt-4",
                        "provider": "openai",
                        "task": "llm/v1/chat",
                        "openai_config": {
                            "openai_api_key": "{{secrets/scope/key}}",
                        },
                    },
                }
            ],
        },
    )

    # The response describes the updated endpoint, for example:
    # {
    #     "name": "chat",
    #     "creator": "alice@company.com",
    #     "creation_timestamp": 0,
    #     "last_updated_timestamp": 0,
    #     "state": {...},
    #     "config": {...},
    #     "tags": [...],
    #     "id": "88fd3f75a0d24b0380ddc40484d7a31b",
    # }
    assert endpoint["name"] == "chat"

    # Update the rate limits of the same endpoint.
    rate_limits = client.update_endpoint(
        endpoint="chat",
        config={
            "rate_limits": [
                {
                    "key": "user",
                    "renewal_period": "minute",
                    "calls": 10,
                }
            ],
        },
    )

    assert rate_limits == {
        "rate_limits": [
            {
                "key": "user",
                "renewal_period": "minute",
                "calls": 10,
            }
        ],
    }